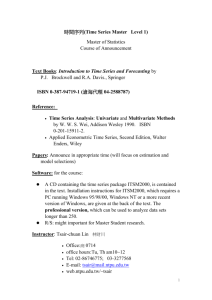

Lecture 2 - WordPress.com

advertisement

Lecture 2

ARMA models

2012 International Finance

CYCU


White noise?

• About color?

• About sounds?

• Remember the statistical definition!


White noise

• Def: $\{\varepsilon_t\}$ is a white-noise process if every value in the series has:

– zero mean

– constant variance

– no autocorrelation

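• In the statistical sense:

– $E(\varepsilon_t) = 0$, for all $t$

– $\operatorname{var}(\varepsilon_t) = \sigma^2$, for all $t$

– $\operatorname{cov}(\varepsilon_t, \varepsilon_{t-k}) = \operatorname{cov}(\varepsilon_{t-j}, \varepsilon_{t-k-j}) = 0$, for all $j$ and all $k \neq 0$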


White noise

• White noise is often assumed to be iid: $\varepsilon_t \sim \text{iid}(0, \sigma^2)$

iid: independently and identically distributed

• White noise is the purely statistical “random” component of a time series
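The following is a minimal sketch, using only the Python standard library, of what the three conditions look like in a sample. It assumes Gaussian draws, which are one particular iid case. `white_noise` and `sample_autocorr` are hypothetical helper names, and the seed and sample size are arbitrary choices.

```python
import random
import statistics

def white_noise(n, sigma=1.0, seed=0):
    """Draw n iid Gaussian values with mean 0 and standard deviation sigma."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in range(n)]

def sample_autocorr(x, k):
    """Sample autocorrelation of x at lag k (k >= 1)."""
    m = statistics.fmean(x)
    num = sum((x[t] - m) * (x[t - k] - m) for t in range(k, len(x)))
    den = sum((v - m) ** 2 for v in x)
    return num / den

eps = white_noise(10_000, sigma=2.0)
print("sample mean:", statistics.fmean(eps))           # should be near 0
print("sample variance:", statistics.pvariance(eps))   # should be near sigma^2 = 4
for k in (1, 2, 3):
    print(f"autocorrelation at lag {k}:", sample_autocorr(eps, k))  # near 0
```

In a finite sample none of the three moments is exactly 0 or $\sigma^2$. They are only close, and they get closer as the sample grows.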


The AR(1) model (with w.n.)

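• $y_t = a_0 + a_1 y_{t-1} + \varepsilon_t$

• Solution by iteration

– $y_t = a_0 + a_1 y_{t-1} + \varepsilon_t$

– $y_{t-1} = a_0 + a_1 y_{t-2} + \varepsilon_{t-1}$

– $y_{t-2} = a_0 + a_1 y_{t-3} + \varepsilon_{t-2}$

– ⋮

– $y_1 = a_0 + a_1 y_0 + \varepsilon_1$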
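Here is a worked example of the iteration. Substituting the equation for $y_{t-1}$ into the first line, and then the one for $y_{t-2}$, gives:

$$
\begin{aligned}
y_t &= a_0 + a_1\left(a_0 + a_1 y_{t-2} + \varepsilon_{t-1}\right) + \varepsilon_t \\
    &= a_0(1 + a_1) + a_1^2 y_{t-2} + \varepsilon_t + a_1 \varepsilon_{t-1} \\
    &= a_0(1 + a_1 + a_1^2) + a_1^3 y_{t-3} + \varepsilon_t + a_1 \varepsilon_{t-1} + a_1^2 \varepsilon_{t-2}
\end{aligned}
$$

Repeating the substitution down to $y_1 = a_0 + a_1 y_0 + \varepsilon_1$ produces three pieces:

– $t$ powers of $a_1$ multiplying $a_0$
– a factor $a_1^t$ on $y_0$
– one term $a_1^i \varepsilon_{t-i}$ for each $i = 0, \dots, t-1$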


General form of AR(1)

$y_t = a_0 \sum_{i=0}^{t-1} a_1^i + a_1^t y_0 + \sum_{i=0}^{t-1} a_1^i \varepsilon_{t-i}$

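• Taking $E(\cdot)$ of both sides of the equation:

$E(y_t) = E\left(a_0 \sum_{i=0}^{t-1} a_1^i\right) + E\left(a_1^t y_0\right) + E\left(\sum_{i=0}^{t-1} a_1^i \varepsilon_{t-i}\right)$

• Treating $y_0$ as given and using $E(\varepsilon_{t-i}) = 0$, the last term vanishes:

$E(y_t) = a_0 \sum_{i=0}^{t-1} a_1^i + a_1^t y_0$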
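The general form can be checked numerically. Iterating the recursion and evaluating the closed-form sum should give the same $y_t$ for the same shocks, and dropping the shock term gives $E(y_t)$. The following is a minimal Python sketch. The function names and the shock values are illustrative assumptions.

```python
def ar1_recursive(a0, a1, y0, eps):
    """Iterate y_t = a0 + a1*y_{t-1} + eps_t; eps[0] is eps_1. Returns [y_1, ..., y_T]."""
    ys, y = [], y0
    for e in eps:
        y = a0 + a1 * y + e
        ys.append(y)
    return ys

def ar1_closed_form(a0, a1, y0, eps, t):
    """y_t = a0*sum_{i=0}^{t-1} a1^i + a1^t*y0 + sum_{i=0}^{t-1} a1^i*eps_{t-i}."""
    geometric = sum(a1 ** i for i in range(t))
    shocks = sum(a1 ** i * eps[t - i - 1] for i in range(t))  # eps_{t-i} is eps[t-i-1]
    return a0 * geometric + a1 ** t * y0 + shocks

def ar1_mean(a0, a1, y0, t):
    """E(y_t) given y0: the shock term drops out because E(eps) = 0."""
    return a0 * sum(a1 ** i for i in range(t)) + a1 ** t * y0

eps = [0.5, -1.0, 0.25, 2.0, -0.75]   # hypothetical shocks eps_1, ..., eps_5
path = ar1_recursive(a0=1.0, a1=0.6, y0=2.0, eps=eps)
for t in range(1, len(eps) + 1):
    # the iterated value and the general form agree up to rounding error
    assert abs(path[t - 1] - ar1_closed_form(1.0, 0.6, 2.0, eps, t)) < 1e-9
print(path[-1], ar1_mean(1.0, 0.6, 2.0, 5))
```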


Compare AR(1) models

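• Math. AR(1) (the deterministic difference equation, with no $\varepsilon_t$)

$y_t = a_0 \dfrac{1 - a_1^t}{1 - a_1} + a_1^t y_0$

• “true” AR(1) in time series

$E(y_t) = a_0 \dfrac{1 - a_1^t}{1 - a_1} + a_1^t y_0$

• Both use $\sum_{i=0}^{t-1} a_1^i = \dfrac{1 - a_1^t}{1 - a_1}$ (for $a_1 \neq 1$). The stochastic AR(1) follows the deterministic path only in expectation.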
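To see that the stochastic AR(1) follows the deterministic path only on average, the sketch below averages many simulated paths and compares the result with the formula. The Gaussian shocks, the parameter values, and the helper names are assumptions made for illustration.

```python
import random
import statistics

def deterministic_path(a0, a1, y0, t):
    """Math. AR(1): y_t = a0*(1 - a1^t)/(1 - a1) + a1^t*y0, valid for a1 != 1."""
    return a0 * (1 - a1 ** t) / (1 - a1) + a1 ** t * y0

def simulated_mean(a0, a1, y0, t, n_paths=20_000, sigma=1.0, seed=1):
    """Average y_t over many simulated AR(1) paths driven by Gaussian white noise."""
    rng = random.Random(seed)
    finals = []
    for _ in range(n_paths):
        y = y0
        for _ in range(t):
            y = a0 + a1 * y + rng.gauss(0.0, sigma)
        finals.append(y)
    return statistics.fmean(finals)

for t in (1, 5, 10):
    print(t, deterministic_path(1.0, 0.5, 4.0, t), simulated_mean(1.0, 0.5, 4.0, t))
```

Any single simulated path wanders around the deterministic curve. Only the average across paths tracks it.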


Infinite population {yt}

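• If $\{y_t\}$ is an infinite DGP (data-generating process), $E(y_t)$ implies

$\lim_{t \to \infty} E(y_t) = \dfrac{a_0}{1 - a_1}$ (note: a constant)

• Why? If $|a_1| < 1$, then $a_1^t \to 0$ as $t \to \infty$ in

$E(y_t) = a_0 \dfrac{1 - a_1^t}{1 - a_1} + a_1^t y_0$

so the $a_1^t$ terms vanish, and with them the effect of $y_0$.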
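This is a quick numerical sketch of the limit. With $|a_1| < 1$ the expected value settles at $a_0/(1-a_1)$, while with $|a_1| > 1$ it explodes. The parameter values are arbitrary, and `expected_y` is a hypothetical helper.

```python
def expected_y(a0, a1, y0, t):
    """E(y_t) for the AR(1) with y0 given (requires a1 != 1)."""
    return a0 * (1 - a1 ** t) / (1 - a1) + a1 ** t * y0

a0, y0 = 2.0, 0.0
for a1 in (0.8, -0.8, 1.2):
    path = [round(expected_y(a0, a1, y0, t), 4) for t in (1, 10, 50, 100)]
    print(f"a1 = {a1:+.1f}: E(y_t) at t = 1, 10, 50, 100 -> {path}")
print("a0 / (1 - a1) for a1 = 0.8:", a0 / (1 - 0.8))
print("a0 / (1 - a1) for a1 = -0.8:", a0 / (1 + 0.8))
```

With $a_1 = -0.8$ the expected value oscillates around the limit before settling. With $a_1 = 1.2$ no finite limit exists.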


Stationarity in TS

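• In strong (strict) form

– $f(y \mid t)$ is the distribution function of $y$ at time $t$

– $f(\cdot)$ is strongly stationary if $f(y \mid t) = f(y \mid t-j)$ for all $j$. More precisely, the joint distribution of any set of observations is unchanged by a shift in time.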

• In weak form

– constant mean

– constant variance

– constant autocorrelation


Weak Stationarity in TS

Also called “Covariance-stationarity”

• Three key features

– constant mean

– constant variance

– constant autocorrelation

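• In the statistical sense: if $\{y_t\}$ is weakly stationary,

– $E(y_t) = \mu$ (a constant), for all $t$

– $\operatorname{var}(y_t) = \sigma_y^2$ (a constant), for all $t$

– $\operatorname{cov}(y_t, y_{t-k}) = \operatorname{cov}(y_{t-j}, y_{t-k-j}) = \gamma_k$ (a constant that depends only on the lag $k$), for all $j$ and $k$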
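Weak stationarity can be illustrated by simulation. For a stationary AR(1), the sample autocovariances should be roughly the same in the first and second halves of a long series. They should also be close to the known theoretical values $\gamma_k = a_1^k \sigma^2 / (1 - a_1^2)$. This sketch assumes Gaussian shocks, $|a_1| < 1$, and a burn-in period that removes the effect of the starting value. All names are hypothetical.

```python
import random
import statistics

def simulate_ar1(a0, a1, n, sigma=1.0, burn_in=500, seed=2):
    """Simulate a stationary AR(1); the burn-in discards the influence of the start."""
    rng = random.Random(seed)
    y, out = a0 / (1 - a1), []
    for t in range(n + burn_in):
        y = a0 + a1 * y + rng.gauss(0.0, sigma)
        if t >= burn_in:
            out.append(y)
    return out

def sample_autocov(x, k, start, stop):
    """Sample autocovariance at lag k using only x[start:stop]."""
    seg = x[start:stop]
    m = statistics.fmean(seg)
    return sum((seg[t] - m) * (seg[t - k] - m) for t in range(k, len(seg))) / len(seg)

a0, a1, sigma = 1.0, 0.5, 1.0
y = simulate_ar1(a0, a1, 40_000, sigma)
gamma0 = sigma ** 2 / (1 - a1 ** 2)   # theoretical variance of the stationary AR(1)
half = len(y) // 2
print("sample mean:", statistics.fmean(y), "theory:", a0 / (1 - a1))
for k in (0, 1, 2):
    first = sample_autocov(y, k, 0, half)
    second = sample_autocov(y, k, half, len(y))
    print(k, round(first, 3), round(second, 3), round(a1 ** k * gamma0, 3))
```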


AR(p) models

$y_t = a_0 + \sum_{i=1}^{p} a_i y_{t-i} + \varepsilon_t$

– where $\varepsilon_t \sim$ w.n.

• For example: AR(2)

– $y_t = a_0 + a_1 y_{t-1} + a_2 y_{t-2} + \varepsilon_t$

• Ex. Please write down the AR(5) model
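Below is a minimal AR(p) recursion in Python, written directly from the general form. The function name and argument layout are assumptions for illustration: the coefficients are passed as a list $[a_1, \dots, a_p]$, and the $p$ starting values are given oldest first.

```python
def simulate_ar(a0, coeffs, init, eps):
    """AR(p): y_t = a0 + sum_{i=1}^p a_i*y_{t-i} + eps_t.

    coeffs = [a_1, ..., a_p]; init = [y_{1-p}, ..., y_0] (oldest first);
    eps = [eps_1, ..., eps_T]. Returns [y_1, ..., y_T].
    """
    if len(init) != len(coeffs):
        raise ValueError("need exactly p starting values")
    history = list(init)
    out = []
    for e in eps:
        # history[-i] is y_{t-i}
        y = a0 + sum(a * history[-i] for i, a in enumerate(coeffs, start=1)) + e
        history.append(y)
        out.append(y)
    return out

# AR(2) with hypothetical coefficients, starting values, and shocks
print(simulate_ar(a0=0.5, coeffs=[0.6, 0.2], init=[1.0, 2.0], eps=[0.1, -0.3, 0.0]))
```

Passing five coefficients and five starting values gives the AR(5) model from the exercise.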


The AR(5) model

• $y_t = a_0 + a_1 y_{t-1} + a_2 y_{t-2} + a_3 y_{t-3} + a_4 y_{t-4} + a_5 y_{t-5} + \varepsilon_t$


Stationarity Restrictions for ARMA(p,q)

• Enders, pp. 60-61.

• Sufficient condition

$\sum_{i=1}^{p} |a_i| < 1$

• Necessary condition

$\sum_{i=1}^{p} a_i < 1$
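Both conditions are simple sums over the AR coefficients, so they are easy to check in code. When the sufficient condition fails but the necessary one holds, these two checks alone are inconclusive. The following is a sketch with hypothetical helper names and example coefficients.

```python
def sufficient_condition(coeffs):
    """Sufficient for stationarity: sum of |a_i| < 1."""
    return sum(abs(a) for a in coeffs) < 1

def necessary_condition(coeffs):
    """Necessary for stationarity: sum of a_i < 1."""
    return sum(coeffs) < 1

for coeffs in ([0.5, 0.3], [1.2, -0.5], [0.7, 0.4]):
    print(coeffs, "sufficient:", sufficient_condition(coeffs),
          "necessary:", necessary_condition(coeffs))
```

The three cases lead to different conclusions:

– The first passes both checks, so the model is stationary.
– The second ($a_1 = 1.2$, $a_2 = -0.5$) is the inconclusive case, and the characteristic roots of the model must be examined to decide it.
– The third fails the necessary condition, so it cannot be stationary.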


MA(q) models

• MA: moving average

– the general form

$y_t = a_0 + \varepsilon_t + \sum_{i=1}^{q} b_i \varepsilon_{t-i}$

– where $\varepsilon_t \sim$ w.n.
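The sketch below is an MA(q) recursion in Python that follows the general form. The pre-sample shocks $\varepsilon_{1-q}, \dots, \varepsilon_0$ must be supplied. The names and argument layout are illustrative assumptions. With a single unit shock, the output shows that the shock affects $y$ for exactly $q + 1$ periods.

```python
def simulate_ma(a0, coeffs, eps_past, eps):
    """MA(q): y_t = a0 + eps_t + sum_{i=1}^q b_i*eps_{t-i}.

    coeffs = [b_1, ..., b_q]; eps_past = [eps_{1-q}, ..., eps_0] (oldest first);
    eps = [eps_1, ..., eps_T]. Returns [y_1, ..., y_T].
    """
    if len(eps_past) != len(coeffs):
        raise ValueError("need exactly q pre-sample shocks")
    shocks = list(eps_past)
    out = []
    for e in eps:
        shocks.append(e)
        # shocks[-1] is eps_t and shocks[-1 - i] is eps_{t-i}
        out.append(a0 + e + sum(b * shocks[-1 - i] for i, b in enumerate(coeffs, start=1)))
    return out

# MA(1) with a0 = 1, b1 = 0.5 and a single unit shock at t = 1
print(simulate_ma(1.0, [0.5], [0.0], [1.0, 0.0, 0.0]))  # [2.0, 1.5, 1.0]
```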


MA(q) models

• MA(1)

$y_t = a_0 + \varepsilon_t + b_1 \varepsilon_{t-1}$

• Ex. Write down the MA(2) model...


The MA(2) model

• Make sure you can write down MA(2) as...

$y_t = a_0 + \varepsilon_t + b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2}$

• Ex. Write down the MA(5) model...


The MA(5) model

• $y_t = a_0 + \varepsilon_t + b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + b_3 \varepsilon_{t-3} + b_4 \varepsilon_{t-4} + b_5 \varepsilon_{t-5}$


ARMA(p,q) models

• ARMA = AR + MA, i.e.

– general form

$y_t = a_0 + \sum_{i=1}^{p} a_i y_{t-i} + \varepsilon_t + \sum_{i=1}^{q} b_i \varepsilon_{t-i}$

• For example:

– ARMA(1,1) = AR(1) + MA(1)

$y_t = a_0 + a_1 y_{t-1} + \varepsilon_t + b_1 \varepsilon_{t-1}$
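Combining the AR and MA recursions gives a sketch of the ARMA(p,q) general form. Passing an empty `ma` list reduces it to AR(p), and an empty `ar` list reduces it to MA(q). The function name, argument order, and example coefficients are hypothetical.

```python
def simulate_arma(a0, ar, ma, y_init, eps_past, eps):
    """ARMA(p,q): y_t = a0 + sum a_i*y_{t-i} + eps_t + sum b_i*eps_{t-i}.

    ar = [a_1, ..., a_p], ma = [b_1, ..., b_q]; y_init holds p starting values
    and eps_past holds q pre-sample shocks (both oldest first).
    """
    if len(y_init) != len(ar) or len(eps_past) != len(ma):
        raise ValueError("starting values must match the model orders")
    ys, shocks, out = list(y_init), list(eps_past), []
    for e in eps:
        shocks.append(e)
        ar_part = sum(a * ys[-i] for i, a in enumerate(ar, start=1))            # a_i * y_{t-i}
        ma_part = sum(b * shocks[-1 - i] for i, b in enumerate(ma, start=1))    # b_i * eps_{t-i}
        y = a0 + ar_part + e + ma_part
        ys.append(y)
        out.append(y)
    return out

# ARMA(1,1) response to one unit shock at t = 1
print(simulate_arma(0.0, [0.5], [0.4], [0.0], [0.0], [1.0, 0.0, 0.0, 0.0]))
# ARMA(1,2) with hypothetical coefficients a1 = 0.5, b1 = 0.3, b2 = 0.2
print(simulate_arma(1.0, [0.5], [0.3, 0.2], [2.0], [0.0, 0.0], [1.0, 0.0, 0.0]))
```

Unlike the pure MA case, the ARMA(1,1) response never dies out completely. After the MA part ends, it decays geometrically at rate $a_1$.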


Ex. ARMA(1,2) & ARMA(1,2)

• ARMA(1,2)

$y_t = a_0 + a_1 y_{t-1} + \varepsilon_t + b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2}$

• Please write down: ARMA(1,2)!

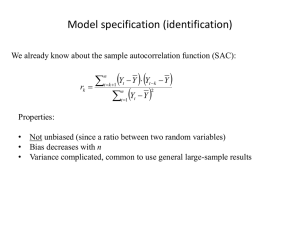

19