Big Data in Genomics and Cancer Treatment

Tanya Maslyanko

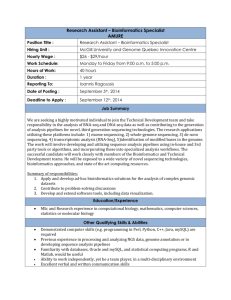

advertisement

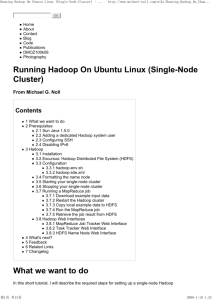

Big data. These are two words the world has been hearing a lot lately, in relation to a wide array of use cases: social media, government regulation, auto insurance, retail targeting, and more. The list goes on. However, a very important application that deserves the same (if not more) recognition is the role of big data in human genome research. Three billion base pairs make up the DNA present in humans. It’s probably safe to say that such a massive amount of data should be organized in a useful way, especially if it presents the possibility of eliminating cancer.

Cancer treatment dates back to the first documented cases in Egypt (around 1500 BC), when humans began distinguishing between malignant and benign tumors and learning how to remove them surgically. It is intriguing and scientifically helpful to look at how far the world’s knowledge of cancer has progressed since then, and at the role that big data (and its management and analysis) plays in the search for a cure.

The most concerning issue with cancer, and the ultimate reason it still hasn’t been completely cured, is that it mutates differently in every individual and interacts in unexpected ways with each person’s genetic makeup. Professionals and researchers in the field of oncology have to accept that each patient requires personalized treatment and medication to manage the specific type of cancer that they have. Elaine Mardis, PhD, co-director of the Genome Institute at the Washington University School of Medicine, believes that it is essential to identify the mutations at the root of each tumor and to map their genetic evolution in order to make progress in the battle against cancer.
“Genome analysis can play a role at multiple time points during a patient’s treatment, to identify ‘driver’ mutations in the tumor genome and to determine whether cells carrying those mutations have been eliminated by treatment.”

However, the sheer amount of data that comes with the analysis of human genetics requires a more stable structure and organization, so that researchers and scientists can make sense of it and relate it to the medical care a patient needs. Many companies have recently been building their own collections of genomic data, along with tools to sort and analyze them. This is a significant step forward, but to bring this data to its full potential, companies could benefit from using Apache Hadoop as a data platform to store and sort the massive influx of information that new research keeps generating.

MediSapiens, for instance, is a Finnish company that hosts the world’s largest unified gene expression database and provides software that allows oncologists to cross-reference 19,000 genes (as well as 40 tissue types and 70 cancer types) across more than 20,000 patients. New research advancements are presented through its quarterly data updates, which include molecular profile data selection (the most recent, relevant gene expression data), clinical data curation (data annotations and validity analysis), and data unification (publication of journals). Nevertheless, simply storing this information is not enough to organize and compare the scientific findings that continue to emerge today.

The cost of sequencing a genome has gone from about $1 million in 2007 to about $1 thousand in 2012, which allows for an enormous increase in sequencing activity and data. Although it’s comforting that genome studies have become so financially accessible, this actually creates a problem for the efficient management of genomic datasets. At Hadoop Summit 2010, Jeremy Bruestle from Spiral Genetics, Inc. spoke about how Hadoop could help solve the challenge of big datasets in the field of genomics. Hadoop supports parallelization, offers good composability, and lets genomics problems be expressed as MapReduce jobs. According to Bruestle, assembly and annotation could become significantly less complicated. There is definitely a need for Hadoop in genomic studies.

In “Making sense of cancer genomic data”, Lynda Chin et al. explain that genome analysis has already developed into something extraordinary, leading to new cancer therapy targets and discoveries about certain cancer mutations and the medical responses they require. They also point out that these discoveries need to be handled more effectively. “For one, these large-scale genome characterization efforts involve generation and interpretation of data at an unprecedented scale which has brought into sharp focus the need for improved information technology infrastructure and new computational tools to render the data suitable for meaningful analysis.” This presents the perfect opportunity for Hadoop.

Fortunately, quite a few groups have already tapped into the power of Hadoop. It started at the University of Maryland in 2009, where Michael Schatz released his CloudBurst software on Hadoop, which specialized in mapping sequence data to reference genomes. Since then, many software applications have been developed that focus particularly on genome analysis. Crossbow, for example, is a software pipeline that runs components like a short read aligner (Bowtie) and a genotyper (SOAPsnp) on a Hadoop cluster. The UNC-CH Lineberger Bioinformatics Group has also stated that it uses Hadoop for computational analysis in its high-throughput sequencing services. Hadoop-BAM is another tool, one that works with the BAM (Binary Alignment/Map) format and uses MapReduce to perform functions like genotyping and peak calling.
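The MapReduce pattern behind tools of this kind can be illustrated with a small sketch. The Python below is not Hadoop, and it is not the code of CloudBurst or of any other tool named here; it is a single-process toy that assumes exact seed matches and only shows how read mapping can be split into a map, a shuffle, and a reduce phase. The seed length `K`, the function names, and the sequences are all made up for illustration.

```python
from collections import defaultdict

K = 4  # seed length; an illustrative assumption, real tools use longer seeds


def map_sequence(name, seq, is_reference):
    """Map phase: emit (k-mer, (name, offset, is_reference)) for every k-mer."""
    for i in range(len(seq) - K + 1):
        yield seq[i:i + K], (name, i, is_reference)


def shuffle(pairs):
    """Shuffle phase: group emitted values by key, as a MapReduce framework
    does between the map and reduce steps."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_seed(values):
    """Reduce phase: pair each read hit with each reference hit that shares
    the same seed, yielding a candidate start position on the reference."""
    ref_hits = [(n, off) for n, off, is_ref in values if is_ref]
    read_hits = [(n, off) for n, off, is_ref in values if not is_ref]
    for read_name, read_off in read_hits:
        for _ref_name, ref_off in ref_hits:
            yield read_name, ref_off - read_off


def map_reads(reference, reads):
    """Run all three phases and count seed 'votes' per (read, start)."""
    pairs = list(map_sequence("ref", reference, True))
    for name, seq in reads.items():
        pairs.extend(map_sequence(name, seq, False))
    votes = defaultdict(int)
    for values in shuffle(pairs).values():
        for read_name, start in reduce_seed(values):
            votes[(read_name, start)] += 1
    return dict(votes)


if __name__ == "__main__":
    # Hypothetical toy data: the read occurs at offset 3 of the reference.
    print(map_reads("ACGTTGCATGCA", {"read1": "TTGCAT"}))
    # prints {('read1', 3): 3, ('read1', 7): 1}
```

In this toy run the read's true position (offset 3) collects three seed votes, while a spurious position (offset 7) collects only one. On a real cluster the map and reduce calls would run in parallel on different machines, each working on one slice of the data, which is the property that makes this style of problem a good fit for Hadoop.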
Deepak Singh, the principal product manager at Amazon Web Services, said, “We've definitely seen an uptake in adopting Hadoop in the life sciences community, mostly targeting next-generation sequencing, and simple read mapping because what [developers] discovered was that a number of bioinformatics problems transferred very well to Hadoop, especially at scale.” Beyond sequencing, Hadoop has also sparked interest at pharmaceutical companies because it keeps data formatting from becoming a tedious and worrisome issue, allowing these companies to focus their efforts (and money) on building hypotheses from their collected data.

Together, the worlds of bioinformatics and big data are joining forces to devise innovative ways to spread knowledge about personalized cancer treatments. For example, NantWorks is working with Verizon to develop the Cancer Knowledge Action Network, a cloud database that will allow doctors to easily access protocols for specific cancer medicines and treatments. Dr. Patrick Soon-Shiong of NantWorks stated, “Our goal is to turn this data into actionable information at the point of care, enabling better care through mobile devices in hospitals, clinics and homes.” In essence, this network would be a self-learning health care system that is continually updated with reassessed information.

Big data bioinformatics projects, like CloudBurst and the Cancer Knowledge Action Network, are placing doctors and scientists at the very hub of cancer treatment research and development. Oncologists are now able to access the information they need on the spot to make medical decisions, and possibly save lives by evaluating and removing tumors before they spread. The momentum that big data gains every day has allowed for impressive advances in the world’s health. The key is to continue down this path in a knowledgeable and efficient way, so that upcoming research is used to its full advantage.