Week 2, Lesson 1: Homework (from PowerPoint)


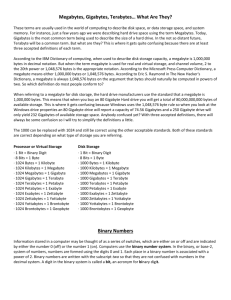

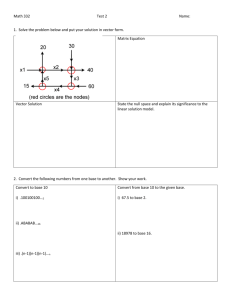

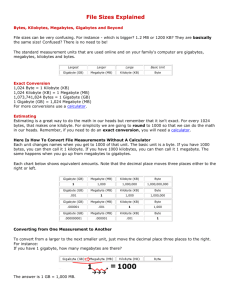

Units of data

Objectives:

i. Define the terms bit, nibble, byte, kilobyte, megabyte, gigabyte and terabyte.

Bit: A bit (short for "binary digit") is the smallest unit of measurement used to quantify computer data. It holds a single binary value, 0 or 1.

Nibble: In computers and digital technology, a nibble is four binary digits, or half of an eight-bit byte. A nibble can be conveniently represented by one hexadecimal digit.

Byte: In most computer systems, a byte is a unit of data that is eight binary digits long. A byte is the unit most computers use to represent a character such as a letter, number or typographic symbol (for example, "g", "5" or "?"). A byte can also hold part of a longer string of bits that an application uses as a larger unit (for example, the stream of bits that makes up a visual image for a program that displays images, or the string of bits that makes up the machine code of a computer program).

Kilobyte: A kilobyte is 10³, or 1,000, bytes. The kilobyte (abbreviated "K" or "KB") is the next unit of measurement after the byte.

Megabyte: A megabyte is 10⁶, or 1,000,000, bytes. One megabyte (abbreviated "MB") is equal to 1,000 kilobytes and comes before the gigabyte. Although a megabyte is technically 1,000,000 bytes, the term is often used synonymously with mebibyte, which contains 1,048,576 bytes (2²⁰, or 1,024 × 1,024 bytes).

Gigabyte: A measure of storage capacity equal to 2³⁰ (1,073,741,824) bytes, or 1,024 megabytes. As with the megabyte, the decimal value (10⁹ bytes) is also used.

Terabyte: A measure of storage capacity equal to 2⁴⁰ (1,099,511,627,776) bytes, or 1,024 gigabytes.

ii. Understand that data needs to be converted into a binary format to be processed by a computer.

Why binary?
- Computers can only understand 1s and 0s.
- They process and store binary numbers.
- Data in any other form cannot be processed directly.
- Data must be translated into binary form before it can be used. This process is called digitization.
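The unit sizes and the idea of digitizing data can be checked with a short Python sketch. It is illustrative only: the constant and function names (`KB`, `MIB`, `to_binary`, `nibbles_as_hex`, and so on) are hypothetical, and it assumes characters are encoded as UTF-8, which for plain letters, digits and symbols such as "g", "5" and "?" gives the same one-byte codes as ASCII.

```python
# Decimal (SI) units: powers of 10, as in the kilobyte and megabyte definitions.
KB = 10**3           # 1,000 bytes
MB = 10**6           # 1,000,000 bytes

# Binary units: powers of 2, as in the mebibyte, gigabyte and terabyte figures.
MIB = 2**20          # 1,048,576 bytes
GIB = 2**30          # 1,073,741,824 bytes
TIB = 2**40          # 1,099,511,627,776 bytes

BITS_PER_NIBBLE = 4
BITS_PER_BYTE = 8


def to_binary(text: str) -> list[str]:
    """Digitize text: encode it as UTF-8 and show each byte as 8 binary digits."""
    return [format(b, "08b") for b in text.encode("utf-8")]


def nibbles_as_hex(byte_value: int) -> str:
    """Split one byte into its two nibbles; each nibble is one hex digit."""
    high = byte_value >> 4      # upper four bits
    low = byte_value & 0x0F     # lower four bits
    return f"{high:X}{low:X}"


if __name__ == "__main__":
    print(MIB == 1024 * 1024)        # True
    print(TIB == 1024 * GIB)         # True
    print(to_binary("g5?"))          # ['01100111', '00110101', '00111111']
    print(nibbles_as_hex(ord("g")))  # 67
```

Each character in "g5?" becomes exactly one byte (eight bits), and each byte splits into two nibbles, which is why one byte is written as two hexadecimal digits.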