Answers

advertisement

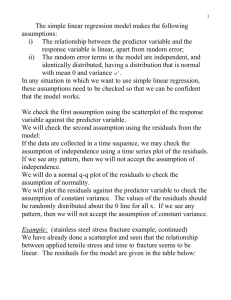

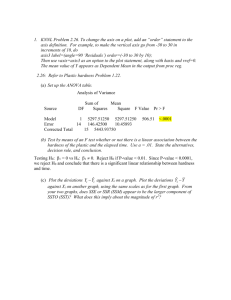

Project #2 Answers
STAT 870 Fall 2012

Complete the problems below. Within each part, include your R program output with the code inside of it and any additional information needed to explain your answer. Note that you will need to edit your output and code to make them look nice after you copy and paste them into your Word document.

1) (27 total points) Continue using taste as the response variable and acetic acid as the predictor variable, as in project #1 for the cheese data. Complete the following.

a) (2 points) Give the ANOVA table.

    > library(RODBC)
    > z<-odbcConnectExcel(xls.file = "C:\\chris\\unl\\Dropbox\\NEW\\STAT870\\projects\\Fall2012\\cheese.xls")
    > cheese<-sqlFetch(channel = z, sqtable = "Sheet1")
    > close(z)
    > head(cheese)
      Case taste Acetic   H2S Lactic
    1    1  12.3  4.543 3.135   0.86
    2    2  20.9  5.159 5.043   1.53
    3    3  39.0  5.366 5.438   1.57
    4    4  47.9  5.759 7.496   1.81
    5    5   5.6  4.663 3.807   0.99
    6    6  25.9  5.697 7.601   1.09
    > mod.fit1<-lm(formula = taste ~ Acetic, data = cheese)
    > summary(mod.fit1)

    Call:
    lm(formula = taste ~ Acetic, data = cheese)

    Residuals:
        Min      1Q  Median      3Q     Max
    -29.642  -7.443   2.082   6.597  26.581

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept)  -61.499     24.846  -2.475  0.01964 *
    Acetic        15.648      4.496   3.481  0.00166 **
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 13.82 on 28 degrees of freedom
    Multiple R-squared:  0.302,  Adjusted R-squared:  0.2771
    F-statistic: 12.11 on 1 and 28 DF,  p-value: 0.001658

    > anova(mod.fit1)
    Analysis of Variance Table

    Response: taste
              Df Sum Sq Mean Sq F value   Pr(>F)
    Acetic     1 2314.1 2314.14  12.114 0.001658 **
    Residuals 28 5348.7  191.03
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
    > var(cheese$taste)*(nrow(cheese) - 1)
    [1] 7662.887
    > save.anova<-anova(mod.fit1)
    > names(save.anova)
    [1] "Df"      "Sum Sq"  "Mean Sq" "F value" "Pr(>F)"
    > save.anova$"Sum Sq"
    [1] 2314.142 5348.745
    > sum(save.anova$"Sum Sq")
    [1] 7662.887

    Source of variation   df       SS        MS        F
    Regression             1   2314.1   2314.14   12.114
    Error                 28   5348.7    191.03
    Total                 29   7662.8

Note that 2314.1 + 5348.7 = 7662.8 in the ANOVA table, although SSTO is actually 7662.9 when rounded to one decimal place. The difference is due to rounding error.

b) (3 points) Using the relevant information from the ANOVA table, perform an F-test for β1 = 0 vs. β1 ≠ 0. Use α = 0.05.

i) H0: β1 = 0 vs. Ha: β1 ≠ 0
ii) F = 12.114, p-value = 0.0017
iii) α = 0.05
iv) Because 0.0017 < 0.05, reject H0.
v) There is sufficient evidence of a linear relationship between taste and acetic acid.

c) (3 points) What is R² for the sample regression model? Fully interpret its value.

R² = 0.302, as given in the summary(mod.fit1) output (equivalently, SSR/SSTO = 2314.142/7662.887). Approximately 30% of the variation in taste is accounted for by acetic acid through the sample regression model.

d) (8 points) Using my examine.mod.simple() function, comment on the following items with regard to the model:
i) Linearity of the regression function
ii) Constant error variance
iii) Normality of εi
iv) Outliers
Make sure to specifically refer to plots and numerical values in your comments.

    > save.it1<-examine.mod.simple(mod.fit.obj = mod.fit1, const.var.test = TRUE, boxcox.find = TRUE)
    > save.it1$levene
    Levene's Test for Homogeneity of Variance (center = median)
          Df F value  Pr(>F)
    group  1  4.5572 0.04167 *
          28
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
    > save.it1$bp

            Breusch-Pagan test

    data:  Y ~ X
    BP = 5.4974, df = 1, p-value = 0.01905

    > save.it1$lambda.hat
    [1] 0.6

[Figure: Diagnostic plots from examine.mod.simple() for mod.fit1: box plots and dot plots of the predictor and response variables; response vs. predictor; residuals vs. predictor; residuals vs. estimated mean response; semistudentized residuals (ei*) vs. estimated mean response; residuals vs. observation number; histogram of semistudentized residuals; normal Q-Q plot; Box-Cox transformation plot (log-likelihood vs. λ, with 95% interval).]

i) Linearity of the regression function: The plot of the residuals vs. acetic acid shows no pattern among the plotting points. This indicates the linearity assumption is reasonable.

ii) Constant error variance: The plot of the residuals vs. the estimated mean response shows less variability at smaller values of the estimated mean response than at larger values. However, there are far fewer observations at the smaller values, so concluding nonconstant error variance is difficult. The BP test has a p-value of 0.0191 and Levene's test has a p-value of 0.0417, so there is marginal evidence of nonconstant variance from these hypothesis tests. The Box-Cox transformation plot shows that the 95% confidence interval for λ does not contain 1, although its upper bound is quite close to 1. Again, there is marginal evidence of nonconstant variance.

iii) Normality of εi: The normal QQ-plot shows some deviation from the straight line toward the tails of the distribution. The histogram of the semistudentized residuals also suggests possible deviation from normality. However, the sample size is only 30, so it may be difficult to assess normality with a sample of this size.
iv) Outliers: A plot of the semistudentized residuals vs. the estimated mean response shows no points outside of the ±3 bounds. Therefore, there is no evidence of outliers.

e) (6 points) You should detect one or more potential problems with the model through the work in part d). Transform the response variable to be √taste. Why do you think this transformation was chosen? Determine if the transformation helps to solve a problem with the model.

This transformation was chosen because λ̂ was estimated to be 0.6. Rather than using 0.6, I chose 0.5 because it results in a more meaningful transformation (the square root).

    > mod.fit2<-lm(formula = sqrt(taste) ~ Acetic, data = cheese)
    > summary(mod.fit2)

    Call:
    lm(formula = sqrt(taste) ~ Acetic, data = cheese)

    Residuals:
        Min      1Q  Median      3Q     Max
    -3.5072 -0.9050  0.4291  0.8931  2.3050

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept)  -4.5639     2.7805  -1.641  0.11191
    Acetic        1.6719     0.5031   3.323  0.00249 **
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 1.547 on 28 degrees of freedom
    Multiple R-squared:  0.2828,  Adjusted R-squared:  0.2572
    F-statistic: 11.04 on 1 and 28 DF,  p-value: 0.002489

    > save.it2<-examine.mod.simple(mod.fit.obj = mod.fit2, const.var.test = TRUE, boxcox.find = TRUE, Y = sqrt(cheese$taste))
    > save.it2$levene
    Levene's Test for Homogeneity of Variance (center = median)
          Df F value Pr(>F)
    group  1  0.5849 0.4508
          28
    > save.it2$bp

            Breusch-Pagan test

    data:  Y ~ X
    BP = 1.0556, df = 1, p-value = 0.3042

    > save.it2$lambda.hat
    [1] 1.19

[Figure: Diagnostic plots from examine.mod.simple() for mod.fit2 (response √taste): box plots and dot plots of the predictor and response variables; response vs. predictor; residuals vs. predictor; residuals vs. estimated mean response; semistudentized residuals (ei*) vs. estimated mean response; residuals vs. observation number; histogram of semistudentized residuals; normal Q-Q plot; Box-Cox transformation plot (log-likelihood vs. λ, with 95% interval).]

The transformation appears to help solve the nonconstant error variance problem, although there is still a little less variability for smaller values of Ŷ than for larger values. The 95% confidence interval for λ now contains 1 (λ̂ = 1.19). Also, both the BP and Levene's tests have large p-values (0.3042 and 0.4508, respectively).

f) (3 points) Using the new model that was estimated for part e), find the 95% confidence intervals for taste (not √taste) when acetic acid has a value of 4.5 and 6.4. Compare the intervals to those found in project #1. Which intervals (project #1 or #2) are more likely to have 95% confidence? Explain.

The purpose of this problem is to make sure you understand how to find the response variable in its original form. Also, I wanted you to think about what happens if model assumptions are not satisfied.

    > pred1<-predict(object = mod.fit1, newdata = data.frame(Acetic = c(4.5, 6.4)), level = 0.95, interval = "confidence")
    > pred2<-predict(object = mod.fit2, newdata = data.frame(Acetic = c(4.5, 6.4)), level = 0.95, interval = "confidence")
    > pred1
           fit       lwr      upr
    1  8.91634 -1.628502 19.46118
    2 38.64710 28.863754 48.43044
    > pred2^2
            fit       lwr      upr
    1  8.759054  3.166644 17.13656
    2 37.652245 25.414686 52.28717

The 95% confidence intervals for taste (using the transformation-based model) are 3.17 < E(taste) < 17.14 when acetic acid is 4.5 and 25.41 < E(taste) < 52.29 when acetic acid is 6.4.
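The back-transformation in part f) can be checked by hand. The short R sketch below recomputes the two point estimates on the taste scale from the rounded coefficients printed by summary(mod.fit2) and squares them; small differences from the pred2^2 output come from that rounding. The helper back.transform.ci() is a hypothetical name introduced here for illustration, not part of the course code. It squares interval endpoints, which keeps the 95% level because squaring is increasing for nonnegative values; truncating a negative lower endpoint at 0 first is an added assumption, and it does not change coverage because √taste cannot be negative.

```r
# Rounded coefficients copied from summary(mod.fit2): sqrt(taste) ~ Acetic
b0 <- -4.5639
b1 <- 1.6719
acetic <- c(4.5, 6.4)

# Estimated mean of sqrt(taste) at each acetic acid value
fit.sqrt <- b0 + b1 * acetic

# Back-transform the point estimates to the taste scale by squaring
fit.taste <- fit.sqrt^2

# Hypothetical helper: square the endpoints of an interval computed on the
# sqrt(taste) scale. Squaring is monotone only for nonnegative values, so a
# negative lower endpoint is truncated at 0 before squaring.
back.transform.ci <- function(lwr, upr) {
  cbind(lwr = pmax(lwr, 0)^2, upr = upr^2)
}

round(fit.taste, 2)
```

With the fitted objects in memory, calling back.transform.ci(pred2[, "lwr"], pred2[, "upr"]) should reproduce the lwr and upr columns of pred2^2, since both lower endpoints on the square-root scale are positive here.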
In project #1, the corresponding intervals were -1.63 < E(taste) < 19.46 and 28.86 < E(taste) < 48.43, so we do see some differences: at an acetic acid value of 4.5, the new interval is narrower and no longer includes negative values, while at 6.4 the new interval is wider. The new intervals for this project are more likely to have 95% confidence because the model's assumptions are closer to being satisfied.

g) (2 points) Are there any other problems with the model after what was done in part e)? Justify your answer. Note that you do not need to actually implement any changes to the model.

There still may be problems with the normality of √taste. The normal QQ-plot shows some deviation from the straight line toward the tails of the distribution. The histogram of the semistudentized residuals also suggests possible deviation from normality. However, the sample size is only 30, so it may be difficult to assess normality with a sample of this size.

2) (12 total points) The extra credit of project #1 asked you to simulate data from a sample regression model using taste as the response variable (not transformed) and acetic acid as the predictor variable. Complete the following using my simulated data from the answer key.

a) (1 point) Run my code to simulate the data. Give the simulated Y values to show that you simulated the data correctly.

    > sum.fit1<-summary(mod.fit1)
    > set.seed(7172)
    > Y.star<-mod.fit1$coefficients[1] + mod.fit1$coefficients[2]*cheese$Acetic + rnorm(n = 30, mean = 0, sd = sum.fit1$sigma)
    > Y.star
     [1] -10.310369  19.442977  46.226673  22.374284   1.770760  39.405165  28.965504  37.815467  14.692547   4.394592
    [11]  47.148690  49.616239  21.646524  12.658015  27.213700  15.769941  12.332883  14.699950  27.294941  14.316974
    [21]   5.417620   2.693531  24.563771  27.522578  26.537968  52.735022  42.552976  19.317254  30.032733  36.492530

b) (8 points) Estimate the appropriate regression model for the simulated data.
Using my examine.mod.simple() function, comment on the following items with regard to the model:
i) Linearity of the regression function
ii) Constant error variance (do not examine the Box-Cox transformation value)
iii) Normality of εi
iv) Outliers
Make sure to specifically refer to plots and numerical values in your comments.

    > mod.fit.sim<-lm(formula = Y.star ~ cheese$Acetic)
    > summary(mod.fit.sim)

    Call:
    lm(formula = Y.star ~ cheese$Acetic)

    Residuals:
        Min      1Q  Median      3Q     Max
    -24.523  -6.374   0.103   4.641  24.887

    Coefficients:
                  Estimate Std. Error t value Pr(>|t|)
    (Intercept)    -80.467     20.509  -3.924 0.000516 ***
    cheese$Acetic   18.973      3.711   5.113 2.04e-05 ***
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 11.41 on 28 degrees of freedom
    Multiple R-squared:  0.4828,  Adjusted R-squared:  0.4643
    F-statistic: 26.14 on 1 and 28 DF,  p-value: 2.038e-05

    > save.it.sim<-examine.mod.simple(mod.fit.obj = mod.fit.sim, const.var.test = TRUE, boxcox.find = TRUE)
    > save.it.sim$levene
    Levene's Test for Homogeneity of Variance (center = median)
          Df F value Pr(>F)
    group  1  0.1333 0.7178
          28
    > save.it.sim$bp

            Breusch-Pagan test

    data:  Y ~ X
    BP = 0.0608, df = 1, p-value = 0.8052

[Figure: Diagnostic plots from examine.mod.simple() for mod.fit.sim: box plots and dot plots of the predictor and response variables; response vs. predictor; residuals vs. predictor; residuals vs. estimated mean response; semistudentized residuals (ei*) vs. estimated mean response; residuals vs. observation number; histogram of semistudentized residuals; normal Q-Q plot.]

i) Linearity of the regression function: The plot of the residuals vs. acetic acid shows no pattern among the plotting points. This indicates the linearity assumption is reasonable.

ii) Constant error variance: The plot of the residuals vs. the estimated mean response shows similar levels of variability in the residuals. This indicates that there is not sufficient evidence against the constant variance assumption. The BP test has a p-value of 0.81 and Levene's test has a p-value of 0.72, so again there is not sufficient evidence against the constant variance assumption.

iii) Normality of εi: The normal QQ-plot has very little deviation from the straight line in the tails. The histogram of the semistudentized residuals roughly follows a normal distribution, especially for a sample of only 30.

iv) Outliers: A plot of the semistudentized residuals vs. the estimated mean response shows no points outside of the ±3 bounds. Therefore, there is no evidence of outliers.

c) (3 points) Answer one of the following:

i) If you found potential problems, what could be a reason for them?
Because we simulated the data, we know the model is correct and that there should not be any problems. Why, then, might some problems have been detected with the QQ-plot? One potential reason is the sample size of only 30.

ii) If you did not find potential problems, why is this expected?
Based on what I saw in the plots and the summary measures, I would have been satisfied with the model. This is expected because we simulated the data with all of the correct assumptions!

Out of curiosity, I increased the sample size to 900 using the following code.
    > set.seed(7172)
    > Y.star2<-mod.fit1$coefficients[1] + mod.fit1$coefficients[2]*rep(cheese$Acetic, times = 30) + rnorm(n = 900, mean = 0, sd = sum.fit1$sigma)
    > mod.fit.sim2<-lm(formula = Y.star2 ~ rep(cheese$Acetic, times = 30))
    > summary(mod.fit.sim2)

    Call:
    lm(formula = Y.star2 ~ rep(cheese$Acetic, times = 30))

    Residuals:
        Min      1Q  Median      3Q     Max
    -41.952  -9.361  -0.046   8.797  43.113

    Coefficients:
                                   Estimate Std. Error t value Pr(>|t|)
    (Intercept)                    -67.6876     4.4980  -15.05   <2e-16 ***
    rep(cheese$Acetic, times = 30)  16.7935     0.8139   20.63   <2e-16 ***
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 13.7 on 898 degrees of freedom
    Multiple R-squared:  0.3216,  Adjusted R-squared:  0.3209
    F-statistic: 425.8 on 1 and 898 DF,  p-value: < 2.2e-16

    > save.it.sim<-examine.mod.simple(mod.fit.obj = mod.fit.sim2, const.var.test = TRUE)

[Figure: Diagnostic plots from examine.mod.simple() for mod.fit.sim2 (n = 900): box plots and dot plots of the predictor and response variables; response vs. predictor; residuals vs. predictor; residuals vs. estimated mean response; semistudentized residuals (ei*) vs. estimated mean response; residuals vs. observation number; histogram of semistudentized residuals; normal Q-Q plot.]

Notice the histogram has a much closer shape to a normal distribution. Also, the QQ-plot has almost all of its points very close to the straight line. Thus, normality looks to be satisfied.
This occurs because the larger sample size makes it easier to distinguish normal from non-normal data. Also, notice that there are 4 semistudentized residuals just outside of the -3 and 3 boundaries. For a normal distribution, we would expect about

    > 900 - 900*(pnorm(q = 3) - pnorm(q = -3))
    [1] 2.429816

to be outside of the boundaries. Thus, even though we have some values just outside of these boundary lines, this is to be expected. In an actual application, one should be worried about a model if considerably more than 2.4 values fell outside the boundaries, especially if some were much farther outside than what we observe here.
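The expected count above is n times the two-tailed standard normal probability beyond ±3. As an extension of that argument (not part of the original answer), the R sketch below also computes the binomial probability of seeing four or more of the 900 values beyond ±3. It treats the semistudentized residuals as independent N(0, 1) draws, which is only an approximation because residuals are slightly correlated and are scaled by an estimate of σ rather than σ itself.

```r
n <- 900

# Two-tailed probability that a standard normal value falls outside [-3, 3];
# this equals 1 - (pnorm(3) - pnorm(-3)) used in the code above
p.outside <- 2 * pnorm(q = -3)

# Expected number of semistudentized residuals beyond +/- 3
expected.outside <- n * p.outside
expected.outside

# Approximate probability of 4 or more such residuals, treating each
# residual as an independent Bernoulli trial (an approximation)
p.four.or.more <- pbinom(q = 3, size = n, prob = p.outside, lower.tail = FALSE)
p.four.or.more
```

A probability that is not small here supports the conclusion above that four values just past ±3 are consistent with normally distributed errors.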