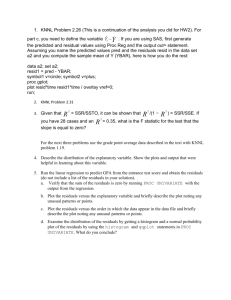

Rice, page 556, problem 24


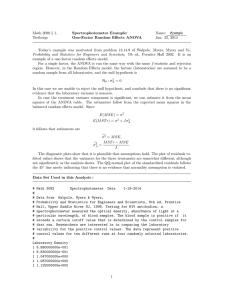

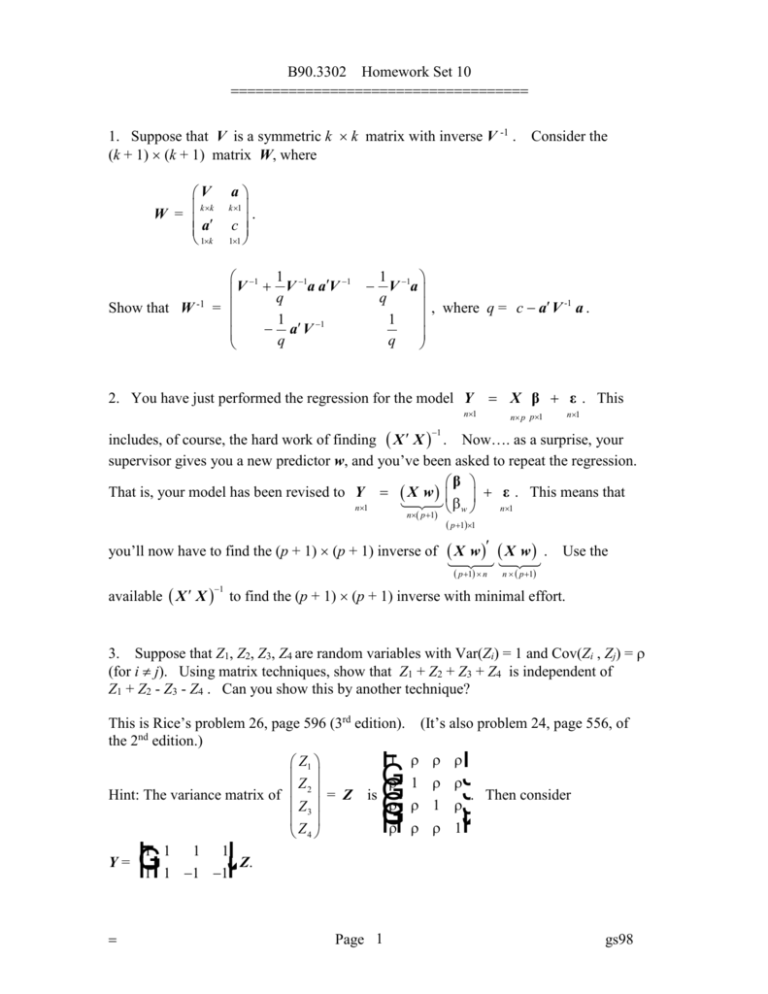

B90.3302 Homework Set 10

1. Suppose that $V$ is a symmetric $k \times k$ matrix with inverse $V^{-1}$. Consider the $(k+1) \times (k+1)$ matrix $W$, where

$$W = \begin{pmatrix} V & a \\ a' & c \end{pmatrix},$$

with $V$ of size $k \times k$, $a$ of size $k \times 1$, and $c$ a scalar ($1 \times 1$). Show that

$$W^{-1} = \begin{pmatrix} V^{-1} + \frac{1}{q} V^{-1} a a' V^{-1} & -\frac{1}{q} V^{-1} a \\ -\frac{1}{q} a' V^{-1} & \frac{1}{q} \end{pmatrix}, \qquad \text{where } q = c - a' V^{-1} a.$$

2. You have just performed the regression for the model $Y = X\beta + \varepsilon$, where $Y$ and $\varepsilon$ are $n \times 1$, $X$ is $n \times p$, and $\beta$ is $p \times 1$. This includes, of course, the hard work of finding $(X'X)^{-1}$. Now… as a surprise, your supervisor gives you a new predictor $w$ ($n \times 1$), and you've been asked to repeat the regression. That is, your model has been revised to

$$Y = \begin{pmatrix} X & w \end{pmatrix} \begin{pmatrix} \beta \\ \beta_w \end{pmatrix} + \varepsilon,$$

where $\begin{pmatrix} X & w \end{pmatrix}$ is $n \times (p+1)$ and $\beta_w$ is a scalar. This means that you'll now have to find the $(p+1) \times (p+1)$ inverse of $\begin{pmatrix} X & w \end{pmatrix}' \begin{pmatrix} X & w \end{pmatrix}$. Use the available $(X'X)^{-1}$ to find this $(p+1) \times (p+1)$ inverse with minimal effort.

3. Suppose that $Z_1, Z_2, Z_3, Z_4$ are random variables with $\text{Var}(Z_i) = 1$ and $\text{Cov}(Z_i, Z_j) = \rho$ (for $i \neq j$). Using matrix techniques, show that $Z_1 + Z_2 + Z_3 + Z_4$ is independent of $Z_1 + Z_2 - Z_3 - Z_4$. Can you show this by another technique?

This is Rice's problem 26, page 596 (3rd edition). (It's also problem 24, page 556, of the 2nd edition.)

Hint: The variance matrix of $Z = (Z_1, Z_2, Z_3, Z_4)'$ is

$$\begin{pmatrix} 1 & \rho & \rho & \rho \\ \rho & 1 & \rho & \rho \\ \rho & \rho & 1 & \rho \\ \rho & \rho & \rho & 1 \end{pmatrix}.$$

Then consider

$$Y = \begin{pmatrix} 1 & 1 & 1 & 1 \\ 1 & 1 & -1 & -1 \end{pmatrix} Z.$$

Page 1 gs98

4. Using the model $Y = X\beta + \varepsilon$,

(a) show that the vector of residuals is orthogonal to every column of $X$.

(b) if the design matrix $X$ has a column $\mathbf{1}$ (meaning that the model has an intercept), show that the residuals sum to zero.

(c) show why the assumption about the column $\mathbf{1}$ is critical to (b).

This is adapted from Rice, 3rd edition, problem 19, page 595. (In the 2nd edition, this is problem 17 on page 556.) Observe that Rice uses $e$ for the vector of noise terms and $\hat{e}$ for the residuals. (In this course we have preferred $\varepsilon$ for the vector of noise terms and $e$ for the residuals.)

HINTS: The residuals are found as

$$e = Y - \hat{Y} = Y - X(X'X)^{-1}X'Y = \left[I - X(X'X)^{-1}X'\right]Y = (I - P_X)Y.$$

Part (a) asks you to show that $e'X = 0$, where $e'$ is $1 \times n$, $X$ is $n \times p$, and $0$ is $1 \times p$. Part (b) asks you to show that $e'\mathbf{1} = 0$, where $\mathbf{1}$ is $n \times 1$ and $0$ is a scalar.

5. Suppose that $Y_i = \beta x_i + \varepsilon_i$, where the $\varepsilon_i$'s are independent normal random variables with mean 0 and standard deviation $\sigma$. This is the regression-through-the-origin model.

(a) Find the least squares estimate of $\beta$.

(b) Find the maximum likelihood estimate of $\beta$.

6. This is the same setup as the previous problem, but now the $\varepsilon_i$'s are independent normal with mean 0 and $\text{Var}(\varepsilon_i) = (\sigma x_i)^2$.

(a) Find the least squares estimate of $\beta$.

(b) Find the maximum likelihood estimate of $\beta$.
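For Problem 1, a quick numerical sanity check of the stated formula can be reassuring before attempting the algebra. The sketch below assumes Python with NumPy (the homework names no software), and the helper name `bordered_inverse` is hypothetical. It builds $W^{-1}$ from $V^{-1}$, $a$, and $c$ only, then compares the result with a direct inverse of a random symmetric positive definite $W$.

```python
import numpy as np


def bordered_inverse(V_inv: np.ndarray, a: np.ndarray, c: float) -> np.ndarray:
    """Hypothetical helper: W^{-1} for W = [[V, a], [a', c]] via the Problem 1 formula.

    Uses only V^{-1}, a, and c; assumes V is symmetric and q != 0.
    """
    k = V_inv.shape[0]
    a = a.reshape(k, 1)                 # treat a as a k x 1 column
    Va = V_inv @ a                      # V^{-1} a, shape (k, 1)
    q = c - (a.T @ Va).item()           # q = c - a' V^{-1} a, a scalar
    W_inv = np.empty((k + 1, k + 1))
    # Top-left block: V^{-1} + (1/q) V^{-1} a a' V^{-1}
    # (a' V^{-1} equals (V^{-1} a)' because V^{-1} is symmetric)
    W_inv[:k, :k] = V_inv + (Va @ Va.T) / q
    W_inv[:k, k] = -Va[:, 0] / q        # top-right block: -(1/q) V^{-1} a
    W_inv[k, :k] = -Va[:, 0] / q        # bottom-left block: -(1/q) a' V^{-1}
    W_inv[k, k] = 1.0 / q               # bottom-right entry: 1/q
    return W_inv


# Numerical check on a random symmetric positive definite W (so V is invertible and q > 0)
rng = np.random.default_rng(0)
k = 4
B = rng.standard_normal((k + 1, k + 1))
W = B @ B.T + (k + 1) * np.eye(k + 1)
V, a, c = W[:k, :k], W[:k, k], W[k, k]

W_inv = bordered_inverse(np.linalg.inv(V), a, c)
print(np.allclose(W_inv, np.linalg.inv(W)))   # True
```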
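For Problem 3, the matrix route in the hint can be checked numerically: $\text{Var}(Y) = A\Sigma A'$, where $A$ is the $2 \times 4$ matrix in the hint and $\Sigma$ is the variance matrix of $Z$. This is a minimal NumPy sketch; the function name `sums_covariance` is hypothetical, and the values of $\rho$ are arbitrary choices within $-1/3 \le \rho \le 1$ (the range in which $\Sigma$ is a valid variance matrix). It only confirms that the covariance of the two sums is zero; going from zero covariance to independence needs an extra assumption, such as joint normality of the $Z$'s.

```python
import numpy as np


def sums_covariance(rho: float) -> np.ndarray:
    """Var(Y) = A Sigma A' for Y = A Z, with Var(Z_i) = 1 and Cov(Z_i, Z_j) = rho."""
    # Variance matrix of Z: 1 on the diagonal, rho everywhere else
    sigma = (1.0 - rho) * np.eye(4) + rho * np.ones((4, 4))
    # Row 1 forms Z1 + Z2 + Z3 + Z4; row 2 forms Z1 + Z2 - Z3 - Z4
    A = np.array([[1.0, 1.0, 1.0, 1.0],
                  [1.0, 1.0, -1.0, -1.0]])
    return A @ sigma @ A.T


for rho in (-0.2, 0.0, 0.5, 0.9):
    var_Y = sums_covariance(rho)
    # Off-diagonal entry is the covariance of the two sums (zero up to rounding);
    # the diagonal entries are 4 + 12*rho and 4 - 4*rho
    print(rho, var_Y[0, 1], var_Y[0, 0], var_Y[1, 1])
```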
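For Problem 4, the hint's formula $e = (I - P_X)Y$ can be computed directly on simulated data to see parts (a) and (b) numerically, and to see what changes when the column of 1s is dropped. This is a minimal NumPy sketch; the data-generating model and the helper name `residuals` are hypothetical choices for illustration, and $(X'X)^{-1}$ is formed explicitly only to mirror the hint (it is not the numerically preferred way to fit a regression).

```python
import numpy as np


def residuals(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Residuals e = (I - P_X) Y with P_X = X (X'X)^{-1} X', as in the hint."""
    P_X = X @ np.linalg.inv(X.T @ X) @ X.T     # projection ("hat") matrix
    return (np.eye(X.shape[0]) - P_X) @ Y


# Hypothetical simulated data: a true intercept of 3 and two predictors
rng = np.random.default_rng(1)
n = 50
x1 = rng.standard_normal(n)
x2 = rng.standard_normal(n)
Y = 3.0 + 2.0 * x1 - 1.0 * x2 + rng.standard_normal(n)

X_int = np.column_stack([np.ones(n), x1, x2])   # design matrix with a column of 1s
X_noint = np.column_stack([x1, x2])             # same predictors, no column of 1s

e_int = residuals(X_int, Y)
e_noint = residuals(X_noint, Y)

print(np.abs(e_int @ X_int).max())      # part (a): e'X = 0 up to rounding error
print(e_int.sum())                      # part (b): residuals sum to 0 up to rounding
print(np.abs(e_noint @ X_noint).max())  # part (a) still holds without the intercept
print(e_noint.sum())                    # but this sum is typically far from 0
```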