HW3sol

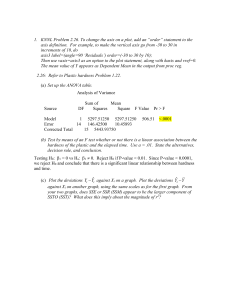

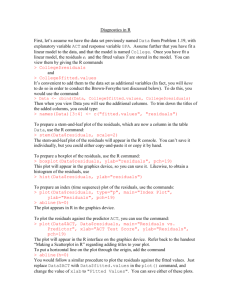

advertisement

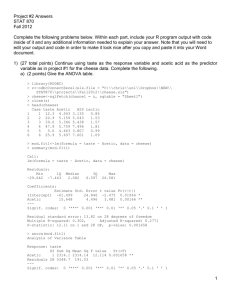

1. KNNL Problem 2.26. To change the axis on a plot, add an “order” statement to the axis definition. For example, to make the vertical axis go from -30 to 30 in increments of 10, do axis3 label=(angle=90 ‘Residuals’) order=(-30 to 30 by 10); Then use vaxis=axis3 as an option to the plot statement, along with haxis and vref=0. The mean value of Y appears as Dependent Mean in the output from proc reg. 2.26: Refer to Plastic hardness Problem 1.22. (a) Set up the ANOVA table. Analysis of Variance Source DF Sum of Mean Squares Square F Value Pr > F Model 1 5297.51250 5297.51250 Error 14 146.42500 10.45893 Corrected Total 15 5443.93750 506.51 <.0001 (b) Test by means of an F test whether or not there is a linear association between the hardness of the plastic and the elapsed time. Use a = .01. State the alternatives, decision rule, and conclusion. Testing H0: β1 = 0 vs Ha: β1 0. Reject H0 if P-value < 0.01. Since P-value < 0.0001, we reject H0 and conclude that there is a significant linear relationship between hardness and time. (c) Plot the deviations Yi Yˆi against Xi on a graph. Plot the deviations Yˆi Y against Xi on another graph, using the same scales as for the first graph. From your two graphs, does SSE or SSR (SSM) appear to be the larger component of SSTO (SST)? What does this imply about the magnitude of r2? Re s i d u a l s ( Ob s e r v e d - Pr e d i c t e d ) 30 20 10 0 - 10 - 20 - 30 10 20 30 40 t i me ( h o u r s ) De v i a t i o n s ( Pr e d i c t e d - Me a n ) 30 20 10 0 - 10 - 20 - 30 10 20 30 40 t i me ( h o u r s ) On the graphs, it is apparent that the residuals Yi Yˆi are much smaller than the deviations Yˆ Y of the predictors from the mean. Thus the SSM should be much larger i than the SSE, and we expect r 2 SSM to be close to 1. SST (d) Calculate r2 and r. The values of r2 and r can be obtained from the SAS output: r2 = 0.9731 and so r = 0.9865. (Choose the positive square root because b1 > 0). Root MSE 3.23403 R-Square 0.9731 Dependent Mean 225.56250 Adj R-Sq 0.9712 Coeff Var 1.43376 2. Problem 2.31 a) Source Regression Error Total SS 93,462,942 455,273,165 548,736,107 df MS 1 93,462,942 82 5,552,112 83 b). H0 : β1 = 0, Ha : β1 = 0. F ∗ = 93, 462, 942/5, 552, 112 = 16.8338, F (.99; 1, 82) =6.9544. If F ∗ ≤ 6.9544 conclude H0 , otherwise Ha. Conclude Ha . (t∗ )2 = (−4.102895)2 = 16.8338 = F ∗ . [t(.995; 82)]2 = (2.63712)2 = 6.9544 = F (.99; 1, 82) so the two tests are, indeed, equivalent c) SSR = 93, 462, 942 which is 17.03% or 0.1703 d) -0.4127 3. Given that R2 = SSM/SST, it can be shown that R2 / (1 – R2) = SSM / SSE. If you have n = 28 cases and R2 = 0.35, what is the F statistic for the test that the slope is equal to zero? dfR = 1 and dfE = n – 2 = 26. The F-statistic is MSM SSM / df M SSM df E R 2 26 0.35 26 F 14 MSE SSE / df E SSE df M 0.65 1 R2 1 The α = 0.05 critical value for (1,24) df is 4.26 (from page 1342) and the P-value using that df is 0.001. The results for (1,26) df will be similar. We reject H0: β1 = 0 and conclude that the slope is non-zero. 4. Problem 2.54 – Yes to the first question and no to the second one. 5. KNNL Problem 2.55 2 _ _ _ ^ _ SSR Y i Y b 0 b1X i Y b 0 b1X i b 0 b1X b X i X 2 2 n n n i 1 i 1 i 1 6. KNNL Problem 2.57 (The degrees of freedom dfR referred to in the problem are error degrees of freedom for the reduced model, i.e. dfE(R)).: The normal error regression model (2.1) is assumed to be applicable. 2 1 n i 1 (a) When testing H0: β1 = 5 versus Ha: β1 5 by means of a general linear test, what is the reduced model? What are the degrees of freedom dfR? The reduced model is Y 0 5 X . Since there is only one model parameter to estimate, the error df for the reduced model is n-1. (b) When testing H0: β0 = 2, β1 = 5 versus Ha: not both β0 = 2 and β1 = 5 by means of a general linear test, what is the reduced model? What are the degrees of freedom dfR? The reduced model is Y 2 5 X . Since there are no model parameters to estimate, the error df for the reduced model is n. For the next 3 questions use the grade point average data described in the text with KNNL problem 1.19. 7. Describe the distribution of the explanatory variable. Show the plots and output that were helpful in learning about this variable. Using PROC UNIVARIATE we see there are 20 observations ranging between 3.9 and 6.3 with a mean of 5 and median of 4.85; their standard deviation is 0.69. There are no extreme observations (i.e., ones far away from the others) as shown in the histogram plot below. The distribution appears to be reasonably symmetric but not completely so; it has a slight skew to the right. The UNIVARIATE Procedure Variable: testscore Moments N 20 Sum Weights Mean 5 Sum Observations Std Deviation 0.69282032 Variance Skewness 0.49695171 Kurtosis Uncorrected SS 509.12 Corrected SS Coeff Variation 13.8564065 Std Error Mean Basic Statistical Measures Location Variability Mean 5.000000 Std Deviation Median 4.850000 Variance Mode 4.700000 Range Interquartile Range Extreme Observations ----Lowest-------Highest--Value Obs Value Obs 3.9 4 5.5 1 4.1 19 5.9 18 4.3 16 6.0 7 4.3 10 6.2 6 4.5 5 6.3 14 Stem 6 5 5 4 4 3 Leaf 023 59 0024 5677789 133 9 ----+----+----+----+ # 3 2 4 7 3 1 20 100 0.48 -0.595083 9.12 0.15491933 0.69282 0.48000 2.40000 0.90000 Boxplot | | +--+--+ *-----* | | 35 30 25 P e r c e n t 20 15 10 5 0 2. 7 3. 3 3. 9 4. 5 5. 1 5. 7 6. 3 6. 9 7. 5 t est scor e 6. 5 6. 0 5. 5 t e s t s c o r e 5. 0 4. 5 4. 0 3. 5 - 2 - 1 0 No r ma l 1 2 Qu a n t i l e s 8. Run the linear regression to predict GPA from the entrance test score and obtain the residuals (do not include a list of the residuals in your solution). a. Verify that the sum of the residuals is zero by running PROC UNIVARIATE with the output from the regression. The UNIVARIATE Procedure Variable: resid (Residual) Moments N Mean Std Deviation Skewness Uncorrected SS Coeff Variation 20 0 0.4234117 0.05677081 3.40627193 . Sum Weights Sum Observations Variance Kurtosis Corrected SS Std Error Mean 20 0 0.17927747 -1.0342174 3.40627193 0.09467773 b. Plot the residuals versus the explanatory variable and briefly describe the plot noting any unusual patterns or points. r esi dual pl ot 0. 8 0. 7 0. 6 0. 5 0. 4 0. 3 0. 2 0. 1 0. 0 - 0. 1 - 0. 2 - 0. 3 - 0. 4 - 0. 5 - 0. 6 - 0. 7 - 0. 8 3 4 5 Tes t 6 7 Sc o r e There does not appear to be any obvious pattern or outlier in this residual plot. It looks like a random scatter of points and the variance is reasonably constant. c. Plot the residuals versus the order in which the data appear in the data file and briefly describe the plot noting any unusual patterns or points. r esi dual pl ot 0. 8 0. 7 0. 6 0. 5 0. 4 0. 3 0. 2 0. 1 0. 0 - 0. 1 - 0. 2 - 0. 3 - 0. 4 - 0. 5 - 0. 6 - 0. 7 - 0. 8 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 Ob s e r v a t i o n S e q u e n c e There is some suggestion that the residuals may become more negative over time but it does not appear to be a big problem. d. Examine the distribution of the residuals by getting a histogram and a normal probability plot of the residuals by using the histogram and qqplot statements in PROC UNIVARIATE. What do you conclude? 30 25 20 P e r c e n t 15 10 5 0 - 1. 65 - 1. 35 - 1. 05 - 0. 75 - 0. 45 - 0. 15 0. 15 0. 45 0. 75 1. 05 1. 35 1. 65 Re s i d u a l 1. 00 0. 75 0. 50 R e s i d u a l 0. 25 0 - 0. 25 - 0. 50 - 0. 75 - 2 - 1 0 No r ma l 1 2 Qu a n t i l e s The residuals appear reasonably normal and symmetric since the histogram appears fairly normal and the qqplot is fairly linear. There is some suggestion of an S-shape to the qqplot and a flattening of the histogram, but it is not too bad.