Lecture 6: Boosting

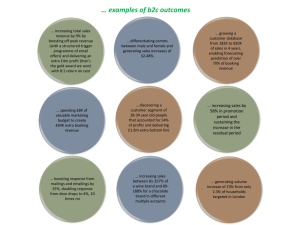

Lecture 6

Ensemble Learning (1)

Boosting

AdaBoost

Boosting is an additive model

Brief intro to lasso

The relationship

Boosting

Combine multiple classifiers.

Construct a sequence of weak classifiers, and combine them into a strong classifier by a weighted majority vote.

“weak”: only slightly better than random coin-tossing
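A minimal sketch of the weighted majority vote described above. The function name, the example votes and the vote weights are all hypothetical and chosen only for illustration; labels are assumed to be coded as -1 and +1.

```python
import numpy as np

def weighted_majority_vote(predictions, alphas):
    """Combine weak classifier outputs in {-1, +1} by a weighted vote.

    predictions: array of shape (M, n); row m holds classifier m's labels.
    alphas: array of shape (M,); the vote weight of each classifier.
    """
    predictions = np.asarray(predictions, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    score = alphas @ predictions          # weighted sum of votes per observation
    return np.where(score >= 0, 1, -1)    # ties go to +1 (an arbitrary choice)

# Three hypothetical weak classifiers voting on four observations.
votes = np.array([[ 1,  1, -1, -1],
                  [ 1, -1, -1,  1],
                  [-1,  1, -1,  1]])
weights = np.array([0.9, 0.4, 0.3])
combined = weighted_majority_vote(votes, weights)
print(combined)
```

On the last observation the two lower-weight classifiers vote +1, but the single higher-weight classifier outvotes them, which is the difference between a weighted and a plain majority vote.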

Some properties:

Flexible.

Able to select features.

Good generalization.

Could fit noise.

Boosting

AdaBoost (Freund & Schapire, 1995):

Boosting

“A Tutorial on Boosting”, Yoav Freund and Rob Schapire

Boosting

Each weak classifier receives a weight in the final model.

Each individual observation also carries a weight. Notice that it accumulates from step 1.

If an observation is correctly classified at this step, its weight doesn’t change. If incorrectly classified, its weight increases.
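The algorithm box these notes annotate is not preserved in this text. The sketch below is one common formulation of AdaBoost that matches the description above: the weights of correctly classified observations stay unchanged and those of misclassified ones increase. All function names, the exhaustive stump search and the ten-point toy data set are assumptions made for illustration, not the lecture's own code.

```python
import numpy as np

def fit_stump(X, y, w):
    """Return the (feature, threshold, sign) stump with the lowest weighted error."""
    best = (0, 0.0, 1, np.inf)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for sign in (1, -1):
                pred = np.where(X[:, j] <= thr, -sign, sign)
                err = np.sum(w * (pred != y))
                if err < best[3]:
                    best = (j, thr, sign, err)
    return best[:3]

def stump_predict(stump, X):
    j, thr, sign = stump
    return np.where(X[:, j] <= thr, -sign, sign)

def adaboost(X, y, n_rounds=3):
    n = len(y)
    w = np.full(n, 1.0 / n)                 # observation weights, uniform at step 1
    stumps, alphas = [], []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)
        miss = stump_predict(stump, X) != y
        err = np.sum(w * miss) / np.sum(w)  # weighted error rate
        err = np.clip(err, 1e-12, 1 - 1e-12)
        alpha = np.log((1 - err) / err)     # weight of this classifier in the vote
        w = w * np.exp(alpha * miss)        # unchanged if correct, larger if wrong
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, np.array(alphas)

def adaboost_predict(stumps, alphas, X):
    score = sum(a * stump_predict(s, X) for s, a in zip(stumps, alphas))
    return np.where(score >= 0, 1, -1)

# Hypothetical one-feature toy data that no single stump can separate.
X = np.arange(10, dtype=float).reshape(-1, 1)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
stumps, alphas = adaboost(X, y, n_rounds=3)
pred = adaboost_predict(stumps, alphas, X)
print("training accuracy:", np.mean(pred == y))
```

The best single stump misclassifies three of the ten points here, while the weighted vote of three stumps should reproduce every training label. The weights are deliberately left unnormalized, so a correctly classified observation keeps exactly the same weight from one round to the next.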

Boosting

10 predictors.

The weak classifier is a stump: a two-level tree with a single split.

Boosting

Boosting can be seen as fitting an additive model, with the general form:

Expansion coefficients: the multipliers of the basis functions.

Basis functions: simple functions of the feature x, with parameters γ.

Examples of γ: the weights inside a sigmoidal function in neural networks; a split in a tree model.
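The general form itself did not survive in this text. A standard way to write an additive expansion that is consistent with the labels above is sketched here; the symbols M (number of terms), β_m (expansion coefficients) and b (basis function) are assumed notation.

```latex
f(x) = \sum_{m=1}^{M} \beta_m \, b(x; \gamma_m)
```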

Boosting

In general, such functions are fit by minimizing a loss function.

This could be computationally intensive.

An alternative is to go stepwise, fitting a sub-problem of a single basis function.
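The two optimization problems on this slide are not preserved. Under the assumed notation of an additive expansion with coefficients β_m, basis functions b(x; γ_m), a loss L and N training pairs (x_i, y_i), they would typically be written as the joint problem followed by the single-basis-function sub-problem:

```latex
\min_{\{\beta_m, \gamma_m\}_{1}^{M}} \;
  \sum_{i=1}^{N} L\!\left( y_i, \; \sum_{m=1}^{M} \beta_m \, b(x_i; \gamma_m) \right)

\min_{\beta, \gamma} \;
  \sum_{i=1}^{N} L\bigl( y_i, \; \beta \, b(x_i; \gamma) \bigr)
```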

Boosting

Forward stagewise additive modeling: add new basis functions without adjusting previously added ones.

Example:

* The squared loss function is not a good choice for classification.
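The example formula is missing from this text. A sketch of the usual stagewise step and of what it reduces to under squared loss is given below; f_{m-1} (the current fit) and r_{im} (the current residual) are assumed notation.

```latex
(\beta_m, \gamma_m) = \arg\min_{\beta, \gamma}
  \sum_{i=1}^{N} L\bigl( y_i, \; f_{m-1}(x_i) + \beta \, b(x_i; \gamma) \bigr),
\qquad
f_m(x) = f_{m-1}(x) + \beta_m \, b(x; \gamma_m)

L\bigl( y_i, \; f_{m-1}(x_i) + \beta \, b(x_i; \gamma) \bigr)
  = \bigl( y_i - f_{m-1}(x_i) - \beta \, b(x_i; \gamma) \bigr)^2
  = \bigl( r_{im} - \beta \, b(x_i; \gamma) \bigr)^2,
\qquad
r_{im} = y_i - f_{m-1}(x_i)
```

Under squared loss, each new basis function is therefore fit to the residuals of the current model.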

Boosting

The version of AdaBoost we discussed uses this loss function:

The basis functions are individual weak classifiers.
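The loss function referred to above is not preserved. Given the later discussion of the exponential penalty on the margin, it is presumably the exponential loss, with labels assumed to be coded y ∈ {-1, +1}:

```latex
L\bigl( y, f(x) \bigr) = \exp\bigl( -y \, f(x) \bigr)
```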

Boosting

Margin: y*f(x)

> 0: correct

< 0: incorrect

The goal of classification is to produce positive margins as often as possible.

Negative margins should be penalized more.

The exponential loss penalizes negative margins more heavily.
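A small numerical sketch of the margin argument above. The margin values are arbitrary, and the squared loss is written as a function of the margin using (y - f)^2 = (1 - y f)^2, which holds when y is -1 or +1.

```python
import numpy as np

margins = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])    # values of y * f(x)

misclassification = (margins < 0).astype(float)    # 1 if wrong, 0 if right
exponential = np.exp(-margins)                     # exp(-y f(x))
squared = (1.0 - margins) ** 2                     # (y - f(x))^2 for y in {-1, +1}

print("margin  0/1   exp     squared")
for m, mis, ex, sq in zip(margins, misclassification, exponential, squared):
    print(f"{m:6.1f}  {mis:3.0f}  {ex:6.3f}  {sq:6.3f}")
```

The exponential loss decreases steadily as the margin grows, whereas the squared loss starts increasing again once the margin exceeds 1. That penalty on confidently correct predictions is one reason the squared loss is a poor fit for classification.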

Boosting

To be solved:

The weight term does not depend on β or G.
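The minimization problem on this slide is not preserved. Assuming the exponential loss and a current fit f_{m-1}, the stagewise step would read as follows, where w_i^{(m)} is assumed notation for the term that is independent of β and G:

```latex
(\beta_m, G_m)
  = \arg\min_{\beta, G} \sum_{i=1}^{N}
      \exp\bigl[ -y_i \bigl( f_{m-1}(x_i) + \beta \, G(x_i) \bigr) \bigr]
  = \arg\min_{\beta, G} \sum_{i=1}^{N}
      w_i^{(m)} \exp\bigl( -\beta \, y_i \, G(x_i) \bigr),
\qquad
w_i^{(m)} = \exp\bigl( -y_i \, f_{m-1}(x_i) \bigr)
```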

Boosting

Observations are either correctly or incorrectly classified. Then the target function to be minimized is:

For any β > 0, G_m has to satisfy:

G_m is the classifier that minimizes the weighted error rate.
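The two formulas on this slide are missing. Splitting the weighted exponential criterion into correctly and incorrectly classified observations gives, as a sketch under the assumed notation w_i^{(m)} for the observation weights and I(·) for the indicator function:

```latex
e^{-\beta} \sum_{y_i = G(x_i)} w_i^{(m)} + e^{\beta} \sum_{y_i \neq G(x_i)} w_i^{(m)}
  = \bigl( e^{\beta} - e^{-\beta} \bigr)
      \sum_{i=1}^{N} w_i^{(m)} \, I\bigl( y_i \neq G(x_i) \bigr)
    + e^{-\beta} \sum_{i=1}^{N} w_i^{(m)}

G_m = \arg\min_{G} \sum_{i=1}^{N} w_i^{(m)} \, I\bigl( y_i \neq G(x_i) \bigr)
```

Because e^{β} - e^{-β} is positive for any β > 0, the minimizing G does not depend on the value of β.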

Boosting

Solving for G_m gives us a weighted error rate.

Plug it back in to get β:

Update the overall classifier by plugging these in:
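The formulas for these three steps are not preserved. Under the exponential-loss setup, with err_m as assumed notation for the weighted error rate, they would typically be:

```latex
\mathrm{err}_m
  = \frac{ \sum_{i=1}^{N} w_i^{(m)} \, I\bigl( y_i \neq G_m(x_i) \bigr) }
         { \sum_{i=1}^{N} w_i^{(m)} },
\qquad
\beta_m = \frac{1}{2} \log \frac{ 1 - \mathrm{err}_m }{ \mathrm{err}_m },
\qquad
f_m(x) = f_{m-1}(x) + \beta_m \, G_m(x)
```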

Boosting

The weight for the next iteration becomes:

Using

Independent of i, so it can be ignored.
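The weight update and the identity it relies on are missing from this text. A sketch of the usual argument, with α_m = 2β_m as assumed notation for the classifier weight:

```latex
w_i^{(m+1)} = w_i^{(m)} \, e^{ -\beta_m \, y_i \, G_m(x_i) }

-y_i \, G_m(x_i) = 2 \, I\bigl( y_i \neq G_m(x_i) \bigr) - 1

w_i^{(m+1)} = w_i^{(m)} \, e^{ \alpha_m \, I( y_i \neq G_m(x_i) ) } \, e^{ -\beta_m },
\qquad
\alpha_m = 2 \beta_m
```

The factor e^{-β_m} multiplies every weight equally, so dropping it leaves the weighted error rate unchanged. What remains leaves the weight of a correctly classified observation untouched and multiplies the weight of a misclassified one by e^{α_m}.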

Lasso

The equivalent Lagrangian form:

Ridge regression: the penalty is $\sum_j \beta_j^2$.

Elastic Net: the penalty is $\sum_j \left[ \alpha |\beta_j| + (1 - \alpha) \beta_j^2 \right]$.
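The lasso formulas themselves are not preserved here. In one common parameterization they are written as below; the intercept β_0, the bound t, the multiplier λ and the factor 1/2 are all assumptions, and other scalings are in use.

```latex
\hat{\beta}^{\mathrm{lasso}} = \arg\min_{\beta}
  \sum_{i=1}^{N} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij} \beta_j \Bigr)^2
  \quad \text{subject to} \quad \sum_{j=1}^{p} |\beta_j| \le t

\hat{\beta}^{\mathrm{lasso}} = \arg\min_{\beta}
  \Bigl\{ \frac{1}{2} \sum_{i=1}^{N} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij} \beta_j \Bigr)^2
          + \lambda \sum_{j=1}^{p} |\beta_j| \Bigr\}
```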

Lasso

[Figure not preserved; its surviving labels are s, t and $\hat{\beta}_j$.]

Lasso

Orthogonal x: $\hat{\beta}_j$ are the least squares estimates.
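The formulas on this slide are lost. For orthonormal predictors, the lasso and ridge estimates have well-known closed forms in terms of the least squares estimates, sketched below. The function names and the example numbers are hypothetical, and the exact role of `lam` depends on how the penalized criterion is scaled (here: half the residual sum of squares plus `lam` times the L1 penalty for the lasso, and the residual sum of squares plus `lam` times the squared L2 penalty for ridge).

```python
import numpy as np

def lasso_orthonormal(beta_ls, lam):
    """Soft-thresholding: shrink toward zero by lam, and set small values to zero."""
    return np.sign(beta_ls) * np.maximum(np.abs(beta_ls) - lam, 0.0)

def ridge_orthonormal(beta_ls, lam):
    """Proportional shrinkage: every coefficient is scaled by 1 / (1 + lam)."""
    return beta_ls / (1.0 + lam)

beta_ls = np.array([3.0, -1.5, 0.4, -0.2])   # hypothetical least squares estimates
lam = 0.5

lasso_est = lasso_orthonormal(beta_ls, lam)
ridge_est = ridge_orthonormal(beta_ls, lam)
print("lasso:", lasso_est)
print("ridge:", ridge_est)
```

The lasso sets the two small coefficients exactly to zero, while ridge shrinks all four but keeps them non-zero. This is the sense in which the lasso selects features.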

Lasso

[Figure: error contours in parameter space, with one panel for the lasso and one for ridge.]

Boosted linear regression

{Tk} : a collection of basis functions

Here the T’s are the X’s themselves in a linear regression setting.
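The algorithm for this slide is not preserved. One way to boost a linear regression, with the predictors themselves as basis functions, is incremental forward stagewise regression: repeatedly find the predictor most correlated with the current residual and move its coefficient by a small step. The sketch below assumes that variant; the function name, step size, number of steps and simulated data are all hypothetical.

```python
import numpy as np

def forward_stagewise(X, y, step=0.01, n_steps=2000):
    """Incremental forward stagewise linear regression on standardized predictors."""
    X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize each predictor
    r = y - y.mean()                           # residual of the current fit
    beta = np.zeros(X.shape[1])
    path = [beta.copy()]
    for _ in range(n_steps):
        corr = X.T @ r                         # inner product with the residual
        j = np.argmax(np.abs(corr))            # basis function that fits it best
        delta = step * np.sign(corr[j])
        beta[j] += delta                       # only coefficient j is changed
        r = r - delta * X[:, j]
        path.append(beta.copy())
    return beta, np.array(path)

# Simulated data: only the first two of five predictors matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=100)

beta, path = forward_stagewise(X, y)
print("final coefficients:", np.round(beta, 2))
```

Each row of `path` is the coefficient vector after one more step, so plotting its columns gives coefficient profiles. With a small step size these profiles tend to resemble a lasso path, which is the relationship the lecture outline points to.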