Clinical Trial Design

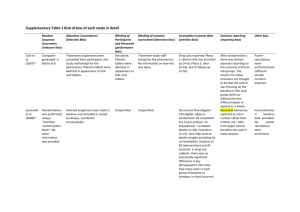

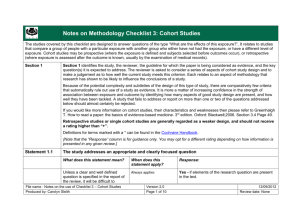

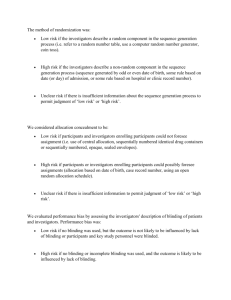

Department of OUTCOMES RESEARCH

Clinical Research Design
Sources of Error
Types of Clinical Research
Randomized Trials

Daniel I. Sessler, M.D.
Professor and Chair
Department of OUTCOMES RESEARCH
The Cleveland Clinic

Sources of Error

There is no perfect study
• All are limited by practical and ethical considerations
• It is impossible to control all potential confounders
• Multiple studies required to prove a hypothesis

Good design limits risk of false results
• Statistics at best partially compensate for systematic error

Major types of error
• Selection bias
• Measurement bias
• Confounding
• Reverse causation
• Chance

Statistical Association

[Diagram: a statistical association may reflect chance, causation (A causes B, or B causes A), or bias (measurement bias, selection bias, confounding)]

Selection Bias

Non-random selection for inclusion / treatment
• Or selective loss

Subtle forms of disease may be missed

When treatment is non-random:
• Newer treatments assigned to patients most likely to benefit
• “Better” patients seek out latest treatments
• “Nice” patients may be given the preferred treatment

Compliance may vary as a function of treatment
• Patients drop out for lack of efficacy or because of side effects

Largely prevented by randomization

Confounding

Association between two factors caused by third factor

For example:
• Transfusions are associated with high mortality
• But larger, longer operations require more blood
• Increased mortality consequent to larger operations

Another example:
• Mortality greater in Florida than Alaska
• But average age is much higher in Florida
• Increased mortality from age, rather than geography of FL

Largely prevented by randomization

Measurement Bias

Quality of measurement varies non-randomly

Quality of records generally poor
• Not necessarily randomly so

Patients given new treatments watched more closely

Subjects with disease may better remember exposures

When treatment is unblinded
• Benefit may be over-estimated
• Complications may be under-estimated

Largely prevented by blinding

Example of Measurement Bias

| Reported parental history | Arthritis (%) | No arthritis (%) |
|---|---|---|
| Neither parent | 27 | 50 |
| One parent | 58 | 42 |
| Both parents | 15 | 8 |

P = 0.003
From Schull & Cobb, J Chronic Dis, 1969

Reverse Causation

Factor of interest causes or unmasks disease

For example:
• Morphine use is common in patients with gall bladder disease
• But morphine worsens symptoms, which promotes diagnosis
• Conclusion that morphine causes gall bladder disease is incorrect

Another example:
• Patients with cancer have frequent bacterial infections
• However, cancer is immunosuppressive
• Conclusion that bacteria cause cancer is incorrect

Largely prevented by randomization

External Threats to Validity

[Diagram labels: population of interest, eligible subjects, subjects enrolled, conclusion; internal validity (selection bias, measurement bias, confounding, chance); external validity]

Types of Clinical Research

Observational
• Case series
  – Implicit historical control
  – “The plural of anecdote is not data”
• Single cohort (natural history)
• Retrospective cohort
• Case-control

Retrospective versus prospective
• Prospective data usually of higher quality

Randomized clinical trial
• Strongest design; gold standard
• First major example: use of streptomycin for TB in 1948

Case-Control Studies

Identify cases & matched controls
Look back in time and compare on exposure

[Diagram: case group and control group traced back in time to exposure]

Cohort Studies

Identify exposed & matched unexposed patients
Look forward in time and compare on disease

[Diagram: exposed and unexposed groups followed forward in time to disease]

Timing of Cohort Studies

[Diagram: retrospective, ambidirectional, and prospective cohort studies placed on a timeline running from initial exposures to disease onset or diagnosis]

Randomized Clinical Trials (RCTs)

A type of prospective cohort study

Best protection against bias and confounding
• Randomization: reduces selection bias & confounding
• Blinding: reduces measurement error
• Not subject to reverse causation

RCTs often “correct” observational results

Types
• Parallel group
• Cross-over
• Factorial
• Cluster

Parallel Group

[Diagram: enrollment criteria; randomize participants to treatment groups; Intervention A leads to Outcome A, Intervention B leads to Outcome B]

Cross-over Diagram

[Diagram: enrollment criteria; randomize individuals to sequential treatment; either Treatment A, ± washout, Treatment B, or Treatment B, ± washout, Treatment A]

Pros & Cons of Cross-over Design

Strategy
• Sequential treatments in each participant
• Patients act as their own controls

Advantages
• Paired statistical analysis markedly increases power
• Good when treatment effect small versus population variability

Disadvantages
• Assumes underlying disease state is static
• Assumes lack of carry-over effect
• May require a treatment-free washout period
• Evaluate markers rather than “hard” outcomes
• Can’t be used for one-time treatments such as surgery

Factorial

Simultaneously test 2 or more interventions
• Balanced treatment allocation

Treatment groups (2 × 2 example)
• Clonidine + ASA
• Placebo + ASA
• Clonidine + Placebo
• Placebo + Placebo

Advantages
• More efficient than separate trials
• Can test for interactions

Disadvantages
• Complexity, potential for reduced compliance
• Reduces fraction of eligible subjects and enrollment
• Rarely powered for interactions
  – But interactions influence sample size requirements

Factorial Outcome Example

[Figure: incidence of PONV (%) with no antiemetics, one antiemetic (Ond, Dex, or Drop), two antiemetics, and three antiemetics. Apfel, et al. NEJM 2004]

Subject Selection

Tight criteria
• Reduces variability and sample size
• Excludes subjects at risk of treatment complications
• Includes subjects most likely to benefit
• May restrict to advanced disease, compliant patients, etc.
• Slows enrollment
• “Best case” results
  – Compliant low-risk patients with ideal disease stage

Loose criteria
• Includes more “real world” participants
• Increases variability and sample size
• Speeds enrollment
• Enhances generalizability

Randomization and Allocation

Only reliable protection against
• Selection bias
• Confounding

Concealed allocation
• Independent of investigators
• Unpredictable

Methods
• Computer-controlled
• Random-block
• Envelopes, web-accessed, telephone

Stratification
• Rarely necessary

Blinding / Masking

Only reliable prevention for measurement bias
• Essential for subjective responses
  – Use for objective responses whenever possible
• Careful design required to maintain blinding

Potential groups to blind
• Patients
• Providers
• Investigators, including data collection & adjudicators

Maintain blinding throughout data analysis
• Even data-entry errors can be non-random
• Statisticians are not immune to bias!

Placebo effect can be enormous

Importance of Blinding

[Figure: chronic pain analgesic. Mackey, Personal communication]

More About Placebo Effect

[Figure: Kaptchuk, PLoS ONE, 2010]

Selection of Outcomes

Surrogate or intermediate
• May not actually relate to outcomes of interest
  – Bone density for fractures
  – Intraoperative hypotension for stroke
• Usually continuous: implies smaller sample size
• Rarely powered for complications

Major outcomes
• Severe events (e.g., myocardial infarction, stroke)
• Usually dichotomous: implies larger sample size
• Mortality

Cost effectiveness / cost utility

Quality-of-life

Composite Outcomes

Any of ≥2 component outcomes, for example:
• Cardiac death, myocardial infarction, or non-fatal arrest
• Wound infection, anastomotic leak, abscess, or sepsis

Usually permits a smaller sample size

Incidence of each should be comparable
• Otherwise common outcome(s) dominate composite

Severity of each should be comparable
• Unreasonable to lump minor and major events
• Death often included to prevent survivor bias

Beware of heterogeneous results

Trial Management

Case-report forms
• Require careful design and specific field definitions
• Every field should be completed
  – Missing data can’t be assumed to be zero or no event

Data-management (custom database best)
• Evaluate quality and completeness in real time
• Range and statistical checks
• Trace to source documents

Independent monitoring team

Interim Analyses & Stopping Rules

Reasons trials are stopped early
• Ethics
• Money
• Regulatory issues
• Drug expiration
• Personnel
• Other opportunities

Pre-defined interim analyses
• Spend alpha and beta power
• Avoid “convenience sample”
• Avoid “looking” between scheduled analyses

Pre-defined stopping rules
• Efficacy versus futility

Potential Problems

Poor compliance
• Patients
• Clinicians

Drop-outs

Crossovers

Insufficient power
• Greater-than-expected variability
• Treatment effect smaller than anticipated

Fragile Results

Consider two identical trials of treatment for infarction
• N=200 versus n=8,000

| Trial | N | Treatment events | Placebo events | RR | P |
|---|---|---|---|---|---|
| A | 200 | 1 | 9 | 0.11 | 0.02 |
| B | 4,000 | 200 | 250 | 0.80 | 0.02 |

Which result do you believe? Which is biologically plausible?

What happens if you add two events to each Rx group?
• Study A p=0.13
• Study B p=0.02

Four versus Five Rx for CML

Problem Solved?

How About Now?

Small Studies Often Wrong!

Multi-center Trials

Advantages
• Necessary when large enrollment required
• Diverse populations increase generalizability of results
• Problems in individual center(s) balanced by other centers
  – Often required by Food and Drug Administration

Disadvantages
• Difficult to enforce protocol
  – Inevitable subtle protocol differences among centers
• Expensive!

“Multi-center” does not necessarily mean “better”

Unsupported Conclusions

Beta error
• Insufficient detection power confused with negative result

Conclusions that flat-out contradict presented results
• “Wishful thinking”: evidence of bias

Inappropriate generalization: internal vs. external validity
• To healthier or sicker patients than studied
• To alternative care environments
• Efficacy versus effectiveness

Failure to acknowledge substantial limitations

Statistical significance ≠ clinical importance
• And the reverse!

Conclusion: Good Clinical Trials…

Test a specific a priori hypothesis
• Evaluate clinically important outcomes

Are well designed, with
• A priori and adequate sample size
• Defined stopping rules

Are randomized and blinded when possible

Use appropriate statistical analysis

Make conclusions that follow from the data
• And acknowledge substantive limitations

Meta-analysis

“Super analysis” of multiple similar studies
• Often helpful when there are many marginally powered studies

Many serious limitations
• Search and selection bias
• Publication bias
  – Authors
  – Editors
  – Corporate sponsors
• Heterogeneity of results

Good generalizability

Rajagopalan, Anesthesiology 2008

Design Strategies

Life is short; do things that matter!
• Is the question important? Is it worth years of your life?

Concise hypothesis testing of important outcomes
• Usually only one or two hypotheses per study
• Beware of studies without a specified hypothesis

A priori design
• Planned comparisons with identified primary outcome
• Intention-to-treat design

General statistical approach
• Superiority, equivalence, non-inferiority
• Two-tailed versus one-tailed

It’s not brain surgery, but…
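
Supplementary sketch: random-block allocation

The Randomization and Allocation slide lists "Random-block" among the methods without giving details. The following is a minimal sketch of one common reading, permuted-block randomization, in which each block holds every arm equally often in a shuffled order. The function name, the block size of 4, and the seed are illustrative assumptions and are not taken from the slides.

```python
import random


def permuted_block_schedule(n_participants, arms=("A", "B"), block_size=4, seed=None):
    """Build an allocation list from randomly permuted, balanced blocks.

    Hypothetical helper for illustration; not the presenter's procedure.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)          # dedicated generator so the schedule is reproducible
    per_arm = block_size // len(arms)  # how often each arm appears in one block
    schedule = []
    while len(schedule) < n_participants:
        block = list(arms) * per_arm   # balanced block, e.g. A, B, A, B
        rng.shuffle(block)             # random order within the block
        schedule.extend(block)
    return schedule[:n_participants]   # truncate the final block if needed


# Example: 12 participants, two arms, blocks of four (seed value is arbitrary).
schedule = permuted_block_schedule(12, seed=2024)
print(schedule)
```

In practice such a list would be generated and held independently of the investigators, in line with the slide's point about concealed allocation; a fixed small block size makes the end of each block predictable, which is one reason block sizes are sometimes varied.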
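
Supplementary sketch: why dichotomous outcomes imply larger trials

The Selection of Outcomes slide notes that dichotomous major outcomes imply a larger sample size. As a rough illustration, the sketch below applies the standard normal-approximation formula for comparing two proportions. The event rates, the two-sided alpha of 0.05, and the 80% power are assumptions chosen for the example, not values from the slides.

```python
from math import ceil
from statistics import NormalDist


def n_per_group_two_proportions(p1, p2, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided test of two proportions.

    Uses n = (z_{1-alpha/2} + z_{power})^2 * [p1(1-p1) + p2(1-p2)] / (p1 - p2)^2.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance_sum / (p1 - p2) ** 2)


# Assumed event rates: 6.25% with placebo versus 5% with treatment.
n_small_effect = n_per_group_two_proportions(0.0625, 0.05)
# A larger assumed effect (6.25% versus 3%) needs far fewer participants.
n_large_effect = n_per_group_two_proportions(0.0625, 0.03)
print(n_small_effect, n_large_effect)
```

The required number rises steeply as the event rate or the treatment effect shrinks, which is consistent with the slides' remark that trials are rarely powered for complications.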
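
Supplementary sketch: spending alpha across interim analyses

The Interim Analyses & Stopping Rules slide says that pre-defined interim analyses "spend" alpha but does not name a spending function. The sketch below uses the O'Brien-Fleming-type spending function of Lan and DeMets as one assumed choice; the information fractions are hypothetical. It returns the cumulative type I error allowed to be spent by each look, not the stopping boundaries themselves.

```python
from math import sqrt
from statistics import NormalDist


def obf_alpha_spent(information_fraction, alpha=0.05):
    """Cumulative two-sided alpha spent at a given information fraction (0 < t <= 1).

    O'Brien-Fleming-type spending: alpha(t) = 2 * (1 - Phi(z_{1-alpha/2} / sqrt(t))).
    """
    if not 0 < information_fraction <= 1:
        raise ValueError("information_fraction must be in (0, 1]")
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return 2 * (1 - NormalDist().cdf(z / sqrt(information_fraction)))


# Hypothetical schedule: looks at 25%, 50%, 75%, and 100% of planned enrollment.
looks = [0.25, 0.50, 0.75, 1.00]
spent = [obf_alpha_spent(t) for t in looks]
for t, a in zip(looks, spent):
    print(f"information fraction {t:.2f}: cumulative alpha spent {a:.5f}")
```

With this function very little alpha is available at early looks, so a trial stops early for efficacy only when the interim effect is extreme, and nearly the full alpha remains for the final analysis.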
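
Supplementary sketch: checking the Fragile Results example

The Fragile Results slide asks what happens when two events are added. The sketch below recomputes the comparison with a two-sided Fisher exact test. The slides do not state which test was used or how N splits between arms, so the test choice and the arm sizes (100 per arm in Trial A, 4,000 per arm in Trial B) are assumptions, as is the reading that two events are added to the treatment arm of each trial.

```python
from math import comb


def fisher_two_sided(events_a, n_a, events_b, n_b):
    """Two-sided Fisher exact p-value for a 2x2 table of events by arm."""
    total_events = events_a + events_b
    denominator = comb(n_a + n_b, total_events)

    def prob(k):
        # Hypergeometric probability that k of the total events fall in arm A.
        return comb(n_a, k) * comb(n_b, total_events - k) / denominator

    lowest = max(0, total_events - n_b)
    highest = min(total_events, n_a)
    p_observed = prob(events_a)
    # Sum the probabilities of all tables no more likely than the observed one.
    p_value = sum(
        p for p in (prob(k) for k in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )
    return min(1.0, p_value)


# Assumed arm sizes: Trial A 100 per arm, Trial B 4,000 per arm.
p_a_before = fisher_two_sided(1, 100, 9, 100)
p_a_after = fisher_two_sided(3, 100, 9, 100)        # two extra treatment events
p_b_before = fisher_two_sided(200, 4000, 250, 4000)
p_b_after = fisher_two_sided(202, 4000, 250, 4000)  # two extra treatment events
print(f"Trial A: p = {p_a_before:.3f} before, {p_a_after:.3f} after")
print(f"Trial B: p = {p_b_before:.3f} before, {p_b_after:.3f} after")
```

Under these assumptions Trial A moves from about 0.02 to about 0.13, matching the slide, while Trial B barely changes: a result that rests on a handful of events can be overturned by a handful more.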