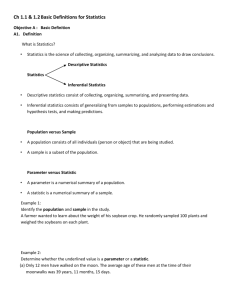

Qualitative research - Primer on Population Health
