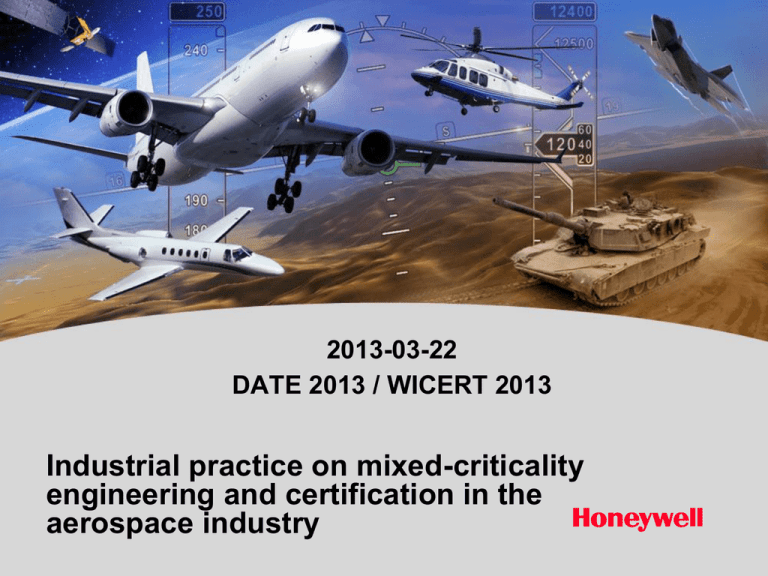



Industrial practice on mixed-criticality engineering and certification in the aerospace industry
DATE 2013 / WICERT 2013, 2013-03-22

Development process in Aerospace

Development process - Definition

Development process – Integration and V&V

Verification vs. Validation

Who defines the requirements
• Customer
  - Typically the OEM: formal and … "less formal" definition
• Civil Aviation Authority
  - FAA, EASA, etc., through Technical Standard Orders (TSO, ETSO)
• Internal standards
  - Body performing the development
→ Functional, non-functional, and process requirements

Who checks whether requirements are met
• Customer
  - Typically the OEM: reviews, validation, integration testing
• Civil Aviation Authority
  - FAA, EASA, etc., through certification
• Internal standards
  - Body performing the development, as defined by its operating procedures (typically aligned with aerospace development processes)

How is certification done
• The Civil Aviation Authority (CAA) does not merely check the requirements-testing checklist.
• The CAA primarily controls the development process and checks the evidence that it was followed.
  - An agreement must typically be reached with the CAA on acceptable means of compliance/certification. This usually includes:
    - Meeting DO-254 / ED-80 for electronics
    - Meeting DO-178B / ED-12B for software (or, newly, DO-178C)
    - Meeting the agreed CRIs (Certification Review Items)
  - The following items are agreed:
    - The means of compliance
    - The certification team's involvement in the compliance determination process
    - The need for test witnessing by the CAA
    - Significant decisions affecting the result of the certification process

Why not have one process?
• CS-22 (Sailplanes and Powered Sailplanes)
• CS-23 (Normal, Utility, Aerobatic and Commuter Aeroplanes): <5.6 t and <9 pax, or twin-prop <9 t and <19 pax
• CS-25 (Large Aeroplanes)
• CS-27 (Small Rotorcraft)
• CS-29 (Large Rotorcraft)
• CS-31GB (Gas Balloons)
• CS-31HB (Hot Air Balloons)
• CS-34 (Aircraft Engine Emissions and Fuel Venting)
• CS-36 (Aircraft Noise)
• CS-APU (Auxiliary Power Units)
• CS-AWO (All Weather Operations)
• CS-E (Engines)
• CS-FSTD(A) (Aeroplane Flight Simulation Training Devices)
• CS-FSTD(H) (Helicopter Flight Simulation Training Devices)
• CS-LSA (Light Sport Aeroplanes)
• CS-P (Propellers)
• CS-VLA (Very Light Aeroplanes)
• CS-VLR (Very Light Rotorcraft)
• AMC-20 (General Acceptable Means of Compliance for Airworthiness of Products, Parts and Appliances)

Small vs. large aircraft (CS-23 vs. CS-25)
Table modified from FAA AC 23.1309-1D:

| Failure examples | Probability, airliner (per flight hour) | DAL, airliner | Probability, 2-seater (per flight hour) | DAL, 2-seater (primary, secondary) |
|---|---|---|---|---|
| Reduced functions | <10⁻⁵ | D | <10⁻³ | D, D |
| Injuries | <10⁻⁸ | A | <10⁻⁴ | C, D |
| Damage to aircraft | <10⁻⁶ | B | <10⁻⁵ | C, D |
| Crash | <10⁻⁹ | A | <10⁻⁶ | C, C |

[Figure from FAA AC 25.1309-1A, probability bands: >10⁻⁵ can happen to any aircraft; 10⁻⁵ to 10⁻⁹ happens to some aircraft in the fleet; <10⁻⁹ never happens.]

Software-related standards – DO-178B(C)
• Development Assurance Level for software (A-E)
  - A similar definition also exists for Item, Function …
• Specifies
  - Minimum set of design assurance steps
  - Requirements for the development process
  - Documents required for certification
  - Specific objectives to be proven

| Level | Approx. failure category | Objectives (DO-178B) | Objectives (DO-178C) | Objectives met with independent review (DO-178C) |
|---|---|---|---|---|
| A | Catastrophic | 66 | 71 | 33 |
| B | Hazardous | 65 | 69 | 21 |
| C | Major | 57 | 62 | 8 |
| D | Minor | 28 | 26 | 5 |
| E | No Safety Effect | 0 | 0 | 0 |

More than one function
• Two situations:
  - Single-purpose device (e.g. NAV system, FADEC – Full Authority Digital Engine Controller, etc.)
    - Typically developed and certified to a single assurance level; exceptions exist
  - Multi-purpose device (e.g. IMA – Integrated Modular Avionics)

Mixed-criticality
• The same HW runs SW of mixed criticality
  - For SW, this roughly equals mixed DAL
• Implies additional requirements
  - Specific to the aircraft class
  - CS-25: very strict
    - Time and space partitioning (e.g. ARINC 653)
    - Hard-real-time execution determinism (a problem with multicores)
    - Standards for inter-partition communication
  - CS-23: less strict
    - Means to achieve safety are negotiable with the certification body

Design assurance, verification, certification
• Design assurance + qualification: does it work?
  - Functional verification
  - Task schedulability
  - Worst-case execution time analysis
  - … and includes any of the verification below
• Verification: does it work as specified?
  - Verifies requirements
    - Depending on the aircraft class and the agreed certification baseline, requirements might include response time or another definition of meeting the temporal requirements
    - On single-core platforms, this is composable: isolation is guaranteed
    - On multicore platforms: currently under negotiation (FAA, EASA, industry, academia, …)
  - Requirements are typically verified by testing
• Certification: is it approved as safe?
  - Showing the evidence of all the above

Software verification means (1)
• Dynamic verification
  - Testing: based on test cases prepared during the development cycle; an integral part of the development phase
    - Unit testing: is the implementation correct?
    - Integration testing: do the units work together as anticipated?
    - System testing: does the system perform according to its (functional and non-functional) requirements?
    - Acceptance testing: treated as a black box, does the system meet user requirements?
  - Runtime analysis: memory usage, race detection, profiling, assertions, contract checking … and sometimes (pseudo-)WCET
• Domain verification: not with respect to requirements, but e.g. checking for contradictions in requirements, syntax consistency, memory leaks

Software verification means (2)
• Static analysis: type checking, code coverage, range checking, etc.
• Symbolic execution
• Formal verification: formal definition of correct behavior, formal proof, computer-assisted theorem proving, model checking, equivalence checking
  - Not much used today … just yet

Verification for certification
• The typical approach relies heavily on testing, supported by static analysis and informal verification
• DO-178C (the recent update of DO-178B) defines requirements for using
  - Qualified tools for verification and development – DO-330
  - Model-based development and verification – DO-331
  - Object-oriented technologies – DO-332 (that's right, in 2012)
  - Formal methods – DO-333
  - To complement (not replace) testing
• Tool qualification
  - Very expensive
  - Tools that can insert errors: basically follow the same process as SW development at the same DAL; usable only for small tools with specific purposes
  - Tools that replace testing: for A and B, developed and tested using a similar development process; for C and D, a subset is needed; COTS tools can be used

Near future
• Replacement of (some) testing by qualified verification tools
  - Towards formal verification
• Adoption of DO-178C instead of DO-178B
• Definition of technical and process requirements for multicore platforms
• Growth of model-based development and verification
• Enablers for incremental certification (which assumes composability of safety)

... and that's it!
Ondřej Kotaba
ondrej.kotaba@honeywell.com
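The "Software-related standards – DO-178B(C)" slide pairs each software level with an approximate failure category and an objective count. Below is a minimal Python sketch of that lookup. The numbers are copied from the slide's table. `LevelInfo`, `LEVELS`, `level_for` and `objectives` are hypothetical names chosen for illustration and are not part of any standard or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelInfo:
    failure_category: str   # approximate failure condition category
    objectives_178b: int    # number of objectives in DO-178B
    objectives_178c: int    # number of objectives in DO-178C
    independent_178c: int   # DO-178C objectives met with independent review


# Values copied from the DO-178B(C) table on the slide.
LEVELS = {
    "A": LevelInfo("Catastrophic", 66, 71, 33),
    "B": LevelInfo("Hazardous", 65, 69, 21),
    "C": LevelInfo("Major", 57, 62, 8),
    "D": LevelInfo("Minor", 28, 26, 5),
    "E": LevelInfo("No Safety Effect", 0, 0, 0),
}


def level_for(failure_category: str) -> str:
    """Return the software level whose approximate failure category matches."""
    for level, info in LEVELS.items():
        if info.failure_category.lower() == failure_category.lower():
            return level
    raise ValueError(f"unknown failure category: {failure_category!r}")


def objectives(level: str, standard: str = "DO-178C") -> int:
    """Number of objectives for a level under the given standard."""
    info = LEVELS[level]
    if standard == "DO-178C":
        return info.objectives_178c
    if standard == "DO-178B":
        return info.objectives_178b
    raise ValueError(f"unsupported standard: {standard!r}")


if __name__ == "__main__":
    lvl = level_for("Hazardous")
    print(lvl, objectives(lvl), objectives(lvl, "DO-178B"))  # B 69 65
```

A table-driven lookup keeps the numbers in one place. It makes the jump in effort between levels explicit, for example 26 versus 71 DO-178C objectives for levels D and A.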
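The "Mixed-criticality" slide names time and space partitioning (e.g. ARINC 653) as a CS-25 requirement. Time partitioning is commonly realized as a fixed, repeating major frame in which each partition owns dedicated windows, so one partition cannot consume another's CPU time. The Python sketch below checks such a schedule for consistency. It is a hypothetical illustration only. `Window`, `check_time_partitioning`, the partition names and the millisecond values are invented. The sketch does not model the ARINC 653 configuration format or the space-partitioning half of the requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    partition: str
    offset_ms: int     # start of the window, relative to the start of the major frame
    duration_ms: int   # length of the window


def check_time_partitioning(major_frame_ms, windows, budgets_ms):
    """Return a list of problems found; an empty list means no violation was found.

    budgets_ms maps each partition name to the CPU time it needs per major frame.
    """
    problems = []
    ordered = sorted(windows, key=lambda w: w.offset_ms)
    for w in ordered:
        if w.duration_ms <= 0:
            problems.append(f"{w.partition}: non-positive window duration")
        if w.offset_ms < 0 or w.offset_ms + w.duration_ms > major_frame_ms:
            problems.append(f"{w.partition}: window exceeds major frame")
    # A time slot must belong to exactly one partition. Checking adjacent windows
    # after sorting detects that some overlap exists, though not every overlapping pair.
    for prev, nxt in zip(ordered, ordered[1:]):
        if prev.offset_ms + prev.duration_ms > nxt.offset_ms:
            problems.append(f"{prev.partition} overlaps {nxt.partition}")
    # Each partition must be granted at least its budget in every major frame.
    for name, need in budgets_ms.items():
        granted = sum(w.duration_ms for w in windows if w.partition == name)
        if granted < need:
            problems.append(f"{name}: granted {granted} ms < budget {need} ms")
    return problems


if __name__ == "__main__":
    frame = [
        Window("flight_ctrl", 0, 10),
        Window("display", 10, 5),
        Window("flight_ctrl", 15, 10),
        Window("maintenance", 25, 5),
    ]
    budgets = {"flight_ctrl": 20, "display": 5, "maintenance": 5}
    print(check_time_partitioning(50, frame, budgets))  # []
```

Because the schedule is static, a check like this can run once, offline, on the configuration. It does not need to observe the running system, which is part of what makes time partitioning attractive as certification evidence.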
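The "Design assurance, verification, certification" slide lists task schedulability and worst-case execution time analysis. It also notes that on single-core platforms the timing argument is composable. One textbook single-core schedulability test is response-time analysis for fixed-priority preemptive scheduling. The worst-case response time of task i is the smallest fixed point of R_i = C_i + Σ_{j ∈ hp(i)} ⌈R_i / T_j⌉ · C_j. Here C is the WCET, T the period, and hp(i) the set of higher-priority tasks. The sketch below assumes:

- independent periodic tasks,
- deadlines equal to periods,
- no blocking or release jitter,
- WCETs already bounded by WCET analysis.

The task set is made up for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    wcet: int     # worst-case execution time C
    period: int   # period T; the deadline is assumed equal to the period


def response_times(tasks):
    """Response-time analysis for fixed-priority preemptive scheduling on one core.

    tasks must be ordered from highest to lowest priority. Returns a dict mapping
    each task name to its worst-case response time, or None if it can miss its deadline.
    """
    result = {}
    for i, task in enumerate(tasks):
        higher = tasks[:i]
        r = task.wcet
        while True:
            # Preemption by every higher-priority release within the window r.
            interference = sum(math.ceil(r / h.period) * h.wcet for h in higher)
            r_next = task.wcet + interference
            if r_next > task.period:
                result[task.name] = None   # deadline can be missed
                break
            if r_next == r:
                result[task.name] = r      # fixed point reached
                break
            r = r_next
    return result


if __name__ == "__main__":
    tasks = [Task("T1", 1, 4), Task("T2", 2, 6), Task("T3", 3, 12)]
    print(response_times(tasks))  # {'T1': 1, 'T2': 3, 'T3': 10}
```

The iteration terminates because r never decreases and is capped by the period. The interference term only counts preemption by higher-priority tasks on the same core. It says nothing about contention on shared caches, memory or interconnect, which is one reason the slide marks multicore timing as still under negotiation.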
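The "Software verification means (2)" slide lists model checking among the formal verification techniques. In its simplest, explicit-state form, a model checker enumerates every reachable state of a finite model and checks an invariant in each one. If the invariant fails, it returns a counterexample trace. The Python sketch below is a toy, not an industrial tool. The model is entirely hypothetical: two processes guarding a shared resource with a naive "check the other flag, then set my own" protocol. `successors`, `mutual_exclusion` and `check_invariant` are names invented for illustration.

```python
from collections import deque

# State: (pc0, pc1, flag0, flag1); each pc is "idle", "set" or "crit".
INITIAL = ("idle", "idle", False, False)


def successors(state):
    """Interleaving semantics: exactly one process takes one step at a time."""
    pcs, flags = list(state[:2]), list(state[2:])
    for i in (0, 1):
        other = 1 - i
        new_pcs, new_flags = pcs.copy(), flags.copy()
        if pcs[i] == "idle" and not flags[other]:
            new_pcs[i] = "set"        # saw the other flag clear; own flag not yet set
        elif pcs[i] == "set":
            new_flags[i] = True
            new_pcs[i] = "crit"       # enter the critical section
        elif pcs[i] == "crit":
            new_flags[i] = False
            new_pcs[i] = "idle"       # leave the critical section
        else:
            continue                  # blocked: the other process holds its flag
        yield (new_pcs[0], new_pcs[1], new_flags[0], new_flags[1])


def mutual_exclusion(state):
    return not (state[0] == "crit" and state[1] == "crit")


def check_invariant(initial, successors, invariant):
    """Breadth-first search over all reachable states.

    Returns None if the invariant holds in every reachable state; otherwise
    returns a shortest trace of states from the initial state to a violation.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


if __name__ == "__main__":
    for step in check_invariant(INITIAL, successors, mutual_exclusion) or []:
        print(step)
```

The search finds the race in which both processes pass the check before either sets its flag. A test campaign could easily miss that interleaving. The exhaustive search has the same limitation that keeps such tools "not much used today": the number of states grows quickly with model size.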