Document 10639673

advertisement

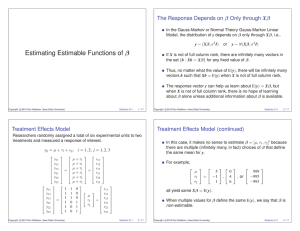

Estimating Estimable Functions of β

Copyright © 2012 Dan Nettleton (Iowa State University), Statistics 511

The Response Depends on β Only through Xβ

In the Gauss-Markov or Normal Theory Gauss-Markov Linear Model, the distribution of y depends on β only through Xβ, i.e.,

$$y \sim (X\beta, \sigma^2 I) \quad \text{or} \quad y \sim N(X\beta, \sigma^2 I).$$

If X is not of full column rank, there are infinitely many vectors in the set {b : Xb = Xβ} for any fixed value of β.

Thus, no matter what the value of E(y), there will be infinitely many vectors b such that Xb = E(y) when X is not of full column rank.

The response vector y can help us learn about E(y) = Xβ, but when X is not of full column rank, there is no hope of learning about β alone unless additional information about β is available.
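One way to see the "infinitely many" claim, sketched here as a supplementary step rather than something stated on the slide: when X lacks full column rank there is a nonzero vector v (a symbol introduced only for this note) with Xv = 0, and every multiple of v can be added to β without changing the mean.

```latex
\[
Xv = 0,\; v \neq 0
\quad\Longrightarrow\quad
X(\beta + c\,v) = X\beta + c\,Xv = X\beta
\quad \text{for every } c \in \mathbb{R}.
\]
```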


Treatment Effects Model

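Researchers randomly assigned a total of six experimental units to two treatments and measured a response of interest.

$$y_{ij} = \mu + \tau_i + \varepsilon_{ij}, \qquad i = 1, 2;\; j = 1, 2, 3$$

$$
\begin{bmatrix} y_{11} \\ y_{12} \\ y_{13} \\ y_{21} \\ y_{22} \\ y_{23} \end{bmatrix}
=
\begin{bmatrix} \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \end{bmatrix}
+
\begin{bmatrix} \varepsilon_{11} \\ \varepsilon_{12} \\ \varepsilon_{13} \\ \varepsilon_{21} \\ \varepsilon_{22} \\ \varepsilon_{23} \end{bmatrix}
$$

$$
\begin{bmatrix} y_{11} \\ y_{12} \\ y_{13} \\ y_{21} \\ y_{22} \\ y_{23} \end{bmatrix}
=
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}
+
\begin{bmatrix} \varepsilon_{11} \\ \varepsilon_{12} \\ \varepsilon_{13} \\ \varepsilon_{21} \\ \varepsilon_{22} \\ \varepsilon_{23} \end{bmatrix}
$$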


Treatment Effects Model (continued)

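In this case, it makes no sense to estimate β = [µ, τ₁, τ₂]′ because there are multiple (infinitely many, in fact) choices of β that define the same mean for y.

For example,

$$
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}
=
\begin{bmatrix} 5 \\ -1 \\ 1 \end{bmatrix},\quad
\begin{bmatrix} 0 \\ 4 \\ 6 \end{bmatrix},\quad \text{or} \quad
\begin{bmatrix} 999 \\ -995 \\ -993 \end{bmatrix}
$$

all yield the same Xβ = E(y).

When multiple values for β define the same E(y), we say that β is non-estimable.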
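The three parameter vectors above can be checked numerically. The following is a minimal NumPy sketch; the variable names (`X`, `betas`, `means`, `rank_X`) are illustrative choices, not notation from the slides.

```python
import numpy as np

# Design matrix of the treatment effects model; columns correspond to mu, tau1, tau2.
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3)

# The three candidate values of beta = [mu, tau1, tau2]' listed on the slide.
betas = [
    np.array([5, -1, 1]),
    np.array([0, 4, 6]),
    np.array([999, -995, -993]),
]

# Each candidate is mapped to the same mean vector X beta.
means = [X @ b for b in betas]
for b, m in zip(betas, means):
    print(b, "->", m)

# The first column of X equals the sum of the other two, so X has rank 2, not 3.
rank_X = np.linalg.matrix_rank(X)
print("rank of X:", rank_X)
```

All three products equal the same vector of treatment means, which is the sense in which y carries no information that separates these values of β.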


Estimable Functions of β

A linear function of β, Cβ, is said to be estimable if there is a linear function of y, Ay, that is an unbiased estimator of Cβ. Otherwise, Cβ is said to be non-estimable.

Note that Ay is an unbiased estimator of Cβ if and only if

$$
E(Ay) = C\beta \;\; \forall\, \beta \in \mathbb{R}^p
\iff
AX\beta = C\beta \;\; \forall\, \beta \in \mathbb{R}^p
\iff
AX = C.
$$

This says that we can estimate Cβ as long as Cβ = AXβ = AE(y) for some A, i.e., as long as Cβ is a linear function of E(y).

The bottom line is that we can always estimate E(y) and all linear functions of E(y); all other linear functions of β are non-estimable.
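The condition AX = C for some A is equivalent to every row of C lying in the row space of X, which can be tested by checking that stacking C under X does not raise the rank. The sketch below assumes that rank-based test; `is_estimable` is a hypothetical helper name, and the rank is computed numerically, so it is only reliable for well-conditioned inputs such as this small example.

```python
import numpy as np

def is_estimable(C, X):
    """Return True if C = A X for some A, i.e. the rows of C lie in the row space of X.

    Hypothetical helper: compares rank(X) with the rank of X stacked on top of C.
    """
    C = np.atleast_2d(np.asarray(C, dtype=float))
    X = np.asarray(X, dtype=float)
    stacked = np.vstack([X, C])
    return bool(np.linalg.matrix_rank(stacked) == np.linalg.matrix_rank(X))

# Treatment effects design matrix (columns: mu, tau1, tau2).
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3)

contrast_ok = is_estimable([0, 1, -1], X)  # tau1 - tau2
mean1_ok = is_estimable([1, 1, 0], X)      # mu + tau1
mu_ok = is_estimable([1, 0, 0], X)         # mu alone

print(contrast_ok, mean1_ok, mu_ok)
```

In this model the treatment means and their difference pass the check, while µ by itself does not, since no row combination of X produces [1, 0, 0].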


Treatment Effects Model (continued)

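$$
E(y) = X\beta =
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}
=
\begin{bmatrix} \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \end{bmatrix}
$$

$$
\Longrightarrow \quad
\begin{aligned}
[1, 0, 0, 0, 0, 0]\,X\beta &= [1, 1, 0]\,\beta = \mu + \tau_1 \\
[0, 0, 0, 1, 0, 0]\,X\beta &= [1, 0, 1]\,\beta = \mu + \tau_2 \\
[1, 0, 0, -1, 0, 0]\,X\beta &= [0, 1, -1]\,\beta = \tau_1 - \tau_2
\end{aligned}
$$

are estimable functions of β.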
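The three identities above are instances of AX = C. As a quick check, the sketch below stacks the three row vectors into one matrix `A` (a name chosen here for illustration) and multiplies by X.

```python
import numpy as np

# Treatment effects design matrix (columns: mu, tau1, tau2).
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3)

# Each row of A is one of the row vectors applied to X beta on the slide.
A = np.array([
    [1, 0, 0, 0, 0, 0],    # selects mu + tau1
    [0, 0, 0, 1, 0, 0],    # selects mu + tau2
    [1, 0, 0, -1, 0, 0],   # difference of the two: tau1 - tau2
])

# C = A X gives the coefficient vectors acting on beta.
C = A @ X
print(C)
```

The rows of `C` are [1, 1, 0], [1, 0, 1], and [0, 1, −1], matching the coefficient vectors in the display.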


Estimating Estimable Functions of β

If Cβ is estimable, then there exists a matrix A such that C = AX and Cβ = AXβ = AE(y) for any β ∈ ℝ^p.

It makes sense to estimate Cβ = AXβ = AE(y) by

$$
\begin{aligned}
\widehat{AE(y)} &= A\hat{y} = AP_X y = AX(X'X)^- X'y = AX(X'X)^- X'X\hat{\beta} \\
&= AP_X X\hat{\beta} = AX\hat{\beta} = C\hat{\beta}.
\end{aligned}
$$

Cβ̂ is called the Ordinary Least Squares (OLS) estimator of Cβ.

Note that although the "hat" is on β, it is Cβ that we are estimating.
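The chain Aŷ = Cβ̂ can be traced numerically for the treatment effects model. This is a sketch under stated assumptions: the response values in `y` are made up for illustration, and the Moore-Penrose inverse from `np.linalg.pinv` is used as one convenient choice of generalized inverse (X′X)⁻, not the only valid one.

```python
import numpy as np

# Treatment effects design matrix (columns: mu, tau1, tau2).
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3, dtype=float)

# Hypothetical responses: treatment 1 averages 5, treatment 2 averages 2.
y = np.array([4.0, 5.0, 6.0, 1.0, 2.0, 3.0])

# A picks out tau1 - tau2 from E(y); C = A X is the matching coefficient row for beta.
A = np.array([[1.0, 0.0, 0.0, -1.0, 0.0, 0.0]])
C = A @ X

# One generalized inverse of X'X (Moore-Penrose), and the resulting solution beta_hat.
G = np.linalg.pinv(X.T @ X)
beta_hat = G @ X.T @ y

# Fitted values y_hat = P_X y = X beta_hat.
y_hat = X @ beta_hat

est_from_fit = A @ y_hat      # A y_hat
est_from_beta = C @ beta_hat  # C beta_hat
print(est_from_fit, est_from_beta)
```

Both routes give the difference of the two treatment sample means for these data.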


Invariance of Cβ̂ to the Choice of β̂

Although there are infinitely many solutions to the normal equations when X is not of full column rank, Cβ̂ is the same for all normal equation solutions β̂ whenever Cβ is estimable.

To see this, suppose β̂₁ and β̂₂ are any two solutions to the normal equations. Then

$$
\begin{aligned}
C\hat{\beta}_1 &= AX\hat{\beta}_1 = AP_X X\hat{\beta}_1 \\
&= AX(X'X)^- X'X\hat{\beta}_1 = AX(X'X)^- X'y \\
&= AX(X'X)^- X'X\hat{\beta}_2 = AP_X X\hat{\beta}_2 \\
&= AX\hat{\beta}_2 = C\hat{\beta}_2.
\end{aligned}
$$
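The same conclusion can be reached by a slightly different route, given here as a supplementary sketch that is not on the slide: two solutions of the normal equations always produce the same fitted vector, and Cβ̂ depends on β̂ only through Xβ̂.

```latex
\[
X'X(\hat{\beta}_1 - \hat{\beta}_2) = X'y - X'y = 0
\;\Longrightarrow\;
(\hat{\beta}_1 - \hat{\beta}_2)'X'X(\hat{\beta}_1 - \hat{\beta}_2)
= \lVert X(\hat{\beta}_1 - \hat{\beta}_2) \rVert^2 = 0
\;\Longrightarrow\;
X\hat{\beta}_1 = X\hat{\beta}_2,
\]
\[
C\hat{\beta}_1 = AX\hat{\beta}_1 = AX\hat{\beta}_2 = C\hat{\beta}_2 .
\]
```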


Treatment Effects Model (continued)

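Suppose our aim is to estimate τ₁ − τ₂.

As noted before,

$$
X\beta =
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}
=
\begin{bmatrix} \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \end{bmatrix}
\quad \Longrightarrow \quad
[1, 0, 0, -1, 0, 0]\,X\beta = [0, 1, -1]\,\beta = \tau_1 - \tau_2 .
$$

Thus, we can compute the OLS estimator of τ₁ − τ₂ as

$$[1, 0, 0, -1, 0, 0]\,\hat{y} = [0, 1, -1]\,\hat{\beta},$$

where ŷ = X(X′X)⁻X′y and β̂ is any solution to the normal equations.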


Treatment Effects Model (continued)

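The normal equations in this case are

$$
\begin{bmatrix} 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 1 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}
=
\begin{bmatrix} 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 1 & 1 \end{bmatrix}
\begin{bmatrix} y_{11} \\ y_{12} \\ y_{13} \\ y_{21} \\ y_{22} \\ y_{23} \end{bmatrix}
$$

$$
\iff \quad
\begin{bmatrix} 6 & 3 & 3 \\ 3 & 3 & 0 \\ 3 & 0 & 3 \end{bmatrix}
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}
=
\begin{bmatrix} y_{\cdot\cdot} \\ y_{1\cdot} \\ y_{2\cdot} \end{bmatrix}.
$$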
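The two sides of the normal equations are simple to form directly. In the sketch below the response values in `y` are hypothetical and serve only to show that X′y collects the grand total and the two treatment totals.

```python
import numpy as np

# Treatment effects design matrix (columns: mu, tau1, tau2).
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3)

# Hypothetical responses, ordered y11, y12, y13, y21, y22, y23.
y = np.array([4, 5, 6, 1, 2, 3])

# Left-hand coefficient matrix X'X of the normal equations.
XtX = X.T @ X

# Right-hand side X'y = [grand total, treatment 1 total, treatment 2 total].
Xty = X.T @ y

print(XtX)
print(Xty)
```

For these made-up data the totals are 21, 15, and 6, and X′X matches the 3 × 3 matrix in the display.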


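Treatment Effects Model (continued)

$$
\hat{\beta}_1 \equiv
\begin{bmatrix} \bar{y}_{\cdot\cdot} \\ \bar{y}_{1\cdot} - \bar{y}_{\cdot\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{\cdot\cdot} \end{bmatrix}
\quad \text{and} \quad
\hat{\beta}_2 \equiv
\begin{bmatrix} 0 \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix}
$$

are each solutions to the normal equations because

$$
\begin{bmatrix} 6 & 3 & 3 \\ 3 & 3 & 0 \\ 3 & 0 & 3 \end{bmatrix}
\begin{bmatrix} \bar{y}_{\cdot\cdot} \\ \bar{y}_{1\cdot} - \bar{y}_{\cdot\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{\cdot\cdot} \end{bmatrix}
=
\begin{bmatrix} y_{\cdot\cdot} \\ y_{1\cdot} \\ y_{2\cdot} \end{bmatrix}
=
\begin{bmatrix} 6 & 3 & 3 \\ 3 & 3 & 0 \\ 3 & 0 & 3 \end{bmatrix}
\begin{bmatrix} 0 \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix}.
$$

Thus, the OLS estimator of Cβ = [0, 1, −1]β = τ₁ − τ₂ is

$$
C\hat{\beta}_1 = [0, 1, -1]
\begin{bmatrix} \bar{y}_{\cdot\cdot} \\ \bar{y}_{1\cdot} - \bar{y}_{\cdot\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{\cdot\cdot} \end{bmatrix}
= \bar{y}_{1\cdot} - \bar{y}_{2\cdot}
= [0, 1, -1]
\begin{bmatrix} 0 \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix}
= C\hat{\beta}_2 .
$$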
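The two solutions can be built from sample means and checked against the normal equations. The sketch below uses hypothetical response values; it also evaluates the non-estimable function µ = [1, 0, 0]β at both solutions to show that, unlike τ₁ − τ₂, it is not invariant.

```python
import numpy as np

# Treatment effects design matrix (columns: mu, tau1, tau2).
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3, dtype=float)

# Hypothetical responses, ordered y11, y12, y13, y21, y22, y23.
y = np.array([4.0, 5.0, 6.0, 1.0, 2.0, 3.0])

ybar = y.mean()        # overall mean
ybar1 = y[:3].mean()   # treatment 1 mean
ybar2 = y[3:].mean()   # treatment 2 mean

# The two normal-equation solutions from the slide.
beta_hat_1 = np.array([ybar, ybar1 - ybar, ybar2 - ybar])
beta_hat_2 = np.array([0.0, ybar1, ybar2])

# Both satisfy X'X b = X'y.
XtX, Xty = X.T @ X, X.T @ y
solves_1 = bool(np.allclose(XtX @ beta_hat_1, Xty))
solves_2 = bool(np.allclose(XtX @ beta_hat_2, Xty))

# Estimable function tau1 - tau2: same value from either solution.
C = np.array([0.0, 1.0, -1.0])
est_1, est_2 = C @ beta_hat_1, C @ beta_hat_2

# Non-estimable function mu: the two solutions disagree.
mu_1, mu_2 = beta_hat_1[0], beta_hat_2[0]

print(solves_1, solves_2, est_1, est_2, mu_1, mu_2)
```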


Treatment Effects Model (continued)

Let

$$
(X'X)^-_1 =
\begin{bmatrix} 1/6 & 0 & 0 \\ 0 & 1/6 & -1/6 \\ 0 & -1/6 & 1/6 \end{bmatrix}
\quad \text{and} \quad
(X'X)^-_2 =
\begin{bmatrix} 0 & 0 & 0 \\ 0 & 1/3 & 0 \\ 0 & 0 & 1/3 \end{bmatrix}.
$$

It is straightforward to verify that (X′X)⁻₁ and (X′X)⁻₂ are each generalized inverses of X′X.

It is also easy to show that β̂₁ = (X′X)⁻₁X′y and β̂₂ = (X′X)⁻₂X′y.
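Both verifications can be done numerically. The sketch below checks the defining property of a generalized inverse, (X′X)G(X′X) = X′X, for G₁ = (X′X)⁻₁ with rows [1/6, 0, 0], [0, 1/6, −1/6], [0, −1/6, 1/6] and G₂ = (X′X)⁻₂ = diag(0, 1/3, 1/3), then applies each to a hypothetical right-hand side X′y; the names `G1`, `G2`, and the totals in `Xty` are illustrative assumptions.

```python
import numpy as np

# X'X for the treatment effects model with three units per treatment.
XtX = np.array([[6.0, 3.0, 3.0],
                [3.0, 3.0, 0.0],
                [3.0, 0.0, 3.0]])

# The two candidate generalized inverses.
G1 = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, -1.0],
               [0.0, -1.0, 1.0]]) / 6.0
G2 = np.diag([0.0, 1.0 / 3.0, 1.0 / 3.0])

# Defining property of a generalized inverse G of M: M G M = M.
is_ginv_1 = bool(np.allclose(XtX @ G1 @ XtX, XtX))
is_ginv_2 = bool(np.allclose(XtX @ G2 @ XtX, XtX))

# Hypothetical totals [y.., y1., y2.], i.e. treatment means 5 and 2, overall mean 3.5.
Xty = np.array([21.0, 15.0, 6.0])

beta_hat_1 = G1 @ Xty   # expected form [ybar, ybar1 - ybar, ybar2 - ybar]
beta_hat_2 = G2 @ Xty   # expected form [0, ybar1, ybar2]

print(is_ginv_1, is_ginv_2, beta_hat_1, beta_hat_2)
```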


Treatment Effects Model (continued)

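$$
\begin{aligned}
P_X &= X(X'X)^- X' \\
&=
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} 0 & 0 & 0 \\ 0 & 1/3 & 0 \\ 0 & 0 & 1/3 \end{bmatrix}
\begin{bmatrix} 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 1 & 1 \end{bmatrix} \\
&=
\begin{bmatrix} 0 & 1/3 & 0 \\ 0 & 1/3 & 0 \\ 0 & 1/3 & 0 \\ 0 & 0 & 1/3 \\ 0 & 0 & 1/3 \\ 0 & 0 & 1/3 \end{bmatrix}
\begin{bmatrix} 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 1 & 1 \end{bmatrix} \\
&=
\begin{bmatrix}
\tfrac13 & \tfrac13 & \tfrac13 & 0 & 0 & 0 \\
\tfrac13 & \tfrac13 & \tfrac13 & 0 & 0 & 0 \\
\tfrac13 & \tfrac13 & \tfrac13 & 0 & 0 & 0 \\
0 & 0 & 0 & \tfrac13 & \tfrac13 & \tfrac13 \\
0 & 0 & 0 & \tfrac13 & \tfrac13 & \tfrac13 \\
0 & 0 & 0 & \tfrac13 & \tfrac13 & \tfrac13
\end{bmatrix}.
\end{aligned}
$$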
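The projection matrix does not depend on which generalized inverse is used. The sketch below forms X G X′ with two different generalized inverses of X′X for this model (named `G1` and `G2` here for illustration, with G2 = diag(0, 1/3, 1/3)) and compares both results with the block-diagonal matrix above; it also checks that the result is symmetric and idempotent, as a projection matrix should be.

```python
import numpy as np

# Treatment effects design matrix (columns: mu, tau1, tau2).
X = np.array([[1, 1, 0]] * 3 + [[1, 0, 1]] * 3, dtype=float)

# Two different generalized inverses of X'X.
G1 = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, -1.0],
               [0.0, -1.0, 1.0]]) / 6.0
G2 = np.diag([0.0, 1.0 / 3.0, 1.0 / 3.0])

# Projection matrix computed with each choice.
P1 = X @ G1 @ X.T
P2 = X @ G2 @ X.T

# Block-diagonal target: two 3x3 blocks with every entry equal to 1/3.
expected = np.kron(np.eye(2), np.full((3, 3), 1.0 / 3.0))

same_projection = bool(np.allclose(P1, P2))
matches_slide = bool(np.allclose(P1, expected))
is_symmetric = bool(np.allclose(P1, P1.T))
is_idempotent = bool(np.allclose(P1 @ P1, P1))

print(same_projection, matches_slide, is_symmetric, is_idempotent)
```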

Treatment Effects Model (continued)

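Thus

$$
\widehat{E(y)} = \hat{y} = P_X y =
\begin{bmatrix}
\tfrac13 & \tfrac13 & \tfrac13 & 0 & 0 & 0 \\
\tfrac13 & \tfrac13 & \tfrac13 & 0 & 0 & 0 \\
\tfrac13 & \tfrac13 & \tfrac13 & 0 & 0 & 0 \\
0 & 0 & 0 & \tfrac13 & \tfrac13 & \tfrac13 \\
0 & 0 & 0 & \tfrac13 & \tfrac13 & \tfrac13 \\
0 & 0 & 0 & \tfrac13 & \tfrac13 & \tfrac13
\end{bmatrix}
\begin{bmatrix} y_{11} \\ y_{12} \\ y_{13} \\ y_{21} \\ y_{22} \\ y_{23} \end{bmatrix}
=
\begin{bmatrix} \bar{y}_{1\cdot} \\ \bar{y}_{1\cdot} \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \\ \bar{y}_{2\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix}
$$

is our OLS estimator of

$$
E(y) = X\beta =
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}
=
\begin{bmatrix} \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \end{bmatrix}.
$$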

Treatment Effects Model (continued)

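Also, we can see that the OLS estimator of

$$
\begin{aligned}
\tau_1 - \tau_2
&= [0, 1, -1] \begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}
= [1, 0, 0, -1, 0, 0]
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix} \\
&= [1, 0, 0, -1, 0, 0]
\begin{bmatrix} \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \end{bmatrix}
= [1, 0, 0, -1, 0, 0]\,E(y)
\end{aligned}
$$

is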

Treatment Effects Model (continued)

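$$
\widehat{[1, 0, 0, -1, 0, 0]\,E(y)}
= [1, 0, 0, -1, 0, 0]\,\hat{y}
= [1, 0, 0, -1, 0, 0]
\begin{bmatrix} \bar{y}_{1\cdot} \\ \bar{y}_{1\cdot} \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \\ \bar{y}_{2\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix}
= \bar{y}_{1\cdot} - \bar{y}_{2\cdot} .
$$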


The Gauss-Markov Theorem

Under the Gauss-Markov Linear Model, the OLS estimator c′β̂ of an estimable linear function c′β is the unique Best Linear Unbiased Estimator (BLUE) in the sense that Var(c′β̂) is strictly less than the variance of any other linear unbiased estimator of c′β for all β ∈ ℝ^p and all σ² ∈ ℝ⁺.

The Gauss-Markov Theorem says that if we want to estimate an estimable linear function c′β using a linear estimator that is unbiased, we should always use the OLS estimator.

In our simple example of the treatment effects model, we could have used y₁₁ − y₂₁ to estimate τ₁ − τ₂. It is easy to see that y₁₁ − y₂₁ is a linear estimator that is unbiased for τ₁ − τ₂, but its variance is clearly larger than the variance of the OLS estimator ȳ₁· − ȳ₂· (as guaranteed by the Gauss-Markov Theorem).
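The variance comparison in this example can be written out explicitly. The following worked step is a supplementary sketch that uses only the Gauss-Markov assumption Var(y) = σ²I, so that the six responses are uncorrelated with common variance σ².

```latex
\[
\operatorname{Var}(y_{11} - y_{21})
= \operatorname{Var}(y_{11}) + \operatorname{Var}(y_{21})
= 2\sigma^2,
\]
\[
\operatorname{Var}(\bar{y}_{1\cdot} - \bar{y}_{2\cdot})
= \operatorname{Var}(\bar{y}_{1\cdot}) + \operatorname{Var}(\bar{y}_{2\cdot})
= \frac{\sigma^2}{3} + \frac{\sigma^2}{3}
= \frac{2\sigma^2}{3}
< 2\sigma^2 .
\]
```

With three units per treatment, the single-observation contrast has three times the variance of the OLS estimator.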
