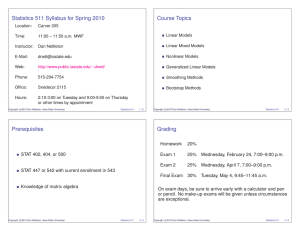

When is the OLSE the BLUE?

Copyright © 2012 Dan Nettleton (Iowa State University). Statistics 611.

When is the Ordinary Least Squares Estimator (OLSE) the Best Linear Unbiased Estimator (BLUE)?

We already know the OLSE is the BLUE under the Gauss-Markov model (GMM), but are there other situations where the OLSE is the BLUE?

Consider an experiment involving 4 plants. Two leaves are randomly selected from each plant. One leaf from each plant is randomly selected for treatment with treatment 1. The other leaf from each plant receives treatment 2.

Let $y_{ij}$ = the response for the treatment $i$ leaf from plant $j$ ($i = 1, 2$; $j = 1, 2, 3, 4$). Suppose

$$y_{ij} = \mu_i + p_j + e_{ij},$$

where $p_1, \ldots, p_4, e_{11}, \ldots, e_{24}$ are uncorrelated, $E(p_j) = E(e_{ij}) = 0$, $\mathrm{Var}(p_j) = \sigma_p^2$, and $\mathrm{Var}(e_{ij}) = \sigma^2$ $\forall\ i = 1, 2;\ j = 1, \ldots, 4$.

Suppose $\sigma_p^2/\sigma^2$ is known to be equal to 2 and $y = (y_{11}, y_{21}, y_{12}, y_{22}, y_{13}, y_{23}, y_{14}, y_{24})'$. Show that the Aitken model (AM) holds. Find the OLSE of $\mu_1 - \mu_2$ and the BLUE of $\mu_1 - \mu_2$.

$E(y) = X\beta$, where

$$X = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 1 & 0 \\ 0 & 1 \\ 1 & 0 \\ 0 & 1 \\ 1 & 0 \\ 0 & 1 \end{bmatrix} \quad \text{and} \quad \beta = \begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}.$$

$$\begin{aligned}
\mathrm{Var}(y_{ij}) &= \mathrm{Var}(\mu_i + p_j + e_{ij}) \\
&= \mathrm{Var}(p_j + e_{ij}) \\
&= \mathrm{Var}(p_j) + \mathrm{Var}(e_{ij}) \\
&= \sigma_p^2 + \sigma^2 \\
&= \sigma^2(\sigma_p^2/\sigma^2 + 1) \\
&= 3\sigma^2.
\end{aligned}$$

$$\begin{aligned}
\mathrm{Cov}(y_{1j}, y_{2j}) &= \mathrm{Cov}(\mu_1 + p_j + e_{1j},\ \mu_2 + p_j + e_{2j}) \\
&= \mathrm{Cov}(p_j + e_{1j},\ p_j + e_{2j}) \\
&= \mathrm{Cov}(p_j, p_j) + \mathrm{Cov}(p_j, e_{2j}) + \mathrm{Cov}(p_j, e_{1j}) + \mathrm{Cov}(e_{1j}, e_{2j}) \\
&= \mathrm{Cov}(p_j, p_j) = \mathrm{Var}(p_j) = \sigma_p^2 \\
&= \sigma^2(\sigma_p^2/\sigma^2) = 2\sigma^2.
\end{aligned}$$
$\mathrm{Cov}(y_{ij}, y_{i^*j^*}) = 0$ if $j \neq j^*$ because

$$\begin{aligned}
\mathrm{Cov}(y_{ij}, y_{i^*j^*}) &= \mathrm{Cov}(p_j + e_{ij},\ p_{j^*} + e_{i^*j^*}) \\
&= \mathrm{Cov}(p_j, p_{j^*}) + \mathrm{Cov}(p_j, e_{i^*j^*}) + \mathrm{Cov}(p_{j^*}, e_{ij}) + \mathrm{Cov}(e_{ij}, e_{i^*j^*}) \\
&= 0.
\end{aligned}$$

Thus, $\mathrm{Var}(y) = \sigma^2 V$, where

$$V = \begin{bmatrix}
3 & 2 & 0 & 0 & 0 & 0 & 0 & 0 \\
2 & 3 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 3 & 2 & 0 & 0 & 0 & 0 \\
0 & 0 & 2 & 3 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 3 & 2 & 0 & 0 \\
0 & 0 & 0 & 0 & 2 & 3 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 3 & 2 \\
0 & 0 & 0 & 0 & 0 & 0 & 2 & 3
\end{bmatrix}.$$

We can write the model as $y = X\beta + \varepsilon$, where

$$\varepsilon = \begin{bmatrix} p_1 + e_{11} \\ p_1 + e_{21} \\ p_2 + e_{12} \\ p_2 + e_{22} \\ p_3 + e_{13} \\ p_3 + e_{23} \\ p_4 + e_{14} \\ p_4 + e_{24} \end{bmatrix}, \quad E(\varepsilon) = 0 \quad \text{and} \quad \mathrm{Var}(\varepsilon) = \sigma^2 V.$$

Note that

$$X = \begin{bmatrix} I_{2\times 2} \\ I_{2\times 2} \\ I_{2\times 2} \\ I_{2\times 2} \end{bmatrix} = 1_{4\times 1} \otimes I_{2\times 2}.$$

Thus,

$$X'X = [I, I, I, I] \begin{bmatrix} I \\ I \\ I \\ I \end{bmatrix} = 4 I_{2\times 2}, \qquad (X'X)^{-1} = \tfrac{1}{4} I.$$

$$X'y = [I, I, I, I]\,y = \begin{bmatrix} y_{1\cdot} \\ y_{2\cdot} \end{bmatrix}.$$

Thus,

$$\hat\beta_{OLS} = \tfrac{1}{4} I \begin{bmatrix} y_{1\cdot} \\ y_{2\cdot} \end{bmatrix} = \begin{bmatrix} \bar y_{1\cdot} \\ \bar y_{2\cdot} \end{bmatrix}.$$

Thus, the OLSE of $\mu_1 - \mu_2$ is $[1, -1]\hat\beta_{OLS} = \bar y_{1\cdot} - \bar y_{2\cdot}$.

To find the GLSE, which we know is the BLUE for this AM, let $A = \begin{bmatrix} 3 & 2 \\ 2 & 3 \end{bmatrix}$. Then

$$V = \begin{bmatrix} A & 0 & 0 & 0 \\ 0 & A & 0 & 0 \\ 0 & 0 & A & 0 \\ 0 & 0 & 0 & A \end{bmatrix} = I_{4\times 4} \otimes A.$$

$$V^{-1} = I_{4\times 4} \otimes A^{-1} = \begin{bmatrix} A^{-1} & 0 & 0 & 0 \\ 0 & A^{-1} & 0 & 0 \\ 0 & 0 & A^{-1} & 0 \\ 0 & 0 & 0 & A^{-1} \end{bmatrix}.$$

It follows that

$$\begin{aligned}
X'V^{-1}X &= [1' \otimes I][I \otimes A^{-1}][1 \otimes I] \\
&= [I, I, I, I] \begin{bmatrix} A^{-1} & 0 & 0 & 0 \\ 0 & A^{-1} & 0 & 0 \\ 0 & 0 & A^{-1} & 0 \\ 0 & 0 & 0 & A^{-1} \end{bmatrix} \begin{bmatrix} I \\ I \\ I \\ I \end{bmatrix} \\
&= 4A^{-1}.
\end{aligned}$$

Thus, $(X'V^{-1}X)^{-1} = \tfrac{1}{4} A$.
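As a quick check of this last step with the example's numbers (a worked instance, assuming the ratio $\sigma_p^2/\sigma^2 = 2$ so that $\det A = 9 - 4 = 5$), the inverse can be written out explicitly:

$$A^{-1} = \frac{1}{5}\begin{bmatrix} 3 & -2 \\ -2 & 3 \end{bmatrix}, \qquad X'V^{-1}X = 4A^{-1} = \frac{4}{5}\begin{bmatrix} 3 & -2 \\ -2 & 3 \end{bmatrix}, \qquad \tfrac{1}{4}A \cdot 4A^{-1} = \frac{1}{5}\begin{bmatrix} 3 & 2 \\ 2 & 3 \end{bmatrix}\begin{bmatrix} 3 & -2 \\ -2 & 3 \end{bmatrix} = \frac{1}{5}\begin{bmatrix} 5 & 0 \\ 0 & 5 \end{bmatrix} = I.$$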
$$\begin{aligned}
X'V^{-1}y &= [I, I, I, I] \begin{bmatrix} A^{-1} & 0 & 0 & 0 \\ 0 & A^{-1} & 0 & 0 \\ 0 & 0 & A^{-1} & 0 \\ 0 & 0 & 0 & A^{-1} \end{bmatrix} y \\
&= [A^{-1}, A^{-1}, A^{-1}, A^{-1}]\,y \\
&= A^{-1}[I, I, I, I]\,y.
\end{aligned}$$

Thus,

$$\begin{aligned}
\hat\beta_{GLS} &= \tfrac{1}{4} A A^{-1} [I, I, I, I]\,y \\
&= \tfrac{1}{4} [I, I, I, I]\,y \\
&= \begin{bmatrix} \bar y_{1\cdot} \\ \bar y_{2\cdot} \end{bmatrix} = \hat\beta_{OLS}.
\end{aligned}$$

Thus, the GLSE of $\mu_1 - \mu_2$, and hence the BLUE of $\mu_1 - \mu_2$, is $[1, -1]\hat\beta_{GLS} = \bar y_{1\cdot} - \bar y_{2\cdot}$.

Thus, OLSE = GLSE = BLUE in this case.

Although we assumed that $\sigma_p^2/\sigma^2 = 2$ in our example, that assumption was not needed to find the GLSE.

We have looked at one specific example where the OLSE = GLSE = BLUE. What general conditions must be satisfied for this to hold?

Result 4.3: Suppose the AM holds. The estimator $t'y$ is the BLUE for $E(t'y)$ $\iff$ $t'y$ is uncorrelated with all linear unbiased estimators of zero.

Proof: ($\Longleftarrow$) Let $h'y$ be an arbitrary linear unbiased estimator of $E(t'y)$. Then

$$E((h - t)'y) = E(h'y - t'y) = E(h'y) - E(t'y) = 0.$$

Thus, $(h - t)'y$ is linear unbiased for zero.

Because $t'y$ is assumed to be uncorrelated with every linear unbiased estimator of zero, it follows that $\mathrm{Cov}(t'y, (h - t)'y) = 0$. Hence

$$\mathrm{Var}(h'y) = \mathrm{Var}(h'y - t'y + t'y) = \mathrm{Var}((h - t)'y) + \mathrm{Var}(t'y).$$

$\therefore \mathrm{Var}(h'y) \geq \mathrm{Var}(t'y)$ with equality iff $\mathrm{Var}((h - t)'y) = 0 \iff h = t$.

$\therefore t'y$ is the BLUE of $E(t'y)$.

($\Longrightarrow$) Suppose $h'y$ is a linear unbiased estimator of zero. If $h = 0$, then $\mathrm{Cov}(t'y, h'y) = \mathrm{Cov}(t'y, 0) = 0$.

Now suppose $h \neq 0$. Let $c = \mathrm{Cov}(t'y, h'y)$ and $d = \mathrm{Var}(h'y) > 0$. We need to show $c = 0$.
Now consider $a'y = t'y - (c/d)h'y$.

$$E(a'y) = E(t'y) - (c/d)E(h'y) = E(t'y).$$

Thus, $a'y$ is a linear unbiased estimator of $E(t'y)$.

$$\begin{aligned}
\mathrm{Var}(a'y) &= \mathrm{Var}(t'y - (c/d)h'y) \\
&= \mathrm{Var}(t'y) + \frac{c^2}{d^2}\mathrm{Var}(h'y) - 2\,\mathrm{Cov}(t'y, (c/d)h'y) \\
&= \mathrm{Var}(t'y) + \frac{c^2}{d^2}\,d - 2(c/d)c \\
&= \mathrm{Var}(t'y) - \frac{c^2}{d}.
\end{aligned}$$

Now $\mathrm{Var}(a'y) = \mathrm{Var}(t'y) - \dfrac{c^2}{d} \Rightarrow c = 0$, because $t'y$ has the lowest variance among all linear unbiased estimators of $E(t'y)$.

Corollary 4.1: Under the AM, the estimator $t'y$ is the BLUE of $E(t'y)$ $\iff$ $Vt \in C(X)$.

Proof of Corollary 4.1: First note that $h'y$ being a linear unbiased estimator of zero is equivalent to

$$\begin{aligned}
E(h'y) = 0 \ \ \forall\ \beta \in \mathbb{R}^p &\iff h'X\beta = 0 \ \ \forall\ \beta \in \mathbb{R}^p \\
&\iff h'X = 0' \\
&\iff X'h = 0 \\
&\iff h \in N(X').
\end{aligned}$$

Thus, by Result 4.3,

$$\begin{aligned}
t'y \text{ BLUE for } E(t'y) &\iff \mathrm{Cov}(h'y, t'y) = 0 \ \ \forall\ h \in N(X') \\
&\iff \sigma^2 h'Vt = 0 \ \ \forall\ h \in N(X') \\
&\iff h'Vt = 0 \ \ \forall\ h \in N(X') \\
&\iff Vt \in N(X')^{\perp} = C(X).
\end{aligned}$$

Result 4.4: Under the AM, the OLSE of $c'\beta$ is the BLUE of $c'\beta$ $\forall$ estimable $c'\beta$ $\iff$ $\exists$ a matrix $Q \ni VX = XQ$.

Proof of Result 4.4: ($\Longleftarrow$) Suppose $c'\beta$ is estimable. Let $t' = c'(X'X)^{-}X'$. Then

$$\begin{aligned}
VX = XQ &\Rightarrow VX[(X'X)^{-}]'c = XQ[(X'X)^{-}]'c \\
&\Rightarrow Vt \in C(X),
\end{aligned}$$

which, by Corollary 4.1, $\Rightarrow t'y$ is BLUE of $E(t'y)$ $\Rightarrow c'\hat\beta_{OLS}$ is BLUE of $c'\beta$.

($\Longrightarrow$) By Corollary 4.1, $c'\hat\beta_{OLS}$ is BLUE for any estimable $c'\beta$
$$\begin{aligned}
&\Rightarrow VX[(X'X)^{-}]'c \in C(X) \ \ \forall\ c \in C(X') \\
&\Rightarrow VX[(X'X)^{-}]'X'a \in C(X) \ \ \forall\ a \in \mathbb{R}^n \\
&\Rightarrow VP_X a \in C(X) \ \ \forall\ a \in \mathbb{R}^n \\
&\Rightarrow \exists\ q_i \ni VP_X x_i = Xq_i \ \ \forall\ i = 1, \ldots, p,
\end{aligned}$$

where $x_i$ denotes the $i$th column of $X$.

$$\begin{aligned}
&\Rightarrow VP_X[x_1, \ldots, x_p] = X[q_1, \ldots, q_p] \\
&\Rightarrow VP_X X = XQ, \text{ where } Q = [q_1, \ldots, q_p] \\
&\Rightarrow VX = XQ \text{ for } Q = [q_1, \ldots, q_p].
\end{aligned}$$

Show that $\exists\ Q \ni VX = XQ$ in our previous example.

$$VX = \begin{bmatrix} A & 0 & 0 & 0 \\ 0 & A & 0 & 0 \\ 0 & 0 & A & 0 \\ 0 & 0 & 0 & A \end{bmatrix} \begin{bmatrix} I \\ I \\ I \\ I \end{bmatrix} = \begin{bmatrix} A \\ A \\ A \\ A \end{bmatrix} = \begin{bmatrix} I \\ I \\ I \\ I \end{bmatrix} A = XA = XQ, \quad \text{where } Q = A.$$
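The plant example can also be checked numerically. The following is a minimal sketch in plain Python using exact fractions, not part of the original slides. The helper names (`matmul`, `kron`, `check_example`, and so on) are hypothetical and introduced only for illustration. It assumes the general form $A = \begin{bmatrix} r+1 & r \\ r & r+1 \end{bmatrix}$ with $r = \sigma_p^2/\sigma^2$, which reduces to the matrix $A$ of the example when $r = 2$. The sketch builds $X = 1 \otimes I$ and $V = I \otimes A$, confirms $VX = XA$, and confirms that the GLS and OLS coefficient matrices coincide.

```python
from fractions import Fraction


def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]


def transpose(P):
    return [list(col) for col in zip(*P)]


def kron(P, Q):
    """Kronecker product of P and Q."""
    return [[p * q for p in prow for q in qrow] for prow in P for qrow in Q]


def scale(s, P):
    return [[s * x for x in row] for row in P]


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def check_example(ratio):
    """Return (VX == XA, GLS matrix == OLS matrix) for sigma_p^2 / sigma^2 = ratio."""
    r = Fraction(ratio)
    A = [[r + 1, r], [r, r + 1]]
    # Closed-form 2x2 inverse; det(A) = (r + 1)^2 - r^2 = 2r + 1.
    A_inv = scale(1 / (2 * r + 1), [[r + 1, -r], [-r, r + 1]])
    assert matmul(A, A_inv) == identity(2)

    ones4 = [[Fraction(1)] for _ in range(4)]
    X = kron(ones4, identity(2))      # 8 x 2 design matrix, X = 1 (x) I
    V = kron(identity(4), A)          # 8 x 8 matrix, V = I (x) A
    V_inv = kron(identity(4), A_inv)  # V^{-1} = I (x) A^{-1}
    Xt = transpose(X)

    # OLS coefficient matrix (X'X)^{-1} X' = (1/4) X', since X'X = 4I.
    assert matmul(Xt, X) == scale(4, identity(2))
    ols = scale(Fraction(1, 4), Xt)

    # GLS coefficient matrix (X'V^{-1}X)^{-1} X'V^{-1}, with (X'V^{-1}X)^{-1} = (1/4) A.
    assert matmul(matmul(Xt, V_inv), X) == scale(4, A_inv)
    gls = matmul(scale(Fraction(1, 4), A), matmul(Xt, V_inv))

    return matmul(V, X) == matmul(X, A), gls == ols


print(check_example(2))               # the ratio used in the example
print(check_example(Fraction(7, 3)))  # an arbitrary other ratio
```

Exact `Fraction` arithmetic is used so that the matrix equalities can be tested with `==` rather than a floating-point tolerance. Running the check with a ratio other than 2 illustrates the remark that the assumption $\sigma_p^2/\sigma^2 = 2$ was not needed for the GLSE to equal the OLSE.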