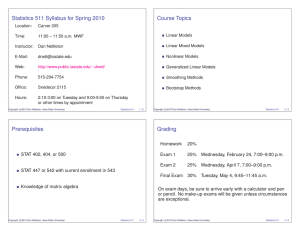

The Gauss-Markov Linear Model

Copyright © 2010 Dan Nettleton (Iowa State University), Statistics 511

$$y = X\beta + \varepsilon$$

$y$ is an $n \times 1$ random vector of responses.

$X$ is an $n \times p$ matrix of constants with columns corresponding to explanatory variables. $X$ is sometimes referred to as the design matrix.

$\beta$ is an unknown parameter vector in $\mathbb{R}^p$.

$\varepsilon$ is an $n \times 1$ random vector of errors.

$E(\varepsilon) = 0$ and $\mathrm{Var}(\varepsilon) = \sigma^2 I$, where $\sigma^2$ is an unknown parameter in $\mathbb{R}^+$.

The Column Space of the Design Matrix

$X\beta$ is a linear combination of the columns of $X$:

$$X\beta = [x_1, \ldots, x_p] \begin{bmatrix} \beta_1 \\ \vdots \\ \beta_p \end{bmatrix} = \beta_1 x_1 + \cdots + \beta_p x_p.$$

The set of all possible linear combinations of the columns of $X$ is called the column space of $X$ and is denoted by

$$\mathcal{C}(X) = \{Xa : a \in \mathbb{R}^p\}.$$
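As a small numerical illustration of the model and of $X\beta$ as a combination of columns, the sketch below uses NumPy with a made-up design matrix, parameter vector, and error scale (none of these values come from the slides). Normal errors are assumed only so that there is something to simulate; the Gauss-Markov model itself fixes just the mean and variance of $\varepsilon$.

```python
import numpy as np

# Hypothetical 4 x 2 design matrix and parameter vector (illustrative values only).
X = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0],
              [0.0, 1.0]])
beta = np.array([2.0, 5.0])

# X beta computed directly ...
mean_direct = X @ beta
# ... and as beta_1 * x_1 + beta_2 * x_2, a linear combination of the columns of X.
mean_by_columns = sum(beta[j] * X[:, j] for j in range(X.shape[1]))
print(np.allclose(mean_direct, mean_by_columns))  # True

# One simulated response vector y = X beta + eps with E(eps) = 0 and
# Var(eps) = sigma^2 I. The normal distribution is an assumption of this sketch.
rng = np.random.default_rng(0)
sigma = 1.5
eps = rng.normal(loc=0.0, scale=sigma, size=X.shape[0])
y = mean_direct + eps
```

Whatever values the errors take, the mean vector `mean_direct` is by construction an element of $\mathcal{C}(X)$.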

The Gauss-Markov linear model says $y$ is a random vector whose mean is in the column space of $X$ and whose variance is $\sigma^2 I$ for some positive real number $\sigma^2$, i.e.,

$$E(y) \in \mathcal{C}(X) \quad \text{and} \quad \mathrm{Var}(y) = \sigma^2 I, \ \sigma^2 \in \mathbb{R}^+.$$

An Example Column Space

$$\begin{aligned}
X = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \implies \mathcal{C}(X) &= \{Xa : a \in \mathbb{R}^p\} \\
&= \left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix} a_1 : a_1 \in \mathbb{R} \right\} \\
&= \left\{ a_1 \begin{bmatrix} 1 \\ 1 \end{bmatrix} : a_1 \in \mathbb{R} \right\} \\
&= \left\{ \begin{bmatrix} a_1 \\ a_1 \end{bmatrix} : a_1 \in \mathbb{R} \right\}
\end{aligned}$$

Another Example Column Space

$$\begin{aligned}
X = \begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 0 & 1 \end{bmatrix} \implies \mathcal{C}(X) &= \left\{ \begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} a_1 \\ a_2 \end{bmatrix} : a \in \mathbb{R}^2 \right\} \\
&= \left\{ a_1 \begin{bmatrix} 1 \\ 1 \\ 0 \\ 0 \end{bmatrix} + a_2 \begin{bmatrix} 0 \\ 0 \\ 1 \\ 1 \end{bmatrix} : a_1, a_2 \in \mathbb{R} \right\} \\
&= \left\{ \begin{bmatrix} a_1 \\ a_1 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ a_2 \\ a_2 \end{bmatrix} : a_1, a_2 \in \mathbb{R} \right\} \\
&= \left\{ \begin{bmatrix} a_1 \\ a_1 \\ a_2 \\ a_2 \end{bmatrix} : a_1, a_2 \in \mathbb{R} \right\}
\end{aligned}$$
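Membership in a column space can also be checked numerically. The sketch below is one possible approach, not something taken from the slides: the helper name `in_column_space` and its tolerance are hypothetical, and the test vectors are made up. It fits the vector by least squares and checks whether the fit reproduces it exactly, using the 4 × 2 design matrix from the example above.

```python
import numpy as np

def in_column_space(X, v, tol=1e-10):
    """Return True if v is (numerically) a linear combination of the columns of X."""
    # Least-squares coefficients; v lies in C(X) exactly when X @ coef reproduces v.
    coef, *_ = np.linalg.lstsq(X, v, rcond=None)
    return bool(np.linalg.norm(X @ coef - v) <= tol * max(1.0, np.linalg.norm(v)))

X = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0],
              [0.0, 1.0]])

inside = np.array([3.0, 3.0, -1.0, -1.0])   # has the form (a1, a1, a2, a2)
outside = np.array([3.0, 2.0, -1.0, -1.0])  # first two entries differ

print(in_column_space(X, inside))   # True
print(in_column_space(X, outside))  # False
```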

Another Column Space Example

$$X_1 = \begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 0 & 1 \end{bmatrix}, \qquad X_2 = \begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}$$

$$\begin{aligned}
x \in \mathcal{C}(X_1) &\implies x = X_1 a \text{ for some } a \in \mathbb{R}^2 \\
&\implies x = X_2 \begin{bmatrix} 0 \\ a \end{bmatrix} \text{ for some } a \in \mathbb{R}^2 \\
&\implies x = X_2 b \text{ for some } b \in \mathbb{R}^3 \\
&\implies x \in \mathcal{C}(X_2)
\end{aligned}$$

Thus, $\mathcal{C}(X_1) \subseteq \mathcal{C}(X_2)$.

Another Column Space Example (continued)

$$\begin{aligned}
x \in \mathcal{C}(X_2) &\implies x = X_2 a \text{ for some } a \in \mathbb{R}^3 \\
&\implies x = a_1 \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} + a_2 \begin{bmatrix} 1 \\ 1 \\ 0 \\ 0 \end{bmatrix} + a_3 \begin{bmatrix} 0 \\ 0 \\ 1 \\ 1 \end{bmatrix} \text{ for some } a \in \mathbb{R}^3 \\
&\implies x = \begin{bmatrix} a_1 + a_2 \\ a_1 + a_2 \\ a_1 + a_3 \\ a_1 + a_3 \end{bmatrix} \text{ for some } a_1, a_2, a_3 \in \mathbb{R} \\
&\implies x = X_1 \begin{bmatrix} a_1 + a_2 \\ a_1 + a_3 \end{bmatrix} \text{ for some } a_1, a_2, a_3 \in \mathbb{R}
\end{aligned}$$
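The two substitutions used in this example can be spot-checked numerically. The sketch below uses NumPy with arbitrary coefficient vectors chosen only for illustration, and then applies a standard rank criterion that is not part of the slides: two matrices have the same column space when each has the same rank as the two placed side by side.

```python
import numpy as np

X1 = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)
X2 = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]], dtype=float)

# Substitution for C(X1) within C(X2): X1 a = X2 (0, a1, a2)'.
a = np.array([2.0, -3.0])            # arbitrary illustrative coefficients
b = np.concatenate(([0.0], a))
assert np.allclose(X1 @ a, X2 @ b)

# Substitution for C(X2) within C(X1): X2 c = X1 (c1 + c2, c1 + c3)'.
c = np.array([1.0, 4.0, -2.0])       # arbitrary illustrative coefficients
d = np.array([c[0] + c[1], c[0] + c[2]])
assert np.allclose(X2 @ c, X1 @ d)

# Rank criterion: stacking the matrices side by side adds no new directions.
rank1 = np.linalg.matrix_rank(X1)
rank2 = np.linalg.matrix_rank(X2)
rank_both = np.linalg.matrix_rank(np.hstack([X1, X2]))
print(rank1, rank2, rank_both)  # 2 2 2
```

A check with particular coefficients is of course weaker than the algebraic argument, which covers every choice of coefficients at once.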

Another Column Space Example (continued)

$$\begin{aligned}
x \in \mathcal{C}(X_2) &\implies x = X_1 \begin{bmatrix} a_1 + a_2 \\ a_1 + a_3 \end{bmatrix} \text{ for some } a_1, a_2, a_3 \in \mathbb{R} \\
&\implies x = X_1 b \text{ for some } b \in \mathbb{R}^2 \\
&\implies x \in \mathcal{C}(X_1)
\end{aligned}$$

Thus, $\mathcal{C}(X_2) \subseteq \mathcal{C}(X_1)$.

We previously showed that $\mathcal{C}(X_1) \subseteq \mathcal{C}(X_2)$.

Thus, it follows that $\mathcal{C}(X_1) = \mathcal{C}(X_2)$.

Estimation of $E(y)$

A fundamental goal of linear model analysis is to estimate $E(y)$.

We could, of course, use $y$ to estimate $E(y)$.

$y$ is obviously an unbiased estimator of $E(y)$, but it is often not a very sensible estimator.

For example, suppose

$$\begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \mu + \begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \end{bmatrix}, \quad \text{and we observe } y = \begin{bmatrix} 6.1 \\ 2.3 \end{bmatrix}.$$

Should we estimate $E(y) = \begin{bmatrix} \mu \\ \mu \end{bmatrix}$ by $y = \begin{bmatrix} 6.1 \\ 2.3 \end{bmatrix}$?

Estimation of $E(y)$

The Gauss-Markov linear model says that $E(y) \in \mathcal{C}(X)$, so we should use that information when estimating $E(y)$.

Consider estimating $E(y)$ by the point in $\mathcal{C}(X)$ that is closest to $y$ (as measured by the usual Euclidean distance).

This unique point is called the orthogonal projection of $y$ onto $\mathcal{C}(X)$ and denoted by $\hat{y}$ (although it could be argued that $\widehat{E(y)}$ might be better notation).

By definition, $\|y - \hat{y}\| = \min_{z \in \mathcal{C}(X)} \|y - z\|$, where $\|a\| \equiv \sqrt{\sum_{i=1}^n a_i^2}$.

Orthogonal Projection Matrices

It can be shown that...

1. $\forall\, y \in \mathbb{R}^n$, $\hat{y} = P_X y$, where $P_X$ is a unique $n \times n$ matrix known as an orthogonal projection matrix.
2. $P_X$ is idempotent: $P_X P_X = P_X$.
3. $P_X$ is symmetric: $P_X = P_X'$.
4. $P_X X = X$ and $X' P_X = X'$.
5. $P_X = X(X'X)^- X'$, where $(X'X)^-$ is any generalized inverse of $X'X$.

Why Does $P_X X = X$?

Each column $x_j$ of $X$ already lies in $\mathcal{C}(X)$, so the point of $\mathcal{C}(X)$ closest to $x_j$ is $x_j$ itself, i.e., $P_X x_j = x_j$. Hence

$$\begin{aligned}
P_X X &= P_X [x_1, \ldots, x_p] \\
&= [P_X x_1, \ldots, P_X x_p] \\
&= [x_1, \ldots, x_p] \\
&= X.
\end{aligned}$$

Generalized Inverses

$G$ is a generalized inverse of a matrix $A$ if $AGA = A$.

We usually denote a generalized inverse of $A$ by $A^-$.

If $A$ is nonsingular, i.e., if $A^{-1}$ exists, then $A^{-1}$ is the one and only generalized inverse of $A$.

$$AA^{-1}A = AI = IA = A$$

If $A$ is singular, i.e., if $A^{-1}$ does not exist, then there are infinitely many generalized inverses of $A$.
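The properties listed above lend themselves to a numerical check. The following sketch uses NumPy and a rank-deficient design matrix so that $X'X$ is singular. It builds two different generalized inverses (the Moore-Penrose pseudoinverse, and a zero-padded inverse of a nonsingular block, both chosen here purely for illustration) and verifies $AGA = A$ together with properties 2 to 5 of $P_X$.

```python
import numpy as np

# Design matrix with linearly dependent columns, so A = X'X is singular.
X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]], dtype=float)
A = X.T @ X   # [[4, 2, 2], [2, 2, 0], [2, 0, 2]]

# Generalized inverse 1: the Moore-Penrose pseudoinverse.
G1 = np.linalg.pinv(A)

# Generalized inverse 2: invert the nonsingular lower-right 2 x 2 block of A
# and pad with zeros (valid here because that block has the same rank as A).
G2 = np.zeros((3, 3))
G2[1:, 1:] = np.linalg.inv(A[1:, 1:])

for G in (G1, G2):
    assert np.allclose(A @ G @ A, A)   # defining property AGA = A
assert not np.allclose(G1, G2)         # two genuinely different choices

# Both choices give the same matrix X (X'X)^- X'.
P1 = X @ G1 @ X.T
P2 = X @ G2 @ X.T
assert np.allclose(P1, P2)

P = P1
assert np.allclose(P @ P, P)       # idempotent
assert np.allclose(P, P.T)         # symmetric
assert np.allclose(P @ X, X)       # P_X X = X
assert np.allclose(X.T @ P, X.T)   # X' P_X = X'
```

Two generalized inverses are only a spot check; the claim in property 5 is that every generalized inverse of $X'X$ yields the same $P_X$.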

Invariance of $P_X = X(X'X)^- X'$ to Choice of $(X'X)^-$

If $X'X$ is nonsingular, then $P_X = X(X'X)^{-1}X'$ because the only generalized inverse of $X'X$ is $(X'X)^{-1}$.

If $X'X$ is singular, then $P_X = X(X'X)^- X'$ and the choice of the generalized inverse $(X'X)^-$ does not matter because $P_X = X(X'X)^- X'$ will turn out to be the same matrix no matter which generalized inverse of $X'X$ is used.

Suppose $(X'X)^-_1$ and $(X'X)^-_2$ are any two generalized inverses of $X'X$. Then

$$\begin{aligned}
X(X'X)^-_1 X' &= X(X'X)^-_2 X'X(X'X)^-_1 X' \\
&= X(X'X)^-_2 X'.
\end{aligned}$$

The first equality uses $X = X(X'X)^- X'X$ and the second uses $X'X(X'X)^- X' = X'$, both of which hold for any generalized inverse of $X'X$.

An Example Orthogonal Projection Matrix

Suppose

$$\begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \mu + \begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \end{bmatrix}, \quad \text{and we observe } y = \begin{bmatrix} 6.1 \\ 2.3 \end{bmatrix}.$$

$$\begin{aligned}
X(X'X)^- X' &= \begin{bmatrix} 1 \\ 1 \end{bmatrix} \left( [1 \ \ 1] \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right)^{-} [1 \ \ 1] \\
&= \begin{bmatrix} 1 \\ 1 \end{bmatrix} [2]^{-1} [1 \ \ 1] = \frac{1}{2} \begin{bmatrix} 1 \\ 1 \end{bmatrix} [1 \ \ 1] \\
&= \frac{1}{2} \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} = \begin{bmatrix} 1/2 & 1/2 \\ 1/2 & 1/2 \end{bmatrix}.
\end{aligned}$$

An Example Orthogonal Projection

Thus, the orthogonal projection of $y = \begin{bmatrix} 6.1 \\ 2.3 \end{bmatrix}$ onto the column space of $X = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$ is

$$P_X y = \begin{bmatrix} 1/2 & 1/2 \\ 1/2 & 1/2 \end{bmatrix} \begin{bmatrix} 6.1 \\ 2.3 \end{bmatrix} = \begin{bmatrix} 4.2 \\ 4.2 \end{bmatrix}.$$
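The worked example above is small enough to reproduce in a few lines. The sketch below uses NumPy with the $X$ and $y$ from the example; since $X'X = [2]$ is nonsingular, the ordinary inverse is used as the generalized inverse.

```python
import numpy as np

X = np.array([[1.0], [1.0]])   # design matrix from the example
y = np.array([6.1, 2.3])       # observed responses from the example

# X'X = [2] is nonsingular, so its ordinary inverse is its only generalized inverse.
P = X @ np.linalg.inv(X.T @ X) @ X.T
y_hat = P @ y

print(P)       # every entry equals 1/2
print(y_hat)   # both entries are approximately 4.2, the average of 6.1 and 2.3
```

Projecting onto the column space of a column of ones replaces each response by the sample mean, which is why both entries of the result coincide.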

Why is $P_X$ called an orthogonal projection matrix?

Suppose $X = \begin{bmatrix} 1 \\ 2 \end{bmatrix}$ and $y = \begin{bmatrix} 2 \\ 3/4 \end{bmatrix}$.

[Figure, built up over several slides: the point $X$; the line $\mathcal{C}(X)$ through the origin and $X$; the point $y$; the point $\hat{y}$ on $\mathcal{C}(X)$; and the vector $y - \hat{y}$.]

Why is $P_X$ called an orthogonal projection matrix?

The angle between $\hat{y}$ and $y - \hat{y}$ is $90^\circ$.

The vectors $\hat{y}$ and $y - \hat{y}$ are orthogonal.

$$\begin{aligned}
\hat{y}'(y - \hat{y}) &= \hat{y}'(y - P_X y) = \hat{y}'(I - P_X)y \\
&= (P_X y)'(I - P_X)y = y'P_X'(I - P_X)y \\
&= y'P_X(I - P_X)y = y'(P_X - P_X P_X)y \\
&= y'(P_X - P_X)y = 0.
\end{aligned}$$

Optimality of $\hat{y}$ as an Estimator of $E(y)$

$\hat{y}$ is an unbiased estimator of $E(y)$:

$$E(\hat{y}) = E(P_X y) = P_X E(y) = P_X X\beta = X\beta = E(y).$$

It can be shown that $\hat{y} = P_X y$ is the best estimator of $E(y)$ in the class of linear unbiased estimators, i.e., estimators of the form $My$ for $M$ satisfying

$$E(My) = E(y) \ \forall\, \beta \in \mathbb{R}^p \iff MX\beta = X\beta \ \forall\, \beta \in \mathbb{R}^p \iff MX = X.$$

Under the Normal Theory Gauss-Markov Linear Model, $\hat{y} = P_X y$ is best among all unbiased estimators of $E(y)$.
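Both the orthogonality argument and the condition $MX = X$ can be illustrated numerically. The sketch below uses NumPy with a made-up response vector and an arbitrary matrix `C`, neither of which comes from the slides. It also compares total variances, reading "best" in the usual minimum-variance sense; that reading is an assumption here, since the slide does not spell out the criterion.

```python
import numpy as np

X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]], dtype=float)
P = X @ np.linalg.pinv(X.T @ X) @ X.T
I = np.eye(4)

# Orthogonality of fitted values and residuals for an arbitrary (made-up) y.
y = np.array([1.0, 3.0, 2.0, 6.0])
y_hat = P @ y
resid = y - y_hat
assert abs(y_hat @ resid) < 1e-10

# Any M = P + C (I - P) satisfies M X = X, so M y is also linear and unbiased.
C = np.arange(16, dtype=float).reshape(4, 4) / 10.0   # arbitrary matrix
M = P + C @ (I - P)
assert np.allclose(M @ X, X)

# With Var(y) = sigma^2 I, Var(M y) = sigma^2 M M'. Compare the traces,
# taking sigma^2 = 1 for convenience.
total_var_P = np.trace(P @ P.T)   # equals rank(X) = 2
total_var_M = np.trace(M @ M.T)
assert total_var_M >= total_var_P
```

The comparison works because $MM' = P_X + C(I - P_X)C'$ for this family of matrices, and the second term is positive semidefinite.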

Ordinary Least Squares (OLS) Estimation of $E(y)$

OLS: Find a vector $b^* \in \mathbb{R}^p$ such that $Q(b^*) \le Q(b) \ \forall\, b \in \mathbb{R}^p$, where $Q(b) \equiv \sum_{i=1}^n (y_i - x_{(i)}' b)^2$ and $x_{(i)}'$ denotes the $i$th row of $X$.

Note that

$$Q(b) = \sum_{i=1}^n (y_i - x_{(i)}' b)^2 = (y - Xb)'(y - Xb) = \|y - Xb\|^2.$$

To minimize this sum of squares, we need to choose $b^* \in \mathbb{R}^p$ such that $Xb^*$ will be the point in $\mathcal{C}(X)$ that is closest to $y$.

In other words, we need to choose $b^*$ such that

$$Xb^* = P_X y = X(X'X)^- X'y.$$

Clearly, choosing $b^* = (X'X)^- X'y$ will work.

Ordinary Least Squares and the Normal Equations

Often calculus is used to show that $Q(b^*) \le Q(b) \ \forall\, b \in \mathbb{R}^p$ if and only if $b^*$ is a solution to the normal equations:

$$X'Xb = X'y.$$

If $X'X$ is nonsingular, multiplying both sides of the normal equations by $(X'X)^{-1}$ shows that the only solution to the normal equations is $b^* = (X'X)^{-1}X'y$.

If $X'X$ is singular, there are infinitely many solutions that include $(X'X)^- X'y$ for all choices of generalized inverse of $X'X$.

$$X'X[(X'X)^- X'y] = X'[X(X'X)^- X']y = X'P_X y = X'y$$

Henceforth, we will use $\hat{\beta}$ to denote any solution to the normal equations.
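To see the singular case in action, the sketch below uses NumPy with a rank-deficient design matrix and a made-up response vector (illustrative values, not from the slides). It constructs two different solutions of the normal equations and checks that both attain the same value of $Q$ and that randomly perturbed candidates never do better.

```python
import numpy as np

X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]], dtype=float)
y = np.array([1.0, 3.0, 2.0, 6.0])   # made-up responses
A, c = X.T @ X, X.T @ y              # normal equations: A b = c

def Q(b):
    """Sum of squared deviations ||y - X b||^2."""
    r = y - X @ b
    return float(r @ r)

# One solution from the pseudoinverse, another by adding a null-space vector of X.
b1 = np.linalg.pinv(A) @ c
null_vec = np.array([1.0, -1.0, -1.0])   # X @ null_vec = 0
b2 = b1 + 2.5 * null_vec

for b in (b1, b2):
    assert np.allclose(A @ b, c)         # solves the normal equations
assert np.isclose(Q(b1), Q(b2))          # same minimum value of Q

# No perturbed b should do better than a solution of the normal equations.
rng = np.random.default_rng(1)
for _ in range(1000):
    b = b1 + rng.normal(size=3)
    assert Q(b) >= Q(b1) - 1e-12
```

The random search is only a sanity check; the inequality $Q(b^*) \le Q(b)$ for all $b$ follows from the projection argument rather than from sampling.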

Ordinary Least Squares Estimator of $E(y) = X\beta$

We call

$$X\hat{\beta} = P_X X\hat{\beta} = X(X'X)^- X'X\hat{\beta} = X(X'X)^- X'y = P_X y = \hat{y}$$

the OLS estimator of $E(y) = X\beta$.

It might be more appropriate to use $\widehat{X\beta}$ rather than $X\hat{\beta}$ to denote our estimator because we are estimating $X\beta$ rather than pre-multiplying an estimator of $\beta$ by $X$.

As we shall soon see, it does not make sense to estimate $\beta$ when $X'X$ is singular.

However, it does make sense to estimate $E(y) = X\beta$ whether $X'X$ is singular or nonsingular.
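The contrast between $\hat{\beta}$ and $X\hat{\beta}$ can be made concrete. The sketch below uses NumPy with a rank-deficient design matrix and made-up responses (illustrative values only). It obtains one solution from `np.linalg.lstsq`, which returns the minimum-norm least-squares solution, and a second from a zero-padded block inverse used as a generalized inverse of $X'X$; the choice of these two routes is an assumption of the sketch.

```python
import numpy as np

X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]], dtype=float)
y = np.array([1.0, 3.0, 2.0, 6.0])   # made-up responses

# Minimum-norm least-squares solution; rank is the numerical rank of X.
beta_a, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)

# A second solution of the normal equations, from a different generalized inverse
# of X'X (zero-padded inverse of its lower-right 2 x 2 block).
A = X.T @ X
G = np.zeros((3, 3))
G[1:, 1:] = np.linalg.inv(A[1:, 1:])
beta_b = G @ X.T @ y                 # (0, 2, 4)

print(rank)                                  # 2, so X'X is singular
print(np.allclose(beta_a, beta_b))           # False: the coefficient vectors differ
print(X @ beta_b)                            # [2. 2. 4. 4.]
print(np.allclose(X @ beta_a, X @ beta_b))   # True: the fitted values agree
```

Different solutions of the normal equations disagree about $\beta$ but agree about $X\beta$, which is the sense in which only the latter is estimated here.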