Radial Basis Functions

Radial Basis Function Networks

Computer Science,

KAIST

3/22/2016

236875 Visual Recognition

contents

• Introduction
• Architecture
• Designing
• Learning strategies
• MLP vs RBFN


introduction

• A completely different approach from the MLP: the design of a neural network is viewed as a curve-fitting (approximation) problem in a high-dimensional space


introduction

In MLP


introduction

In RBFN


introduction

Radial Basis Function Network

• A kind of supervised neural network
• Design of the NN as a curve-fitting problem
• Learning
– find the surface in multidimensional space that best fits the training data
• Generalization
– use this multidimensional surface to interpolate the test data


introduction

Radial Basis Function Network

• Approximate a function with a linear combination of radial basis functions

$$F(x) = \sum_{i} w_i h_i(x)$$

• Each $h_i(x)$ is most often a Gaussian function


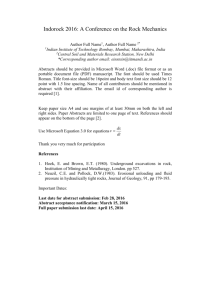

architecture

[Figure: network diagram. Inputs x1, x2, x3, ..., xn (input layer) feed the hidden units h1, h2, h3, ..., hm (hidden layer), whose outputs are combined with weights W1, W2, W3, ..., Wm into f(x) (output layer).]

architecture

Three layers

• Input layer
– Source nodes that connect the network to its environment
• Hidden layer
– Hidden units provide a set of basis functions
– High dimensionality
• Output layer
– Linear combination of hidden functions


architecture

Radial basis function

$$f(x) = \sum_{j=1}^{m} w_j h_j(x)$$

$$h_j(x) = \exp\left( -\frac{(x - c_j)^2}{r_j^2} \right)$$

where
$c_j$ is the center of a region,
$r_j$ is the width of the receptive field
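The two formulas above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function names, the toy centers, widths and weights are all made up for the example, and $(x - c_j)^2$ is read as the squared Euclidean distance for vector inputs.

```python
import numpy as np

def rbf_design_matrix(X, centers, widths):
    """Return H with H[n, j] = exp(-||x_n - c_j||^2 / r_j^2)."""
    # Squared Euclidean distance between every input and every center.
    sq_dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq_dist / widths[None, :] ** 2)

def rbfn_output(X, centers, widths, weights):
    """f(x) = sum_j w_j h_j(x), evaluated for each row x of X."""
    return rbf_design_matrix(X, centers, widths) @ weights

# Hypothetical toy network: 2 inputs, m = 2 hidden units.
X = np.array([[0.0, 0.0], [1.0, 0.0]])
centers = np.array([[0.0, 0.0], [1.0, 1.0]])   # c_j
widths = np.array([1.0, 1.0])                  # r_j
weights = np.array([2.0, -1.0])                # w_j
y = rbfn_output(X, centers, widths, weights)
```

For the first input the first hidden unit fires fully (its center coincides with the input) while the second is damped by its distance, which is the "local receptive field" behaviour the slides describe.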


designing

• Requires
– Selection of the radial basis function width parameter
– Selection of the number of radial basis neurons


designing

Selection of the RBF width parameter

• Not required for an MLP
• Smaller width
– alerts on untrained test data (inputs far from every center produce little activation)
• Larger width
– network of smaller size and faster execution


designing

Number of radial basis neurons

• Chosen by the designer
• Maximum number of neurons = number of inputs
• Minimum number of neurons = (experimentally determined)
• More neurons
– More complex, but smaller tolerance


learning strategies

• Two levels of learning
– Center and spread learning (or determination)
– Output layer weights learning
• Keep the number of parameters as small as possible
– Curse of dimensionality


learning strategies

Various learning strategies

• The strategies differ in how the centers of the radial-basis functions of the network are specified:
• Fixed centers selected at random
• Self-organized selection of centers
• Supervised selection of centers


learning strategies

Fixed centers selected at random (1)

• Fixed RBFs of the hidden units
• The locations of the centers may be chosen randomly from the training data set.
• We can use different values of centers and widths for each radial basis function -> experimentation with training data is needed.


learning strategies

Fixed centers selected at random (2)

• Only the output layer weights need to be learned.
• The output layer weights are obtained by the pseudo-inverse method.
• Main problem
– Requires a large training set for a satisfactory level of performance
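As a rough sketch of this strategy: pick the centers at random from the training inputs, build the matrix of hidden-unit activations, and solve for the output weights with the pseudo-inverse. The toy regression target, the number of centers `m` and the shared `width` below are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy 1-D regression problem (made up for this example).
X = np.linspace(0.0, 1.0, 50)[:, None]
d = np.sin(2 * np.pi * X[:, 0])          # desired outputs

# Fixed centers: m training points chosen at random, one shared width.
m, width = 10, 0.2
centers = X[rng.choice(len(X), size=m, replace=False)]

def design_matrix(X, centers, width):
    """H[n, j] = exp(-||x_n - c_j||^2 / width^2)."""
    sq_dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq_dist / width ** 2)

H = design_matrix(X, centers, width)
w = np.linalg.pinv(H) @ d                # pseudo-inverse solution for the weights
mse = np.mean((H @ w - d) ** 2)          # training error of the fitted network
```

Only `w` is learned here; the hidden layer stays exactly as it was drawn, which is why the quality of the fit depends on how well the random centers cover the data.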


learning strategies

Self-organized selection of centers (1)

• Hybrid learning
– self-organized learning to estimate the centers of the RBFs in the hidden layer
– supervised learning to estimate the linear weights of the output layer
• Self-organized learning of centers by means of clustering.
• Supervised learning of output weights by the LMS algorithm.


learning strategies

Self-organized selection of centers (2)

• k-means clustering
1. Initialization
2. Sampling
3. Similarity matching
4. Updating
5. Continuation
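The slide only names the five steps, so the sketch below fills them in with one common reading (an online, winner-take-all k-means). The learning rate `lr`, the step count and the toy two-cluster data are assumptions for illustration, not values from the source.

```python
import numpy as np

def online_kmeans(X, m, lr=0.1, n_steps=2000, seed=0):
    rng = np.random.default_rng(seed)
    # 1. Initialization: start from m distinct training points.
    centers = X[rng.choice(len(X), size=m, replace=False)].astype(float)
    for _ in range(n_steps):              # 5. Continuation: repeat steps 2-4
        # 2. Sampling: draw one training vector at random.
        x = X[rng.integers(len(X))]
        # 3. Similarity matching: index of the closest center.
        k = np.argmin(((centers - x) ** 2).sum(axis=1))
        # 4. Updating: move only the winning center toward the sample.
        centers[k] += lr * (x - centers[k])
    return centers

# Two well-separated toy clusters around (0, 0) and (5, 5).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.1, size=(100, 2)),
               rng.normal(5.0, 0.1, size=(100, 2))])
centers = online_kmeans(X, m=2)
```

The resulting `centers` would then serve as the RBF centers, with the output weights fitted afterwards by a supervised method.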


learning strategies

Supervised selection of centers

• All free parameters of the network are changed by a supervised learning process.
• Error-correction learning using the LMS algorithm.


learning strategies

Learning formula

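• Linear weights (output layer)

$$\frac{\partial E(n)}{\partial w_i(n)} = \sum_{j=1}^{N} e_j(n)\, G\left(\| x_j - t_i(n) \|_{C_i}\right)$$

$$w_i(n+1) = w_i(n) - \eta_1 \frac{\partial E(n)}{\partial w_i(n)}, \quad i = 1, 2, \ldots, M$$

• Positions of centers (hidden layer)

$$\frac{\partial E(n)}{\partial t_i(n)} = 2 w_i(n) \sum_{j=1}^{N} e_j(n)\, G'\left(\| x_j - t_i(n) \|_{C_i}\right) \Sigma_i^{-1} [x_j - t_i(n)]$$

$$t_i(n+1) = t_i(n) - \eta_2 \frac{\partial E(n)}{\partial t_i(n)}, \quad i = 1, 2, \ldots, M$$

• Spreads of centers (hidden layer)

$$\frac{\partial E(n)}{\partial \Sigma_i^{-1}(n)} = -w_i(n) \sum_{j=1}^{N} e_j(n)\, G'\left(\| x_j - t_i(n) \|_{C_i}\right) Q_{ji}(n)$$

$$Q_{ji}(n) = [x_j - t_i(n)][x_j - t_i(n)]^T$$

$$\Sigma_i^{-1}(n+1) = \Sigma_i^{-1}(n) - \eta_3 \frac{\partial E(n)}{\partial \Sigma_i^{-1}(n)}$$

Here $e_j(n)$ is the error signal for training example $j$, and $\eta_1$, $\eta_2$, $\eta_3$ are the learning-rate parameters.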
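To make the first update rule concrete, here is a small sketch of gradient descent on the linear weights only, with the centers held fixed. Several things are assumptions of the example rather than statements from the slides: G is taken to be an isotropic Gaussian, the cost is E = ½ Σ_j e_j², the error is written as e_j = F(x_j) - d_j so that the gradient takes the form Σ_j e_j G(·) used above, and the data and learning rate are invented.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(40, 1))
d = X[:, 0] ** 2                          # toy targets, made up for illustration

centers = np.linspace(-1.0, 1.0, 5)[:, None]   # t_i, held fixed in this sketch
# G[j, i] = G(||x_j - t_i||) with an assumed Gaussian of width 0.5.
G = np.exp(-((X[:, None, :] - centers[None]) ** 2).sum(axis=2) / 0.5 ** 2)

w = np.zeros(5)
eta1 = 0.005                              # learning rate (hypothetical value)
errors = []
for n in range(200):
    e = G @ w - d                         # e_j(n), one entry per training example
    errors.append(0.5 * np.sum(e ** 2))   # E(n) before this update
    grad_w = G.T @ e                      # dE/dw_i = sum_j e_j(n) G(||x_j - t_i||)
    w = w - eta1 * grad_w                 # w_i(n+1) = w_i(n) - eta1 * dE/dw_i
```

The center and spread updates follow the same pattern with their own gradients and learning rates; they are omitted here to keep the sketch short.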


MLP vs RBFN

| MLP | RBFN |
|---|---|
| Global hyperplane | Local receptive field |
| EBP | LMS |
| Local minima | Serious local minima |
| Smaller number of hidden neurons | Larger number of hidden neurons |
| Shorter computation time | Longer computation time |
| Longer learning time | Shorter learning time |


MLP vs RBFN

Approximation

• MLP : Global network

– All inputs cause an output

• RBF : Local network

– Only inputs near a receptive field produce an activation

– Can give “don’t know” output


Gaussian Mixture

• Given a finite number of data points $x_n$, $n = 1, \ldots, N$, drawn from an unknown distribution, the probability density function p(x) of this distribution can be modeled by
– Parametric methods
• Assume a known density function (e.g., Gaussian) to start with, then
• Estimate its parameters by maximum likelihood
• For a data set of N vectors $\chi = \{x_1, \ldots, x_N\}$ drawn independently from the distribution $p(x \mid \theta)$ with parameters $\theta$, the joint probability density of the whole data set $\chi$ is given by

$$p(\chi \mid \theta) = \prod_{n=1}^{N} p(x_n \mid \theta) \equiv L(\theta)$$


Gaussian Mixture

• $L(\theta)$ can be viewed as a function of the parameters $\theta$ for a fixed data set $\chi$; in other words, it is the likelihood of $\theta$ for the given $\chi$.
• The technique of maximum likelihood sets the value of $\theta$ by maximizing $L(\theta)$.
• In practice, the negative logarithm of the likelihood is often considered instead, and the minimum of E is found:

$$E = -\ln L(\theta) = -\sum_{n=1}^{N} \ln p(x_n \mid \theta)$$

• For the normal distribution, the estimated parameters can be found by analytic differentiation of E:

$$\hat{\mu} = \frac{1}{N} \sum_{n=1}^{N} x_n$$

$$\hat{\Sigma} = \frac{1}{N} \sum_{n=1}^{N} (x_n - \hat{\mu})(x_n - \hat{\mu})^T$$
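The two closed-form estimates can be checked on a tiny data set. The sketch below is only an illustration; the function name and the four sample points are made up.

```python
import numpy as np

def gaussian_ml_estimate(X):
    """Maximum-likelihood mean and covariance of the rows of X."""
    N = X.shape[0]
    mu = X.sum(axis=0) / N            # sample mean
    diff = X - mu
    Sigma = diff.T @ diff / N         # note the 1/N factor (not 1/(N-1))
    return mu, Sigma

# Four points on the corners of a square centred at (1, 1).
X = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]])
mu, Sigma = gaussian_ml_estimate(X)
```

The 1/N normalisation is what distinguishes the maximum-likelihood covariance from the unbiased sample covariance.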


Gaussian Mixture

• Non-parametric methods
– Histograms

Figure: An illustration of the histogram approach to density estimation. The set of 30 sample data points is drawn from the sum of two normal distributions, with means 0.3 and 0.8, standard deviations 0.1 and amplitudes 0.7 and 0.3 respectively. The original distribution is shown by the dashed curve, and the histogram estimates are shown by the rectangular bins. The number M of histogram bins within the given interval determines the width of the bins, which in turn controls the smoothness of the estimated density.
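A histogram estimate of this kind can be sketched as follows. The sampling setup mirrors the caption (30 points, means 0.3 and 0.8, standard deviation 0.1, amplitudes 0.7 and 0.3); the interval [0, 1], the choice M = 10 and the random seed are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
N, M = 30, 10                          # sample size and number of bins

# Draw each point from the first component with probability 0.7, else the second.
from_first = rng.random(N) < 0.7
x = np.where(from_first, rng.normal(0.3, 0.1, N), rng.normal(0.8, 0.1, N))

counts, edges = np.histogram(x, bins=M, range=(0.0, 1.0))
bin_width = edges[1] - edges[0]
density = counts / (N * bin_width)     # height of each rectangular bin
```

Rerunning with a larger or smaller `M` changes the bin width and therefore how smooth or spiky the estimate looks. Samples that fall outside the interval are simply not counted, so the estimate integrates to the fraction of points inside it.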


Gaussian Mixture

– Density estimation by basis functions, e.g., kernel functions, or k-nn

Figure: Examples of kernel and K-nn approaches to density estimation: (a) kernel function, (b) K-nn.
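The slides do not give the formulas behind the two panels, so the sketch below uses the standard one-dimensional forms as an assumption: a Gaussian kernel estimate with smoothing width `h`, and a K-nearest-neighbour estimate K / (N V), where V is the length of the interval that just reaches the K-th neighbour. The data values and function names are invented.

```python
import numpy as np

def kernel_density(x_query, data, h):
    """Gaussian kernel estimate: average of N Gaussians of width h."""
    z = (x_query[:, None] - data[None, :]) / h
    return np.exp(-0.5 * z ** 2).sum(axis=1) / (len(data) * h * np.sqrt(2 * np.pi))

def knn_density(x_query, data, k):
    """K-nn estimate: k / (N * V), V = interval length reaching the k-th neighbour."""
    dist = np.sort(np.abs(x_query[:, None] - data[None, :]), axis=1)
    volume = 2.0 * dist[:, k - 1]
    return k / (len(data) * volume)

data = np.array([0.1, 0.2, 0.4, 0.7])
grid = np.linspace(-2.0, 3.0, 2001)
p_kernel = kernel_density(grid, data, h=0.1)
p_knn = knn_density(np.array([0.3]), data, k=2)
```

In both cases every training point is needed at evaluation time, which is the cost the following discussion slide attributes to non-parametric methods.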


Gaussian Mixture

• Discussions
• The parametric approach assumes a specific form for the density function, which may be different from the true density, but
• The density function can be evaluated rapidly for new input vectors
• Non-parametric methods allow very general forms of density functions, but the number of variables in the model grows directly with the number of training data points.
• The model cannot be rapidly evaluated for new input vectors
• A mixture model combines both: (1) it is not restricted to a specific functional form, and (2) the size of the model grows only with the complexity of the problem being solved, not the size of the data set.


Gaussian Mixture

• The mixture model is a linear combination of component densities $p(x \mid j)$ in the form

$$p(x) = \sum_{j=1}^{M} p(x \mid j) P(j)$$

• $P(j)$ are the mixing parameters (the prior probability that a data point x was generated by component j), with

$$\sum_{j=1}^{M} P(j) = 1, \quad 0 \le P(j) \le 1$$

• The component density functions are normalized,

$$\int p(x \mid j)\, dx = 1,$$

and hence $p(x \mid j)$ can be regarded as a class-conditional density.


Gaussian Mixture

• The key difference between the mixture model representation and a true classification problem lies in the nature of the training data, since in this case we are not provided with any “class labels” to say which component was responsible for generating each data point.
• This is the so-called representation of “incomplete data”.
• However, the technique of mixture modeling can be applied separately to each class-conditional density $p(x \mid C_k)$ in a true classification problem.
• In this case, each class-conditional density $p(x \mid C_k)$ is represented by an independent mixture model of the form

$$p(x) = \sum_{j=1}^{M} p(x \mid j) P(j)$$


Gaussian Mixture

• By analogy with conditional densities and using Bayes’ theorem, the posterior probabilities of the component densities can be derived as

$$P(j \mid x) = \frac{p(x \mid j) P(j)}{p(x)}, \quad \text{and} \quad \sum_{j=1}^{M} P(j \mid x) = 1.$$

• The value of $P(j \mid x)$ represents the probability that component j was responsible for generating the data point x.
• Restricting attention to Gaussian distributions, each individual component density is given by

$$p(x \mid j) = \frac{1}{(2\pi\sigma_j^2)^{d/2}} \exp\left\{ -\frac{\| x - \mu_j \|^2}{2\sigma_j^2} \right\},$$

with mean $\mu_j$ and covariance matrix $\Sigma_j = \sigma_j^2 I$.
• The parameters of the Gaussian mixture can be determined by (1) maximum likelihood, (2) the EM algorithm.
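The component density and the Bayes posterior above translate directly into array code. The following is a minimal sketch for isotropic Gaussian components; the function names and the two-component toy parameters are assumptions of the example.

```python
import numpy as np

def component_densities(X, mu, sigma):
    """p(x_n | j) for isotropic Gaussians; returns an (N, M) array."""
    d = X.shape[1]
    sq_dist = ((X[:, None, :] - mu[None, :, :]) ** 2).sum(axis=2)
    norm = (2.0 * np.pi * sigma ** 2) ** (d / 2.0)
    return np.exp(-sq_dist / (2.0 * sigma ** 2)) / norm

def posteriors(X, mu, sigma, prior):
    """Return P(j | x_n) as an (N, M) array and p(x_n) as a length-N array."""
    joint = component_densities(X, mu, sigma) * prior   # p(x|j) P(j)
    p_x = joint.sum(axis=1, keepdims=True)              # mixture density p(x)
    return joint / p_x, p_x[:, 0]

mu = np.array([[0.0, 0.0], [4.0, 0.0]])    # component means (toy values)
sigma = np.array([1.0, 1.0])               # component standard deviations
prior = np.array([0.5, 0.5])               # mixing parameters P(j)
X = np.array([[0.0, 0.0], [2.0, 0.0], [4.0, 0.0]])
post, p_x = posteriors(X, mu, sigma, prior)
```

The middle point lies exactly halfway between the two means, so both components claim it equally, while each end point is attributed almost entirely to the nearer component.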


Gaussian Mixture

Figure: Representation of the mixture model in terms of a network diagram. For a component density $p(x \mid j)$, the lines connecting the inputs $x_i$ to the component $p(x \mid j)$ represent the elements $\mu_{ji}$ of the corresponding mean vector $\mu_j$ of component j.


Maximum likelihood

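• The mixture density contains adjustable parameters: $P(j)$, $\mu_j$ and $\sigma_j$, where $j = 1, \ldots, M$.
• The negative log-likelihood for the data set $\{x_n\}$ is given by:

$$E = -\ln L = -\sum_{n=1}^{N} \ln p(x_n) = -\sum_{n=1}^{N} \ln \left\{ \sum_{j=1}^{M} p(x_n \mid j) P(j) \right\}$$

• Maximizing the likelihood is then equivalent to minimizing E.
• Differentiating E with respect to
– the centres $\mu_j$:

$$\frac{\partial E}{\partial \mu_j} = \sum_{n=1}^{N} P(j \mid x_n) \frac{(\mu_j - x_n)}{\sigma_j^2}$$

– the variances $\sigma_j$:

$$\frac{\partial E}{\partial \sigma_j} = \sum_{n=1}^{N} P(j \mid x_n) \left\{ \frac{d}{\sigma_j} - \frac{\| x_n - \mu_j \|^2}{\sigma_j^3} \right\}$$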

Maximum likelihood

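• The minimization of E with respect to the mixing parameters $P(j)$ must be carried out subject to the constraints $\sum_j P(j) = 1$ and $0 \le P(j) \le 1$. This can be handled by expressing $P(j)$ in terms of a set of M auxiliary variables $\{\gamma_j\}$ such that:

$$P(j) = \frac{\exp(\gamma_j)}{\sum_{k=1}^{M} \exp(\gamma_k)}$$

• The transformation is called the softmax function, and
• to minimize E with respect to $\gamma_j$ we need the derivatives

$$\frac{\partial P(k)}{\partial \gamma_j} = \delta_{jk} P(j) - P(j) P(k),$$

• using the chain rule in the form

$$\frac{\partial E}{\partial \gamma_j} = \sum_{k=1}^{M} \frac{\partial E}{\partial P(k)} \frac{\partial P(k)}{\partial \gamma_j}$$

• then,

$$\frac{\partial E}{\partial \gamma_j} = -\sum_{n=1}^{N} \left\{ P(j \mid x_n) - P(j) \right\}$$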
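One way to gain confidence in the final gradient formula is to compare it with a finite-difference estimate. The sketch below does that; the component densities are replaced by random positive stand-in values (an assumption of the example, since only the dependence on the auxiliary variables matters here), and all names are hypothetical.

```python
import numpy as np

def softmax(gamma):
    e = np.exp(gamma - gamma.max())    # shift for numerical stability
    return e / e.sum()

def neg_log_likelihood(gamma, comp):
    """E = -sum_n ln sum_j p(x_n|j) P(j), with P = softmax(gamma)."""
    return -np.log(comp @ softmax(gamma)).sum()

def grad_gamma(gamma, comp):
    """Analytic gradient: dE/dgamma_j = -sum_n {P(j|x_n) - P(j)}."""
    prior = softmax(gamma)
    joint = comp * prior
    post = joint / joint.sum(axis=1, keepdims=True)   # P(j | x_n)
    return -(post - prior).sum(axis=0)

rng = np.random.default_rng(0)
comp = rng.uniform(0.1, 1.0, size=(20, 3))   # stand-in values for p(x_n | j)
gamma = np.array([0.2, -0.5, 1.0])

analytic = grad_gamma(gamma, comp)
eps = 1e-6
numeric = np.array([
    (neg_log_likelihood(gamma + eps * np.eye(3)[j], comp)
     - neg_log_likelihood(gamma - eps * np.eye(3)[j], comp)) / (2 * eps)
    for j in range(3)
])
```

Because the softmax is unchanged when the same constant is added to every auxiliary variable, the components of the gradient also sum to zero.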


Maximum likelihood

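• Setting $\partial E / \partial \mu_j = 0$, we obtain

$$\hat{\mu}_j = \frac{\sum_n P(j \mid x_n)\, x_n}{\sum_n P(j \mid x_n)}$$

• Setting $\partial E / \partial \sigma_j = 0$, then

$$\hat{\sigma}_j^2 = \frac{1}{d} \frac{\sum_n P(j \mid x_n)\, \| x_n - \hat{\mu}_j \|^2}{\sum_n P(j \mid x_n)}$$

• Setting $\partial E / \partial \gamma_j = 0$, then

$$\hat{P}(j) = \frac{1}{N} \sum_{n=1}^{N} P(j \mid x_n)$$

• These formulae give some insight into the maximum likelihood solution, but they do not provide a direct method for calculating the parameters, because their right-hand sides are themselves expressed in terms of $P(j \mid x)$, which depends on the parameters.
• They do suggest an iterative scheme for finding the minimum of E.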

Maximum likelihood

• We can make some initial guess for the parameters, and use these formulae to compute revised values of the parameters:
- using $\mu_j$ and $\sigma_j$ to compute $p(x_n \mid j)$, and
- using $P(j)$, $p(x_n \mid j)$, and Bayes' theorem to compute $P(j \mid x_n)$
• Then use $P(j \mid x_n)$ to estimate new parameters.
• Repeat these steps until convergence.
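The iterative scheme just described can be sketched as a single re-estimation function that is called in a loop. This is an illustrative sketch under stated assumptions: isotropic Gaussian components, a fixed number of 30 iterations instead of a convergence test, and toy data and starting values invented for the example.

```python
import numpy as np

def em_step(X, mu, sigma, prior):
    """One pass: posteriors from the current parameters, then re-estimation."""
    N, d = X.shape
    sq_dist = ((X[:, None, :] - mu[None, :, :]) ** 2).sum(axis=2)
    comp = np.exp(-sq_dist / (2 * sigma ** 2)) / (2 * np.pi * sigma ** 2) ** (d / 2)
    joint = comp * prior                     # p(x_n|j) P(j)
    p_x = joint.sum(axis=1, keepdims=True)
    post = joint / p_x                       # P(j | x_n) by Bayes' theorem
    E = -np.log(p_x).sum()                   # error for the incoming parameters
    Nj = post.sum(axis=0)
    mu_new = (post.T @ X) / Nj[:, None]
    sq_new = ((X[:, None, :] - mu_new[None, :, :]) ** 2).sum(axis=2)
    sigma_new = np.sqrt((post * sq_new).sum(axis=0) / (d * Nj))
    prior_new = Nj / N
    return mu_new, sigma_new, prior_new, E

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(150, 2)),
               rng.normal(5.0, 1.0, size=(150, 2))])
mu = np.array([[1.0, 1.0], [3.0, 3.0]])      # initial guess (toy values)
sigma = np.array([2.0, 2.0])
prior = np.array([0.5, 0.5])
history = []
for _ in range(30):
    mu, sigma, prior, E = em_step(X, mu, sigma, prior)
    history.append(E)
```

Recording E at every pass makes it easy to confirm that the error never increases from one iteration to the next.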


The EM algorithm

• The iteration process consists of (1) expectation and (2) maximization steps, thus it is called the EM algorithm.
• We can write the change in the error E in terms of the old and new parameters as:

$$E^{new} - E^{old} = -\sum_{n} \ln \left\{ \frac{p^{new}(x_n)}{p^{old}(x_n)} \right\}$$

• Using $p(x) = \sum_{j=1}^{M} p(x \mid j) P(j)$ we can rewrite this as follows

$$E^{new} - E^{old} = -\sum_{n} \ln \left\{ \sum_{j} \frac{P^{new}(j)\, p^{new}(x_n \mid j)}{p^{old}(x_n)} \frac{P^{old}(j \mid x_n)}{P^{old}(j \mid x_n)} \right\}$$

• Using Jensen’s inequality: given a set of numbers $\lambda_j \ge 0$ such that $\sum_j \lambda_j = 1$,

$$\ln \left( \sum_{j} \lambda_j x_j \right) \ge \sum_{j} \lambda_j \ln (x_j)$$
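Jensen's inequality is easy to check numerically for any particular set of weights. The snippet below is just such a spot check with randomly drawn values (the seed, the number of terms and the ranges are arbitrary choices for the example).

```python
import numpy as np

rng = np.random.default_rng(0)
lam = rng.random(5)
lam /= lam.sum()                  # lambda_j >= 0 and they sum to 1
x = rng.uniform(0.1, 10.0, 5)     # positive numbers x_j

lhs = np.log(np.sum(lam * x))     # ln of the weighted average
rhs = np.sum(lam * np.log(x))     # weighted average of the logs
```

Here `lhs` is never smaller than `rhs`, because the logarithm is concave; equality holds only when all the x_j with nonzero weight are equal.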

The EM algorithm

• Taking $P^{old}(j \mid x_n)$ as $\lambda_j$, the change in E satisfies

$$E^{new} - E^{old} \le -\sum_{n} \sum_{j} P^{old}(j \mid x_n) \ln \left\{ \frac{P^{new}(j)\, p^{new}(x_n \mid j)}{p^{old}(x_n)\, P^{old}(j \mid x_n)} \right\}$$

• Let Q denote the right-hand side,

$$Q = -\sum_{n} \sum_{j} P^{old}(j \mid x_n) \ln \left\{ \frac{P^{new}(j)\, p^{new}(x_n \mid j)}{p^{old}(x_n)\, P^{old}(j \mid x_n)} \right\},$$

then $E^{new} \le E^{old} + Q$, and $E^{old} + Q$ is an upper bound of $E^{new}$.
• As shown in the figure, minimizing Q will lead to a decrease of $E^{new}$, unless $E^{new}$ is already at a local minimum.

Figure: Schematic plot of the error function E as a function of the new value $\theta^{new}$ of one of the parameters of the mixture model. The curve $E^{old} + Q(\theta^{new})$ provides an upper bound on the value of $E(\theta^{new})$, and the EM algorithm involves finding the minimum value of this upper bound.

The EM algorithm

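• Let’s drop the terms in Q that depend only on the old parameters, and rewrite Q as

$$\tilde{Q} = -\sum_{n} \sum_{j} P^{old}(j \mid x_n) \ln \left\{ P^{new}(j)\, p^{new}(x_n \mid j) \right\}$$

• the smallest value for the upper bound is found by minimizing this quantity $\tilde{Q}$
• for the Gaussian mixture model, the quantity $\tilde{Q}$ can be written as

$$\tilde{Q} = -\sum_{n} \sum_{j} P^{old}(j \mid x_n) \left\{ \ln P^{new}(j) - d \ln \sigma_j^{new} - \frac{\| x_n - \mu_j^{new} \|^2}{2 (\sigma_j^{new})^2} \right\} + \text{const.}$$

• we can now minimize this function with respect to the ‘new’ parameters, which gives:

$$\mu_j^{new} = \frac{\sum_n P^{old}(j \mid x_n)\, x_n}{\sum_n P^{old}(j \mid x_n)}, \quad (\sigma_j^{new})^2 = \frac{1}{d} \frac{\sum_n P^{old}(j \mid x_n)\, \| x_n - \mu_j^{new} \|^2}{\sum_n P^{old}(j \mid x_n)}$$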

The EM algorithm

• For the mixing parameters $P^{new}(j)$, the constraint $\sum_j P^{new}(j) = 1$ can be taken into account by using a Lagrange multiplier $\lambda$ and minimizing the combined function

$$Z = \tilde{Q} + \lambda \left( \sum_{j} P^{new}(j) - 1 \right)$$

• Setting the derivative of Z with respect to $P^{new}(j)$ to zero,

$$0 = -\sum_{n} \frac{P^{old}(j \mid x_n)}{P^{new}(j)} + \lambda$$

• multiplying by $P^{new}(j)$, summing over j, and using $\sum_j P^{new}(j) = 1$ and $\sum_j P^{old}(j \mid x_n) = 1$, we obtain $\lambda = N$, thus

$$P^{new}(j) = \frac{1}{N} \sum_{n} P^{old}(j \mid x_n)$$

• Since only the $P^{old}(j \mid x_n)$ terms appear on the right-hand side, these results are ready for iterative computation.
• Exercise 2: shown on the nets