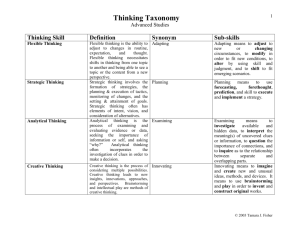

Sketching for Knowledge Capture

advertisement

Symbolic Supercomputer

for

Artificial Intelligence

and

Cognitive Science

Research

Kenneth D. Forbus

Dedre Gentner

Northwestern University

Overview

• Why symbolic supercomputing?

• Off-line experiments

– Work in progress: Large-scale corpus analysis

– Distributed experiments harness

• Interactive Cognitive Architecture experiments

– Companion Cognitive Systems (DARPA)

– Explanation Agent

Off-line experiments

• Sensitivity Analysis

– Every cognitive simulation has parameters

• Analyzing how performance depends on parameters important

for understanding models

– Sensitivity analyses can be expensive

• 1994 MAC/FAC simulations took weeks of CPU time

• 2000: 4.8 million SME runs in SEQL sensitivity analyses took

23 days (400 mhz PII), should be 4 days today.

• Corpora Analyses

– Text

– Sketches

– Problems

Larger-scale simulations

• Goal: Increased use of

automatically generated

inputs

– Reduce tailorability

– Increase # of stimuli

generated and used.

• Processes

– Analogical Encoding

– Conceptual problem

solving

Symbolic models and parallelism

• Our approach is based on Gentner’s (1983)

structure-mapping theory

– Assumes parallel processing both within modules and

between modules

– Currently emulate on serial processors

• Coarse-grained parallelism could provide

important benefits

– Continue to simulate within-module parallelism on

single CPUs

– Exploit parallel processing between modules

• Incrementally update retrievals during reasoning

• Incrementally construct generalizations during reasoning

• Reason about domain, interactions, and self in parallel

Traditional Supercomputers ineffective for

symbolic processing

• Optimized for

– Floating-point processing

– Pipelined, with vector or grid model

– okay CPUs, low RAM, fast floating point

• Symbolic processing

– Involves many pointer operations

– Some floating-point, but over irregular structures

(graphs, sparse-vectors)

– fast CPUs, high RAM, okay floating point

Optimizing a cluster for symbolic processing

1. Use the fastest CPU available.

2. Distribute the processing in large,

functionally-organized units.

– Avoid communication overhead

– Data-parallel programming style poor fit for

clusters

– Replicate knowledge base as needed

3. Organize memory to be as fast as possible.

– Maximize RAM, cache

– Avoid virtual memory

Why large memories are crucial

• If a program is going to know a lot, it has to put it

somewhere

• Example: Subset of Cyc KB contents we use

– 35,070 concepts, 8939 relations, and 3,917 functions

– 1,283,835 axioms, divided into 3,537 microtheories

– Added knowledge (DARPA HPKB, CPOF, RKF)

• Military tasks, units, equipment

• Countries, international relationships, terrorist incidents

• Qualitative models, terrain, trafficability, visual representation

conventions, developed by our group

– Takes roughly 495 MB of storage, due to indexing

overhead

• May double in size as we learn by accumulating experiences

Mk2

• Hardware: Linux

Networx

– 5 year maintenance

contract

• 67 nodes

– Dual 3.2Ghz Xeon CPUs

– 3GB RAM/node

– 80GB disk/node

• Allegro Common Lisp for

Linux

– Provides flexible

development environment

Mk2 Cluster Network

Master Host

Node 1

Node 2

…

Node 3

Backplane Subnet

Cisco PIX

Firewall/Router

Frontplane Subnet

Public

Internet

Packet

filtering,

trusted

whitelist of

hosts

Gigabit

switched

Ethernet

Node 67

One-command

provisioning, P2P data

distribution system

Qualitative reasoning for

intelligent agents

(ONR AI Program)

Situation

updates

Queries

Estimates,

warnings

Model of ongoing

situation/system

Explanation

Agent

Objective

Create science base for intelligent

software agents that can

• Reason about the physical

phenomena and systems in a

human-like way

• Extend their knowledge

incrementally, by communicating

with human collaborators in natural

language.

Technical Approach

•

New examples

Knowledge Base

(general knowledge

+ libraries of cases)

•

•

Develop qualitative reasoning techniques for

solving problems under time pressure with

partial, incomplete knowledge (“back of the

envelope” reasoning)

Explore the use of qualitative

representations as part of the semantics for a

natural language system.

Develop techniques to assimilate controlledlanguage reports to extend an agent’s

models of the physical world.

QP Theory in Natural Language Semantics

•

Idea: Qualitative Process theory can be

used as a framework for understanding

NL descriptions of physical phenomena.

– Right level of abstraction

– Consistent with human mental models

– Support for compositionality

•

Approach

– Identify syntactic patterns corresponding

to QP theory concepts via corpus

analysis

– Recast QP theory in terms of frames

– Use controlled subset of English to

simplify parsing, focus on semantics

•

(1) A pipe connects cylinder c1 to

cylinder c2.

(2) Cylinder C1 contains 5 liters of

water.

(3) Cylinder C2 contains 2 liters of

water.

(4) Water flows from cylinder C1 to

cylinder C2, because the pressure in

cylinder C1 is greater than the

pressure

in cylinder C2.

(5) The higher the pressure in cylinder

C1,

the higher the flowrate of the water.

(6) When the pressure in cylinder C2

increases, the flowrate of the water

decreases.

Current status

Type:

(isa flow3606 Translation-Flow)

Participants:

(isa c1 Container) [QuantityFrame

q3609]

(isa c2 Container) [QuantityFrame

q3603]

Conditions:

(> (pressure c1) (pressure c2))

Quantities:

[QuantityFrames q3608 and q3605]

Consequences:

(qprop (flowrate flow3606)

(pressure c1))

(qprop- (flowrate flow3606)

(pressure c2))

(I- (water c1) (flowrate

flow3606))

(I+ (water c2) (flowrate

flow3606))

– NL system translates paragraph sized

texts about physical processes into

formal representations

– Tested on a dozen examples

•

Next steps

– Expand range of texts handled

– Develop knowledge assimilation

techniques to construct knowledge bases

by reading multiple texts

C1

C2

The EA natural language system

Parser

Input

text

QRG-CE

grammar

Retrieval of

semantic

information

Word-Sense

Disambiguation

Lexicon

WSD

Data

Frame

Construction

Frame

Rules

Merge

Rules

Process Frame

Construction

Process

Rules

1.2 million

fact subset

of Cyc

KB

Only 15 out

of ~100

grammar

rules are

QP-specific

Patterns for QPspecific

constituents

QP Frames

QP Theory

constraints

Facts

Sven

Kuehne’s

Ph.D

thesis

Corpus Analysis (in progress)

•

Kuehne and Forbus (2002) used by-hand corpus

analysis to identify syntactic patterns

– Four chapters of an introductory science book, 216

sentences total

– 43% of the material in physical explanatory text could

be captured via QP theory.

•

Do the syntactic patterns that we found for

explanatory physical texts apply to everyday

texts?

– If they do, what is their coverage?

– How many more patterns are there?

Looking for quantities

• 1999 volume of the New York Times, consisting of 6.4 million sentences

• First stage used 30 word list for filtering (7.5 hours)

– ~172,000 sentences output

• Second stage used regular expressions (12 hours)

– Derived from vocabulary and syntactic patterns from previous corpus analysis.

– Result: ~19,000 sentences worth examining more closely

• Third stage uses modified version of our Explanation Agent NLU system

(less than 2 days, 17 hours, on 3 nodes)

– Previously, used Quantity and PhysicalQuantity

– Generalized to the Cyc concept ScalarInterval,

• Subsumes temperament, monetary values, feeling attributes, formality/politeness of

speech, plus others.

• 14,000+ quantities found.

– 0.2% of the sentences mention a recognizable quantity

– Lexicon limitations may have a strong effect here

• Expanding it via hand-labor (Cycorp) plus co-training is probably necessary

• e.g., “intensification of the war effort”

Qualitative changes in the New York Times

• Starting point: Corpus of 6.4 million sentences

• Filter using word list of 89 synonyms for

increases, 66 for decreases (~10 hours each)

–

–

–

–

62,117 candidate sentences mentioning decreases

195,452 candidate sentences mentioning increases

Around 4% of corpus

Contrast: 43% of the material in physical explanatory

text could be captured via QP theory.

• Larger analysis only concerns qualitative proportionalities

• Qualitative representations may play a smaller role in

understanding political texts versus physical texts.

• Genre differences: newspapers versus explanatory material

– E.g., “(X i.e., Y)” common on web, not in newspapers

Dexp: Distributed Experiment Tool

• Provides support for running distributed

experiments

– Written in Common Lisp

– Uses sandbox to avoid configuration issues

• Experimenter divides computation into work units

– Example: For N queries, find all of the solutions to

them

– Provides list of work units to dexp as a file, along with

a startup file and code tree to use

– Gets back a set of files containing the results.

dexp Architecture

• Experiment Coordinator

– Manage distribution and

execution of work units

– Collect results

Coordinator

Load

balancer (*)

• Experiment pool nodes

– Executes a work unit, returns

results.

– Execution uses sandbox for

configuration control

distributed

experiment pool

• Load Balancer

– Dynamically allocate nodes

for work units

– Will balances demands from

multiple simultaneous

experiments

n66

n15

n65

n34

n31

n33

How dexp simplifies experiments: Example

• A experiment analyzing semantic translations in

ResearchCyc KB consisted of ~1200 work units

– Each consisted of a query to see how many examples in

the KB satisfied the semantic patterns given for verbs

• With 24 nodes, most of the experiment was

completed in 34 minutes

– Estimate: 11 hours on a single CPU, if no failures

• Five work units churned for 12 hours, failed to

finish due to heap blow-out

– Most of the results were available quickly

– Much easier to diagnose what was going wrong, instead

of waiting for hours to hit a failure.

Companion Cognitive Systems

A new cognitive systems

architecture

• Robust reasoning and learning

– Companions will learn about

their domains, their users, and

themselves.

• Longevity

– Companions will operate

continuously over weeks and

months at a time.

• Interactivity

– Companions will be capable of

high bandwidth interaction with

their human partners. This

includes taking advice.

– Sketching is a major

interaction modality

Mk2

(ONR, 67 nodes)

Central hypotheses

• Analogical processing will

enable us to create systems

with human-like learning and

reasoning abilities

– Able to handle relational

information

– Able to incrementally adapt

and extend their knowledge

– Able to apply what they learn

in one domain to other

domains

• Using a cluster can make an

analogical processing

architecture fast enough to be

used in interactive systems

– Changes the kinds of

experiments that become

feasible as well.

Colossus

(DARPA, 5

nodes)

Companions as Structure-Mapping Architecture

Psychological Bets

• Ubiquitous use of

structure-mapping for

reasoning and learning

– SME for matching

– MAC/FAC for similaritybased retrieval

– SEQL for generalization

• Qualitative representations

play central role

– Part of visual structure in

spatial reasoning

– Representation of causal

knowledge and arguments

Engineering Choices

• Distributed agent

architecture using KQML

• Logic-based TMS for

working memory

• No hardwired workingmemory capacity limits

Companion Architecture Year One

Cluster

MAC/FAC

Domain

Tickler

Facilitator

Session

Reasoner

Node

Master node

User’s Windows box

Session

Manager

nuSketch System

(sKEA or nuSketch

Battlespace)

Relational

Concept

Map

Node

w/Thomas

Hinrichs, Jeff

Usher, Matt

Klenk, Greg

Dunham,

Emmett Tomai,

Tom Ouyang,

Hyeonkyeong

Kim, and Brian

Kyckelhahn

Bennett Mechanical Comprehension Test

• Widely used standardized exam

for technicians

• Used in cognitive psychology

as indicator of spatial ability

• Difficulty lies in breadth of

situations, not narrow technical

knowledge

• Best score to date: 10 correct

out of a subset of 13 BMCT

problems (77%). [P < 0.001]

Q: Which crane is more stable?

Example describes

how physical

principles apply to a

real-world situation

Analogies

with example

provides

causal

models

needed for

solution

Suggesting visual/conceptual relations by analogy

109

candidates

184

candidates

Analogical

inferences

are

surmises,

not

certainties

189

candidates

109

candidates

MAC/FAC

Knowledge Base

(including case libraries of examples)

Suggestions

Filtering

Candidate

Inference

Extraction

Visual/Conceptual Relations: Experimental Results

• Ex1: Focused Tasking

– 54 sketches (18 situations

drawn by three KEs) as case

library for BMCT

experiment

• Round Robin method: For

each sketch, remove from

library, remove its VCR

answers, generate

suggestions via analogy

– Yielded “exam” of 181

VCR questions

– Score = 74.25 (P << 10-5)

– Coverage = 54%

– Accuracy = 87%

• Ex2: Open tasking

– 10 situations selected from

BMCT problems, covering

larger range of phenomena

(e.g., “a boat moving in

water”, “a bicycle”)

– Each situation sketched by

two graduate students, told

to illustrate the principle(s)

you think are important.

• Round Robin method

– Yielded “exam” of 138

questions

– Score = 21.75 (P < 10-7)

– Coverage = 46%

– Accuracy = 57%

MAC/FAC

Domain Model

Tickler

Session

Reasoner

SEQL

Domain

Generalizer

MAC/FAC

Self Model

Tickler

nuSketch GUI

Dialogue

Manager

Executive

Headless

nuSketch

MAC/FAC

User Model

Tickler

SEQL

Self Model

Generalizer

Facilitator

Relational

Concept

Map

Interactive

Explanation

Interface

SEQL

User Model

Generalizer

Offline

Learning

Offline

Learning

Offline

Learning

Cluster

Session

Manager

User’s

Windows box

Companions

Architecture

as of 9/05

Explanation Agent Prototype

• Use Companions Architecture as infrastructure

• Incorporate other ONR advances

– EA NLU system (Sven Kuehne)

– Back of the envelope reasoning (Praveen Paritosh)

– Spatial prepositions model to link language and

sketches (Kate Lockwood)

– Analogical Problem Solver (Tom Ouyang)

• Use for cognitive simulations

– Natural language, sketching for stimulus input

Back of the Envelope Reasoning (Paritosh)

How much

oxygen is

left?

Is anyone

still alive in

there?

How long

to repair it?

Goal: Develop theories that enable software to reason quantitatively

in real-world situations

• Qualitative

Estimate parameter directly

representations

Use known value

essential for framing

if available

the problems,

Feel for numbers

supporting comparisons

Estimate based

• Analogical reasoning

on

similar

situation

Create estimation model

used to find similar

situations for estimation

Problem solving

models, construct

Find modeling strategy

qualitative

Find values for

representations via

parameters

generalization over

in model

experience

Back of the Envelope Reasoning Progress

• Implemented BoTE-Solver

– Solves 13 problems to date

• Examples

• How many K-8 school

teachers are in the USA?

• How much money is spent on

newspapers in USA per year?

• What is the total annual

gasoline consumption by cars

in US?

• What is the annual cost of

healthcare in USA?

• How much power can an adult

human generate?

• Claim: There is a core

collection of strategic

knowledge, specifically, seven

strategies that capture most of

back of the envelope

reasoning.

• Source:

– Strategies in Bote-Solver

– Analysis of all problems (n=44)

from Force and Pressure,

Rotation and Mechanics, Heat

and Astronomy from Clifford

Swartz’s Back-of-the-Envelope

Physics.

CARVE: Using analogy to generate qualitative

representations

Dimensional

partitioning

for each

quantity

(k-means

clustering)

C1

Input cases

(isa Algeria

(HighValueContextualizedFn

Area AfricanCountries)

.

.

Add these facts

to original cases

Quantity 1

Cj

Cases + structural

limit points and

distributional

partitions

S1

L1

S2

S3

L2

Structural

clustering

using SEQL

C1

Analogical Estimation

• Analogical estimator: makes guesses for a numeric

parameter based on analogy.

(GrossDomesticProduct Brazil ?x)

– The value is known.

– Find an analogous case for which value is known.

– Find anything in the KB which might be a basis for an

estimate.

• Hypothesis: Representations augmented with

symbolic representation will lead to more accurate

estimates.

Basketball Stats Domain

• Quantities (e.g., points per game, rebounds per

game, assists per game, etc.)

• Causal relationships

– Being taller helps being able to rebound and block

– Power forwards are taller and are expected to shoot,

rebound and block

– Being good at getting 3 point field goals means one is a

good shooter, so their free throw success rates will be

higher.

• Case library

– 15 players from different positions on field

– 11 facts per player

(seasonThreePointsPercent JasonKidd 0.404)

(qprop seasonThreePointPercent seasonFreeThrowPercent

BasketballPlayers)

Results: Errors

80

70

60

50

Enriched mean % error

40

Raw mean % error

30

20

10

Al

l

eb

ou

nd

Th

s

re

e

po

in

t%

R

Po

in

ts

s

Fr

e

e

th

r

ow

sis

ts

As

H

ei

gh

t

0

SpaceCase: Motivation

• Multimodal interfaces

potentially useful for

military needs

– Language plus diagrams,

other spatial displays

• Software’s notion of

similarity needs to be like

their human partners

– Including visual properties

– Including retrieval, for

shared history

– Including shared language

• Recent research points to

role of non-geometric

properties in spatial

preposition use

– Coventry 1994; Coventry &

Prat-Sala, 1999; Herskovitz,

1986; Feist & Gentner,

2003; Garrod et al., 1999;

Coventry & Garrod, 2004;

Carlson & van der Zee,

2005

• Spatial language can affect

retrieval of pictures

– Feist and Gentner, 2001

Lockwood, K., Forbus, K., and Usher, J.

SpaceCase: a Model of Spatial Preposition Use

Proceedings of CogSci-05, to appear

sKEA Sketching Interface

sKEA Sketching Interface

firefly -> insect ->

animate

functions as weak

container

firefly

dish

ground_supports_

figure

medium_

curvature

Sketch corpus crucial for model development

• Building a corpus of sketches

– Gathering library of examples from literature

– Use sKEA to capture them in machine-understandable

form

– Estimate: ~ 200 sketches will be needed to cover the set

of prepositions and phenomena to be modeled

• Cluster will be used for

– Regression testing

– Sensitivity analyses: How does performance depend on

parameter values?

Problem-solving experiments

• Starting point: Pisan’s (1998) Thermodynamics

Problem Solver

– Solved 80% of the problems typically found in first four chapters

in engineering thermodynamics textbooks

– Used graphs and property tables

– Produced human-like solutions

• Generalize: Analogical Problem Solver

– Focus on conceptual comprehension questions

– Declarative strategies now include analogical processing

• when/what to retrieve, what candidate inferences to use, level of

effort in testing

– Experiment in progress: Can strategy variations explain

novice/expert differences?

• Pilot results promising, should have full data by end of summer.

Questions?

Technology Transfer

The Whodunit Problem

• Goal: Generate plausible

hypotheses about who

performed an event.

• Formal version: Given

some event E whose

perpetrator is unknown,

construct a small set of

hypotheses {Hp} about

the identity of the

perpetrator of E.

– Include explanations as to

why these are the likely

ones

– Able to explain on demand

why others are less likely.

Assumptions & Limitations

• Formal inputs. Structured

descriptions, including

relational information,

expressed in CycL.

• Accurate inputs.

• One-shot operation. No

incremental updates.

• Passive operation.

Doesn’t generate

differential diagnosis

information

Method 1: Closest Exemplar

Memory pool

Probe

Output =

memory item

+ SME results

CVmatch

CVmatch

SME

SME

CVmatch

1.

2.

SME

CVmatch

Cheap, fast, non-structural

MAC/FAC models similarity based

retrieval

•

•

Scales to large memories

Accounts for psychological phenomena

•

Memory pool = All cases concerning

the 98 perpetrators, minus the test set.

3.

4.

Use MAC/FAC to

retrieve events similar to

E.

For each similar event,

remove it if it doesn't

include a candidate

inference about the

perpetrator.

Iterate until enough

hypotheses are generated.

(Optional) Generate

explanations and

expectations by

analyzing the similarities

and differences between

each Hp and E.

Method 2: Closest Generalization

New

Example

SEQL

SME

Generalizations

Exemplars

…

SEQL models generalization

•

•

•

Assimilate new exemplars into a

generalization when close enough.

Models psychological data, used to

made successful predictions of

human behavior.

Recent extension: use probability to

improve noise immunity

•

Preprocessing:

1.

Partition case library

according to perpetrator.

Use SEQL to construct

generalizations for each

perpetrator.

2.

•

Generating

hypotheses:

1.

Given an incident E, pick

the n closest

generalizations, as

determined by SME's

structural evaluation score.

Whodunit Experiment

• Used 3,379 terrorist

incidents from

Cycorp’s Terrorist

knowledge base

– Between 6 and 158

propositions per case,

20 on average

• 98 perpetrators

involved in at least 3

incidents in the TKB

– Pick one incident at

random for test set,

remove perpetrator

• Elaborate via

inference

– Add attributes (e.g.,

(CityInCountryFn

Italy)) using genls

hierarchy

• Three performance

levels:

– Best bet

– Top 3: Best plus

plausible alternatives

– Top Ten list: Foci for

additional collection,

analysis

Whodunit Example

Whodunit Results

60%

50%

Pure

retrieval

surprisingly

good

Correctness

Adding

probability

yielded 5%

improvement

40%

Top-10

Top-3

Correct

30%

20%

10%

0%

MAC/FAC

SEQL

Symbolic

generalization

adds valve for

weaker criteria

SEQL+P

Background Material

Basketball Stats Estimation by Analogy

Given: An estimation problem

(seasonThreePointsPercent JasonKidd ?x) and

a case library

Find the most similar player to JasonKidd in the

case library for whom we know the value for

seasonThreePointsPercent.

Use that as an estimate for the given problem.

Compare accuracy over the initial case library, and

the case library enriched with representations from

CARVE.

SpaceCase

sKEA

input stimulus

KB

Evidence

Rules

ink

processing

routines

Bayesian

updating

algorithm

Spatial Preposition Label

Performance

• Labeling task (Feist &

Gentner, 2003)

– <figure> is in/on the

<ground>

• 36 total stimuli

– {firefly, coin}

– {bowl, dish, plate, slab,

rock, hand}

– {low, medium, high}

• Consistent on all 36

trials for values of

parameters given

Modeling a spatial language/memory interaction

• Feist and Gentner (2001)

• Use spatial preposition when

showing someone a situation

“On”

• Use SpaceCase to confirm

unsuitability of original stimuli

for ON

initial sketch

0.363

plus variant

0.859

minus variant

0.2428

• Retrieval via MAC/FAC

• Given novel stimulus, they are

more likely to claim they have

seen it before

– Initial sketch plus variants

stored as memory

– Initial as probe retrieves

itself

– Initial plus relation for

spatial preposition retrieves

plus variant

SpaceCase next steps

• Expand model

– more prepositions

– more complex input

• Cross-linguistic modeling

auf

an