Part 4 - Computer Science and Engineering

advertisement

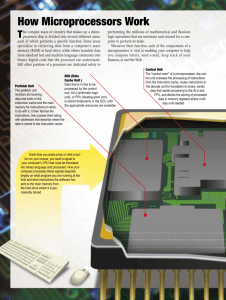

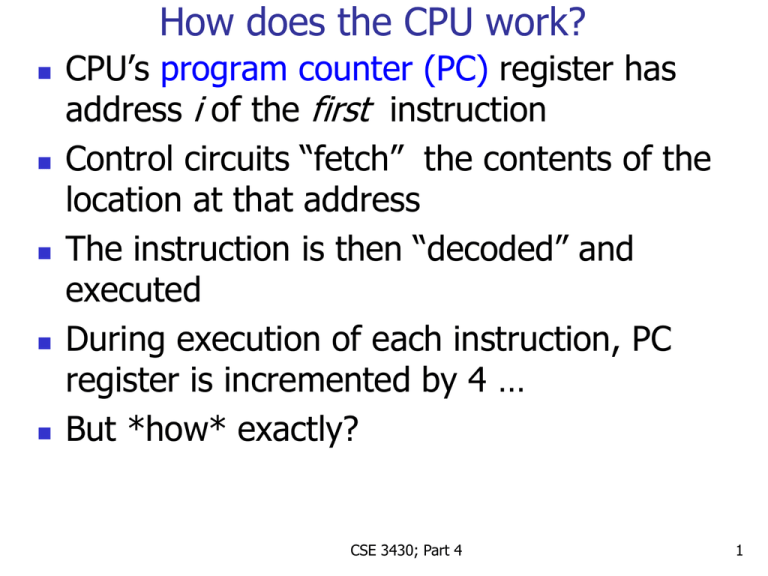

How does the CPU work? CPU’s program counter (PC) register has address i of the first instruction Control circuits “fetch” the contents of the location at that address The instruction is then “decoded” and executed During execution of each instruction, PC register is incremented by 4 … But *how* exactly? CSE 3430; Part 4 1 A simple (accumulator) machine 8-bit words, 5-bit address, 3-bit op-code Instructions and op-codes: ADD 000 SUB 001 MPY 010 DIV 011 LOAD 100 STORE 101 In m.l., address is in bits 0 – 4, op-code in 5 – 7 Example code for C = A*B + C*D A in word at 20, B in 21, C in 22, D in 23; word at 30 (E) is used for temporary storage 100 10100 LOAD A 010 10111 MPY D 010 10101 MPY B 000 11110 ADD E 101 11110 STORE E 101 10110 STORE C 2 100 10110 LOAD CSE C 3430; Part 4 Structure of simple CPU Addr Decode Timing and Control INC 2→1 MUX OP IR PC Bus ACC 2→1 MUX MAR MDR ALU CSE 3430; Part 4 3 Structure of simple CPU Bus This bus is internal to the CPU. There is a separate bus from the memory to MAR and MBR CSE 3430; Part 4 4 MAR is memory address register MBR is memory buffer register To read a word in memory, the CPU must put the address of the word in memory and wait for a certain no. of clock cycles; at the end of that, the value at that memory address will appear in MBR Bus To write a word to memory, the CPU must put the address of the word in memory and the value to be written in MBR; set the “write enable” bit; wait for a certain no. of clock cycles CSE 3430; Part 4 MAR MDR 5 INC 2→1 MUX PC PC is the program counter. INC is a simple circuit whose output is one greater than its input. The MUX is a multiplexor which will output one of its two inputs, depending on the value of a control signal (not shown); this allows for normal control flow and branches Bus MAR CSE 3430; Part 4 MDR 6 INC 2→1 MUX PC ALU is the arithmetic/logic unit and does all the math ACC is the accumulator It can be loaded with a value from the ALU or the bus; the value in it can be used as an input to ALU or copied into MBR (why? when?) Bus ACC 2→1 MUX MAR MDR ALU CSE 3430; Part 4 7 IR (instruction reg.) contains the instruction being executed. The decoder splits it into the address and operation to be performed. Timing and control generates the correct control signals and, in effect, runs the whole show Addr Decode Timing and Control INC 2→1 MUX OP IR PC Bus ACC 2→1 MUX MAR MDR ALU CSE 3430; Part 4 8 “Timing and control” generates a set of “control signals” that essentially control what happens. Key inputs to TAC: clock, condition signals (from PS) Key idea: At each clock cycle, current state is Condition updated to the signals appropriate next state and a new set of ctrls signals generated Number 0 1 2 3 4 5 6 7 Operation Acc → bus load Acc PC → bus load PC load IR load MAR MDR → bus load MDR Next-state Current-state Clock (register) Control Number 8 9 10 11 12 13 14 Operation ALU → Acc INC → PC ALU operation ALU operation Addr → bus CS R/W … Control signals Finally: How the CPU works 0 PC → bus load MAR INC → PC load PC Yes States 0,1,2: Fetch Rest: Decode, execute OP=store No 6 CS, R/W 4 ACC → bus load MDR 1 CS, R/W 5 2 CS Yes OP=load No MDR → bus load IR 3 Addr → bus load MAR 7 MDR → bus load ACC 8 MDR → bus ALU → ACC ALU op load ACC 10 “Timing and control” generates a set of “control signals” that essentially control what happens. Key inputs to TAC: clock, condition signals (from PS) Key idea: At each clock cycle, current state is Condition updated to the signals appropriate next state and a new set of ctrls signals generated Number 0 1 2 3 4 5 6 7 Operation Acc → bus load Acc PC → bus load PC load IR load MAR MDR → bus load MDR Next-state Current-state Clock (register) Control Number 8 9 10 11 12 13 14 Operation ALU → Acc INC → PC ALU operation ALU operation Addr → bus CS R/W … Control signals What if we want to handle interrupts? Ans: The interrupt line would feed into Next-state Improving Performance Problem: Speed mismatch between CPU and memory Memory *can* be fast but then it becomes expensive Solution: Memory hierarchy: (cheaper, slower as you go down list) CPU Registers Cache (Level 1, Level 2, …) Main memory (may be more than one kind) Disk/SSD, … Flash cards, tapes etc. CSE 3430; Part 4 12 Memory hierachy (contd) Key requirement: Data that CPU needs next must be as high up in the hierarchy as possible Important concept: Locality of reference Temporal locality: A recently executed instruction is likely to be executed again soon Spatial locality: Instructions near a recently executed instruction are likely to be executed soon CSE 3430; Part 4 13 Cache and Main Memory CPU Main Memory Cache When a Read is received and the word is not in the cache, a block of words containing that word is transferred to cache (one word at a time) Locality of ref. means future requests can probably be met by the cache CPU doesn’t worry about these details … the circuitry in the cache handles them CSE 3430; Part 4 14 Cache structure & operation Organized as a collection of blocks Ex: Cache of 128 blocks, 16 words/block Mem: 64K words, 16 bit addr: 4K blocks Direct-mapping approach: Block j of mem. → Cache bl. j mod 128 So blocks 0, 128, 256, … of main mem. will all map to cache block 0; etc. Mem. addr.: 5 tag bits+7 block bits+4 word Block bits → the relevant cache block Word bits → which word in block Tag bits → Which of mem. block 0, 128, …? CSE 3430; Part 4 15 Cache structure & op (contd) When a block (16 words) of memory is stored in the corresponding cache block, also store the tag bits of that mem. block When CPU asks for a word of memory: Cache compares the leftmost 5 bits of addr. with tag bit stored with the corresponding cache block; (“corresponding”?) If it matches, there is a cache “hit”, and we can use copy in cache CSE 3430; Part 4 16 Cache structure & op (contd) But what if it is a write op? Need to update copy in main mem. as well: Write-through protocol: Update both the value in cache and in memory Update only the cache location but set cache block’s dirty bit to 1 CSE 3430; Part 4 17 Cache structure & op (contd) What if the word is not in the cache? Need to read the entire block of memory that contains that word, i.e., based on first 12 bits of address, into the right cache block But first: check if dirty bit of that cache block is 1 and, if so, write it back to memory before doing the above This can lead to poor performance -depending on the degree of spatial/temporal locality of reference CSE 3430; Part 4 18 Cache structure & op (contd) Associative-mapping approach: A main-memory block may be placed in any cache block Each cache block has a *12 bit* tag that identifies which mem. block is currently mapped to it When an address is received from CPU, the cache compares the first 12 bits with the tag of each cache block to see if there is a match That can be done quite fast (in parallel) CSE 3430; Part 4 19 Cache structure & op (contd) For anything other than direct-mapping need suitable replacement algorithm Widely used: replace least recently used (LRU) block Surprising: Random replacement does very well Not so surprising: even small caches are useful CSE 3430; Part 4 20 Cache structure & op (contd) Good measure of effectiveness: hit rate and miss rate These can depend on the program being executed Compilers try to produce code to ensure high hit rates Cache structure can also be tweaked: e.g., have separate “code cache” and “data cache” CSE 3430; Part 4 21 Improving performance: Pipelining Key idea: Simultaneously perform different stages of consecutive instructions: F(etch), D(ecode), E(xec), W(rite) I1 I2 I3 I4 1 2 3 4 5 6 F1 D1 E1 W1 F2 D2 E2 W2 F3 D3 E3 W3 F4 D4 E4 7 W4 • Need buffers between stages CSE 3430; Part 4 22 Pipelining (contd) Need buffers between stages Fetch Instruction B1 Decode ins & Fetch operands B2 Execute operation B3 Write results During clock cycle 4: Buffer B1 holds I3 which was fetched in cycle 3 and is being decoded B2 holds both the source operands for I2 and specification of operation to be performed – produced by decoder in cycle 3; B2 also holds info that will be needed for the write step (in next cycle) of I2 B3 holds results produced by exec unit and the destination info for I1 CSE 3430; Part 4 23 Potential problems in pipelining Mismatched stages: Different stages require different no. of cycles to finish e.g.: instruction fetch Cache can help address this But what if previous instruction is a branch? That is an instruction hazard Especially problematic for conditional branches Various solutions in both hardware and software (in compilers) have been tried CSE 3430; Part 4 24 Potential problems in pipelining (contd) Data hazards: if the data needed to execute an instruction is not yet available Maybe data needed has to be computed by previous instruction … can happen even in the case of register operands (how?) Again various solutions have been proposed for dealing with data hazards Important concept: data cache vs. instruction cache Also multiple levels of cache (part of mem. hierarchy) CSE 3430; Part 4 25 Improving perf.: multiple processors SIMD (single-instruction, multiple-data): One of the earliest: Vector/array processors … … … … … Control Processor … Broadcast instructions … Very useful for matrix computations; likely to be of value in data-analytics applications; GPUs use similar architecture 26 Improving perf.: multiple processors MIMD: Multiple-instruction, multiple-data i.e., different CPUs executing different instructions on different sets of data Tends to be complex with questions such as how to organize memory Common memory accessible to all processors? (slow) Copy of portion of memory in cache of each processor? (fast but cache coherence?) OS plays an important role in managing such systems Ignoring remaining slides CSE 3430; Part 4 27 Interrupts? Interrupt controller Interrupt controller CPU Interrupt-in-service Interrupt mask Device 0 Device 1 Device 2 Device 3 28