The Web

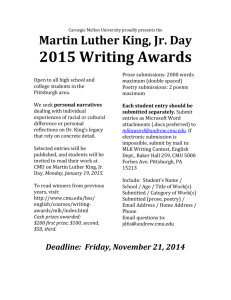


Aditya Akella (aditya@cs.cmu.edu)
18 Apr, 2002

In Today's Lecture…
• Web caches
• Content Distribution Networks
• Peer-to-Peer Networks

In Today's Lecture…
• Web caches
  • Caching Proxies
  • Cache hierarchies, ICP
  • Towards Optimal Caches
  • Discussion
• Content Distribution Networks
• Peer-to-Peer Networks

Web Caching
• Why cache HTTP objects?
  • Reduce client response time
    • Serve locally
  • Reduce network bandwidth usage
    • Wide area vs. local area use

Caching Web Proxies
• Sharing the Internet connection
  • Small businesses – network connectivity can be shared between many workstations
• Filtering
  • Proxy is the only host that can access the Internet
  • Security – administrators make sure that it is secure
  • Policy – filter requests
• Caching
  • Provides a centralized coordination point to share information across clients

Caching Proxies – Sources for Misses
• Capacity
  • Can only cache a limited set of objects
  • Cache typically on disk
• Compulsory retrievals
  • First-time access to a document
• Non-cacheable documents
  • CGI scripts
  • Personalized documents (cookies, etc.)
  • Encrypted data (SSL)
• Consistency
  • Document has been updated/expired before reuse

Cache Hierarchies
• Population for a single proxy is limited
  • Performance, administration, policy, etc.
• Use a hierarchy to scale a proxy beyond that limited population
• Why is a hierarchy scalable?
  • Larger population = higher hit rate
  • Larger effective cache size
• But caches need to talk to each other…
  • Internet Cache Protocol (ICP)

ICP
• Simple protocol to query another cache for content
• Uses UDP to avoid overhead
• ICP message contents
  • Type – query, hit, hit_obj, miss
  • Other – identifier, URL, version, sender address

Squid Cache ICP Use
• Upon a query for an object that is not in the cache
  • Sends ICP_Query to each peer and parent
  • Sets a timer to a short period (default 2 sec)
• Peer caches process queries and return either ICP_Hit or ICP_Miss
• Proxy begins transfer upon reception of ICP_Hit
• Upon timer expiration, proxy requests object from the closest (RTT) parent proxy
  • Better – direct to the parent that is towards the origin server

[Figure: animation of a Squid hierarchy of one parent and three child caches serving a client. In the first sequence, the child receiving the client's Web page request sends two ICP queries, both answered with ICP MISS, and the Web page request then goes to the parent. In the second sequence, three ICP queries return two ICP HITs and one ICP MISS before the Web page request is forwarded.]

Optimal Cache Mesh Behavior
• Minimize number of hops through mesh
  • Each hop adds significant latency
    • ICP hops can cost a 2 sec timeout each!
    • Especially painful for misses
• Share across many users and scale to many caches
  • ICP does not scale to a large number of peers
• Cache and fetch data close to clients

Hinting
• Have proxies store content as well as metadata about contents of other proxies (hints)
  • Minimizes number of hops through mesh
• Size of hint cache is a concern – size of key vs. size of document
• Having hints can help consistency
  • Makes it possible to push updated documents or invalidations to other caches
• How to keep hints up-to-date?
  • Not critical – an incorrect hint results in extra lookups, not incorrect behavior
  • Can send updates to peers at regular intervals

An Example: Summary Cache
• Typical cache has 8GB of space and 8KB objects
  • => 1M objects
  • Using 16-byte hashes (MD5) => 16MB per peer
  • Solution: Bloom filters
• Bloom filters help save space
  • Proxy contents summarized as an M-bit value
  • Each page stored contributes k hash values in range [1..M]
  • Bits for the k hashes are set in the summary
  • Check for page => if all of the page's k hash bits are set in the summary, the proxy likely has the page
• Delayed propagation of hints
  • Waits until a threshold percentage of cached documents are not in the summary

Leases
• The only consistency mechanism in HTTP is for clients to poll the server for updates
• Should HTTP also support invalidations?
  • Problem: server would have to keep track of many, many clients who may have the document
• Possible solution: leases
  • Leases – server promises to provide invalidations for a particular lease duration
  • Server can adapt time/duration of lease as needed
    • To number of clients, frequency of page change…

How Useful Can Caching Be?
• Over 50% of all HTTP objects are uncacheable – why?
• Many issues, not easily solvable
  • Dynamic data – stock prices, scores, web cams
  • CGI scripts – results based on passed parameters
  • SSL – encrypted data is not cacheable
    • Most web clients don't handle mixed pages well, so many generic objects are transferred with SSL
  • Hit metering – owner wants to measure # of hits for revenue, etc.
• Are proxies the best solution?

Web Proxies – Problems
• Implementation issues
  • Aborted transfers
  • Cache size settings
• What if clients did what proxies already do?
  • Utility of proxy caching could be marginal
• Hierarchies – no longer useful
  • Faster processors => proxies can handle large populations
  • Hierarchy useful only for policy, administration

In Today's Lecture…
• Web caches
• Content Distribution Networks
  • Server Selection
  • Akamai
• Peer-to-Peer Networks

CDN
• Replicate content on many servers
• Help serve data from the nearest replica
• Challenges – to name a few…
  • How to find replicated content
  • How to choose among known replicas
  • How to direct clients towards the best replica
    • DNS, HTTP redirect (e.g., 302) response, anycast, etc.
  • How to replicate content
  • Where to replicate content

Server Selection
• Service is replicated in many places in the network
• Which server to pick?
  • Lowest load – to balance load on servers
  • Best performance – to improve client performance
    • Based on geography? RTT? Throughput?
  • Any alive node – to provide fault tolerance

Redirecting Clients
• How to direct clients to a particular server?
  • As part of routing – anycast, cluster load balancing
  • As part of application – HTTP redirect
  • As part of naming – DNS

Redirection – Routing Based
• Anycast
  • Give service a single IP address
  • Each node implementing the service advertises a route to the address
  • Packets get routed from client to the “closest” service node
    • Closest is defined by routing metrics
    • May not mirror performance/application needs
  • What about the stability of routes?
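The Server Selection criteria can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular CDN selects servers. The `Replica` record, its `load` and `rtt_ms` fields, and the policy names are hypothetical. The sketch also assumes load and RTT measurements are already available, even though collecting them is the hard part.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Replica:
    name: str
    alive: bool
    load: float    # hypothetical load metric, e.g. fraction of capacity in use
    rtt_ms: float  # hypothetical measured round-trip time from the client


def select_server(replicas: List[Replica], policy: str = "rtt") -> Replica:
    """Pick a replica using one of the criteria from the Server Selection slide."""
    alive = [r for r in replicas if r.alive]  # never direct clients to a dead node
    if not alive:
        raise LookupError("no live replica available")
    if policy == "load":   # lowest load: balance load across servers
        return min(alive, key=lambda r: r.load)
    if policy == "rtt":    # best performance, approximated here by lowest RTT
        return min(alive, key=lambda r: r.rtt_ms)
    if policy == "any":    # any alive node: fault tolerance only
        return alive[0]
    raise ValueError(f"unknown policy: {policy!r}")
```

Consider replicas `a` (load 0.9, 10 ms), `b` (load 0.2, 50 ms) and a dead `c` (5 ms). The "rtt" policy picks `a`, "load" picks `b`, and `c` is never chosen. The policies pull in different directions, since the nearest replica may also be the busiest. A practical selector would likely need to weigh them together.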
Redirection – Routing Based
• Cluster load balancing
  • Router in front of a cluster of nodes directs packets to a server
  • Must be done on a connection-by-connection basis
    • Forces router to keep per-connection state
  • How to choose server
    • Easiest to decide based on arrival of first packet in exchange
      • Primarily based on local load
    • Can be based on later packets (e.g. HTTP GET request) but makes system more complex

Redirection – Application Based
• HTTP supports a simple way to indicate that a Web page has moved
• Server gets GET request from client
  • Decides which server is best suited for the particular client and object
  • Returns an HTTP redirect to that server
• Can make an informed, application-specific decision
• May introduce additional overhead
  • Multiple connection setup, name lookups, etc.
• While a good solution in general, HTTP redirect has some design flaws – especially with current browsers

Redirection – Naming Based
• Client does name lookup for service
• Name server chooses appropriate server address
• What information can it base its decision on?
  • Server load/location – must be collected
  • Name service client
    • Typically the local name server for the client
• Round-robin
  • Randomly choose replica
  • Avoid hot-spots

Redirection – Naming Based
• Predicted application performance
  • How to predict?
  • Only have limited info at name resolution
• Multiple techniques
  • Static metrics to get a coarse-grained answer
  • Current performance among a smaller group
• How does this affect caching?
  • Typically want a low TTL to adapt to load changes
  • What do the first and subsequent lookups do?

How Akamai Works
• Clients fetch HTML document from primary server
  • E.g. fetch index.html from cnn.com
• URLs for replicated content are replaced in HTML
  • E.g. <img src="http://cnn.com/af/x.gif"> replaced with <img src="http://a73.g.akamaitech.net/7/23/cnn.com/af/x.gif">
• Client is forced to resolve aXYZ.g.akamaitech.net hostname

How Akamai Works
• How is content replicated?
• Akamai only replicates static content
• Modified name contains original file
• Akamai server is asked for content
  • First checks local cache
  • If not in cache, requests file from primary server and caches file

How Akamai Works
• Root server gives NS record for akamai.net
• Akamai.net name server returns NS record for g.akamaitech.net
  • Name server chosen to be in region of client's name server
  • TTL is large
• G.akamaitech.net name server chooses server in region
  • Should try to choose server that has file in cache – how to choose?
  • Uses aXYZ name (consistent hash)
  • TTL is small

How Akamai Works
[Figure: numbered message exchange (1–12) among the end-user, cnn.com (content provider), the DNS root server, the Akamai high-level DNS server, the Akamai low-level DNS server, and the closest Akamai server. The end-user gets index.html from cnn.com and resolves the Akamai hostname through the DNS root, high-level, and low-level Akamai DNS servers. It then sends "Get /cnn.com/foo.jpg" to the closest Akamai server, and an Akamai server gets foo.jpg from cnn.com.]

Akamai – Subsequent Requests
[Figure: the same participants, but only steps 1–2 (Get index.html from cnn.com), 7–8 (Akamai low-level DNS server), and 9–10 (Get /cnn.com/foo.jpg from the closest Akamai server) appear; the root and high-level DNS lookups are not repeated.]

In Today's Lecture…
• Web caches
• Content Distribution Networks
• Peer-to-Peer Networks
  • Overview
  • Napster, Gnutella, Freenet

Peer-to-Peer Networks
• Each node in the network has identical capabilities
  • No notion of a server or a client
• Typically each member stores content that it desires
• Basically a replication system for files
  • Have multiple accessible sources of data
• Peer-to-peer networks allow files to be anywhere
  • Searching is the key challenge
  • Dynamic member list makes it more difficult

The Lookup Problem
[Figure: a publisher at N1 stores Key="title", Value=MP3 data…; a client elsewhere on the Internet, among nodes N1–N6, issues Lookup("title") and must find which node holds it.]

Centralized Lookup (Napster)
[Figure: the publisher at N4 registers SetLoc("title", N4) with a central DB; the client's Lookup("title") goes to the DB.]
Simple, but O(N) state and a single point of failure

Flooded Queries (Gnutella)
[Figure: the client's Lookup("title") is flooded from node to node (N1–N9) until it reaches the publisher at N4, which holds Key="title", Value=MP3 data….]
Robust, but worst case O(N) messages per lookup

Routed Queries (Freenet)
[Figure: the client's Lookup("title") is routed hop by hop across nodes N1–N9 toward the publisher holding Key="title", Value=MP3 data….]

Napster
• Simple centralized scheme motivated by ability to sell/control
• How to find a file:
  • On startup, client contacts central server and reports list of files
  • Query the index system, which returns a machine that stores the required file
    • Ideally this is the closest/least-loaded machine
  • Fetch the file directly from the peer
• Advantages:
  • Simplicity; easy to implement sophisticated search engines on top of the index system
• Disadvantages:
  • Robustness, scalability

Gnutella
• Distribute file location
• On startup, client contacts any servent (server + client) in network
  • Servent interconnection used to forward control (queries, hits, etc.)
• Idea: multicast the request
• How to find a file:
  • Send request to all neighbors
  • Neighbors recursively multicast the request
  • Eventually a machine that has the file receives the request, and it sends back the answer
• Advantages:
  • Totally decentralized, highly robust
• Disadvantages:
  • Not scalable

Gnutella Details
• Basic message header
  • Unique ID, TTL, Hops
• Message types
  • Ping – probes network for other servents
  • Pong – response to ping, contains IP addr, # of files, # of Kbytes shared
  • Query – search criteria + speed requirement of servent
  • QueryHit – successful response to Query, contains addr + port to transfer from, speed of servent, number of hits, hit results, servent ID
  • Push – request to servent ID to initiate connection, used to traverse firewalls
• Pings and Queries are flooded
• QueryHit, Pong, and Push follow the reverse path of the previous message

Gnutella: Example
Assume: m1's neighbors are m2 and m3; m3's neighbors are m4 and m5; …
[Figure: nodes m1–m6 store files A–F; the query "E?" is flooded from m1 to its neighbors and onward until it reaches the node storing E.]

Freenet
• Additional goals beyond file location/replication:
  • Provide publisher anonymity, security
  • Resistant to attacks
• Files are stored according to associated key
  • Core idea: try to cluster information about similar keys
• Messages
  • Random 64-bit ID used for loop detection
  • TTL
    • TTL 1 messages are forwarded with finite probability – helps anonymity
  • Depth counter
    • Opposite of TTL – incremented with each hop
    • Depth counter initialized to small random value

Data Structure
• Each node maintains a common stack with entries of the form: id | next_hop | file
  • id – file identifier
  • next_hop – another node that stores the file id
  • file – file identified by id being stored on the local node
• Forwarding:
  • Each message contains the file id it is referring to
  • If file id stored locally, then stop
    • Forwards data back to upstream requestor
    • Requestor adds file to cache, adds entry in routing table
  • If not, search for the “closest” id in the stack, and forward the message to the corresponding next_hop

Query Example
[Figure: query(10) forwarded hop by hop (steps 1–5, plus a 4') across nodes n1–n5, each shown with its routing table of (id, next_hop, file) entries; the entry 10 | n5 | f10 marks file 10 as stored at n5.]
Note: doesn't show file caching on the reverse path

Freenet Requests
• Any node forwarding a reply may change the source of the reply (to itself or any other node)
  • Helps anonymity
• Each query is associated with a TTL that is decremented each time the query message is forwarded; to obscure distance to originator:
  • TTL can be initialized to a random value within some bounds
  • When TTL=1, the query is forwarded with a finite probability
• Each node maintains state for all outstanding queries that have traversed it – helps to avoid cycles
• If data is not found, failure is reported back
  • Requestor then tries next closest match in routing table

Freenet Request
[Figure: numbered (1–12) Data Request, Data Reply, and Request Failed messages exchanged among nodes A–F.]

Freenet Search Features
• Nodes tend to specialize in searching for similar keys over time
  • Gets queries from other nodes for similar keys
• Nodes store similar keys over time
  • Caching of files as a result of successful queries
• Similarity of keys does not reflect similarity of files
• Routing does not reflect network topology

Freenet File Creation
• Key for file generated and searched – helps identify collision
  • Not found (“All clear”) result indicates success
  • Source of insert message can be changed by any forwarding node
• Creation mechanism adds files/info to locations with similar keys
• New nodes are discovered through file creation
• Erroneous/malicious inserts propagate original file further
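The Freenet forwarding rules can be sketched as a depth-first search. A node serves the request locally if it can. Otherwise it tries its next hops in order of key closeness. A failure is reported back so the requestor can try the next closest match, and the file is cached on the reverse path. This is a simplified sketch, not Freenet's actual implementation. The integer keys, the closeness measure (absolute difference), `Node`, `lookup` and `example_network` are all hypothetical. A shared `visited` set stands in for per-node state about outstanding queries. Probabilistic forwarding at TTL=1, the depth counter and reply-source rewriting are omitted.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class Node:
    name: str
    files: Dict[int, str] = field(default_factory=dict)   # id -> file stored locally
    routes: Dict[int, str] = field(default_factory=dict)  # id -> next_hop (node name)


def lookup(nodes: Dict[str, Node], at: str, key: int, ttl: int,
           visited: Optional[Set[str]] = None) -> Optional[Tuple[str, str]]:
    """Return (holder, file) if key is found within ttl hops, else None."""
    if visited is None:
        visited = set()
    visited.add(at)              # remember this node so the query never revisits it
    node = nodes[at]
    if key in node.files:        # stored locally: stop and reply upstream
        return at, node.files[key]
    if ttl <= 0:
        return None              # TTL exhausted: report failure upstream
    # Try next hops in order of how close their id is to the requested key.
    for rid in sorted(node.routes, key=lambda r: abs(r - key)):
        nxt = node.routes[rid]
        if nxt in visited:
            continue             # skip nodes this query already traversed
        result = lookup(nodes, nxt, key, ttl - 1, visited)
        if result is not None:
            # Reverse path: cache the file and learn a route for this key.
            node.files[key] = result[1]
            node.routes[key] = nxt
            return result
        # Failure reported back: fall through to the next closest match.
    return None


def example_network() -> Dict[str, Node]:
    """Hypothetical five-node network; only n5 stores file 10."""
    return {
        "n1": Node("n1", routes={12: "n2", 5: "n3"}),
        "n2": Node("n2", routes={9: "n3"}),
        "n3": Node("n3", routes={14: "n4", 3: "n1"}),
        "n4": Node("n4", routes={11: "n2", 14: "n5"}),
        "n5": Node("n5", files={10: "f10"}),
    }
```

Suppose `net = example_network()`. Then `lookup(net, "n1", 10, ttl=5)` returns `("n5", "f10")` via n1, n2, n3, n4 and n5. At n4 the closest entry (11) points back to the already-visited n2, so n4 falls through to its next match. Afterwards every node on the path has cached the file, so a repeat `lookup(net, "n1", 10, ttl=0)` is answered by n1 itself.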