Novel approaches of data-mining in experimental physics

advertisement

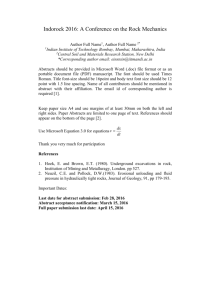

XXIV International Symposium on Nuclear Electronics & Computing Varna, 09-16 September, 2013. Novel approaches of data-mining in experimental physics G.A.Ososkov, Laboratory of Information Technologies Joint Intstitute for Nuclear Research, 141980 Dubna, Russia email: ososkov@jinr.ru http://www.jinr.ru/~ososkov 3/22/2016 1 Data mining concept Classical approaches for processing experimental data supposed to have a model describing a physical system and based on the advanced physical theory. Then observed data are used to verify the underlying models and to estimate its direct or indirect parameters. Now, when the experimental data stream is terabytes/sec, we come to the BIG DATA era, having often a lack of the corresponding theory, our data handling paradigm shifts from classical modeling and data analyses to developing models and the corresponding analyses directly from data. (Data-driven detector alignment as an example) The entire process of applying a computer-based methodology, including new techniques, for discovering knowledge from data is called data mining. Wikipedia: “-it is the analysis of large amounts of data about experimental results held on a computer in order to get information about them that is not immediately available or obvious.” 3/22/2016 2 Data mining methods It is the process of extracting patterns from large data sets by combining methods from statistics and artificial intelligence with database management. Data mining commonly involves four classes of tasks: Association rule learning – searches for relationships between variables. Clustering – is the task of discovering groups and structures in the data that are in some way or another "similar", without using known structures in the data. Classification – is the task of generalizing known structure to apply to new data. Regression – attempts to find a function which models the data with the least error Although Data Mining Methods (DMM) are oriented mostly on mining business and social science data, in recent years, data mining has been used widely in the areas of science and engineering, such as bioinformatics, genetics, medicine, education and electrical power engineering. So a great volume of DMM software is now exists as open-source and commercial. However one would not find experimental physics in DMM application domains. Therefore we are going to understand how DMM would look like, if data will be taken from high energy physics? 3/22/2016 3 Data mining peculiarities for experimental high energy physics (HEP) Let us consider some examples Condensed Barion Matter 1. СВМ experiment TRD RICH (Germany, GSI, to be running in 2018) 107 events per sec, ~1000 tracks per event ~100 numbers per track Total: terabytes/sec ! RICH - Cherenkov radiation detector simulated event of the central Au+Au collision in the vertex detector schematic view of the СВМ setup Our problem is to recognize all of these rings and evaluate their parameters despite of their overlapping, noise and optical shape distortions view of Cherenkov radiation rings registered by the CBM RICH detector 3/22/2016 4 2. Transition radiation detector (TRD) TR Production TRD measurements allow for each particle to reconstruct its 3D track and calculate its energy loss (EL) during its passage through all 12 TRD stations in order to distinguish electrons e- from pions π± . Unlike π±, electrons generate additionally the transition radiation (TR) in TRD. Our problem is to use the distributions of EL+TR for e- and π± in order to test hypothesis about a particle attributing to one of these alternatives keeping the probability α of the 1st kind of error on the fixed level α =0.1 and the probability β of the 2nd kind of error on the level less than β< 0.004. Both distributions were simulated by special program GEANT-4 taking into account all details of the experimental setup and corresponding physical assumptions related to heavy ion collisions. However the test based on direct cut on the sum of energy losses could not satisfy these requirements because both EL and TR have long-tailed Landau distributions. The main lesson: a transformation needed to reduce Landau tails of EL 3/22/2016 5 Example 3: the OPERA experiment CERN LNGS: the world largest underground physics laboratory Neutrino beam Search for neutrino oscillations LNGS 1600 m in depth ~100’000 m3 caverns’ volume OPERA 3/22/2016 A. Ereditato - LNGS - 31 May 2010 6 6 the OPERA experiment: Search for neutrino oscillations (OPERA is running) Hadron shower axix Each wall is accompanied by two planes of electronic trackers made of scintillator strips The crucial issue in OPERA is finding of that particular brick where the neutrino interaction takes place. Tracks formed by scintillator hits should originate from a single point - vertex. However the main obstacle is back-scattered particles (BSP) occuring in 50% of events, which do not contain useful information. Emulsion scanning to determine neutrino oscillation – is the separate task out of this talk 3/22/2016 BSP Real vertex Two types of OPERA events with BSP 7 The particular features of the data from these detectors are as follows: data arrive with extremely high rate; recognized patterns are discrete and have complex texture; very high multiplicity of objects (tracks, Cherenkov radiation rings, showers) to be recognized in each event; the number of background events, which are similar to “good” events, is larger than the number of the latter events by several orders of magnitude; noise counts are numerous and correlated. ——————————————————————————————--—————- The basic requirements to data processing in current experiments are: maximum speed of computing in combination with the highest attainable accuracy and high efficiency of methods of estimating physical parameters interesting for experimentalists. 3/22/2016 8 Data mining in experimental HEP 1. To understand the need for analyses of large, complex, information-rich data sets in HEP let us start from considering stages of HEP data processing 1. Pre-processing is very important stage. It includes: Data acquisition: before data mining algorithms can be used, a target data set must be assembled and converted from the rough format of detector counters into natural unit format. Data Transformation: to transform data into forms appropriate for mining, they must be corrected from detector distortions and misalignment by special calibration and alignment transformation procedures. Data selection: then data must be cleaned to remove noisy, inconsistent and other observations, which do not satisfy acceptance conditions. It can be accomplished in a special often quite sophisticate triggering procedure that usually causes a significant reduction of target data (several orders of magnitude). 3/22/2016 9 Data mining in experimental HEP 2. 2. HEP data processing involves following stages and methods: Pattern recognition: hit detection, tracking, vertex finding, revealing Cherenkov rings , fake objects removing etc employing the following methods: Cluster analysis Hough transform Kalman filter Neural networks Cellular automata Wavelet analysis The next expounding will be Physical parameters estimation some retrospections of the - robust M-estimations JINR experience to illustrate Hypothesis testing HEP data processing steps - Likelihood ratio test - Neural network approach - Boosted decision trees 3/22/2016 10 Data mining in experimental HEP 3. Monte-Carlo simulations are used on all stages and allow to - accomplish in advance the experimental design of a hardware setup and data mining algorithms and optimize them from money, materials and time point of view; - develop needed software framework and test it; - optimize structure and needed equipment of planned detectors minimizing costs, timing with a proposed efficiency and accuracy; - calculate in advance all needed distributions or thresholds for goodness-of-fit tests; Parallel programming of optimized algorithms is inevitable Software quality assurance (SQA) is the very important issue of any great programming system development GRID technologies changed considerably HEP data processing stages, which now more and more correspond to the GRID Tier hierarchy. Since each of theses items needs a long separate expounding, they will be only briefly noted below 3/22/2016 11 Some retrospections Artificial Neural Networks Why ANN for a contemporary HEP experiment? 1. historically namely physicists wrote in 80-ties one of the first NN programing packages – Jetnet. They were also among the first neurochip users after being trained ANN is one of the most appropriate tools for implementing many of data handling tasks, while on the basis of some new physical model physicists have possibility to generate training samples of any arbitrary needed length by Monte Carlo appearance of TMVA - Toolkit for Multivariate Data Analysis with ROOT Thus, there are many real problems solved on the basis of ANN in experimental physics as - Object recognizing and classifying - Statistical hypothesis testing - Expert system implementing - Approximation of many-dimensional functions - Solution of non-linear differential equations - etc 3/22/2016 12 NN application examples: 1. RICH detector A fragment of photodetector plane. In average there are 1200 points per event forming 75 rings. A sketch of the RICH detector Data processing stages: Ring recognition and their parameters evaluation; Compensating the optical distortions lead to elliptic shapes of rings; Matching found rings with tracks of particles which are interesting to physicists Eliminating fake rings which could lead to wrong physical conclusions Accomplishing the particle identification with the fixed level of the ring recognition efficiency 3/22/2016 Electrons Pions Radius versus momentum for reconstructed rings. 13 NN for the RICH detector The study has been made to select the most informative ring features needed to distinguish between good and fake rings and to identify electrons. Ten of them have been chosen to be input to ANNs, they are: 1.Number of points in the found ring 2.Its distance to the nearest track 3.The biggest angle between two neighbouring points 4.Number of points in the narrow corridor surrounding ring 5.Radial ring position on the photodetector plane 6.χ2 of ellipse fitting electrons 7.Both ellipse half-axes (A and B) 8.angle φ of the ellipse inclination to abscissa 9.track azimuth 10. track momentum 40000 e and π rings to train π-mesons Two samples with 3000 e (+1)and 3000 π (-1) have been simulated to train NN. Electron recognition efficiency was fixed on 90% Probabilities of the 1-st kind error 0.018 and the 2-d kind errors 0.0004 correspondingly were obtained 3/22/2016 14 NN application examples: 2. e- / π± separation by transition radiation Two ideas to avoid obstacles with the easy cut test and long tails of energy loss (ΔE) distributions: 1. Apply artificial neural network for testing 2. Calculate likelihood ratio for ΔE of each TRD station as input to ANN We use Monte-Carlo calculations to simulate a representative sample of TRD signals for given experimental conditions and then obtain energy losses from all n TRD stations for both eand π± , sort them and calculate probability density functions (PDF) for ordered ΔEs. Then we repeat simulation in order to train neural network with n inputs and one output neuron, which should be equal +1 in case of electron and and -1 in case of pion. As inputs, the likelihood ratios for each ΔE were calculated L PDF (E ) PDF (E ) PDFe (E ) The result of testing the trained neural network gave the probability of the 2nd kind of error β= 0.002 It satisfied the required experimental demands. It is interesting to note: Applying Busted decision Trees algorithm from TMVA allows to improve pion suppression result up to 15-20% comparing to NN 3/22/2016 ANN output distribution 15 NN application examples: 3. OPERA experiment To facilitate the vertex location a considerable data preprocessing has been fulfilled in order to eliminate or, at least, reduce electronic noise. The method was based on cellular automaton that rejects points having no nearest neighbours; Reconstruct muon tracks (Hough transform, Kalman filter) M-estimate hadron shower axis with 2D robust weights taking into account not only distance of a point to the shower axis, but also amplitudes of scintillator signals make a study to determine 15 parameters to input them to ANN . According to 3 classes of events 3 neural networks of MLP type were then trained for each class on 20000 simulated events to make a decision about the wall with the event vertex. The wall finding efficiency on the level of 80 – 90% was then calculated by testing 10000 events. NN results were then used in the brick finding procedure. 3/22/2016 16 Recurrent ANNs and applications Hopfield’s theorem: the energy function E(s) = - ½ Σij si wij sj of a recurrent NN with the symmetrical weight matrix wij = wji , wii = 0 has local minima corresponding to NN stability points Applications in JINR 1. Track recognition by Denby- Peterson (1988) segment model with modifications was successfully used for tracking in the EXCHARM experiment 2. More rare: track recognition by rotor models of Hopfield networks E 1 2 ij 1 rij v v m i j 1 2 ij 1 rij 2 ( v r ) i ij m The energy function: the first term forces neighbouring rotors to be close to each other. The second term is in charge of the same between rotors and track-segments. 3/22/2016 17 Our innovations (I.Kisel, 1992) cos 2 ij sin 2 ij v j v j W ij v j sin 2 ij cos 2 ij Therefore we obtain a simple energy function without any constrains 1 v i v j E 2 ij This approach has been applied in the ARES experiment with some extra efforts: - prefiltering by cellular automaton; - local Hough algorithm for initial rotor set up; - special robust multipliers for synaptic weights. Results: recognition efficiency - 98% Analysis of ionograms. Data from the vertical sounding of the ionosphere 3/22/2016 Up to now the corresponding program is in use in the Irkutsk Institute of the terrestrial magnetism, Russia and in the Lowell University, MA, USA 18 Elastic neural networks ANN drawbacks revealed by physicists in many HEP applications: ▪ too slow convergence of the ANN evolution due to too high degrees of freedom; ▪ only recognition is fulfilled without taking into account the known track model; ▪ over-sensitivity of ANNs to noise is indicated. Therefore it was suggested to combine both stages: recognition and fitting of a track in one procedure when deformable templates (elastic arm) formed by equations of particle motion are all bended in order to overlaid the data from the detector. A routine then has to evaluate whether or not the template matched a track. Ohlsson and Peterson (O&P, 1992 ) from the Lund University realized this idea as a special Hopfield net with the energy function depending from helix parameters describing a track and binary neurons Sia, each of them is equal to 1 or 0 when i-th point belongs or not to the a-th track, respectively. Gyulassy and Harlander (G & H, 1991) proposed their elastic tracking that can physically be described as interaction between the positively charged template and negatively charged spatial points measured in the track. The better the elastic template fits points, the lower the energy of their interaction. Using Lorenz potential with the time-dependent width where a is the maximal distance, at which points are still accredited to this template, b<< a is spatial resolution of a detector, G & H obtained the energy to be minimized by helix parameters π 3/22/2016 19 Elastic neural networks applications To avoid E(π,t) getting caught in local spurious minima the simulated annealing iterative procedure is applied. On the first iteration w(t) is taken for the highest temperature, when E(π,t) has the only one minimum. Then w(t) is narrowed gradually allowing more and more accurate search of the global minimum. G&H elastic tracking was applied for the STAR TPC simulated data with remarkably high track-finding efficiency (1998) O&P elastic NNs after corresponding modifications were succesfully applied for Cherenkov ring search and track reconstructing (1997). Drift chamber tracks with their left-right ambiguity in magnetic field demanded to invent 2D neurons Si=(si+, si —) to determine a point to a track accreditation (1998) Important to note: a homogeneous magnetic field of NICA-MPD project will make it possible to apply this elastic arm approach for MPD TPC tracking 3/22/2016 20 Some retrospections 2. Robust estimates for heavy contaminated samples Why robust estimates? In all preceeding experimental examples we must solve typical statistics problems of parameter estimations by sets of measured data. However we faced with not usual applied statistics, but with special mass production statistics Keywords are: heavy data contamination due to noisy measurements; measurements from neighbour objects. need in very fast algorithms of hypothesis testing and parameter estimating Comparison of LSF and robust fit in case of one point outlier How to achieve that? - Robust approach, based on functional weights of each measuremet, preferably parallel algorithms 3/22/2016 21 M-estimate formalizm Instead of LSF with its crucial assumption of residual normality and quadratic nature of minimized functional we consider P.Huber’s M-estimate, i.e. replace quadratic functional S(p) to be minimized by L(p,σ)=Σi ρ(εi ), where measurement error ε is distributed according to J.Tukey's gross-error model f(ε) = (1-c) φ(ε) + c h(ε), c is a parameter of contamination, φ(ε) is the Gauss distribution and h(ε)is some long-tailed noise distribution density. Likelihood equation for the functional L(p,σ) by denoting can be modified to the form which is similar to the normal LSF equations, but with replacement of the numerical weight coefficients to weight functions w(ε) to be recalculated on each step of an iterative procedure. 3/22/2016 22 How to choose the weight function w(ε) ? For a particular, but important case of the uniform contamination h(ε)=h0 we found the optimal weights w(ε) which polynomial expansion of up to the fourth order leads to the approximation It is the famous Tukey's bi-weights, which are easier to calculate than optimal ones. Simulated annealing procedure is used to avoid sticking functional in local minima. Recall the energy function of G&H elastic tracking Lorentz potential in this sum plays a role of the robust functional weight. 3/22/2016 23 Application examples: 1. Determination of the interaction vertex position for only two coordinate planes (NA-45) The target consists of eight 25-μ gold discs. One of two silicon disk with 1000 track and noise hits. So, it is impossible to recognize individual tracks. Tukey biweight function with cT=3 was used. Iterational procedure converged in five iterations with the initial approximation taken as the middle of Z-axis target region. The results after processing 4000 Pb+Au events provide satisfactory accuracy of 300 μ along Z-axis and good local accuracy of a track. 3/22/2016 24 Application examples: 2.TDC calibration problem (HERA-B) It is caused by the fact that real track detectors, as drift chambers, for example, are measuring the drift time in TDC (Time-Digital Converter) counts. So to perform data processing, TDC counts are to be transferred, first of all, into drift radii. Such a transformation named calibration is inevitably data-driven, i.e. is carried out statistically from real TDC data of some current physical run. Here is an impressive example of the effectiveness of the robust approach. The fitting problem in such cases is radically different from any common one, since for every abscissa we have not one, but many ordinates with different amplitudes. Therefore every point to be fitted was provided by 2D weight depending as of this point distance to the fitted curve, as of its amplitude. It is shown how a calibration function r(t) can be obtained by fitting cubic splines to directly 2D histogram of drift radii versus TDC counts, which consists of many thousand bins with various amplitudes. The fitted spline only for upper part is shown. A lot of more applications were reported, in particular, for tracking in presence of δ-electrons in CMS muon endcup 3/22/2016 25 Some retrospections 3.Wavelet analysis What are continuous wavelets? In contrast to the most known mean of signal analysis as Fourier transform, onedimensional wavelet transform (WT) of the signal f(x) has 2D form , where the function is the wavelet, b is a displacement (time shift), and a is a scale (or frequency). Condition Cψ < ∞ guarantees the existence of and the wavelet inverse transform. Due to the freedom in choice, many different wavelets were invented. The family of continuous wavelets with vanishing momenta is presented here by Gaussian wavelets, which are generated by derivatives of Gaussian function Most known wavelet G2 is named “the Mexican hat” The biparametric nature of wavelets renders it possible to analyze simultaneously both time and frequency characteristics of signals. 3/22/2016 26 Wavelets can be applied for extracting very special features of mixed and contaminated signal An example of the signal with a localized high frequency part and considerable contamination then wavelet filtering is applied G2 wavelet spectrum of this signal Filtering works in the wavelet domain by thresholding of scales, to be eliminated or extracted, and then by making the inverse transform 3/22/2016 Filtering results. Noise is removed and high frequency part perfectly localized. NOTE: that is impossible by Fourier transform 27 Continuous wavelets: pro and contra PRO: - Using wavelets we overcome background estimation - Wavelets are resistant to noise (robust) CONTRA: - redundancy → slow speed of calculations - nonorthogonality (signal distotres after inverse transform!) Besides, real signals to be analysed by computer are discrete, in principle So orthogonal discrete wavelets should be preferable. Denoising by DWT shrinking wavelet shrinkage means, certain wavelet coefficients are reduced to zero: Our innovation is the adaptive shrinkage, i.e. λk= 3σk where k is decomposition level (k=scale1,...,scalen), σk is RMS of Wψ for this level (recall: sample size is 2n) 3/22/2016 Small peak finding with coiflets 28 NEW: Back to continuous wavelets Peak parameter estimating by gaussian wavelets When a signal is bell-shaped one, it can be approximated by a gaussian Then it can be derived analytically that its wavelet transformation looks as the corresponding wavelet with . parameters depending of the original signal parameters. Thus, we can calculate them directly in the wavelet domain instead of time/space domain. The most remarkable point is, we do not need the inverse transform! 3/22/2016 29 Estimating peak parameters in G2 wavelet domain How it works? Let us have a noisy invariant mass spectrum 1. transform it by G2 into wavelet domain 2. 2. look for the wavelet surface maximum bmax ,amax . 3. From the formula for WG2(a,b;x0,σ)g one can derive analytical expressions for its maximum x0 and a. max 5 which should correspond to the found bmax ,amax . Thus we can use coordinates of the maximum as estimations of wanted peak parameters peak has bell-shape form xˆ0 , aˆ 4. From them we can directly obtain halfwidth amplitude ˆ max W (aˆ 2 ˆ 2 ) A 5 aˆ 2ˆ and even the integral 3/22/2016 3 2 ˆ aˆ 5 I A 2 30 Application results to CBM invariant mass spectra Low-mass dileptons (muon channel) - ω– wavelet spectrum ω. Thanks to Anna Kiseleva ω. Gauss fit of reco signal M=0.7785 σ =0.0125 A=1.8166 Ig=0.0569 ω. Wavelets M=0.7700 σ =0.0143 A=1.8430 Iw=0.0598 ω-meson φ-meson Even φ- and mesons have been visible in the wavelet space, so we could extract their parameters. 3/22/2016 31 NEW: Example with set of FOPI data provided by N.Hermann, GSI, Darmstadt, Germany Wavelets G4 are used. The formula for σ obtaining is σ = amax/3 1 2 3 noise level σ = 0.009 Despite of the very jagged spectrum wavelets give visible peaks with σ1 = σ3 = 0.013, σ2 = 0.021 3/22/2016 32 Clustering in data mining Clustering is one of important DM task because it allows to seek groups and structures in the data that are in some way "similar", without using known structures in the data. Clustering methods are widely used in HEP data processing to find the point of particle passage through coordinate plane of some cell-structure detector New application of clustering analysis allows to develop the URQMD fragmentation model of nuclear collision at relativistic energies. Clusters or nuclear fragments are generated via dynamical forces between nucleons during their evolution in coordinate and momentum space New two steps clustering method is proposed for BIG DATA. It accomplishes the quantization of input data by generating so-called Voronoi partition. The final clustering is done using any conventional methods of clustering. A new promising watershed clustering algorithm is proposed. 3/22/2016 33 Parallel programming Fortunately the common structure of HEP experimental data naturally organized as the sequence of events gives the possibility for the natural multithread parallelism by handling events simultaneously on different processors. However the requirements of such experiments as the CBM to handle terabytes of data per second leads to the necessity of parallelism on the level of each event by so-called SIMDization of algorithms, that demands their substantial optimization and vectoring of input data. For instance, in case of CBM TRD and MuCh tracking algorithms we obtain Resulting speedup of the track fitter on the Computer with 2xCPUs Intel Core i7 (8 cores in total) at 2.67 GHz Time [μs/track] Speedup 1200 - Optimization 13 92 SIMDization 4.4 3 Multithreading 0.5 8.8 Final 0.5 2400 Initial 3/22/2016 Throughput: 2*106 tracks/s 34 Software quality assurance (SQA) Since software framework of any contemporary HEP experiment is developed by efforts of international team from thousand collaborants with various programming skills, software components, they wrote, can inevitably have bags, interconnection errors or output result different from specified before. Therefore automation of experimental framework software testing is needed to provide the following: - More reliable software, speedup of its development - Reduce development cycles - Continues integration and deployment - High code coverage to test, ideally, all code in the repository - Not only unit testing but also system test for simulation and reconstruction However known SQA systems could not be applied directly for these purposes,since they are based on the theory of reliability methods and suppose to have a highly qualified team of programmers and testing with immediate failure repairing, while the most software in our experimental collaborations are written by physicists who are not highly qualified in programming and are not able to watch over immediate failure repairing For discussion an automatic test system for HEP experiment should perform: Report generation for simulation studies; Automatic check of output results based on predefined values; Nightly monitoring of the simulation results; Designed to be modular in order to easy extend and add new histograms 3/22/2016 35 Conclusion and outlook remarks Importance of advanced Monte-Carlo simulations Robust estimates, neural networks and wavelet applications are really significant for data-mining in HEP It looks reasonable to provide wavelet analysis tools in ROOT The focus of developing data mining algorithms in HEP is shifted to their optimization and parallelization in order to speed them up considerably while keeping their efficiency and accuracy Parallelism is to be introduced inevitably on the basis of new technologies of computing and software Software reliability concept is very essential Distributed or cloud computing are growing. In HEP it is accomplished by GRID technologies 3/22/2016 36 Thanks for your attention! 3/22/2016 37 37 SQA example for FAIR GSI SQA general structure Histogram Creator. It realizes the management of large number of histograms 2. Drawer. Feature extractor. Report generator. Result checker. They provide - Base classes for simulation and study report generation; - Base functionality for histogram drawing; - Base functionality for serializing/deserializing images to/from XML/JSON - Report in HTML, text, Latex 3. SQA monitoring (SQAM) Its features allow users to easy increase number of tests for different collision systems, energies, detector geometries etc SQAM provides: - Automatic testing of simulation, reconstruction and analysis - Automatic check of simulation results QAM current status: About 30 tests run nightly. 1. 3/22/2016 38 3/22/2016 39