
Automated Text Summarization –

IJCNLP-2005 Tutorial

Chin-Yew Lin

Natural Language Group

Information Sciences Institute

University of Southern California

cyl@isi.edu

http://www.isi.edu/~cyl

How Much Information? (2003)

• Print, film, magnetic, and optical storage media produced about 5

exabytes (10^18) of new information in 2002. 92% of the new

information was stored on magnetic media, mostly in hard disks.

– If digitized with full formatting, the 17 million books in the Library of Congress

contain about 136 terabytes of information;

– 5 exabytes of information is equivalent in size to the information contained in

37,000 new libraries the size of the Library of Congress book collections.

• The amount of new information stored on paper, film, magnetic, and

optical media has about doubled during 1999 – 2002.

• Information flows through electronic channels – telephone, radio,

TV, and the Internet – contained almost 18 exabytes of new

information in 2002, three and a half times more than is recorded in

storage media (98% are telephone calls).

* Source: How much information 2003

* http://www.sims.berkeley.edu/research/projects/how-much-info2003/execsum.htm#summary

Chin-Yew LIN • IJCNLP-05 • Jeju Island • Republic of Korea • October 10, 2005

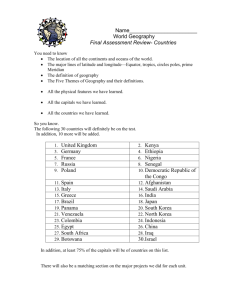

Table of contents

1. Motivation and Introduction.
2. Approaches and paradigms.
3. Summarization methods.
4. Evaluating summaries.
5. The future.

Which School is Better?


Is this Shoe Good or Bad?


What’s in the News?


How to Get from A to B?


Get a Job!

*About.com


Which Movie for Friday Night?


Browse a Summarized Web

* Stanford PowerBrowser Project, Orkut et al.

WWW10, 2001


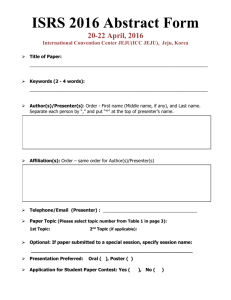

The Big & Bigger Search Engines

| Search Engine | Reported Size | Page Depth |
|---|---|---|
| Google | 8.1 billion | 101K |
| MSN | 5.0 billion | 150K |
| Yahoo | 4.2 billion* | 500K |
| Ask Jeeves | 2.5 billion | 101K |

* Nov, 2004, searchenginewatch.com

[Figure: Billions Of Textual Documents Indexed (6, 2002 - 9, 2003)]

“As those of you who follow this blog know, I recently posted a weather report alerting you to material changes to our index. Since that post, we've seen some discussion from webmasters who have noticed more of their documents in our index. As it turns out we have grown our index and just reached a significant milestone at Yahoo! Search - our index now provides access to over 20 billion items. While we typically don't disclose size (since we've always said that size is only one dimension of the quality of a search engine), for those who are curious this update includes just over 19.2 billion web documents, 1.6 billion images, and over 50 million audio and video files. Note that as with all index updates we are still tuning things so you'll continue to see some fluctuation in ranking over the next few weeks.”

8/8/2005 Yahoo! Search Blog

The Bottom Line


Solution: Summarization

• Informed decision through summarization

• What is summarization?

– A summary is a concise restatement of

the topic and main ideas of its source.

• Concise – giving a lot of information clearly and in

a few words. (Oxford American Dictionary)

• Restatement – in your own words.

• Topic – what is the source about?

• Main ideas – important facts or arguments about

the topic.


Kinds of Summaries

• Based on two different perspectives

– The author’s point of view

• Paraphrase summary (indicative vs. informative)

– In the fall of 1978, Fran Tate wrote some hot checks to finance

the opening of a Mexican restaurant in Barrow, Alaska. All the

banks she had applied to for financing had turned her down.

But Fran was sure that Barrow lusted for a Mexican restaurant,

so she went ahead with her plans. (“In Alaska: Where the Chili is Chilly”, by Gregory Jaynes)

– The summary writer’s point of view

• Analytical summary

– For Gregory Jaynes, Fran Tate embodies the astonishing

independence and toughness that is typical of Alaska at its

best. Tate has opened a Mexican restaurant – an unlikely

enterprise – in Barrow, Alaska.

From “Reaching Across the Curriculum”, ©1997, Eva Thury and Carl Drott.


How to Summarize?

• Topic Identification

– Find the most important information – the topic.

•

The Egyptian civilization was one of the most important cultures of the ancient world. This civilization flourished along the

rich banks and delta of the Nile River for many centuries, from 3200 B.C. until the Roman conquest in 30 B.C. …*

– Find main ideas that support the topic and show how they are

related.

•

Among the many contributions made by the Egyptian culture is the hieroglyphic writing system they invented. This is one of

the earliest writing systems known and it was used from about 3200 B.C. until 350 A.D. Hieroglyphics are a form of picture

writing, in which each symbol stands for either a single object or a single sound. The symbols can be combined into long

strings to form words and sentences. Other cultures, such as the Hittites, the Cretans, and the Mayans, also developed

picture writing, but these systems are not related to the Egyptian system, nor to one another.*

• Topic Interpretation (I)

– Combine several main ideas into a single sentence.

•

Among the many contributions made by the Egyptian culture is the hieroglyphic writing system they invented. This is one of

the earliest writing systems known and it was used from about 3200 B.C. until 350 A.D. Hieroglyphics are a form of picture

writing, in which each symbol stands for either a single object or a single sound. The symbols can be combined into long

strings to form words and sentences. Other cultures, such as the Hittites, the Cretans, and the Mayans, also developed

picture writing, but these systems are not related to the Egyptian system, nor to one another.*

The Egyptians invented hieroglyphics, a form of picture writing, which is one of the earliest writing systems

known.*

(* Examples adapted from Summary Street ® http://lsa.colorado.edu/summarystreet)


How to Summarize? (cont.)

• Topic Interpretation (II)

– Substitute a general form for lists of items or events.

•

List of items: John bought some milk, bread, fruit, cheese, potato chips, butter, hamburger meat and

buns. *

John bought some groceries.*

• List of events: A lot of children came and brought presents. They played games and blew bubbles at

each other. A magician came and showed them some magic. Later Jennifer opened her presents and

blew out the candles on her cake.*

Jennifer had a birthday party.*

– Remove trivial and redundant information

•

We have gotten word of an enormous deal in the publishing deal. Hillary Clinton, senator-elect has

accepted an $8 million dollars advance to publish her book. That is a figure, surpassed only by the $8

1/2 million the pope received for his book. Senator-elect Hillary Rodham Clinton Friday night agreed to

sell Simon & Schuster a memoir of her years as first lady, for the near-record advance of about $8

million.

Senator-elect Hillary Rodham Clinton agreed to sell Simon & Schuster a memoir of her years

as First Lady, for the near-record advance of about $8 million.

(* Examples adapted from Summary Street ® http://lsa.colorado.edu/summarystreet)

• Generation (Writing Summaries)


Aspects of Summarization (Sparck Jones 97, Hovy and Lin 99)

• Input
– Single-document vs. multi-document...fuse together texts?
– Domain-specific vs. general...use domain-specific techniques?
– Genre...use genre-specific (newspaper, report…) techniques?
– Scale and form…input large or small? Structured or free-form?
– Monolingual vs. multilingual...need to cross language barrier?

• Purpose
– Situation...embedded in larger system (MT, IR) or not?
– Generic vs. query-oriented...author’s view or user’s interest?
– Indicative vs. informative...categorization or understanding?
– Background vs. just-the-news…does user have prior knowledge?

• Output
– Extract vs. abstract...use text fragments or re-phrase content?
– Domain-specific vs. general...use domain-specific format?
– Style…make informative, indicative, aggregative, analytic...

Table of contents

1. Motivation and Introduction.
2. Approaches and paradigms.
3. Summarization methods.
4. Evaluating summaries.
5. The future.

Two Psycholinguistic Studies

• Coarse-grained summarization protocols from professional

summarizers (Kintsch and van Dijk, 78):

– Delete material that is trivial or redundant.

– Use superordinate concepts and actions.

– Select or invent topic sentence.

• 552 finely-grained summarization strategies from

professional summarizers (Endres-Niggemeyer, 98):

– Self control: make yourself feel comfortable.
– Processing: produce a unit as soon as you have enough data.
– Info organization: use “Discussion” section to check results.
– Content selection: the table of contents is relevant.

Computational Approach: Basics

Top-Down:

• I know what I want! Don’t confuse me with nonsense!
• User wants only certain types of info.
• System needs particular criteria of interest, used to focus search.

Bottom-Up:

• I’m dead curious: what’s in the text?
• User wants anything that’s important.
• System needs generic importance metrics, used to rate content.

Typical 3 Stages of

Summarization

1. Topic Identification: find/extract the most

important material

2. Topic Interpretation: compress it

3. Summary Generation: say it in your own

words

…as easy as that!


Top-Down: Info. Extraction (IE)

• IE task: Given a form and a text, find all the

information relevant to each slot of the form and

fill it in.

• Summ-IE task: Given a query, select the best

form, fill it in, and generate the contents.

• Questions:

1. IE works only for very particular

forms; can it scale up?

2. What about info that doesn’t fit into

any form—is this a generic limitation

of IE?


Bottom-Up: Info. Retrieval (IR)

• IR task: Given a query, find the relevant document(s)

from a large set of documents.

• Summ-IR task: Given a query, find the relevant

passage(s) from a set of passages (i.e., from one or

more documents).

• Questions:

1. IR techniques work on large

volumes of data; can they scale

down accurately enough?

2. IR works on words; do abstracts

require abstract representations?


Paradigms: IE vs. IR

IE:

• Approach: try to ‘understand’ text: transform content into ‘deeper’ notation; then manipulate that.
• Need: rules for text analysis and manipulation, at all levels.
• Strengths: higher quality; supports abstracting.
• Weaknesses: speed; still needs to scale up to robust open-domain summarization.

IR:

• Approach: operate at word level: use word frequency, collocation counts, etc.
• Need: large amounts of text.
• Strengths: robust; good for query-oriented summaries.
• Weaknesses: lower quality; inability to manipulate information at abstract levels.

Deep and Shallow, Down and Up...

Today:

Increasingly, techniques hybridize: people use

word-level counting techniques to fill IE forms’

slots, and try to use IE-like discourse and quasi-semantic notions in the IR approach.

Thus:

You can use either deep or shallow paradigms

for either top-down or bottom-up approaches!


Toward the Final Answer...

• Problem: What if neither IR-like nor IE-like methods work?
– sometimes counting and forms are insufficient,
– and then you need to do inference to understand.

Mrs. Coolidge: “What did the preacher preach about?”
Coolidge: “Sin.” (Word counting)
Mrs. Coolidge: “What did he say?”
Coolidge: “He’s against it.” (Inference)

• Solution:
– semantic analysis of the text (NLP),
– using adequate knowledge bases that support inference (AI).

The Optimal Solution...

Combine strengths of both paradigms…

...use IE/NLP when you have suitable

form(s),

...use IR when you don’t…

…but how exactly to do it?


Table of contents

1. Motivation and Introduction.
2. Approaches and paradigms.
3. Summarization methods.
4. Evaluating summaries.
5. The future.

Topic Identification

Classic Methods

Overview of Identification Methods

• General method: score each sentence;

combine scores; choose best sentence(s)

• Scoring techniques:

– Position in the text: lead method; optimal position

policy; title/heading method

– Cue phrases: in sentences

– Term frequencies: throughout the text

– Cohesion: semantic links among words; lexical

chains; coreference; word co-occurrence

– Discourse structure of the text

– Information Extraction: parsing and analysis
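The general method (score each sentence, combine scores, choose the best) can be sketched as a weighted sum of per-feature scores. This is only an illustration: the feature names, the weights, and the feature values below are hypothetical and do not come from the tutorial.

```python
from typing import Dict, List


def combine_scores(features: List[Dict[str, float]],
                   weights: Dict[str, float]) -> List[float]:
    """Return one combined score per sentence (weighted sum of its features)."""
    return [sum(weights.get(name, 0.0) * value for name, value in f.items())
            for f in features]


def choose_best(scores: List[float], k: int) -> List[int]:
    """Return the indices of the k highest-scoring sentences, in document order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


# Hypothetical feature values for three sentences.
features = [
    {"position": 1.0, "cue": 0.0, "tf": 0.5},
    {"position": 0.5, "cue": 1.0, "tf": 0.5},
    {"position": 0.0, "cue": 0.0, "tf": 0.25},
]
weights = {"position": 1.0, "cue": 1.0, "tf": 2.0}  # assumed weights
scores = combine_scores(features, weights)
summary_indices = choose_best(scores, k=2)
```

A linear combination is just one simple choice; the weights could equally be tuned by hand or learned from a corpus.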


Position-Based Method

• Claim: Important sentences occur at the

beginning (and/or end) of texts.

• Lead method: just take first sentence(s)!

• Experiments:

– In 85% of 200 individual paragraphs the topic

sentences occurred in initial position and in 7% in

final position (Baxendale 58).

– Only 13% of the paragraphs of contemporary

writers start with topic sentences (Donlan 80).

– First 100-word baseline achieved 80% (B: 32.24%

vs. H: 40.23% in average coverage) of human

performance in DUC 2001 single-doc sum task.
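A minimal sketch of the lead method, in two variants: the first n sentences, and the first 100 words as in the baseline above. The regex sentence splitter is a naive assumption for illustration, not the DUC tooling.

```python
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def lead_sentences(text: str, n: int = 1) -> str:
    """Lead method: keep only the first n sentences."""
    return " ".join(split_sentences(text)[:n])


def lead_words(text: str, max_words: int = 100) -> str:
    """Word-count baseline: keep only the first max_words whitespace tokens."""
    return " ".join(text.split()[:max_words])
```

For example, `lead_words(article)` truncates `article` to its first 100 whitespace-separated tokens, which may cut the last sentence mid-way.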


Optimal Position Policy (OPP)

Claim: Important sentences are located at positions that

are genre-dependent; these positions can be

determined automatically through training:

– Corpus: 13000 newspaper articles (ZIFF corpus).

– Step 1: For each article, enumerate sentence positions (in both directions: from the beginning and from the end).

– Step 2: For each sentence, determine yield (= overlap

between sentences and the index terms for the article).

– Step 3: Create partial ordering over the locations where

sentences containing important words occur: Optimal Position

Policy (OPP).

(Lin and Hovy, 97)

OPP is genre dependent

– For example: lead sentence is a very good baseline in news genre.
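The three training steps can be sketched as follows. This is a simplified reading under stated assumptions: positions are counted from the beginning only, yield is the number of distinct index terms in a sentence, and positions are ranked by average yield (a total order, where the slide describes a partial ordering). The data layout and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Position = Tuple[int, int]  # (paragraph number, sentence number), 1-based
Article = Tuple[List[List[str]], Set[str]]  # (paragraphs of sentences, index terms)


def sentence_yield(sentence: str, index_terms: Set[str]) -> int:
    """Number of distinct (lowercase) index terms that occur in the sentence."""
    words = {w.strip(".,;:!?\"'").lower() for w in sentence.split()}
    return len(words & index_terms)


def learn_opp(articles: List[Article]) -> List[Position]:
    """Rank positions by average yield over the training articles."""
    totals: Dict[Position, float] = defaultdict(float)
    counts: Dict[Position, int] = defaultdict(int)
    for paragraphs, index_terms in articles:
        for p, paragraph in enumerate(paragraphs, start=1):
            for s, sentence in enumerate(paragraph, start=1):
                totals[(p, s)] += sentence_yield(sentence, index_terms)
                counts[(p, s)] += 1
    # Highest average yield first; ties broken by position.
    return sorted(totals, key=lambda pos: (-totals[pos] / counts[pos], pos))
```

Because the ranking is learned per corpus, running `learn_opp` on corpora of different genres would be expected to give different orderings.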


OPP (cont.)

– OPP for ZIFF corpus:

(T) > (P2,S1) > (P3,S1) > (P2,S2) > {(P4,S1),(P5,S1),(P3,S2)} >…

(T=title; P=paragraph; S=sentence)

– OPP for Wall Street Journal: (T)>(P1,S1)>...

– Results: testing corpus of 2900 articles: Recall=35%, Precision=38%.

– Results: 10%-extracts cover 91% of the salient words.

[Figure: COVERAGE SCORE (y-axis, 0 to 1) by OPP POSITIONS R1 to R10 (x-axis); legend series: 1, 2, 3, 4, >=5]

Position: Title-Based Method

• Claim: Words in titles and headings are

positively relevant to summarization.

• Shown to be statistically valid at 99% level of

significance (Edmundson, 68).

• Empirically shown to be useful in

summarization systems.


Examples: DUC 2003

Topic 30003

DOCNO: NYT19981017.0177
HEADLINE: ACCEDING TO SPAIN, BRITAIN ARRESTS FORMER CHILEAN LEADER AUGUSTO PINOCHET
1ST SNT: BUENOS AIRES, Argentina _ Gen. Augusto Pinochet, who ruled Chile as a despot for 17 years, has been arrested in London after Spain asked that he be extradited for the presumed murders of hundreds of Chilean and Spanish citizens, the British authorities announced Saturday.

DOCNO: NYT19981018.0098
HEADLINE: PINOCHET'S ARREST PUTS BLAIR'S GOVERNMENT IN A TIGHT SPOT
1ST SNT: LONDON _ Gen. Augusto Pinochet, the former Chilean dictator arrested here at the request of a Spanish judge, remained sequestered under police guard Sunday, awaiting a potentially devastating court hearing to weigh his extradition on charges of genocide, terrorism and murder.

DOCNO: NYT19981018.0160
HEADLINE: IN SANTIAGO, NEWS OF PINOCHET'S ARREST STIRS ONLY SMALL PROTEST
1ST SNT: SANTIAGO, Chile _ Perhaps as a sign of how much Chileans wish to forget their years of polarized politics and repression under Gen. Augusto Pinochet, the immediate popular response here to the sensational news of his arrest in London has been remarkable for its moderation.

DOCNO: NYT19981018.0123
HEADLINE: A MAVERICK SPANISH JUDGE'S TWO-YEAR QUEST FOR PINOCHET'S ARREST
1ST SNT: PARIS _ A stubbornly independent judge who more than once has challenged the Spanish government, Baltasar Garzon was ridiculed when he first set out to pursue the most notorious right-wing generals in South America for human rights crimes committed in the 1970s and '80s, offenses for which they had already been granted amnesty at home.

DOCNO: NYT19981018.0185
HEADLINE: ACCUSED OFFICIALS HAVE FEWER PLACES TO HIDE
1ST SNT: WASHINGTON _ The arrest of Gen. Augusto Pinochet shows the growing significance of international human-rights law, suggesting that officials accused of atrocities have fewer places to hide these days, even if they are carrying diplomatic passports, legal scholars say.

Examples: Yahoo! News


Cue-Phrase-Based Method

• Claim 1: Important sentences contain ‘bonus

phrases’, such as significantly, In this paper we

show, and In conclusion, while non-important

sentences contain ‘stigma phrases’ such as

hardly and impossible.

• Claim 2: These phrases can be compiled semi-automatically (Edmundson 69; Paice 80; Kupiec et al. 95; Teufel and Moens 97).

• Method: Add to sentence score if it contains a

bonus phrase, penalize if it contains a stigma

phrase.
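A minimal sketch of the scoring rule, using only the example phrases named on this slide. The phrase lists, the unit bonus and penalty, and the substring matching are assumptions for illustration; a real system would compile much larger lists.

```python
from typing import Sequence

# Example phrases taken from the slide; real lists would be far longer.
BONUS_PHRASES = ("significantly", "in this paper we show", "in conclusion")
STIGMA_PHRASES = ("hardly", "impossible")


def cue_phrase_score(sentence: str,
                     bonus_phrases: Sequence[str] = BONUS_PHRASES,
                     stigma_phrases: Sequence[str] = STIGMA_PHRASES,
                     bonus: float = 1.0,
                     penalty: float = 1.0) -> float:
    """Add `bonus` per bonus phrase found and subtract `penalty` per stigma phrase."""
    # Lowercase, drop commas, and collapse whitespace before matching.
    text = " ".join(sentence.lower().replace(",", " ").split())
    score = bonus * sum(1 for p in bonus_phrases if p in text)
    score -= penalty * sum(1 for p in stigma_phrases if p in text)
    return score
```

The resulting value would typically be one term among several when sentence scores are combined.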


Examples: “In this paper, we …”

— From WAS 2004 Workshop papers


Term Significance:

TF vs. TFIDF Term Rankings

DUC 2003 Topic 30003: Top 30 Normalized TF & TFIDF Terms

TF: Term Frequency
IDF: Inverse document frequency
TFIDF: TF*log(N/DF)
N: # of docs in a training corpus
DF: # of docs in which term i occurs

| Rank | TF Terms | N-TF Value | TFIDF Terms | N-TFIDF Value |
|---|---|---|---|---|
| 1 | the | 1.000 | Pinochet | 1.000 |
| 2 | of | 0.703 | Spanish | 0.377 |
| 3 | in | 0.536 | Chile | 0.254 |
| 4 | a | 0.504 | Chilean | 0.245 |
| 5 | and | 0.489 | Garzon | 0.238 |
| 6 | to | 0.482 | Argentina | 0.176 |
| 7 | Pinochet | 0.380 | diplomatic | 0.163 |
| 8 | that | 0.308 | British | 0.154 |
| 9 | for | 0.250 | immunity | 0.141 |
| 10 | said | 0.214 | dictator | 0.133 |
| 11 | was | 0.203 | arrest | 0.123 |
| 12 | he | 0.196 | military | 0.117 |
| 13 | Spanish | 0.185 | genocide | 0.114 |
| 14 | 's | 0.174 | Augusto | 0.105 |
| 15 | on | 0.174 | Gen. | 0.105 |
| 16 | is | 0.170 | crimes | 0.094 |
| 17 | Chile | 0.167 | extradition | 0.094 |
| 18 | his | 0.159 | his | 0.091 |
| 19 | have | 0.152 | London | 0.088 |
| 20 | has | 0.149 | torture | 0.082 |
| 21 | British | 0.127 | human | 0.082 |
| 22 | The | 0.127 | Spain | 0.081 |
| 23 | arrest | 0.120 | judge | 0.080 |
| 24 | who | 0.120 | terrorism | 0.080 |
| 25 | Chilean | 0.105 | rights | 0.079 |
| 26 | Argentina | 0.098 | Castro | 0.076 |
| 27 | by | 0.098 | him | 0.076 |
| 28 | from | 0.098 | he | 0.074 |
| 29 | as | 0.094 | former | 0.072 |
| 30 | with | 0.094 | surgery | 0.070 |
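A small sketch of how normalized TF and TFIDF values of this kind could be computed, using TFIDF = TF*log(N/DF) as defined on the slide. Dividing by the top value (so the best term scores 1.000) is an assumption inferred from the table, and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def normalized_tf(tokens: List[str]) -> Dict[str, float]:
    """Term frequency divided by the frequency of the most frequent term."""
    counts = Counter(tokens)
    if not counts:
        return {}
    top = max(counts.values())
    return {t: c / top for t, c in counts.items()}


def normalized_tfidf(tokens: List[str], doc_freq: Dict[str, int],
                     n_docs: int) -> Dict[str, float]:
    """TF * log(N / DF), divided by the largest such value.

    Terms with no document frequency in the training corpus are skipped.
    """
    counts = Counter(tokens)
    raw = {t: c * math.log(n_docs / doc_freq[t])
           for t, c in counts.items() if doc_freq.get(t, 0) > 0}
    top = max(raw.values(), default=0.0)
    return {t: (v / top if top > 0 else 0.0) for t, v in raw.items()}
```

A term that occurs in every training document gets log(N/DF) = 0, which is why function words such as "the" lead the TF ranking but drop out of the TFIDF ranking.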


Term Significance:

Term Frequency-Based Method

[Figure: DUC 2003 TOPIC 30003, TOP 31 TF TERMS, TF vs. TFIDF; normalized scores (y-axis, 0 to 1) per term for the series TF, TFIDF, and LUHN (Luhn 59)]

Sentence Significance:

Luhn’s Method

[Figure: a SENTENCE whose bracketed cluster spans 7 words (ALL WORDS, numbered 1 to 7), 4 of them marked * as SIGNIFICANT WORDS; SCORE = 4^2/7 = 2.286]

| Sentence | Luhn Score |
|---|---|
| Now , after Garzon succeeded in getting Scotland Yard to [arrest Chile 's former dictator , Gen. Augusto Pinochet , the Spanish ] magistrate has suddenly moved closer to creating an important legal precedent , one that could make the retirement of former dictators decidedly uncomfortable . | 8^2/10=6.400 |
| LONDON ( AP ) _ An influential lawmaker from the governing Labor Party on Saturday backed [ Spanish requests to question former Chilean dictator Gen. Augusto Pinochet , in London for back surgery , on allegations of genocide and terrorism ] . | 11^2/21=5.762 |
| LONDON ( AP ) _ Former [ Chilean dictator Gen. Augusto Pinochet has been arrested by British police on a Spanish extradition warrant , despite protests from Chile that he is entitled to diplomatic immunity ] . | 12^2/27=5.333 |

(Luhn 59)
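The scores above follow the pattern (significant words in the bracketed cluster)^2 / (all words in the cluster), for example 8^2/10 = 6.400. A minimal sketch of that computation follows; the `max_gap` limit on non-significant words inside a cluster is an assumed parameter, and the caller is assumed to supply the set of significant words (lowercase) with punctuation tokens already removed.

```python
from typing import List, Set


def luhn_score(words: List[str], significant: Set[str], max_gap: int = 4) -> float:
    """Best cluster score: (significant words in cluster)^2 / cluster length.

    A cluster starts and ends on a significant word and never contains more
    than `max_gap` consecutive non-significant words (assumed limit).
    """
    positions = [i for i, w in enumerate(words) if w.lower() in significant]
    if not positions:
        return 0.0
    best = 0.0
    start = prev = positions[0]
    count = 1
    for pos in positions[1:]:
        if pos - prev - 1 > max_gap:  # gap too wide: close the current cluster
            best = max(best, count ** 2 / (prev - start + 1))
            start, count = pos, 0
        count += 1
        prev = pos
    return max(best, count ** 2 / (prev - start + 1))
```

Applied to the ten words of the first bracketed cluster, with the eight content words treated as significant, this returns 6.4, matching the first row.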

Web Search:

Text Snippet Significance


Topic Identification

Cohesion-Based

Cohesion-Based Methods

• Claim: Important sentences/paragraphs are the highest connected

entities in more or less elaborate semantic structures.

• Cohesion: Semantic relations that make the text hang together and

provide the texture of the text.

• Types of Cohesion

– Grammatical
• Reference: anaphora, cataphora, and exophora.
• Substitution: nominal (one, ones; same), verbal (do), and clausal (so, not).
• Ellipsis: substitution by zero; something left unsaid but understood.
• Conjunction: additive, adversative, causal, and temporal.

– Lexical
• Lexical Cohesion: reiteration (repetition, synonym, hypernym/hyponym, general word) and collocation.

– Please see “Cohesion in English”, by Halliday & Hasan for more details.

[Slide callouts: Anaphora Resolution, Discourse Parsing, Lexical Chain]

Cohesion Examples

From:

“Alice's Adventures in

Wonderland”

by

Lewis Carroll

—

Example taken from:

“Cohesion in English”

by

Halliday & Hasan

1976

The Cat only grinned when it saw Alice.

‘Come, it’s pleased so far,’ thought

Alice, and she went on.

‘Would you tell me, please, which way

I ought to go from here?’

‘That depends a good deal on where

you want to get to,’ said the Cat.

‘I don’t much care where —’ said Alice.

‘Then it doesn’t matter which way you

go,’ said the Cat.

‘— so long as I get somewhere,’ Alice

added as an explanation.

‘Oh, you’re sure to do that,’ said the

Cat, ‘if you only walk long enough.’


Cohesive-Based Semantic

Network (Skorochod’ko 1971)

[Figure: semantic network topologies labeled chain, ring, monolith, and piecewise; an example network with nodes 1 to 9 and two marked nodes “a” and “b”]

• Sentences are linked by semantic relations.

• Sentence significance, $F_i$, is determined by:

$F_i = N_i \, (M - M_i^{\max})$

– $N_i$ – the degree (# of edges) of a node.
– $M$ – the total number of nodes in a semantic network.
– $M_i^{\max}$ – the maximum number of connected nodes after removal of node i.

• Word significance is determined by the same formula but nodes represent words instead of sentences.

• Summaries are generated by first selecting the most significant sentences then compressing by removing the least significant words.
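A sketch of the significance computation, reading the formula as F_i = N_i * (M - M_i_max), which is reconstructed from the symbol definitions on this slide. M_i_max is taken here to be the size of the largest connected component once node i is removed; the adjacency-set graph representation and the function names are assumptions.

```python
from typing import Dict, Hashable, Set

Graph = Dict[Hashable, Set[Hashable]]  # undirected graph as adjacency sets


def largest_component(graph: Graph, removed: Hashable) -> int:
    """Size of the largest connected component once `removed` is deleted."""
    seen = {removed}
    best = 0
    for root in graph:
        if root in seen:
            continue
        seen.add(root)
        stack, size = [root], 0
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            size += 1
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        best = max(best, size)
    return best


def significance(graph: Graph) -> Dict[Hashable, int]:
    """F_i = N_i * (M - M_i_max) for every node i."""
    m = len(graph)
    return {i: len(graph[i]) * (m - largest_component(graph, i)) for i in graph}


# A five-node chain: 1 - 2 - 3 - 4 - 5
chain = {1: {2}, 2: {1, 3}, 3: {2, 4}, 4: {3, 5}, 5: {4}}
scores = significance(chain)
```

In the chain, the middle node scores highest because removing it splits the network most evenly, while the end nodes score lowest.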


Cohesion: Lexical Chains (1)

• Lexical chains: sequence of semantically related words linked together by lexical cohesion.

• Morris & Hirst (91) proposed the first computational model of lexical chains.
– Lexical cohesion was defined based on categories, index entries, and pointers in Roget’s thesaurus (e.g., synonyms, antonyms, hyper-/hyponyms, and mero-/holonyms).
– Not an automatic algorithm.
– Cover over 90% of intuitive lexical relations.

• Automated later by Hirst & St-Onge (98) using WordNet.

• First applied lexical chains in summarization by Barzilay & Elhadad (B&E 97).

• Similar to Hirst & St-Onge (98) but differed in:
– Use a POS tagger and a shallow parser to identify noun compounds vs. use straight WordNet nouns.
– Identify chains in each text segment then connect only strong chains across segments.
– Try to link words to all possible chains then choose the best chain at the end vs. just link to the best currently available chain.

Lexical Chains-Based Method (2)

• Chain score is computed as follows (B&E 97):

Score(C) = Length(C) - #DistinctOccurrences(C)

• Strong chain criterion:

Score(C) > Average(Scores)+2*SD(Scores)

• Assumes that important sentences are those that are

‘traversed’ by strong chains.

– For each chain, choose the first sentence that is traversed by

the chain and that uses a representative set of concepts from

that chain.
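The chain score and the strong-chain criterion can be sketched as below. Representing a chain as the list of its member-word occurrences and using the population standard deviation are assumptions made for this illustration; the slide does not specify either.

```python
from statistics import mean, pstdev
from typing import Dict, List


def chain_score(occurrences: List[str]) -> int:
    """Score(C) = Length(C) - #DistinctOccurrences(C)."""
    return len(occurrences) - len(set(occurrences))


def strong_chains(chains: Dict[str, List[str]]) -> List[str]:
    """Names of chains with Score(C) > Average(Scores) + 2*SD(Scores)."""
    scores = {name: chain_score(occ) for name, occ in chains.items()}
    values = list(scores.values())
    threshold = mean(values) + 2 * pstdev(values)  # population SD (assumed)
    return [name for name, s in scores.items() if s > threshold]
```

With a population standard deviation, no chain can exceed the threshold unless there are at least six chains, so the criterion only becomes selective on texts that yield many chains.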

| [Jing et al. 98] corpus | Lexical Chains: Recall | Lexical Chains: Prec | Lead-based Baseline: Recall | Lead-based Baseline: Prec |
|---|---|---|---|---|
| 10% cutoff | 67% | 61% | 83% | 63% |
| 20% cutoff | 64% | 47% | 71% | 47% |

Lexical Chains Examples

(DUC 2003 APW19981017.0477)

Augusto Pinochet or Pinochet

Eight years after his turbulent regime ended , former Chilean strongman Gen.

Augusto Pinochet is

being called to account by Spanish authorities for the deaths , detention and torture of political

regimeopponents

or government

or group

.

Eight years

after

his

turbulent

regime warrant

ended ,, British

formerpolice

Chilean

strongman

Gen. they

Augusto

Pinochet is

Responding to a Spanish extradition

announced

Saturday

have arrested

being called

to account

by Spanish

the deaths

, detention

and in

torture

of political

Pinochet

on allegations

that he authorities

murdered anfor

unidentified

number

of Spaniards

Chile between

Sept

opponents

.

11 , 1973

, the year he seized power , and Dec 31 , 1983 .

But Britain

saidruthless

Pinochet

did notwas

havewidely

diplomatic

immunity

or

amnesty

Pinochet

,immunity

whose

regime

criticized

for.its human right record , was recovering

Pinochet

,

whose

ruthless

regime

was

widely

criticized

for

right record

, was recovering

from

from surgery

in asaid

London

clinicprotest

when he

heldauthorities

Friday night

.human that

Chile

it would

towas

British

,its

arguing

the 82-year-old

senator-for-life

has

surgery

in

a

London

clinic

when

he

was

held

Friday

night

.

diplomatic immunity .

In a statement

issued

in Porto

, Portugal

, where

President Eduardo

is attending

theFernando

Ibero American

Scotland Yard

refused

to confirm

Pinochet

's whereabouts

, but hisFrei

Santiago

spokesman

But Britain said Pinochet did not have diplomatic immunity .

summitMartinez

, the Chilean

government

said

it

is

``

filing

a

formal

protest

with

the

British

government

for

said he was in a London clinic when police came for him .

A

regular

visitor

to

Britain

,

Pinochet

underwent

surgery

Oct

9

for

a

herniated

disc

,

a

spinal

disorder

what it considers

a violation

of theindiplomatic

immunity

which President

Sen. Pinochet

''

In a statement

issued

Porto , Portugal

, where

Eduardoenjoys

Frei is .attending

the Ibero American

whichsummit

has given

himChilean

pain and hampered

his walking

months

.

, the

government

said in

it recent

is `` filing

a formal

protest with the British government for

Spanish Foreign Minister Abel Matutes , also attending the Ibero American summit , said his

what

considers

a decisions

violation

of

the

diplomatic

Sen. the

Pinochet

enjoys . ''

In a statement issued in Porto , Portugal , where President Eduardo Frei is attending the Ibero American summit , the Chilean government said it is `` filing a formal protest with the British government for what it considers a violation of the diplomatic immunity which Sen. Pinochet enjoys . ''

Spanish Foreign Minister Abel Matutes , also attending the Ibero American summit , said his government `` respects the decisions taken by courts . ''

British law recognizes two types of immunity : state immunity , which covers heads of state and government members on official visits , and diplomatic immunity for persons accredited as diplomats .

Richard Bunting of the human rights group Amnesty International , which has frequently criticized Pinochet , said the British government was `` under obligation to take legal action '' against him .

While in power he also pushed through an amnesty covering crimes committed before 1978 , when most of his human rights abuses allegedly took place .

It was not immediately clear which clinic was treating Pinochet , who is 83 next week .

Baltasar Garzon , one of two Spanish magistrates handling probes into human rights violations in Chile and Argentina , filed a request to question Pinochet on Wednesday , a day after another judge , Manuel Garcia Castellon , filed a similar petition .

Castellon 's probe into murder , torture and disappearances in Chile during Pinochet 's regime began in 1996 .

Eight years after his turbulent regime ended , former Chilean strongman Gen. Augusto Pinochet is being called to account by Spanish authorities for the deaths , detention and torture of political opponents .

Pinochet is implicated in Garzon 's probe through his involvement in `` Operation Condor , '' in which military regimes in Chile , Argentina and Uruguay coordinated anti-leftist campaigns .

`` It will be the first time this ghastly dictator has faced questions , '' he told Sky television .

Pinochet , the son of a customs clerk who ousted elected President Salvador Allende in a bloody 1973 coup , remained commander in chief of the Chilean army until March , when he was sworn in as a senator-for-life , a post established for him in a constitution drafted by his regime .


Cohesion: Coreference-Based Method

• Build coreference chains (noun/event

identity, part-whole relations) between

– query and document

– title and document

– sentences within document

• Important sentences are those traversed

by a large number of chains:

– a preference is imposed on chains (query > title > doc)

• Evaluation: 67% F-score for relevance

(SUMMAC, 98). (Baldwin and Morton, 98)


Cohesion: Graph-Based Method (1)

(in Multi-document summarization; Mani and Bloedorn 97)

• Map texts into graphs:

– The nodes of the graph are the

words of the text.

– Arcs represent same, adjacency,

phrase, name, coreference, and

alpha link (synonym and

hypernym) relations.

• Seed the text graphs with word

TFIDF weights.

• Given a query (or topic), a

spreading activation algorithm is

used to re-weight the initial

graphs.

• Sentences with high activation weights are assumed to be important.
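The re-weighting step above can be sketched roughly as follows. This is a minimal illustration, not Mani and Bloedorn's implementation: the function names, the `decay` and `steps` parameters, and the fixed boost given to query words are all assumptions made for the example, and the graph is a plain adjacency list that ignores link types.

```python
from collections import defaultdict

def spread_activation(graph, seed_weights, query_words, decay=0.5, steps=2):
    """graph: word -> list of linked words; seed_weights: word -> TFIDF weight.

    Hypothetical sketch: query words get a fixed boost, then activation
    flows along links for a few steps, attenuated by `decay`.
    """
    activation = dict(seed_weights)
    frontier = {w for w in query_words if w in graph}
    for w in frontier:
        activation[w] = activation.get(w, 0.0) + 1.0  # assumed query boost
    for _ in range(steps):
        incoming = defaultdict(float)
        for w in frontier:
            for neighbor in graph.get(w, []):
                incoming[neighbor] += decay * activation[w]
        for neighbor, extra in incoming.items():
            activation[neighbor] = activation.get(neighbor, 0.0) + extra
        frontier = set(incoming)
    return activation

def sentence_scores(sentences, activation):
    """Score each sentence (a list of words) by summing word activations."""
    return [sum(activation.get(w, 0.0) for w in s) for s in sentences]

# Toy graph and seed weights (invented for illustration).
graph = {"pinochet": ["arrest"], "arrest": ["pinochet", "london"],
         "london": ["arrest"], "weather": []}
seeds = {w: 0.1 for w in graph}
activation = spread_activation(graph, seeds, ["pinochet"])
scores = sentence_scores([["pinochet", "arrest"], ["weather"]], activation)
```

Words unconnected to the query keep their seed weight, so sentences about the query topic rise to the top.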


Cohesion: Graph-Based Method (2)

(in Multi-document summarization; Mani and Bloedorn 97)

• Extrinsic information retrieval task evaluation

– 4 TREC topics were used as testing queries:

• 204, 207, 210, and 215

– Corpus: Subset of TREC collection indexed by SMART

• Retain the top 75 documents returned for each query.

• A query-biased summary was created by taking the 5 highest-weighted sentences of every returned document.

– 4 human subjects were asked to rate the relevancy of

the returned documents and their summaries.

Metric          Accuracy (Recall, Precision)   Time (mins)
Full Document   30.25%, 41.25%                 24.65
Summary         25.75%, 48.75%                 21.65


Cohesion: Graph-Based Method (3)

(in Multi-document summarization; Mani and Bloedorn 97)

[Figure callout: adjacent links take collocations into account]


Cohesion: Hyperlink-Based Method (1)

[Figure: paragraph relation map linking paragraph nodes P1 to P9]

• Paragraph Relation Map (PRM):

– Apply automatic inter-document hypertext link generation

method in IR to generate intra-document paragraph or sentence

links. (Salton et al. 91; Salton & Allan 93; Allan 96)

– Compute the cosine similarity between every pair of paragraphs or sentences in a document.

– Paragraphs or sentences with similarity scores higher than an empirically determined cutoff threshold are linked.

• Summaries are generated in three ways:

– Bushy* path: take the topmost N bushy nodes on the map.

– Depth-first path: start from the bushiest node then visit the

next most similar node for the next step.

– Segmented bushy path: segment the text at the least bushy nodes, then apply the bushy path strategy to each segment.

*Bushiness: # of links connecting a node to other nodes on PRM.
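The bushy path strategy can be sketched as below. This is a minimal illustration under stated assumptions: raw term counts stand in for whatever term weighting the original work used, the threshold value of 0.2 is arbitrary, and the function names `cosine`, `relation_map`, and `bushy_path` are invented for the example.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def relation_map(paragraphs, threshold=0.2):
    """Link every pair of paragraphs whose similarity exceeds the cutoff."""
    vecs = [Counter(p.lower().split()) for p in paragraphs]
    links = {i: set() for i in range(len(vecs))}
    for i in range(len(vecs)):
        for j in range(i + 1, len(vecs)):
            if cosine(vecs[i], vecs[j]) > threshold:
                links[i].add(j)
                links[j].add(i)
    return links

def bushy_path(links, n):
    """Take the n bushiest nodes (most links), returned in text order."""
    top = sorted(links, key=lambda i: (-len(links[i]), i))[:n]
    return sorted(top)

# Toy paragraphs (invented for illustration).
paragraphs = [
    "pinochet arrest london",
    "pinochet arrest spain judge",
    "weather sunny today",
    "judge spain request pinochet",
]
links = relation_map(paragraphs)
summary_ids = bushy_path(links, 2)
```

Ties in bushiness are broken here by text position, which is an arbitrary choice for the sketch.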


Cohesion: Hyperlink-Based Method (2)

• Evaluation (Salton et al. 99)

– 50 articles from the Funk and Wagnalls Encyclopedia (Funk & Wagnalls 79).

– 100 extracts (2 per article) were created by 9 human subjects. On average, a 46% overlap was found between two manual extracts.

– Four summary scoring scenarios:

• Optimistic: take the higher of the overlaps with the two human extracts.

• Pessimistic: take the lower of the overlaps with the two human extracts.

• Intersection*: take the overlap with the intersection of the two human extracts.

• Union*: take the overlap with the union of the two human extracts.

Algorithm         Optimistic (%)   Pessimistic (%)   Intersection (%)   Union (%)
Bushy             45.60            30.74             47.33              55.16
Depth-first       43.98            27.76             42.33              52.48
Segmented Bushy   45.48            26.37             38.17              52.95
Random            39.16            22.07             38.47              44.24

*intersection: strict precision; *union: lenient precision
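The four scoring scenarios can be illustrated with a small sketch. The overlap measure used here (the fraction of system-selected units that also appear in the reference, i.e. a precision-style measure, in line with the footnote) is an assumption, as are the function names and the toy extracts.

```python
def overlap(system, reference):
    """Fraction of system-selected units that also appear in the reference."""
    return len(system & reference) / len(system) if system else 0.0

def scenario_scores(system, human1, human2):
    """Score one automatic extract against two human extracts (sets of unit ids)."""
    o1, o2 = overlap(system, human1), overlap(system, human2)
    return {
        "optimistic": max(o1, o2),
        "pessimistic": min(o1, o2),
        "intersection": overlap(system, human1 & human2),
        "union": overlap(system, human1 | human2),
    }

# Toy extracts (invented for illustration).
scores = scenario_scores({1, 2, 3, 4}, {1, 2, 3}, {2, 6})
```

Matching against the intersection only credits units both humans chose, while matching against the union credits anything either human chose.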


Hyperlink-Based Method: Example

(DUC 2003 APW19981017.0477)

[Sentences shown on the slide, which overlays several extracts; listed once each, by sentence number]

(S03) No reason for the dates was given .

(S04) Chile said it would protest to British authorities , arguing that the 82-year-old senator-for-life has diplomatic immunity .

(S05) But Britain said he did not have diplomatic immunity .

(S06) Prime Minister Tony Blair 's office said it was `` a matter for the magistrates and the police . ''

(S08) No hearing date has been set .

(S11) In a statement issued in Porto , Portugal , where President Eduardo Frei is attending the Ibero American summit , the Chilean government said it is `` filing a formal protest with the British government for what it considers a violation of the diplomatic immunity which Sen. Pinochet enjoys . ''

(S14) Spanish Foreign Minister Abel Matutes , also attending the Ibero American summit , said his government `` respects the decisions taken by courts . ''

(S15) British law recognizes two types of immunity : state immunity , which covers heads of state and government members on official visits , and diplomatic immunity for persons accredited as diplomats .

(S19) Richard Bunting of the human rights group Amnesty International , which has frequently criticized Pinochet , said the British government was `` under obligation to take legal action '' against him .

(S22) He has a pacemaker and hearing aid , but is generally in good health .

(S23) Baltasar Garzon , one of two Spanish magistrates handling probes into human rights violations in Chile and Argentina , filed a request to question Pinochet on Wednesday , a day after another judge , Manuel Garcia Castellon , filed a similar petition .

(S24) Castellon 's probe into murder , torture and disappearances in Chile during Pinochet 's regime began in 1996 .

(S26) Pinochet is implicated in Garzon 's probe through his involvement in `` Operation Condor , '' in which military regimes in Chile , Argentina and Uruguay coordinated anti-leftist campaigns .

(S27) Pinochet , the son of a customs clerk who ousted elected President Salvador Allende in a bloody 1973 coup , remained commander-in-chief of the Chilean army until March , when he was sworn in as a senator-for-life , a post established for him in a constitution drafted by his regime .

(S28) While in power he also pushed through an amnesty covering crimes committed before 1978 , when most of his human rights abuses allegedly took place .


Topic Identification: Discourse-Based

Discourse-Based Method

• Claim: The multi-sentence coherence structure of a text can be constructed, and the ‘centrality’ of the textual units in this structure (typically, whether they are nuclei) reflects their importance.

• Tree-like representation of texts in the style of Rhetorical Structure Theory (RST; Mann and Thompson 88).

• Use the discourse (RST) representation in order to

determine the most important textual units.

• Attempts:

– Ono et al. (1994) for Japanese.

– Marcu (1997, 2000) for English

– (see http://www.isi.edu/~marcu/discourse for more information).


RST: A Very Short Overview

(Mann and Thompson 88).

• Rhetorical Relation

(Effective Speaking or Writing)

– A relation holds between two non-overlapping text spans called

nucleus and satellite.

– Nucleus expresses what is more essential to the writer’s purpose

than satellite.

– The nucleus is comprehensible independent of the satellite but

not vice versa.

– Constraints on RST relations and their overall effect enforce text

coherence.

Relation Name: EVIDENCE

Constraints on N: The reader R might not believe the information that is conveyed by the nucleus N to a degree satisfactory to the writer W.

Constraints on S: The reader believes the information that is conveyed by the satellite S or will find it credible.

Constraints on N+S: R's comprehending S increases R's belief of N.

Effect: R's belief of N is increased.

Locus of the Effect: N.

Example: [(N) The truth is that the pressure to smoke in junior high is greater than it will be any other time of one's life:] [(S) we know that 3,000 teens start smoking each day.]


Rhetorical Parsing (1)

http://www.isi.edu/~marcu/discourse

[Energetic and concrete action has been taken in Colombia during the past 60 days against the mafiosi of the drug trade,]1 [but it has not been sufficiently effective,]2 [because, unfortunately, it came too late.]3 [Ten years ago, the newspaper El Espectador,]4 [of which my brother Guillermo was editor,]5 [began warning of the rise of the drug mafias and of their leaders' aspirations]6 [to control Colombian politics, especially the Congress.]7 [Then,]8 [when it would have been easier to resist them,]9 [nothing was done]10 [and my brother was murdered by the drug mafias three years ago.]11 …

[Relation labels shown on the slide: evidence, background]

(Marcu 97)


Rhetorical Parsing (2)

[Figure: RST tree over the numbered text units, with relations Elaboration, Background, Justification, Example, Contrast, Antithesis, Evidence, Cause, and Concession]

Summarization = selection of the most important units + determination of a partial ordering on them

2 > 8 > 3, 10 > 1, 4, 5, 7, 9 > 6
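One way to derive such a partial ordering from a discourse tree is to promote nuclei upward and rank each unit by the shallowest level at which it is promoted. The sketch below illustrates that idea on a toy tree; it is not a reconstruction of the tree on the slide, and the `Node` class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Node:
    unit: Optional[int] = None                        # set on leaves only
    children: List["Node"] = field(default_factory=list)
    nuclei: List[int] = field(default_factory=list)   # indices of nucleus children

def promotion(node):
    """Units promoted to this node: a leaf promotes itself, an internal
    node promotes whatever its nucleus children promote."""
    if node.unit is not None:
        return {node.unit}
    promoted = set()
    for i in node.nuclei:
        promoted |= promotion(node.children[i])
    return promoted

def rank_units(root):
    """Map each unit to the shallowest depth at which it is promoted
    (smaller depth means more important)."""
    best = {}
    def visit(node, depth):
        for unit in promotion(node):
            best.setdefault(unit, depth)  # pre-order: shallowest level is seen first
        for child in node.children:
            visit(child, depth + 1)
    visit(root, 0)
    return best

# Toy tree: unit 2 is the nucleus of (1, 2); that span is the nucleus of the root.
inner = Node(children=[Node(unit=1), Node(unit=2)], nuclei=[1])
root = Node(children=[inner, Node(unit=3)], nuclei=[0])
ranks = rank_units(root)
```

Here the toy ordering is 2 > 3 > 1; units sharing a depth would be tied, which is what makes the ordering partial.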


Discourse Method: Evaluation

(Marcu WVLC-98: using a combination of heuristics for rhetorical parsing disambiguation)

TREC Corpus (4-Fold Cross-validation; 40 Texts; Jing et al 98)

Reduction   Method    Recall   Precision   F-Score
10%         Humans    83.20%   75.95%      79.41%
10%         Program   63.75%   72.50%      67.84%
10%         Lead      82.91%   63.45%      71.89%
20%         Humans    82.83%   64.93%      72.80%
20%         Program   61.79%   60.83%      61.31%
20%         Lead      70.91%   46.96%      56.50%

Scientific American Corpus (5 Texts; Marcu WISTS-97)

Level      Method                  Recall   Precision   F-Score
Clause     Humans                  72.66%   69.63%      71.27%
Clause     Program (training)      67.57%   73.53%      70.42%
Clause     Program (no training)   51.35%   63.33%      56.71%
Clause     Lead                    39.68%   39.68%      39.68%
Sentence   Humans                  78.11%   79.37%      78.73%
Sentence   Program (training)      69.23%   64.29%      66.67%
Sentence   Program (no training)   57.69%   51.72%      54.54%
Sentence   Lead                    54.22%   54.22%      54.22%


Topic Identification: Combining the Evidence

Summary of Identification Methods

• General method: score each sentence;

combine scores; choose best sentence(s)

• Scoring techniques:

– Position in the text: lead method; optimal position

policy; title/heading method

– Cue phrases: in sentences

– Term frequencies: throughout the text

– Cohesion: semantic links among words; lexical

chains; coreference; word co-occurrence

– Discourse structure of the text


Combining the Evidence

• Problem: which extraction methods to use?

• Answer: assume they are independent, and

combine their evidence: merge individual sentence

scores.

• Studies:

– (Edmundson 69): linear combination.

– (Kupiec et al. 95; Aone et al. 97; Teufel & Moens 97):

Naïve Bayes.

– (Mani and Bloedorn 98): SCDF, C4.5, inductive learning.

– (Lin 99): C4.5, linear combination.

– (Marcu 98): rhetorical parsing tuning.


Linear Combination (1)

• Edmundson 69:

– Combination of cue phrase (C), key method (K), title method (T), and position method (P):

$$\mathrm{Score} = a_1 C + a_2 K + a_3 T + a_4 P$$

– A total of 17 experiments were performed to adjust the weights $a_1$, $a_2$, $a_3$, and $a_4$.

– The co-selection ratio between the automatic and the target extracts was used as the evaluation metric.
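As a minimal sketch of the linear combination, the code below scores sentences from four precomputed feature values and keeps the top n in text order. The function names, the feature values, and the weight setting are invented for illustration; the weights actually found in the 17 experiments are not given here.

```python
def edmundson_score(c, k, t, p, weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of cue (C), key (K), title (T), and position (P) scores."""
    a1, a2, a3, a4 = weights
    return a1 * c + a2 * k + a3 * t + a4 * p

def extract(sentences, n, weights):
    """sentences: list of (text, C, K, T, P). Returns the n best texts in text order."""
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: -edmundson_score(*sentences[i][1:], weights=weights),
    )
    return [sentences[i][0] for i in sorted(ranked[:n])]

# Toy feature values (invented). Setting a2 = 0 drops the key method,
# giving a Cue-Title-Position style combination.
sentences = [("s0", 2, 5, 1, 3), ("s1", 0, 9, 0, 0), ("s2", 1, 0, 2, 1)]
selected = extract(sentences, 2, weights=(1.0, 0.0, 1.0, 1.0))
```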


Linear Combination (2)

(Edmundson 69)

[Figure: bar chart of coselection percentage (axis 0 to 80%) for the methods Random, Key, Title, Cue, Position, Cue-Key-Title-Position, and Cue-Title-Position]

Naïve Bayes (1)

• Kupiec, Pedersen, and Chen 95

– Features

• Sentence length cut-off (binary long sentence feature: only

include sentences longer than 5 words)

• Fixed-phrase (binary cue phrase feature: such as “this letter …”,

total 26 fixed phrases)

• Paragraph (binary position feature: the first 10 and the last 5

paragraphs in a document)

• Thematic Word (binary key feature: sentences contain the most

frequent content words or not)

• Uppercase Word (binary orthographic feature: capitalized frequent words that do not occur in sentence-initial position)

– Corpus: 188 OCRed documents and their manual summaries, sampled from 21 scientific/technical domains.


Naïve Bayes (2)

(Kupiec et al. 95)

• Summarization as a sentence ranking process based on the probability that a sentence s would be included in a summary S:

$$P(s \in S \mid F_1, F_2, \ldots, F_k) = \frac{P(F_1, F_2, \ldots, F_k \mid s \in S)\, P(s \in S)}{P(F_1, F_2, \ldots, F_k)} \approx \frac{P(s \in S) \prod_{j=1}^{k} P(F_j \mid s \in S)}{\prod_{j=1}^{k} P(F_j)}$$

– Assuming statistical independence of the features.

– $P(s \in S)$ is a constant (assuming a uniform distribution for all s).

– $P(F_j \mid s \in S)$ and $P(F_j)$ can be estimated from the training set.
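The ranking formula can be sketched for binary features as follows. This is an illustrative toy, not Kupiec et al.'s code: the add-one smoothing is an assumption added to avoid zero probabilities, and the function names and training examples are invented.

```python
def train(examples):
    """examples: list of (features, in_summary), features being a tuple of bools.

    Estimates P(s in S), P(F_j | s in S), and P(F_j) by counting.
    Add-one smoothing is an assumption of this sketch.
    """
    n = len(examples)
    k = len(examples[0][0])
    n_pos = sum(1 for _, y in examples if y)
    prior = n_pos / n
    p_f_given_s = [
        (sum(1 for f, y in examples if y and f[j]) + 1) / (n_pos + 2)
        for j in range(k)
    ]
    p_f = [(sum(1 for f, _ in examples if f[j]) + 1) / (n + 2) for j in range(k)]
    return prior, p_f_given_s, p_f

def score(features, model):
    """P(s in S) * prod_j P(F_j | s in S) / prod_j P(F_j), for observed values."""
    prior, p_f_given_s, p_f = model
    s = prior
    for j, on in enumerate(features):
        num = p_f_given_s[j] if on else 1 - p_f_given_s[j]
        den = p_f[j] if on else 1 - p_f[j]
        s *= num / den
    return s

# Toy training set (invented): feature 0 is predictive, feature 1 is not.
examples = [
    ((True, True), True),
    ((True, False), True),
    ((False, False), False),
    ((False, True), False),
]
model = train(examples)
high = score((True, True), model)
low = score((False, True), model)
```

Sentences are then ranked by this score and the top ones are extracted.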


Naïve Bayes (3)

(Kupiec et al. 95)

Reference Extract Matching Distribution

Direct Sentence Matches       451   79%
Direct Joins                   19    3%
Unmatchable Sentences          50    9%
Incomplete Single Sentences    21    4%
Incomplete Joins               27    5%
Total Manual Summary sents    568

Feature          Individual Sents Correct   Cumulative Sents Correct
Paragraph        163 (33%)                  163 (33%)
Fixed Phrases    145 (29%)                  209 (42%)
Length Cut-off   121 (24%)                  217 (44%)
Thematic Word    101 (20%)                  209 (42%)
Uppercase Word   100 (20%)                  211 (42%)


Performance of Individual Factors

(Lin CIKM 99)


Topic Interpretation

Topic Interpretation

• From extract to abstract: topic interpretation or concept fusion.

[Figure: a long extract condensed into a shorter abstract]

• Experiment (Marcu, 99):

– Got 10 newspaper texts, with human abstracts.

– Asked 14 judges to extract corresponding clauses from texts, to cover the same content.

– Compared word lengths of extracts to abstracts: extract_length ≈ 2.76 × abstract_length !!


Some Types of Interpretation

• Concept Generalization:

Sue ate apples, pears, and bananas → Sue ate fruit

• Meronymy Replacement (Part-Whole):

Both wheels, the pedals, saddle, chain… → the bike

• Script Identification: (Schank and Abelson, 77)

He sat down, read the menu, ordered, ate, paid, and left → He ate at the restaurant

• Metonymy:

A spokesperson for the US Government announced that… → Washington announced that...


General Aspects of Interpretation

• Interpretation occurs at the conceptual level...

…words alone are polysemous (bat → animal and sports instrument) and combine for meaning (alleged murderer ≠ murderer).

• For interpretation, you need world knowledge...

…the fusion inferences are not in the text!

• Little work so far:

(Lin, 95; Radev and McKeown, 98; Reimer

and Hahn, 97; Hovy and Lin, 98).


Form-Based Operations

• Claim: Using IE systems, can aggregate

forms by detecting interrelationships.

1. Detect relationships (contradictions, changes of

perspective, additions, refinements, agreements, trends,

etc.).

2. Modify, delete, aggregate forms using rules (Radev and

McKeown, 98):

Given two forms,

if (the location of the incident is the same and

the time of the first report is before the time of the second report and

the report sources are different and

at least one slot differs in value)

then combine the forms using a contradiction operator.
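The rule above translates almost directly into code. The sketch below is only an illustration of its four conditions: the `Form` fields, the integer report time, and the dictionary returned by `combine` are hypothetical stand-ins, not the template format used by Radev and McKeown.

```python
from dataclasses import dataclass

@dataclass
class Form:
    location: str       # location of the incident
    report_time: int    # time of the report (any comparable value)
    source: str         # reporting source
    slots: dict         # remaining slot name -> value

def is_contradiction(first, second):
    """True when all four conditions of the rule hold for the two forms."""
    slot_names = set(first.slots) | set(second.slots)
    return (
        first.location == second.location
        and first.report_time < second.report_time
        and first.source != second.source
        and any(first.slots.get(k) != second.slots.get(k) for k in slot_names)
    )

def combine(first, second):
    """Apply the contradiction operator if the rule fires, else return None."""
    if is_contradiction(first, second):
        return {"operator": "contradiction", "forms": [first, second]}
    return None

# Toy forms (invented values).
f1 = Form("Baghdad", 1, "source A", {"casualties": "none reported"})
f2 = Form("Baghdad", 2, "source B", {"casualties": "3 killed"})
f3 = Form("Baghdad", 3, "source A", {"casualties": "none reported"})
result = combine(f1, f2)
```

Forms from the same source, such as `f1` and `f3`, do not trigger this operator.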


Inferences in Terminological Logic

• ‘Condensation’ operators (Reimer & Hahn 97).

1. Parse text, incrementally build a terminological rep.

2. Apply condensation operators to determine the salient

concepts, relationships, and properties for each

paragraph (employ frequency counting and other

heuristics on concepts and relations, not on words).

3. Build a hierarchy of topic descriptions out of salient

constructs.

• Conclusion: No evaluation.


Concept Generalization: Wavefront

• Claim: Can perform concept generalization, using WordNet (Lin, 95).

• Find most appropriate summarizing concept:

[Figure: WordNet fragment with scored concepts Calculator, Computer, PC, IBM, Mac, Dell, Cash register, and Mainframe]

1. Count word occurrences in text; score WN concepts

2. Propagate scores upward

3. R = Max{scores} / Σ scores

4. Move downward until no obvious child: R < Rt

5. Output that concept
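The five steps can be sketched on a toy taxonomy as below. This is a simplified illustration: a plain dictionary stands in for WordNet, the counts are invented rather than read from the figure, the ratio is taken over a node's children, and the descent starts at the root rather than at a fixed start depth.

```python
def propagate(children, counts, root):
    """Steps 1-2: subtree weight = own count plus the counts of all descendants."""
    weights = {}
    def walk(node):
        w = counts.get(node, 0) + sum(walk(c) for c in children.get(node, []))
        weights[node] = w
        return w
    walk(root)
    return weights

def wavefront(children, counts, root, rt=0.67):
    """Steps 3-5: descend while one child dominates (R >= rt), then output."""
    weights = propagate(children, counts, root)
    node = root
    while children.get(node):
        kids = children[node]
        total = sum(weights[k] for k in kids)
        if total == 0:
            break
        best = max(kids, key=lambda k: weights[k])
        if weights[best] / total < rt:
            break  # no obvious child: this concept generalizes its children
        node = best
    return node

# Toy taxonomy and word counts (invented for illustration).
children = {"Computer": ["PC", "Mainframe"], "PC": ["IBM", "Mac", "Dell"]}
counts = {"IBM": 6, "Mac": 5, "Dell": 5, "Mainframe": 2}
concept = wavefront(children, counts, "Computer")
```

With these counts PC dominates Mainframe, so the walk moves down to PC; below PC no brand dominates, so PC is output as the summarizing concept.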


Wavefront Evaluation

• 200 BusinessWeek articles about computers:

– typical length 750 words (1 page).

– human abstracts, typical length 150 words (1 par).

– several parameters; many variations tried.

• Rt = 0.67; StartDepth = 6; Length = 20%:

            Random    Wavefront
Precision   20.30%    33.80%
Recall      15.70%    32.00%

• Conclusion: need more elaborate taxonomy.


Topic Signatures (1)

• Claim: Can approximate script

identification at lexical level, using

automatically acquired ‘word families’ (Lin

and Hovy COLING 2000).

• Idea: Create topic signatures: each

concept is defined by frequency

distribution of its related words (concepts):

signature = {head (c1,f1) (c2,f2) ...}

restaurant ← waiter + menu + food + eat...

• (inverse of query expansion in IR.)


Example Signatures

RANK   aerospace   banking         environment     telecommunication
1      contract    bank            epa             at&t
2      air_force   thrift          waste           network
3      aircraft    banking         environmental   fcc
4      navy        loan            water           cbs
5      army        mr.             ozone           pbs
6      space       deposit         state           bell
7      missile     board           incinerator     long-distance
8      equipment   fslic           agency          telephone
9      mcdonnell   fed             clean           telecommunication
10     northrop    institution     landfill        mci
11     nasa        federal         hazardous       mr.
12     pentagon    fdic            acid_rain       doctrine
13     defense     volcker         standard        service
14     receive     henkel          federal         news
15     boeing      banker          lake            turner
16     shuttle     khoo            garbage         station
17     airbus      asset           pollution       nbc
18     douglas     brunei          city            sprint
19     thiokol     citicorp        law             communication
20     plane       billion         site            broadcasting
21     engine      regulator       air             broadcast
22     million     national_bank   protection      programming
23     aerospace   greenspan       violation       television
24     corp.       financial       management      abc
25     unit        vatican         reagan          rate

Download topic signatures for all WordNet 1.6 noun senses (Agirre et al. LREC 2004):

http://ixa.si.ehu.es/Ixa/resources/sensecorpus


Topic Signatures (2)

• Experiment: created 30 signatures from 30,000

Wall Street Journal texts, 30 categories:

– Used tf.idf to determine uniqueness in category.

– Collected most frequent 300 words per term.

• Evaluation: classified 2204 new texts:

– Created document signature and matched against all

topic signatures; selected best match.

• Results: Precision 69.31%; Recall 75.66%

– 90%+ for top 1/3 of categories; rest lower, because

less clearly delineated (overlapping signatures).


Evaluating Signature Quality

• Test: perform text categorization task:

1. match new text’s ‘signature’ against topic signatures

2. measure how correctly texts are classified by

signature

• Document Signature (DSi):

[ (ti1,wi1), (ti2,wi2), … ,(tin,win) ]

• Similarity measure:

– cosine similarity: $\cos\theta = \dfrac{TS_k \cdot DS_i}{|TS_k|\,|DS_i|}$

DATA   RECALL   PRECISION      DATA   RECALL   PRECISION
7WD    0.847    0.752          8WD    0.803    0.719
7TR    0.844    0.739          8TR    0.802    0.710
7PH    0.843    0.748          8PH    0.797    0.716
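The categorization test can be sketched as a nearest-signature lookup under cosine similarity. The signatures and weights below are invented for illustration (real signatures would hold a few hundred weighted terms), and the function names are hypothetical.

```python
import math

def cosine(ts, ds):
    """Cosine similarity between a topic signature and a document signature,
    both given as term -> weight dictionaries."""
    dot = sum(w * ds[t] for t, w in ts.items() if t in ds)
    norm_ts = math.sqrt(sum(w * w for w in ts.values()))
    norm_ds = math.sqrt(sum(w * w for w in ds.values()))
    return dot / (norm_ts * norm_ds) if norm_ts and norm_ds else 0.0

def classify(doc_sig, topic_sigs):
    """Return the topic whose signature is most similar to the document's."""
    return max(topic_sigs, key=lambda topic: cosine(topic_sigs[topic], doc_sig))

# Toy signatures (invented weights).
topic_sigs = {
    "banking": {"bank": 3.0, "loan": 2.0, "thrift": 1.0},
    "aerospace": {"aircraft": 3.0, "nasa": 2.0, "contract": 1.0},
}
doc_sig = {"bank": 1.0, "loan": 1.0, "rate": 1.0}
label = classify(doc_sig, topic_sigs)
```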


Summary Generation

NL Generation for Summaries

• Level 1: no separate generation

– Produce extracts, verbatim from input text.

• Level 2: simple sentences

– Assemble portions of extracted clauses together.

• Level 3: full NLG

1. Sentence Planner: plan sentence content, sentence

length, theme, order of constituents, words chosen...

(Hovy and Wanner, 96)

2. Surface Realizer: linearize input grammatically (Elhadad,

92; Knight and Hatzivassiloglou, 95).


Full Generation Example

• Challenge: Pack content densely!

• Example (Radev and McKeown, 98):

– Traverse templates and assign values to ‘realization

switches’ that control local choices such as tense and

voice.

– Map modified templates into a representation of

Functional Descriptions (input representation to

Columbia’s NL generation system FUF).

– FUF maps Functional Descriptions into English.


Generation Example

(Radev and McKeown, 98)

NICOSIA, Cyprus (AP) – Two bombs exploded near government

ministries in Baghdad, but there was no immediate word of any

casualties, Iraqi dissidents reported Friday. There was no independent

confirmation of the claims by the Iraqi National Congress. Iraq’s

state-controlled media have not mentioned any bombings.

[Slide annotations: multiple sources and disagreement; explicit mentioning of “no information”]


Fusion at Syntactic Level

• General Procedure:

1. Identify sentences with overlapping/related content,

2. Parse these sentences into syntax trees,

3. Apply fusion operators to compress the syntax trees,

4. Generate sentence(s) from the fused tree(s).

[Figure: syntax trees with root X and constituents A, B, C, D being fused into a single tree]

Syntax Fusion (1)

(Barzilay, McKeown, and Elhadad, 99)

• Parse tree: simple syntactic dependency notation

DSYNT, using Collins parser.

• Tree paraphrase rules derived through corpus

analysis cover 85% of cases:

– sentence part reordering,

– demotion to relative clause,

– coercion to different syntactic class,

– change of grammatical feature: tense, number, passive, etc.,

– change of part of speech,

– lexical paraphrase using synonym, etc.

• Compact trees mapped into English using FUF.

• Evaluate the fluency of the output.


Syntax Fusion (2)

(Mani, Gates, and Bloedorn, 99)

• Elimination of syntactic constituents.

• Aggregation of constituents of two sentences

on the basis of referential identity.

• Smoothing:

– Reduction of coordinated constituents.

– Reduction of relative clauses.

• Reference adjustment.

Evaluation:

– Informativeness.

– Readability.


Text Summarization: Information Extraction-Based

Information Extraction Method

• Idea: content selection using forms (templates)

– Predefine a form, whose slots specify what is of interest.

– Use a canonical IE system to extract from a (set of) document(s) the

relevant information; fill the form.

– Generate the content of the form as the summary.

• Previous IE work:

– FRUMP (DeJong, 79): ‘sketchy scripts’ of terrorism, natural disasters,

political visits...

– McFRUMP (Mauldin, 89): forms for conceptual IR.

– SCISOR (Rau and Jacobs, 90): forms for business.

– SUMMONS (McKeown and Radev, 95): forms for news.

– GisTexter (Harabagiu et al., 03): Traditional IE + dynamic templates

based on WordNet.


IE-Based Summarization

• Topic Identification

– A template defines the topic of interest and important facts associated with the topic. This also defines the coverage of an IE-based summarizer.

– Problem: how to select a relevant template? (i.e. the frame

initiation problem)

• Topic Interpretation

– Problem: how to fill template slots and merge multiple

templates?

• Generation

– Problem: how to generate summaries from the filled and merged

templates?


FRUMP (DeJong 1979)

• Domain: General news

• Input: UPI news stories

• Output: summaries (single doc)

• Template: 60 sketchy scripts that contain important events in a situation.

– Meeting, earthquake, theft, war, …

– Represented as conceptual dependency (CD)

• Encode constraints on script variables, between script variables, and between scripts.

• Ex: John went to New York. ((ACTOR (*JOHN*) <=> (*PTRANS*)) OBJECT (*JOHN*) TO (*NEW YORK*))


FRUMP (cont.)

• Vocabulary size: 2,300 root words plus

morphological rules.

• Interpretation Mechanism

– PREDICTOR initiates candidate scripts.

– SUBSTANTIATOR validates the script slots.

• Evaluation

– A 6-day run, from April 10 to 15, 1980.

– Lenient: recall 63%, precision 80%

– Strict: recall 43%, precision 54%


FRUMP (cont.)

• Three ways to activate sketchy scripts:

– Explicit: a word or phrase that identifies an

entire script can activate the script.

• Ex: ARREST

– Implicit: invocation of a script activates a

related script.

• Ex: CRIME => ARREST

– Event-inducing: a central event of a script can

activate the script. (key request)

• Ex: police apprehended someone => ARREST
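The three activation routes can be illustrated with a toy lookup. Everything in this sketch is hypothetical: the tables `EXPLICIT`, `IMPLICIT`, and `EVENT_INDUCING` hold only the slide's ARREST example, and matching on bare tokens stands in for FRUMP's conceptual-dependency analysis.

```python
# Hypothetical trigger tables built from the slide's ARREST example.
EXPLICIT = {"arrest": "ARREST", "arrested": "ARREST"}      # word names a script
IMPLICIT = {"CRIME": ["ARREST"]}                           # script -> related scripts
EVENT_INDUCING = {("police", "apprehended"): "ARREST"}     # central event -> script

def activate(tokens, active_scripts=()):
    """Return the set of scripts activated by a tokenized sentence."""
    words = {t.lower() for t in tokens}
    found = set()
    # Explicit: a word that identifies an entire script.
    for w in words:
        if w in EXPLICIT:
            found.add(EXPLICIT[w])
    # Implicit: an already active script invokes a related script.
    for script in active_scripts:
        found.update(IMPLICIT.get(script, []))
    # Event-inducing: the central event of a script appears in the text.
    for (actor, action), script in EVENT_INDUCING.items():
        if actor in words and action in words:
            found.add(script)
    return found

explicit_hit = activate("He was arrested on Friday".split())
implicit_hit = activate("The suspect fled".split(), active_scripts=["CRIME"])
event_hit = activate("Police apprehended someone".split())
```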


McFRUMP (Mauldin 1989)

• Domain: FRUMP + Astronomy (5 scripts)

• Input: UseNet news; Output: case frame (CD)


*Mauldin 91


McFRUMP (cont.)

• Vocabulary size: 3,369 nouns, 2,148 names, and 376

basic verbs + synonyms from Webster’s 7 (28,323 word

senses) + near synonyms, and proper name rules.

• Interpretation Mechanism

– PREDICTOR initiates candidate scripts.

– SUBSTANTIATOR validates the script slots.

• Evaluation

– Use as a component in FERRET, a conceptual information

retrieval system.

– Measured on a test corpus of 1,065 astronomy texts with recall

52% and precision 48%.


SCISOR (Rau & Jacobs 1990)

• Domain: Merger & Acquisition (M&A)

• Input: Dow Jones News + Queries

• Output: Answers

• Template: M&A (1?)

• Vocabulary size: 10,000 word roots + 75 affixes and a core concept hierarchy of 1,000 nodes.

• Interpretation Mechanism

– TRUMPET top down processor matches bottom up output with

expectations.

– TRUMP bottom up processor parses input into frame-like

semantic representation.


SCISOR (cont.)

• Evaluation: NA


SUMMONS (McKeown & Radev 95)

• Domain: Terrorist (MUC-4)

• Input: Filled IE templates + Sources + Times

• Output: Automatically generated English

summary of a few sentences (abstract)

• Template: Terrorist (1?, 25 fields)

• Vocabulary size: Large? (based on FUF,

Functional Unification Formalism, Elhadad 91)

• Interpretation Mechanism

– Summarization is achieved through a series of

template merging using 7+1 major summary

operators.


SUMMONS (cont.)

• Evaluation: NA

• Goal: Generate paragraph summaries describing the change of a single event over time or a series of related events.

– Assume all the input templates are about the same event. (a strong assumption!)

– Focus on similarity and difference of sources, times, and slot values.

[Figure: SUMMONS architecture. Input: cluster of templates T1, T2, …, Tm. Components: conceptual combiner (combiner, paragraph planner) with domain ontology and planning operators; linguistic realizer (sentence planner, lexical chooser, sentence generator) with lexicon and SURGE. Output: base summary]

SUMMONS Operators (Topic Interpretation)

*TxSi : slot i of template x; ∪: union; ⊂ : specialization


GisTexter (Harabagiu et al. 03)

(An End-to-end IE-based Summarizer)

• Domain: General

• Input: General News

• Output: summaries (extract) with sentence reduction

• Template: Natural Disasters (1?) + ad hoc template from WordNet topical relations. (match 40 out of 59 DUC 2002 topics)