Command Management System For Next

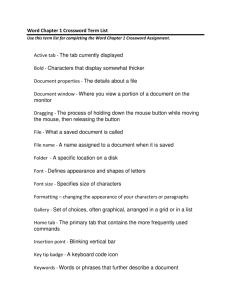


Designing Systems for Next-Generation I/O Devices
Mitchell Tsai, Peter Reiher, Jerry Popek
UCLA
May 20, 1999

Problem
• Next-generation I/O performs poorly with existing applications and operating systems.
  – Examples of next-generation sensors/actuators
    • Speech, vision, handwriting, physical location…
  – AI meets real general-purpose systems.
    • Not in the sandbox anymore!
  – What should OSs provide for these technologies?

Current Systems
[Diagram: Keyboard & Mouse → GUI Interface → OS & Applications. Requires 100% accuracy in critical situations; one input at a time, from one source.]
[Diagram: Sounds + Grammar → Speech Recognition Engine → Best Phrase (“Make the text blue”) → Speech Enabler → Command (TextRange.Font.Color = ppAccent1) → OS & Applications. 80–99% accuracy.]

Noise & Errors
• Existing metrics (accuracy & speed) are not good enough.
• Dictation: 99% accuracy at 150 wpm, with 10–40 sec/error ⇒ at least 20% of time spent correcting errors!

Type              Time (sec)   Speed (wpm)   % Total Time
Tspeech                   38           160            16%
Tdelay                    33            85            14%
Tcorrections             131            30            57%
Tproof-reading            29            26            13%
Ttotal                   230            26           100%

Ttotal = Tspeech + Tdelay + Tcorrections + Tproof-reading

Command & Control Errors
1) Most programs have no Undo capability
2) One-keystroke loss
  – Cancel in MS Money
  – Paste instead of Copy on PalmPilot
3) Undo requires advanced knowledge
  – MS Word accidental shift to outline mode
4) Undo is inconsistent between programs
  – One text selection (Outlook Mail) or two (Netscape Mail)

From Dictation to Commands
• Commands are worse than dictation
  – Con: Errors can be irreversible and/or dangerous
  – Con: Dictation delays processing to increase accuracy
  – Pro: Smaller grammars produce higher accuracy
• Error handling is “ad hoc” & insufficient
  – Handled twice, by sensor processor & application
  – Programmers design custom interfaces (or programs!)
  – Users confused by inconsistencies
• How to leverage new inputs?
  – Context-sensitive and ambiguous commands

Outline
• Problems of Next-Generation Sensors
• BabySteps: Some Dialogue Management Services
• Related Work
• Design Issues for Post-GUI Environments

Next-Generation Sensors
• Direct – speech, handwriting, vision (eye gaze, pointing, gesture)
• Indirect – vision (head and eye focus), geographic location, identification badges, emotions (affective computing)
• Traditional – network connectivity, computer resources

4 Main Problems of Next-Generation Sensors
1) Noise – “Make this b… red”, sporadic incorrect GPS readings
2) Errors – Accidental user errors, sensor processor mistakes
3) Ambiguity – “Make this box red”: Which box?
4) Fragmentation – Simultaneous inputs from speech, pointing, & vision

Sequences of Errors
• Series of commands
  – “cd thisdir; mv foo ..; rm *”
• Linear Undo Stack problems
  – Accidentally undo a few operations (X, Y, Z)
  – Type “A”
  – Lose all operations on the stack (X, Y, Z)
• Quit without Save, Accidental Command Mode
  – Oops! Confirmed a “Yes/No/Cancel” box.

BabySteps: Some Dialogue Management Services
• Command Manager
  – Command Services
  – Command Properties
PowerPoint: Context-Sensitive Speech & Mouse
• Context Manager
  – Analyze Behavior Patterns
  – Explicit Contexts (Internal, Dialogue, and External)
• Communicating Ambiguous Information
  – Probabilistic
  – Richer, Task-based, Annotated

BabySteps
[Diagram: components Sounds, Speech Interpreter, Command Processing, Command Processing Modules, Context Management, OS & Applications; labels “Dangerous commands”, “Safe commands”, “Grammar for context 7”, “Command Properties for context 7”, “We are in context 7 now.”]

Command Management
1) Command Services must be provided by OS
  – Recording, editing, filtering, ...
2) Command Properties must be communicated to OS
  – Ambiguous, context-sensitive events (from sensors)
  – Safety, reversibility, usage patterns, cost (from applications)
3) Command Processing Modules
  – Safety Filter, Usage Tracker, Cost Evaluator

How Speech Recognition Works
[Diagram: candidate phrases for one utterance: “I’ll of view”, “Aisle loathe you”, “I loathe you”, “I love you”, “I love Hugh”. Acoustic Model best match: “I loathe you”. Language Model best match: “I love you”. Topic Model best match: “I love Hugh” (best in a different context).]
• 4 models in current systems: Acoustic, Language, Vocabulary, Topic

Methods for Better Accuracy
• Speech engines can produce scored output: Score(Phrase | Sound) = −100 to 100
• Combine sensor information with application or OS information using likelihoods (L):

$$L(\text{Command} \mid \text{Sound}, \text{Context}) = L(\text{Command} \mid \text{Context}) \cdot L(\text{Command} \mid \text{Phrase}, \text{Context}) \cdot L(\text{Phrase} \mid \text{Sound})$$

  where

$$L(A) = \frac{F(A)}{\sum_{A'} F(A') - F(A)}$$

  and F(A) can be P(A) or some other scoring function.

Explicit Contexts From User Behavior Analysis
• Example:
  – Context A = a priori probabilities for “editing” commands
  – Context B = a priori probabilities for “viewing” commands
• Other types of explicit contexts
  – Variations on Least Recently Used (LRU)
  – Simple Markov Models
  – Hidden Markov Models (HMMs)
  – Bayesian Networks

Probabilistic Context-Sensitive Events
• High-level events: Select “box 3”, “line 4”, and “box 10”
• Mid-level events: 90% Region X, 10% Region Y
• Low-level events: Fuzzy mouse movement

Probabilistic Objects in Events
“Thicken”
  Type = Speech
  PClarification = 0.6
  NCommands = 3
  Command[1] = “Thicken line 11”, L[1] = 0.61
  Command[2] = “Thicken line 13”, L[2] = 0.24
  Command[3] = “Quit”, L[3] = 0.15

User Clarification
• Consider PClarification, the probability that we should clarify the command with the user:

$$P_{\text{Clarification}} = \left[1 - L(\text{Command}_{ML}, \text{Context})\right] \cdot L_{\text{Reversible}}(\text{Command}_{ML}, \text{Context}) \cdot L_{\text{Cost}}(\text{Command}_{ML}, \text{Context})$$

  CommandML is the Most Likely command.
  LReversible = 0 to 1 (1 means fully reversible)
  LCost = 0 to 1 (a normalized version of cost)
• Reversibility and cost can reduce the seriousness of errors, but they may increase the total time required to finish a task!
• What is the relative utility of different types of clarification?

BabySteps: Additional Factors
• Performance Evaluation
  – Error Hierarchy
  – New Commands
  – “Ambiguity is a Strength, not a Problem”
• “Transparency is not the best policy.”
  – How to get feedback from the user?
    • Passive/Active
  – Different types of “Cancel”
    • “Oops”, “Wrong”, “Backtrack”

Application Performance: Error Types
• Desired Effect
• Inaction
• Confirmation
• Minor
  – Undoable
• Medium
  – Fixable (1 command)
  – Fixable (Few commands)
  – Unrecoverable (Many commands)
• Major
  – Exit without Save, Application Crash/Freeze
[Chart: percentages for each error type]

Extended Benefits for Applications
[Diagram: Sound → Speech Interpreters → Command Processing (Command Processing Modules) → OS & Apps; “Ambiguity & Context = Convenience”]
• Mouse: Fuzzy Pointing
• Combining speech & mouse commands
  – Speech: “Make these arrows red.”
  – Mouse: Move around arrows and other objects.

Ambiguity can be a Strength
• Ambiguity is usually considered a problem.
  – If the user makes a precise command, and sensors provide perfect interpretation, then the application should know exactly what to do.
• Exact precision by the user may be impossible or extremely time-consuming. Consider PowerPoint:
  – Moving the cursor to change modes
    • Select Object / Move Object / Resize Object / Copy Object
  – Selecting objects (and groups of objects)
    • Very close and/or overlapping (esp. with invisible boundaries)
    • From layers of different groups
  – Making object A identical with object B in size, shape, color, etc.
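The dictation numbers on the “Noise & Errors” slide can be checked with a little arithmetic. This sketch assumes that “time correcting errors” means correction time as a share of speaking plus correcting time (ignoring delay and proof-reading): 150 wpm at 99% accuracy gives 1.5 errors per minute, so 10 sec/error means 15 s of fixes per 60 s of speech, or 15/75 = 20%. It also recomputes the table's “% Total Time” column from the four component times, which sum to 231 s. The helper names are hypothetical.

```python
# Hypothetical helpers for checking the "Noise & Errors" arithmetic.

PHASES = [  # (component, seconds) from the dictation timing table
    ("Tspeech", 38),
    ("Tdelay", 33),
    ("Tcorrections", 131),
    ("Tproof-reading", 29),
]


def percent_of_total(phases):
    """Return Ttotal and each component's rounded share of it, in percent."""
    total = sum(seconds for _, seconds in phases)
    shares = {name: round(100 * seconds / total) for name, seconds in phases}
    return total, shares


def correction_share(wpm, accuracy, sec_per_error):
    """Fraction of (speaking + correcting) time spent fixing errors.

    Assumption: one minute of speech at `wpm` words per minute produces
    wpm * (1 - accuracy) errors, each costing `sec_per_error` seconds to fix.
    """
    errors_per_minute = wpm * (1 - accuracy)
    fixing_seconds = errors_per_minute * sec_per_error
    return fixing_seconds / (60 + fixing_seconds)


total, shares = percent_of_total(PHASES)
print(total, shares)
print(round(correction_share(150, 0.99, 10), 2))
print(round(correction_share(150, 0.99, 40), 2))
```

The recomputed shares (16%, 14%, 57%, 13%) match the table, and under the same assumption 40 sec/error would push the correction share to 50%.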
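The likelihood combination on the “Methods for Better Accuracy” slide can be sketched in a few lines. This assumes non-negative scores F (probabilities here; an engine Score in the −100 to 100 range would first need rescaling) and, as a simplification, that each recognized phrase maps to exactly one candidate command. The candidate commands reuse the “Thicken” example, but every score is invented for illustration, and the function names are hypothetical.

```python
# A sketch of the "Methods for Better Accuracy" likelihood combination.
# All candidate commands and scores below are hypothetical.
from math import prod


def likelihood(scores, key):
    """L(A) = F(A) / (sum of F over all alternatives - F(A)).

    With F = P this is the odds of A against every other alternative.
    """
    f = scores[key]
    rest = sum(scores.values()) - f
    return float("inf") if rest <= 0 else f / rest


def combine(context, phrase_in_context, sound):
    """L(Command | Sound, Context) for every candidate command.

    Each argument maps a candidate command to its F score for one factor:
    F(Command | Context), F(Command | Phrase, Context), F(Phrase | Sound).
    Simplification: each recognized phrase maps to exactly one command.
    """
    factors = (context, phrase_in_context, sound)
    return {cmd: prod(likelihood(f, cmd) for f in factors) for cmd in context}


context = {"Thicken line 11": 0.5, "Thicken line 13": 0.3, "Quit": 0.2}
phrase_in_context = {"Thicken line 11": 0.6, "Thicken line 13": 0.3, "Quit": 0.1}
sound = {"Thicken line 11": 0.4, "Thicken line 13": 0.4, "Quit": 0.2}

combined = combine(context, phrase_in_context, sound)
best = max(combined, key=combined.get)
print(best, round(combined[best], 2))
```

With these made-up scores “Thicken line 11” has the highest combined likelihood. Because every factor is an odds-style ratio, a command that is weak on any single factor (such as “Quit” here) is pushed down sharply.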
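The “Explicit Contexts” slide lists simple Markov models as one way to turn observed user behavior into a priori command probabilities, i.e. the L(Command | Context) term in the likelihood combination. A minimal first-order sketch with add-one (Laplace) smoothing might look like this; the class name and the command names are hypothetical.

```python
# Hypothetical first-order Markov model of a user's command stream.
from collections import Counter, defaultdict


class CommandMarkovModel:
    """Estimates P(next command | previous command) from observed history."""

    def __init__(self, smoothing=1.0):
        self.transitions = defaultdict(Counter)  # prev -> Counter of next commands
        self.vocabulary = set()
        self.smoothing = smoothing  # add-k smoothing so unseen commands keep some mass

    def observe(self, history):
        """Count each consecutive (previous, next) pair in a command sequence."""
        for prev, nxt in zip(history, history[1:]):
            self.transitions[prev][nxt] += 1
        self.vocabulary.update(history)

    def prior(self, previous):
        """A priori distribution over the next command, given the last one."""
        counts = self.transitions.get(previous, Counter())
        denom = sum(counts.values()) + self.smoothing * len(self.vocabulary)
        return {cmd: (counts[cmd] + self.smoothing) / denom for cmd in self.vocabulary}


model = CommandMarkovModel()
model.observe(["select", "bold", "select", "bold", "select", "italic"])
print(sorted(model.prior("select").items()))
```

Keeping one such model per explicit context (for example, one trained on “editing” sessions and one on “viewing” sessions) would give the Context A / Context B priors the slide describes.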
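The “Probabilistic Objects in Events” and “User Clarification” slides can be combined into a small sketch: an event carries its candidate commands with their L values, and the clarification probability is computed for the most likely one using the PClarification formula exactly as written on the slide. Assumptions: the L values are on the 0-to-1 scale used in the “Thicken” example, the LReversible and LCost values are made up, and the threshold-based `should_clarify` policy is hypothetical rather than part of BabySteps.

```python
# Hypothetical encoding of a probabilistic event and the PClarification rule.
from dataclasses import dataclass, field


@dataclass
class ProbabilisticEvent:
    """Mirrors the fields on the "Probabilistic Objects in Events" slide."""
    label: str
    kind: str  # the slide's "Type" field, e.g. "Speech"
    commands: list = field(default_factory=list)  # (command text, L) pairs

    @property
    def n_commands(self):
        return len(self.commands)

    def most_likely(self):
        return max(self.commands, key=lambda pair: pair[1])


def p_clarification(l_ml, l_reversible, l_cost):
    """PClarification = [1 - L(CommandML, Context)] * LReversible * LCost, as on the slide.

    Assumes all three inputs are already on the 0-to-1 scale the slide uses.
    """
    for value in (l_ml, l_reversible, l_cost):
        if not 0.0 <= value <= 1.0:
            raise ValueError("inputs must lie in [0, 1]")
    return (1.0 - l_ml) * l_reversible * l_cost


def should_clarify(p, threshold=0.1):
    """Hypothetical policy: ask the user when PClarification exceeds a threshold."""
    return p > threshold


event = ProbabilisticEvent(
    label="Thicken",
    kind="Speech",
    commands=[("Thicken line 11", 0.61), ("Thicken line 13", 0.24), ("Quit", 0.15)],
)
command, l_ml = event.most_likely()
p = p_clarification(l_ml, l_reversible=0.5, l_cost=0.8)  # hypothetical property values
print(event.n_commands, command, round(p, 3), should_clarify(p))
```

Here the most likely command is “Thicken line 11” (L = 0.61), so PClarification = 0.39 × 0.5 × 0.8 = 0.156.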
BabySteps Summary
• New sensors & user inputs present a family of problems
  – Noise, Errors, Ambiguity, Fragmentation
• BabySteps: Some Dialogue Management Services
  1) Command Management - Command Services & Command Properties
  2) Context Management - Analyze Behavior Patterns, Explicit Contexts
  3) Communicate Ambiguous Information - Probabilistic, Richer
• Performance Evaluation
  – New Metrics: Total Task Time, Error Hierarchy
  – New Commands: Will they pass the usability threshold?
  – Transparency vs. Communication (User Feedback & Control)
  – Ambiguity is a Strength

BabySteps approach to 4 Main Problems
1) Noise
  – Facilitate closer interaction between sensor processors & applications
  – Reduce impact of errors through command & context management
2) Errors
  – Use user behavior analysis to detect, fix, and/or override errors
  – Ask user for help based on context and command properties
3) Ambiguity
  – Limited context-sensitive speech and mouse
4) Fragmentation
  – Probabilistic, temporal multimodal grammars not handled yet

Related Work
• Context-Handling Infrastructures
  – Context Toolkit: Georgia Tech
    • Provides context widgets for reusable solutions to context handling [Salber, Dey, Abowd 1998, 1999].
• Multimodal Architectures (Human-Computer Interfaces)
  – QuickSet: Oregon Graduate Institute
    • First robust approach to a reusable, scalable architecture that integrates gesture and voice [Cohen, Oviatt, et al. 1992, 1997, 1999].
• Context Advantages for Operating Systems
  – File System Actions: UC Santa Cruz
    • Uses Prediction by Partial Match (PPM) to track sequences of file system events for a predictive cache [Kroeger 1996, 1999].

Related Work
• CHI-99
  – “Nomadic Radio: Scaleable and Contextual Notification for Wearable Audio Messaging”: MIT
    • Priority, Usage Level, & Conversations [Sawhney, Schmandt 1999].
  – LookOut, “Principles of Mixed-Initiative User Interfaces”: MSFT
    • Utility of Action vs. Non-action vs. Dialog [Horvitz 1999].
  – “Patterns of Entry and Correction in Large Vocabulary Continuous Speech Recognition Systems”: IBM/Univ. of Michigan
    • Compares Dragon, IBM, & L&H. Speech 14 cwpm (vs. keyboard 32 cwpm) [Karat, Halverson, Horn, Karat 1999].
  – “Model-based and Empirical Evaluation of Multimodal Interactive Error Correction”: CMU/Universität Karlsruhe
    • Models multimodal error correction attempts using TAttempt = TOverhead + R × TInput [Suhm, Myers, Waibel 1999].

Related Work
• Multimodal Grammars
  – Oregon Graduate Institute [Cohen, Oviatt, et al. 1992, 1997]
  – CMU [Vo & Waibel 1995, 1997]
• Command Management
  – Universal Undo [Microsoft]
  – Task-Based Windows UI [Microsoft]
• Context Management (CONTEXT-97, CONTEXT-99)
  – AAAI-99 Context Workshop
    • “Operating Systems Services for Managing Context” [Tsai 1999]
  – AAAI-99 Mixed-Initiative Intelligence
    • “Baby Steps Towards Dialogue Management” [Tsai 1999]
• Probabilistic & Labeled Information in OS
  – Eve [Microsoft]

Post-GUI Systems
[Diagram: Artificial Intelligence, User Interfaces, Operating Systems, Next-Generation Sensors/Actuators; Real People, Computer People, Special People, General Public]

Design Issues for Post-GUI Environments
• Performance may be driven by mobility & ubiquity.
  – Hard to beat desktop performance, except for specialized tasks
  – But why not design good macros? Or use 2+ pointers/mice?
  – Even with no video screen or keyboard, use buttons (e.g. PalmPilot)
  – Speech and video are good for rapid acquisition of data
• What are new tasks for smart mobile environments?
  – Summarize ongoing tasks (e.g. “Car, what was I doing?”)
  – Real dialogue is mixed-initiative (All commands are backgrounded!)
  – Control of multiple applications (Consider JAWS. Is this needed?)
  – Context-sensitive communication (Where’s the nearest pizza?)

Possible Changes
• Explicit Contexts for Communication
  – For users, or for system services
  – What format for communicating events & contexts?
  – What command properties should applications support?
• Database-like Rollback/Transactions for Application Commands
  – In addition to Elephant File System (HotOS 1999)
  – Making the entire computer more bulletproof, temporal history
  – Support dialogue management rather than linear commands
• Command and Task History
  – How to handle? Databases? Trees? Human conversation?
  – Real Dialogue Management

Possible Changes II
• “Faster is not better.”
  – “Courteous Computing” (Horvitz, Microsoft)
  – Pre-executing tasks works best in MS Outlook with a 1 sec delay
  – Alternative to a “Yes/No” dialog = announce the action & wait 1 sec
• User I/O must be buffered, filtered, & managed
  – Normal dialog is a series of background commands
  – Speech-only output may be a queue of application output requests
  – Variable environment conditions
    • Low/high bandwidth connections & Video/PalmPilot
  – What if the user must switch modalities midstream?
    • Separate SAPI, GUI may not work - need a Multimodal API

Possible Changes III
• Applications are not designed for multiple commands.
  – Currently submenus & dialog box sequences help narrow context.
  – Procedures → GUI event loops → Post-GUI dialogue
    • Windows event systems aren't either.
• I/O is not designed for rapid interactive haptic/visual systems.
  – 1/3 sec (300 ms) responses are good for conscious responses
  – But not for unconscious actions
    • 1 ms visual tracking, 70 ms haptic responses, 150 ms visual responses
• Cost/delay of sensor processors is extremely high
  – How to give the e-mail system priority responsiveness?
    • Unified resource management, Soft Real-Time Systems
  – Governed by new Command Properties and Context Knowledge

Possible Changes IV
• Use probabilistic & multi-faceted info throughout the OS
  – Task-based file identification
  – Multiple configuration setups (NT dialup)
• Applications could be designed for ambiguous and context-sensitive commands
• Context-based Adaptive Computing, Active Networks
• Will a more context-aware system provide resiliency?
  – Rather than super-slow AI learning?
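The linear-undo failure from the “Sequences of Errors” slide (undo X, Y, Z, type “A”, lose X, Y, Z) and the “Command and Task History: Trees?” question can be made concrete. The sketch below contrasts a conventional undo/redo stack with a tree-structured history, where a new command after several undos starts a new branch instead of discarding the undone ones. It is one possible answer, not the authors' design; the class names are hypothetical.

```python
# Contrast: linear undo stack vs. tree-structured command history (hypothetical classes).


class LinearHistory:
    """Conventional undo/redo stacks."""

    def __init__(self):
        self.done = []
        self.redo = []

    def do(self, command):
        self.done.append(command)
        self.redo.clear()  # any new command discards everything that was undone

    def undo(self):
        if self.done:
            self.redo.append(self.done.pop())


class Node:
    def __init__(self, command, parent=None):
        self.command = command
        self.parent = parent
        self.children = []


class TreeHistory:
    """Undo moves up the tree; a new command adds a sibling branch."""

    def __init__(self):
        self.root = Node(None)
        self.current = self.root

    def do(self, command):
        node = Node(command, parent=self.current)
        self.current.children.append(node)
        self.current = node

    def undo(self):
        if self.current.parent is not None:
            self.current = self.current.parent

    def branches(self):
        """Every root-to-leaf command sequence that can still be revisited."""
        paths = []

        def walk(node, path):
            if node.command is not None:
                path = path + [node.command]
            if not node.children:
                paths.append(path)
            for child in node.children:
                walk(child, path)

        walk(self.root, [])
        return paths


linear, tree = LinearHistory(), TreeHistory()
for history in (linear, tree):
    for command in ("X", "Y", "Z"):
        history.do(command)
    for _ in range(3):  # accidentally undo X, Y, Z
        history.undo()
    history.do("A")  # then type "A"

print(linear.done, linear.redo)
print(tree.branches())
```

The linear history ends with only ['A'] and an empty redo stack, while the tree still holds both [X, Y, Z] and [A], so the accidentally undone work can be revisited.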
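One way to read “Database-like Rollback/Transactions for Application Commands” is sketched below, using the “cd thisdir; mv foo ..; rm *” series from the “Sequences of Errors” slide on a toy in-memory file system (“parent” stands in for “..”, and the cd is implicit). Assumptions, all hypothetical: each command supplies its own inverse, so only reversible commands qualify; the state is a plain dict of sets; and names such as `CommandTransaction`, `mv` and `rm_all` are illustrative, not an existing API.

```python
# Hypothetical "database-like" transaction over application commands.
from dataclasses import dataclass
from typing import Callable


@dataclass
class Command:
    name: str
    apply: Callable[[dict], None]
    revert: Callable[[dict], None]  # inverse operation, for reversible commands


class CommandTransaction:
    """Apply commands as a unit; roll all of them back on failure or on request."""

    def __init__(self, state):
        self.state = state
        self.applied = []

    def run(self, commands):
        try:
            for command in commands:
                command.apply(self.state)
                self.applied.append(command)
        except Exception:
            self.rollback()  # undo the partial series before re-raising
            raise

    def rollback(self):
        while self.applied:
            self.applied.pop().revert(self.state)  # newest first


def mv(name, src, dst):
    def apply(state):
        state[src].remove(name)  # raises KeyError if the file is missing
        state[dst].add(name)

    def revert(state):
        state[dst].remove(name)
        state[src].add(name)

    return Command(f"mv {name}", apply, revert)


def rm_all(directory):
    removed = set()  # remembers what was deleted so it can be restored

    def apply(state):
        removed.update(state[directory])
        state[directory].clear()

    def revert(state):
        state[directory].update(removed)
        removed.clear()

    return Command(f"rm {directory}/*", apply, revert)


# Toy file system: directory name -> set of file names.
state = {"thisdir": {"foo", "bar"}, "parent": set()}
tx = CommandTransaction(state)
tx.run([mv("foo", "thisdir", "parent"), rm_all("thisdir")])
print(state)
tx.rollback()  # the user says "Oops": undo the whole series
print(state)
```

Rolling back newest-first restores “bar” before moving “foo” home, and a failure partway through a series (for example, moving a file that does not exist) triggers the same rollback automatically.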
Possible Changes V
• How do we support the transition to real English dialogue?
• “Computerese” may co-exist with
  – natural human spoken & gestural languages
  – command-line & GUI computer interfaces
• Can other protocols learn from human languages?
  – Use ambiguity, synonyms.
  – Different types of ACKs, NACKs

Future Directions
• If the System & Algorithm people can provide X, can the UI people design good ways to use this information?
• If the UI or device has characteristic Y, what must the system and algorithm people provide?
• New sensors & user inputs present a family of problems
  – Noise, Errors, Ambiguity, Fragmentation
• User I/O may need a whole family of user dialogue services, similar to networking, file management, or process control.