Geophysical exploration

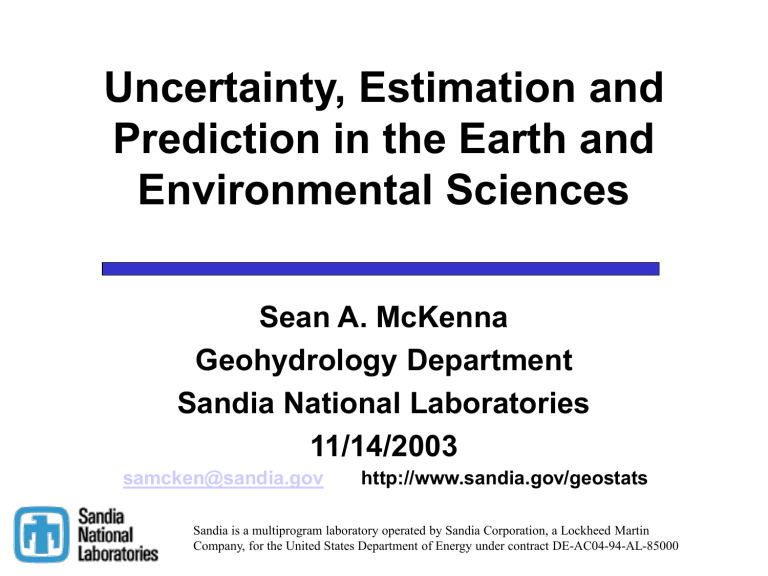

Uncertainty, Estimation and Prediction in the Earth and Environmental Sciences

Sean A. McKenna

Geohydrology Department

Sandia National Laboratories

11/14/2003 samcken@sandia.gov

http://www.sandia.gov/geostats

Sandia is a multiprogram laboratory operated by Sandia Corporation, a Lockheed Martin Company, for the United States Department of Energy under contract DE-AC04-94-AL-85000

Impediments to Predictability

• What stands in the way of predictability?

– Logic

– Unknown physics

– Complicated physics

– Numerics

– Algorithms

– Statistics

– Experimental evidence

– Multi-scale Interactions

Yes!

Differences

• Material property heterogeneity matters

• In “predictive” space, model uncertainty is unknown and there may be no experimental data

• Conceptual model uncertainty, both physics and problem geometry, needs to be considered

• Limited direct sampling drives the need to integrate all types of available data

Uncertainty

Geologic materials are ubiquitously heterogeneous

(Figure scale bars: 1200 km and 50 cm)

Limited sampling results in uncertainty in spatial distribution of physical properties

Forward Stochastic Imaging

Multiple Realizations of Property Distribution → Physics Model (seismic, groundwater, thermal, electromagnetic, etc.) → Distribution of Critical Metric(s)

The physics model acts as a transfer function to propagate uncertainty in the spatial distribution of properties to uncertainty in the outcome of physics predictions.
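A minimal sketch of the forward stochastic imaging loop, assuming each realization is a NumPy array and the physics model is any callable that returns one scalar critical metric. The lognormal fields and the harmonic-mean "physics model" are hypothetical stand-ins chosen only so the example runs; a real study would use a geostatistical simulator and a seismic, groundwater or other solver.

```python
import numpy as np


def propagate_uncertainty(realizations, physics_model):
    """Run the physics model on each property realization.

    Returns one critical-metric value per realization, i.e. a sample from the
    distribution of the critical metric.
    """
    return np.array([physics_model(field) for field in realizations])


def harmonic_mean(field):
    """Toy stand-in for a physics model: effective value for flow in series."""
    return field.size / np.sum(1.0 / field)


# Hypothetical ensemble: 500 spatially uncorrelated lognormal fields on a 20 x 20 grid.
rng = np.random.default_rng(seed=42)
fields = [np.exp(rng.normal(0.0, 1.0, size=(20, 20))) for _ in range(500)]

metrics = propagate_uncertainty(fields, harmonic_mean)
p10, p50, p90 = np.percentile(metrics, [10, 50, 90])
print(f"P10 = {p10:.3f}, P50 = {p50:.3f}, P90 = {p90:.3f}")
```

The percentiles of `metrics` summarize the distribution of the critical metric; swapping in a different simulator or physics model does not change the loop.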

Accuracy and Precision

[Figure panels: Precise, Imprecise]

Seismic Example

• Examine effect of fine-scale heterogeneity on scattering, attenuation and coherence of seismic energy

– Not a well understood phenomenon

– Interested in array output and bearing estimation

• Heterogeneity in both P-wave velocity (v_p) and Poisson’s ratio (σ)

– Vary the amount of heterogeneity in the properties (changing variance)

– Vary the level of cross-correlation between v_p and σ
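Varying the variance and the cross-correlation of the two properties can be illustrated with a 2 x 2 Cholesky construction. This is a sketch under stated assumptions: the means, the 300 m/s standard deviation and the correlation of 0.6 are hypothetical, and each (v_p, σ) pair is drawn independently, so the spatial correlation that a real heterogeneous model would carry is deliberately left out.

```python
import numpy as np


def correlated_properties(n, vp_mean, vp_std, nu_mean, nu_std, rho, rng):
    """Draw n (v_p, Poisson's ratio) pairs with a prescribed cross-correlation rho.

    Uses the Cholesky factor of the 2 x 2 correlation matrix:
        z_vp = z1,  z_nu = rho * z1 + sqrt(1 - rho**2) * z2,  with z1, z2 ~ N(0, 1).
    Spatial correlation is NOT modeled; each pair is independent of the others.
    """
    z1 = rng.standard_normal(n)
    z2 = rng.standard_normal(n)
    z_nu = rho * z1 + np.sqrt(1.0 - rho**2) * z2
    return vp_mean + vp_std * z1, nu_mean + nu_std * z_nu


rng = np.random.default_rng(0)
# Hypothetical values: mean v_p 3000 m/s with a 300 m/s standard deviation,
# mean Poisson's ratio 0.25 with a 0.02 standard deviation, correlation 0.6.
vp, nu = correlated_properties(100_000, 3000.0, 300.0, 0.25, 0.02, rho=0.6, rng=rng)
print(np.std(vp), np.corrcoef(vp, nu)[0, 1])
```

Changing `vp_std` sweeps the amount of heterogeneity, and changing `rho` sweeps the level of cross-correlation, independently of each other.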

Seismic Energy Propagation

Examine nine levels of variability in v_p (standard deviations from 100 to 1300 m/s)

A constant color scale for v_p is used in all four figures

Seismic Energy Propagation

A vertically oriented point source of seismic energy at 100 m depth is used in a 2-D finite-difference model of a cross-section of the earth

Standard deviation of v_p is 100 m/s

Standard deviation of v_p is 900 m/s

Wavefront images made 350 ms after source initiation

Power Loss

The power loss due to microgeologic variation, calculated through “delay and sum beamforming,” is compared to that in a homogeneous earth as a function of the v_p standard deviation

Each array has 17 receivers at 10 m spacing (160 m aperture), and 12 array positions centered at ranges from 100 to 1200 m are used

Source depth increased to 250 meters
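The slides do not give the beamformer details, so the following is only a sketch of how a delay-and-sum power ratio can be formed. It assumes that, after the steering delays for a homogeneous earth are applied, each of the 17 traces is the same Ricker wavelet shifted by a residual travel-time perturbation caused by heterogeneity. The reference ("optimal") power is taken here as that of a perfectly coherent sum, which may differ from the definition of Power_opt used in the study. The wavelet, its 30 Hz peak frequency and the 2 ms jitter are hypothetical.

```python
import numpy as np


def ricker(t, f0):
    """Ricker wavelet with peak frequency f0 (Hz)."""
    a = (np.pi * f0 * t) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


def delay_and_sum_power_ratio(jitter_s, f0=30.0, dt=1e-4):
    """Beam power with residual arrival-time perturbations relative to a coherent sum.

    jitter_s: per-receiver travel-time perturbations (s) remaining after the
    homogeneous-earth steering delays are applied. After steering, trace i is
    w(t - jitter_i); in a homogeneous earth every trace would be w(t).
    """
    t = np.arange(-0.2, 0.2, dt)
    beam = sum(ricker(t - d, f0) for d in jitter_s)   # delay-and-sum output
    beam_ref = len(jitter_s) * ricker(t, f0)           # perfectly coherent sum
    return np.sum(beam**2) / np.sum(beam_ref**2)


rng = np.random.default_rng(3)
# 17 receivers as on the slide; hypothetical 2 ms jitter, 10 realizations
ratios = [delay_and_sum_power_ratio(rng.normal(0.0, 0.002, 17)) for _ in range(10)]
print(np.mean(ratios), np.percentile(ratios, [10, 90]))
```

Larger travel-time perturbations, which grow with the v_p standard deviation and the propagation range, reduce coherence across the array and therefore lower the ratio.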

Array Power Loss

[Figure: power loss for a fixed array distance over 10 realizations; average, 90th and 10th percentiles of Power_conv / Power_opt vs. standard deviation of v_p (m/s)]

[Figure: mean power loss for five array distances (arrays at 100, 200, 300, 600 and 1200 m); Power_conv / Power_opt vs. standard deviation of v_p (m/s)]

Seismic Energy Propagation

Mean bearing estimates as a function of range for four different levels of v_p variability

[Figure panels: standard deviation of v_p = 100, 300, 500 and 900 m/s]

Spatial Correlation

• Earth and Environmental Science data are almost never independent

• Processes that create and rework the earth are a combination of diffusive and convective transport with some abrupt jolts

– Rivers, wind, volcanism, earthquakes, erosion

• Often these processes are driven by a gradient and result in non-stationary property fields

Geostatistics

• Study of information exhibiting spatial correlation

• Set of tools for calculating and defining spatial correlation

• Adaptations to regression theory that allow for estimation and prediction

Problem Details

Deterministic estimation: $z^*(u \mid \text{info}) = G(\text{info})$

Probabilistic estimation: $F^*(z; u \mid \text{info}) = G(\text{info})$

Ideally, G takes into account the values, locations, relative clustering, scale and precision of the surrounding data (information) when making an estimate of z at any location u

Ideally, G provides a minimum-variance, unbiased estimate of z at any location u (a “BLUE”, best linear unbiased estimator)

Variogram

The variogram defines the increase in variability between two samples as the distance between them increases.

Variogram points are calculated from field data:

$$\gamma(h) = \frac{1}{2n} \sum_{i=1}^{n} \left[ Z(x_i) - Z(x_i + h) \right]^2$$

where n is the number of data pairs separated by h.

[Figure labels: range, sill, nugget; x-axis: separation distance]

Covariance is the complement of the variogram under the condition of second-order stationarity:

$$C(h) = \text{Sill} - \gamma(h)$$
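The experimental variogram on this slide can be computed directly for regularly spaced 1-D data. A minimal sketch, assuming lags are expressed in units of the sample spacing and that n is the number of pairs available at each lag; irregularly spaced 2-D or 3-D data would additionally need lag and direction tolerances.

```python
import numpy as np


def experimental_variogram(z, max_lag):
    """Semivariogram of regularly spaced 1-D data for lags 1..max_lag (sample units).

    gamma(h) = 1 / (2 n(h)) * sum_i (z[i] - z[i + h])**2, where n(h) is the number of pairs.
    """
    z = np.asarray(z, dtype=float)
    lags = np.arange(1, max_lag + 1)
    gamma = np.array([0.5 * np.mean((z[:-h] - z[h:]) ** 2) for h in lags])
    return lags, gamma


lags, gamma = experimental_variogram([1.0, 2.0, 4.0, 3.0, 5.0], max_lag=2)
print(lags, gamma)
```

With a stationary covariance model, the corresponding covariance at each lag is the sill minus `gamma`.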

Variogram (Cont.)

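The variogram is the moment of inertia of the points on the h-scatterplot, Z(x) vs. Z(x + h), about the 1:1 line for any separation distance h:

$$\gamma(h) = \frac{1}{2n} \sum_{i=1}^{n} \left[ Z(x_i) - Z(x_i + h) \right]^2 = \frac{1}{n} \sum_{i=1}^{n} d_i^2$$

where d_i is the perpendicular distance of point i from the 1:1 line.

This differs significantly from a covariance calculation in that a defined mean is not necessary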
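The moment-of-inertia interpretation can be checked numerically. A short sketch: the perpendicular distance of a point (a, b) from the line y = x is |a - b| / sqrt(2), so the mean squared distance reproduces the semivariogram, and adding a constant to every value leaves it unchanged, which is why no mean is needed.

```python
import numpy as np


def h_scatter_inertia(z, h):
    """Moment of inertia of the h-scatterplot (z[i], z[i + h]) about the 1:1 line.

    Each point's perpendicular distance to y = x is |z[i] - z[i + h]| / sqrt(2),
    so the mean squared distance equals the semivariogram gamma(h).
    """
    z = np.asarray(z, dtype=float)
    a, b = z[:-h], z[h:]
    d = np.abs(a - b) / np.sqrt(2.0)   # perpendicular distances to the 1:1 line
    return np.mean(d**2)


print(h_scatter_inertia([1.0, 2.0, 4.0, 3.0, 5.0], 1))
```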

Variograms (Cont.)

Variogram Modeling

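The variogram model must be chosen to produce a positive definite covariance matrix in the next step of the estimation. Spherical model:

$$\gamma(h) = \begin{cases} C\left[1.5\,\dfrac{h}{a} - 0.5\left(\dfrac{h}{a}\right)^3\right], & h < a \\ C, & h \ge a \end{cases}$$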

Variogram Models

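[Figure: variogram models with range = 100.0 and sill = 1.0; γ(h) from 0.0 to 1.0 vs. distance from 0 to 250]

Exponential model: $\gamma(h) = C\left[1 - e^{-3h/a}\right]$

Gaussian model: $\gamma(h) = C\left[1 - e^{-3h^2/a^2}\right]$

where C = sill value, a = range, h = lag distance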
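The three model forms on these slides translate directly into code. A minimal sketch, assuming the "practical range" convention in which the exponential and Gaussian models reach about 95% of the sill (1 - e^-3) at h = a; the default sill and range below match the figure values but are otherwise arbitrary.

```python
import math


def spherical(h, sill=1.0, a=100.0):
    """Spherical model: reaches the sill exactly at the range a."""
    if h >= a:
        return sill
    r = h / a
    return sill * (1.5 * r - 0.5 * r**3)


def exponential(h, sill=1.0, a=100.0):
    """Exponential model with practical range a (gamma(a) = sill * (1 - e^-3))."""
    return sill * (1.0 - math.exp(-3.0 * h / a))


def gaussian(h, sill=1.0, a=100.0):
    """Gaussian model with practical range a (parabolic behavior near the origin)."""
    return sill * (1.0 - math.exp(-3.0 * h**2 / a**2))


def covariance(model, h, sill=1.0, a=100.0):
    """C(h) = sill - gamma(h), valid under second-order stationarity."""
    return sill - model(h, sill, a)


for h in (0.0, 50.0, 100.0, 200.0):
    print(h, spherical(h), exponential(h), gaussian(h))
```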

Estimation

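Ordinary Kriging (OK) estimate:

$$Z^*_{OK}(x_0) = \sum_{i=1}^{N} \lambda_i Z(x_i) \qquad \text{subject to} \qquad \sum_{i=1}^{N} \lambda_i = 1.0$$

OK estimation variance, where μ is the Lagrange parameter of the OK system:

$$\sigma^2_{OK}(x_0) = \text{Cov}(0) - \sum_{i=1}^{N} \lambda_i \, \text{Cov}(x_0, x_i) - \mu$$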

Ordinary Kriging Equations

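$$
\begin{bmatrix}
C_{11} & C_{12} & \cdots & C_{1N} & 1 \\
C_{21} & C_{22} & \cdots & C_{2N} & 1 \\
\vdots & \vdots & \ddots & \vdots & \vdots \\
C_{N1} & C_{N2} & \cdots & C_{NN} & 1 \\
1 & 1 & \cdots & 1 & 0
\end{bmatrix}
\begin{bmatrix} \lambda_1 \\ \lambda_2 \\ \vdots \\ \lambda_N \\ \mu \end{bmatrix}
=
\begin{bmatrix} C_{01} \\ C_{02} \\ \vdots \\ C_{0N} \\ 1 \end{bmatrix}
$$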

Solving the OK equations is a constrained minimization problem.

The additional row and column on the left-hand side guarantee unbiasedness (the weights sum to 1).

A Lagrange parameter (μ) is introduced to find the minimum-variance solution subject to that constraint.
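The OK system can be assembled and solved in a few lines. A minimal sketch, assuming 1-D coordinates for brevity and a hypothetical exponential covariance with sill 1.0 and practical range 100; the sample locations and values are also hypothetical. It uses the same sign convention as the system on the slide, so the variance is Cov(0) minus the weighted covariances minus μ.

```python
import numpy as np


def ordinary_kriging(x_data, z_data, x0, cov):
    """Ordinary kriging estimate, variance and weights at x0 (1-D coordinates).

    Solves [[C, 1], [1^T, 0]] [lambda; mu] = [c0; 1], where C[i, j] = cov(|x_i - x_j|)
    and c0[i] = cov(|x0 - x_i|). The extra row and column enforce sum(lambda) = 1.
    """
    x_data = np.asarray(x_data, dtype=float)
    n = len(x_data)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = cov(np.abs(x_data[:, None] - x_data[None, :]))
    lhs[n, n] = 0.0
    rhs = np.ones(n + 1)
    rhs[:n] = cov(np.abs(x0 - x_data))
    sol = np.linalg.solve(lhs, rhs)   # singular if two samples share a coordinate
    lam, mu = sol[:n], sol[n]
    estimate = lam @ np.asarray(z_data, dtype=float)
    variance = cov(0.0) - lam @ rhs[:n] - mu
    return estimate, variance, lam


def cov(h):
    """Hypothetical exponential covariance: sill 1.0, practical range 100."""
    return np.exp(-3.0 * np.asarray(h, dtype=float) / 100.0)


x = np.array([0.0, 30.0, 70.0, 150.0])   # hypothetical sample locations
z = np.array([1.2, 0.8, 1.5, 0.3])       # hypothetical sample values
est, var, lam = ordinary_kriging(x, z, 50.0, cov)
print(f"estimate = {est:.3f}, variance = {var:.3f}, weights = {np.round(lam, 3)}")
```

Estimating at a sample location returns that sample's value with zero variance, and the variance never depends on `z`, two of the properties listed on the next slide.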

Ordinary Kriging Properties

• Minimum mean square error

• Estimation interval not limited to sample data interval (weights can fall outside [0,1])

• Screen effect

• Declustering ability

• Exact interpolator: returns the data value, with zero variance, at sample locations

• Cannot handle duplicate sample coordinates (the kriging matrix becomes singular)

• Variance is not a function of data values

• Equations are completely non-parametric

Extending Kriging

• Kriging is designed to produce locally optimal estimates, but not to reproduce spatial patterns

– As an interpolator, Kriging is a smoothing operator

• Kriging also produces a variance, but there is no distribution form associated with the estimate and the variance

Imparting Shape to ccdf

Transform data and invoke multivariate normal assumption

Select K thresholds and estimate ccdf at each threshold – transform data and interpolate between thresholds
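The first route, transforming the data before invoking the multivariate normal assumption, usually starts with a normal score transform. A minimal sketch, assuming the plotting position (rank - 0.5) / n and no special treatment of tied values; back-transforming simulated values would require interpolating in the same rank table, which is not shown.

```python
from statistics import NormalDist


def normal_score(values):
    """Rank-based normal score transform (ties are not handled specially).

    Each value is mapped to the standard normal quantile of its plotting
    position (rank - 0.5) / n, so the transformed data are close to N(0, 1).
    """
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    std_normal = NormalDist()
    scores = [0.0] * n
    for rank, i in enumerate(order, start=1):
        scores[i] = std_normal.inv_cdf((rank - 0.5) / n)
    return scores


print(normal_score([5.0, 1.0, 3.0]))
```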

Sampling ccdfs

• At each point in a grid, the ccdf can be created through a parametric or nonparametric technique conditional to multiple types of information

• These ccdfs are created with spatially varying centers and variances

• Ccdfs are visited on a random path and sampled

• Previously simulated points join the conditioning data set for future simulations

[Figure: example maps with axes from 1000 to 9000 and a color scale from -9.5 to -7.5]
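The random-path procedure in these bullets can be sketched as a 1-D sequential Gaussian simulation. This is one common variant, not necessarily the simulator used in this work: it assumes the data are already in normal-score units, uses simple kriging with a zero mean and a hypothetical exponential covariance (sill 1, practical range 10 cells), and keeps only the nearest `max_neighbors` known values at each node.

```python
import numpy as np


def sgs_1d(n_nodes, cond_idx, cond_vals, cov, rng, max_neighbors=8):
    """Sequential Gaussian simulation on a 1-D grid with unit spacing.

    Nodes are visited on a random path. At each node the ccdf is Gaussian with
    the simple-kriging mean and variance from the nearest known values (data plus
    previously simulated nodes); one value is drawn and joins the known set.
    """
    values = np.full(n_nodes, np.nan)
    values[cond_idx] = cond_vals
    path = [i for i in rng.permutation(n_nodes) if np.isnan(values[i])]
    for node in path:
        known = np.flatnonzero(~np.isnan(values))
        if known.size == 0:
            mean, var = 0.0, cov(0.0)
        else:
            near = known[np.argsort(np.abs(known - node))[:max_neighbors]]
            C = cov(np.abs(near[:, None] - near[None, :]))
            c0 = cov(np.abs(near - node))
            lam = np.linalg.solve(C, c0)           # simple-kriging weights
            mean = lam @ values[near]
            var = max(cov(0.0) - lam @ c0, 0.0)
        values[node] = rng.normal(mean, np.sqrt(var))   # sample the local ccdf
    return values


def cov(h):
    """Hypothetical exponential covariance in normal-score units."""
    return np.exp(-3.0 * np.asarray(h, dtype=float) / 10.0)


rng = np.random.default_rng(1)
sim = sgs_1d(50, [5, 40], [1.0, -1.0], cov, rng)
print(np.round(sim, 2))
```

Re-running with different seeds gives different realizations that all honor the two conditioning values, which is the behavior that distinguishes simulation from estimation.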

Estimation vs. Simulation

Estimation (kriging) is a geostatistical process that returns the best guess (optimal value) at each location.

[Figure labels: Optimal Estimate; Distance]

Simulation is a geostatistical process that defines the full distribution of possible values at each location and randomly chooses one of them as representative.

Groundwater Transport

• A recurring problem is predicting the eventual migration of a contaminant from a landfill, nuclear waste repository, fuel spill, etc.

• Path and timing of migration are uncertain and can be strongly affected by spatial distribution of material properties

• Try to incorporate this uncertainty through stochastic simulation of properties

Radionuclide Transport

Dispersion of flowpaths, velocities and location of contaminant plume are controlled by heterogeneity of aquifer.

Three different realizations of log10 transmissivity conditioned to same 89 well measurements

Inverse Modeling

• Previous slide showed fields conditioned to measurements of the material property

• How to calibrate correlated fields of a spatial random variable to measurements of state variables?

– Condition simultaneously to both permeability and water level measurements

Pilot Point Review

• Choose locations in the model domain and update their properties to produce better fit to measured heads (“calibration points”)

• Spread influence of each point to neighboring model cells by using the spatial covariance function as a weighting scheme
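One way to spread each pilot point's influence with the spatial covariance is to krige the pilot-point perturbations onto the grid. The sketch below assumes simple kriging of the perturbations and a hypothetical exponential covariance; the function and argument names are illustrative only, not those of any particular calibration code.

```python
import numpy as np


def pilot_point_update(base_field, grid_xy, pilot_xy, pilot_deltas, cov):
    """Add pilot-point perturbations to a gridded field (e.g. log10 T).

    The perturbation in each cell is the simple-kriging interpolation of the
    pilot-point perturbations, so the covariance controls how far each pilot
    point's influence spreads.
    """
    def dist(a, b):
        return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)

    C_pp = cov(dist(pilot_xy, pilot_xy))          # pilot-to-pilot covariance
    C_gp = cov(dist(grid_xy, pilot_xy))           # cell-to-pilot covariance
    weights = np.linalg.solve(C_pp, C_gp.T).T     # one row of weights per cell
    return base_field + weights @ pilot_deltas


def cov(h):
    """Hypothetical exponential covariance with a practical range of 5 cells."""
    return np.exp(-3.0 * h / 5.0)


xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
grid_xy = np.column_stack([xs.ravel(), ys.ravel()])
pilot_xy = np.array([[2.0, 2.0], [7.0, 7.0]])
base = np.full(100, -5.0)                         # hypothetical uniform log10 T field
new = pilot_point_update(base, grid_xy, pilot_xy, np.array([0.5, -0.3]), cov)
print(new.reshape(10, 10).round(2))
```

In a calibration loop, the optimizer adjusts only `pilot_deltas`, so the number of estimated parameters equals the number of pilot points rather than the number of cells.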

Pilot Point Example

Simple example of five pilot points used to update a log10 transmissivity field

Pilot Point Example (Cont.)

[Figure: log10 transmissivity field (color scale -11 to -3); E-W distance 0 to 100 meters, N-S distance 0 to 80 meters]

Pilot points and variogram provide a means of calibrating the heterogeneous field to match pressure data

Inverse Modeling

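Linear

Model: c = Xb

$$\Phi = (c - Xb)^T Q (c - Xb), \qquad b = (X^T Q X)^{-1} X^T Q c$$

Non-Linear

Model: c_0 = M(b_0), linearized as c = c_0 + J(b - b_0)

$$\Phi = \left[c - c_0 - J(b - b_0)\right]^T Q \left[c - c_0 - J(b - b_0)\right], \qquad u = (J^T Q J)^{-1} J^T Q (c - c_0)$$

where c holds the observations, Q is the observation weight matrix, X and J are the sensitivity (Jacobian) matrices, and u = b - b_0 is the parameter upgrade vector.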
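The non-linear update above is a Gauss-Newton iteration. A minimal sketch without the damping (Marquardt) term or step control that practical calibration codes add; the two-parameter exponential model and its noise-free "observations" are hypothetical stand-ins for a groundwater model.

```python
import numpy as np


def gauss_newton(model, jacobian, b0, c, Q, n_iter=20, tol=1e-12):
    """Weighted nonlinear least squares by Gauss-Newton iteration.

    Each step linearizes c ~ c0 + J (b - b0) about the current parameters and
    applies the upgrade u = (J^T Q J)^-1 J^T Q (c - c0). No damping is used.
    """
    b = np.asarray(b0, dtype=float)
    for _ in range(n_iter):
        c0 = model(b)
        J = jacobian(b)
        u = np.linalg.solve(J.T @ Q @ J, J.T @ Q @ (c - c0))
        b = b + u
        if np.linalg.norm(u) < tol:
            break
    r = c - model(b)
    return b, r @ Q @ r


# Hypothetical model c = b[0] * exp(-b[1] * t) with noise-free observations
t = np.linspace(0.0, 5.0, 20)


def model(b):
    return b[0] * np.exp(-b[1] * t)


def jacobian(b):
    e = np.exp(-b[1] * t)
    return np.column_stack([e, -b[0] * t * e])   # dc/db0, dc/db1


c_obs = model(np.array([2.0, 0.7]))
b_fit, phi = gauss_newton(model, jacobian, [1.9, 0.65], c_obs, np.eye(t.size))
print(b_fit, phi)
```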

Regularization

• Most applications of calibration by the Pilot Point method have considerably more estimated parameters than observed data

• Need to reduce the effective number of parameters through regularization

• For this example problem, minimize the weighted sum of squared differences between pilot point values

– Weights are based on spatial covariance
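The slide does not give the exact form of the regularization, so the following sketch assumes a linear model and a penalty equal to the covariance-weighted sum of squared differences between all pairs of pilot-point values. That penalty can be written as b^T L b with L a weighted graph Laplacian, which makes the problem solvable even with more parameters than observations. All inputs are hypothetical.

```python
import numpy as np


def regularized_solve(X, Q, c, pilot_xy, cov, alpha):
    """Least squares with a covariance-weighted pilot-point difference penalty.

    Minimizes (c - X b)^T Q (c - X b) + alpha * sum_{i<j} w_ij (b_i - b_j)^2,
    with w_ij = cov(distance between pilot points i and j). The penalty equals
    alpha * b^T L b, where L = D - W is the weighted graph Laplacian.
    """
    dist = np.linalg.norm(pilot_xy[:, None, :] - pilot_xy[None, :, :], axis=-1)
    W = cov(dist)
    np.fill_diagonal(W, 0.0)
    L = np.diag(W.sum(axis=1)) - W
    return np.linalg.solve(X.T @ Q @ X + alpha * L, X.T @ Q @ c)


def cov(h):
    """Hypothetical exponential covariance between pilot points."""
    return np.exp(-3.0 * h / 10.0)


# Hypothetical under-determined problem: 5 pilot-point parameters, 2 observations,
# so X^T Q X alone is singular.
rng = np.random.default_rng(5)
pilot_xy = rng.uniform(0.0, 1.0, size=(5, 2))
X = rng.uniform(0.1, 1.0, size=(2, 5))
c = np.array([1.0, 2.0])
Q = np.eye(2)

b_smooth = regularized_solve(X, Q, c, pilot_xy, cov, alpha=1e6)
b_fit = regularized_solve(X, Q, c, pilot_xy, cov, alpha=1e-8)
print(b_smooth, b_fit)
```

A large `alpha` pulls the pilot-point values toward each other, while a small `alpha` fits the observations closely and uses the penalty only to choose among the many exact fits.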

Problem Statement

• Stochastic-Inverse models create non-unique fields of spatially correlated properties (K, T), each of which can honor observed head data

• Output of the stochastic-inverse model is not the final goal but is used as input to a predictive model (e.g., solute transport)

• How can we address non-uniqueness in the stochastic fields at the predictive model stage?

Non-Uniqueness Problem

• Any inverse model of reasonable complexity will lead to a non-unique set of parameter estimates

– Noise in data

– Correlation between parameters

• What is the effect on advective transport calculations when non-unique T fields are used as input?

• Use Predictive Estimation to examine this question on a hypothetical test problem

Simple Example

The Theis solution for a transient pumping well provides a simple, 2-parameter model:

$$h_0 - h(x, y) = \frac{Q}{4 \pi T} W(u), \qquad u = \frac{r^2 S}{4 T (t - t_0)}$$

$$W(u) = E_1(u) = \int_u^{\infty} \frac{e^{-m}}{m} \, dm$$

[Figure: drawdown solution vs. radius (m) for log10 T = -5.0 and log10 S = -3.0]

Sample the drawdown values for a given time at 20 different radii from 2.2 to 44.0 meters
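The Theis drawdown needs only the well function W(u) = E1(u). A self-contained sketch, assuming the standard power series for u ≤ 1 and a continued fraction (modified Lentz evaluation) for larger u; `scipy.special.exp1` could be used instead where SciPy is available. The pumping rate and elapsed time below are hypothetical, since the slide does not give them, so the drawdown magnitudes will not match the figure.

```python
import math

EULER_GAMMA = 0.5772156649015329


def exp1(u):
    """Exponential integral E1(u), i.e. the Theis well function W(u), for u > 0."""
    if u <= 0.0:
        raise ValueError("u must be positive")
    if u <= 1.0:
        # Power series: E1(u) = -gamma - ln(u) - sum_{k>=1} (-u)^k / (k * k!)
        total = -EULER_GAMMA - math.log(u)
        term, k = 1.0, 1
        while True:
            term *= -u / k                 # term is now (-u)^k / k!
            delta = -term / k
            total += delta
            if abs(delta) < 1e-15 * abs(total):
                return total
            k += 1
    # Continued fraction for u > 1, evaluated with the modified Lentz method
    b = u + 1.0
    c = 1e300
    d = 1.0 / b
    h = d
    for i in range(1, 1000):
        an = -float(i * i)
        b += 2.0
        d = 1.0 / (an * d + b)
        c = b + an / c
        delta = c * d
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return h * math.exp(-u)


def theis_drawdown(r, t, T, S, Q):
    """Drawdown h0 - h at radius r (m) after pumping for t = t - t0 seconds.

    T: transmissivity (m^2/s), S: storativity (-), Q: pumping rate (m^3/s).
    """
    u = r**2 * S / (4.0 * T * t)
    return Q / (4.0 * math.pi * T) * exp1(u)


# 20 radii from 2.2 to 44.0 m, log10 T = -5.0, log10 S = -3.0 as on the slide;
# t and Q are hypothetical.
radii = [2.2 * k for k in range(1, 21)]
drawdowns = [theis_drawdown(r, t=86400.0, T=1e-5, S=1e-3, Q=1e-4) for r in radii]
print([round(d, 3) for d in drawdowns])
```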

Simple Example: Part II

Rerun the simple pumping-well solution, but this time add error with an average absolute value of 0.23 meters to each observation

[Figure: drawdown vs. radius (m), with no error and with error]

Errors are uncorrelated and drawn from a uniform distribution on [-0.5, 0.5] meters

Simple Example

[Figure: SSE over log10 transmissivity (m²/s), -5.2 to -4.8, and log10 S, -3.2 to -2.8; SSE color scale from 0.1 to 1000]

$$\text{SSE} = \sum_{i=1}^{20} \left( DD_i^{obs} - DD_i^{calc} \right)^2$$
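The error model and objective function of this example can be sketched as follows. The noise-free drawdowns are hypothetical placeholders rather than the values behind the figure; in an actual calibration the "calculated" drawdowns would come from the Theis model at each trial (T, S) pair, and the SSE surface would be mapped over that parameter grid. For errors drawn from U[-0.5, 0.5], the expected absolute error is 0.25 m, so an observed average of 0.23 m over 20 samples is plausible.

```python
import random


def add_uniform_error(values, half_width, rng):
    """Return values with independent errors drawn from U[-half_width, +half_width] added."""
    return [v + rng.uniform(-half_width, half_width) for v in values]


def sse(observed, calculated):
    """Sum of squared errors between observed and calculated drawdowns."""
    if len(observed) != len(calculated):
        raise ValueError("observed and calculated must have the same length")
    return sum((o - c) ** 2 for o, c in zip(observed, calculated))


# Hypothetical noise-free drawdowns at 20 radii (not the values from the slide)
exact = [18.0 - 0.8 * k for k in range(20)]
noisy = add_uniform_error(exact, 0.5, random.Random(7))
mean_abs_error = sum(abs(n - e) for n, e in zip(noisy, exact)) / len(exact)
print(f"mean |error| = {mean_abs_error:.3f} m, SSE = {sse(noisy, exact):.3f} m^2")
```

Because the noisy observations no longer match any parameter pair exactly, the minimum of the SSE surface is above zero and the region of near-minimal SSE widens, which is the source of non-uniqueness explored next.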

Predictive Estimation

Allow nearly optimal solutions in order to explore the non-uniqueness of the solution space, with emphasis on conservative predictions

The critical point defines the most conservative prediction from one model while maintaining a near-optimal solution in a different model

[Figure labels: Critical Point; P1, P2; Time]

How fast could the 5th percentile travel time be if the calibration is 5 percent off the optimal calibration?

Predictive Estimation

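Scalar prediction from a model run:

$$d = K^T b$$

$$\Phi_0 = (c - Xb)^T Q (c - Xb), \qquad \text{where } \Phi_0 > \Phi_{min}$$

The parameter vector is a function of both the estimation and the prediction:

$$b = (X^T Q X)^{-1} \left[ X^T Q c - \frac{K}{2\lambda} \right]$$

Lagrange parameter (the positive root minimizes d, the negative root maximizes it):

$$\lambda = \pm \frac{1}{2} \left[ \frac{K^T (X^T Q X)^{-1} K}{\Phi_0 - c^T Q c + c^T Q X (X^T Q X)^{-1} X^T Q c} \right]^{1/2}$$

Definition: keep the model “calibrated” while minimizing/maximizing the prediction d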

After Vecchia and Cooley, 1987, Water Resources Research
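For a linear model the constrained problem has the closed-form solution shown on this slide. A minimal sketch under stated assumptions: the objective is held at Φ0 = (1 + excess) Φ_min, with `excess = 0.10` echoing the +10% tolerance used for the Predictive Estimation ensemble in this talk, and the sensitivities X, prediction vector K and data c are hypothetical. The step along (X^T Q X)^-1 K has the length that puts the objective exactly at Φ0.

```python
import numpy as np


def predictive_estimate(X, Q, c, K, excess=0.10, sign=-1.0):
    """Linear predictive estimation (after Vecchia and Cooley, 1987).

    Finds b that minimizes (sign=-1) or maximizes (sign=+1) the prediction d = K^T b
    while holding the objective at phi0 = (1 + excess) * phi_min.
    Returns (b, b_hat, phi0), where b_hat is the optimal (calibrated) estimate.
    """
    N = X.T @ Q @ X
    b_hat = np.linalg.solve(N, X.T @ Q @ c)
    r = c - X @ b_hat
    phi_min = r @ Q @ r
    phi0 = (1.0 + excess) * phi_min
    AK = np.linalg.solve(N, K)                          # (X^T Q X)^-1 K
    scale = np.sqrt((phi0 - phi_min) / (K @ AK))        # equals -sign / (2 lambda)
    return b_hat + sign * scale * AK, b_hat, phi0


rng = np.random.default_rng(11)
X = rng.normal(size=(10, 3))                            # hypothetical sensitivities
c = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(0.0, 0.1, size=10)
Q = np.eye(10)
K = np.array([0.3, 1.0, -0.4])                          # hypothetical prediction sensitivities
b_min, b_hat, phi0 = predictive_estimate(X, Q, c, K, excess=0.10, sign=-1.0)
b_max, _, _ = predictive_estimate(X, Q, c, K, excess=0.10, sign=+1.0)
print(K @ b_min, K @ b_hat, K @ b_max)
```

Both extreme parameter sets fit the data equally well (objective exactly Φ0), yet they bracket the prediction from the optimal calibration.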

Example Problem Setup

[Figure: model domain, Easting (meters) 0 to 4000 and Y from 0 to 6000, showing the source zone, high-T buffer zones and the regulatory boundary]

Head at Y = 6000 is 224 meters; head at Y = 0 is 212 meters

50 meter grid with nx = 80 and ny = 120 (9600 cells)

Total of 89 boreholes with transmissivity measurements

True T Field and Transport

Steady-state flow solution and advective transport through the true transmissivity field. Only a fraction of the 400 total particles is shown.

True spatial pattern obtained from an X-ray transmission image of Culebra dolomite porosity

Pilot Point Locations

Total of 17 pilot points, located somewhat arbitrarily in areas of low well density

[Figure: original T data and pilot point locations; Easting (meters) 0 to 4000, Y from 0 to 6000]

Three Levels of Models

• Three ensembles of 100 realizations each:

• Seeds

– Initial realizations from geostatistical simulator and no calibration to observed heads

• Calibrated

– Seed realizations calibrated to observed heads

• Predictive Estimation

– Seed realizations with “near optimal” (+10%) calibration to observed heads that minimize 5th percentile arrival times

Objective Function Distributions

[Figure: cumulative distributions of the objective functions across 100 realizations for each of three ensembles (Seeds, Calibration, Predictive); x-axis: objective function, SSE (meters²), 0 to 80]

$$\text{SSE} = \sum_{i=1}^{89} \left( H_i^{est} - H_i^{obs} \right)^2$$

Transmissivity Fields

True T field is compared to expectation (average) maps from three different generation techniques

[Figure panels: True, Seed, Calibrate, Predictive]

The smoothing effect of head calibration is evident

Travel Time Distributions

Distributions across 100 realizations compared to true values

[Figure: cumulative distributions of 5th percentile travel times (years, 2.0e+4 to 7.0e+4) and median travel times (years, 2.0e+4 to 1.6e+5) for the Seeds, Calibrated and Predictive ensembles]

All distributions are accurate

Seed distribution is least precise

Stochastic Inverse Summary

• Predictive estimation provides:

– Technique for dealing with non-uniqueness in T-field estimation

– Maximum conservatism in predictions while maintaining near optimal calibration

• Example Problem Results:

– Significantly shorter travel times with essentially the same calibration

– More conservative and more accurate predictions

McKenna, Doherty and Hart, 2003, Journal of Hydrology

Conceptual Model Uncertainty

The conceptual model of fracture transport within the Culebra has a strong influence on PA results

Need to better understand the mass-transfer conceptual model

Note that regulations for WIPP site incorporate prediction uncertainty


Conceptual Model (Cont.)

• The previous slide contained three different conceptual model options and it was possible to test the competing models by collecting new data

– What is best way to carry forward all viable conceptual models in predictive modeling if none of them can be ruled out?

– How do we know there are not additional (i.e., more than three) conceptual models that should be considered?

– Can the effect of unknown additional conceptual models be accounted for in predictions?

Data Integration

Multiple data sets collected with varying support and precision need to be quantitatively integrated to provide a final estimate, with uncertainty, of a particular property

Examples: trace element concentrations in soil with cross-correlation between elements and varying means between soil types; acoustic impedance and porosity; thermal conductivity, bulk density and model predictions; multispectral satellite bands and ground truth samples, etc.
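The slide does not name an integration method. As a deliberately simple stand-in, inverse-variance weighting shows how estimates of differing precision combine into one estimate with its own uncertainty. It assumes independent, unbiased estimates of the same quantity at the same support, so it ignores the cross-correlation between data types and the support differences that the slide says must be handled; cokriging or Bayesian updating would address those.

```python
def combine_estimates(estimates, variances):
    """Inverse-variance weighted combination of independent, unbiased estimates.

    Returns the combined estimate and its variance, 1 / sum(1 / var_i).
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    value = sum(w * e for w, e in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical: a precise direct measurement and an imprecise indirect estimate
value, variance = combine_estimates([10.0, 12.0], [4.0, 1.0])
print(value, variance)
```

The combined variance is always smaller than the smallest input variance, and the more precise source dominates the combined value.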

Discussion Topic

• Uncertainty space

– Calculate physics model using 10,000 stochastic fields generated by a Gaussian simulator as input

– Calculate again using results of an indicator simulator

– Are the solution spaces identical? Do they overlap?

– What if both ensembles are calibrated to extensive pressure data?

– How do these solution spaces compare to predictions made with analytical techniques?

Geosciences:

Multiple Realizations of Property Distribution → Physics Model (seismic, groundwater, thermal, electromagnetic, etc.) → Distribution of Critical Metric(s)

The physics model acts as a transfer function to propagate uncertainty in the spatial distribution of properties to uncertainty in the outcome of physics predictions.

Discussion Topics

• State of UQ and Predictability in the Earth Sciences

(Engineering focus)

– “Prediction” has a fuzzy definition in the earth sciences

– Does “calibration” to existing data mean that we are no longer “predicting”? What if we are not calibrating to the final output, but to data that informs subsystem models that impact the final output?

– Can’t quantify what we don’t know that we don’t know (correct conceptual model?). Do enough data of the right kind and enough time always lead to the correct conceptual model?

Discussion Topics

• State of UQ and Predictability in the Earth Sciences

(Engineering focus) Continued

• Summary:

– We work in a data-poor environment

– the practice of forward stochastic modeling is well developed

– geostatistical approaches have been developed and are well-suited to handling stationary fields

– need more efficient and practically oriented sampling

– linear flow and transport processes are relatively well modeled

– non-linear flow and transport still need work

Discussion Topics (Cont.)

• Definitions of predictability in the earth sciences

– Quantities include the timing and magnitude of contaminant releases in groundwater systems (landfills, nuclear waste repositories, spills, etc.)

– Amount of oil/gas that can be extracted from a reservoir, time in which that can be accomplished

– Subsidence of land surface due to fluid extraction

– Focused on engineering problems (not predicting earthquakes, floods, tsunamis, etc.)

– Regulatory bodies now prescribe regulations probabilistically

– Definitions of “predictability” are not black and white

Discussion Topics (Cont.)

• What stands in the way of predictability?

– Unknown physics

– Complicated physics

– Numerics

– Algorithms

– Statistics

– Experimental evidence

– Multi-scale Interactions

– Depends on objectives

– Tie objectives of modeling to good checks as you go (the “in process audit” idea)

Discussion Topics (Cont.)

• What can be done to mitigate obstacles to predictability?

– Better sampling of data

– Models that take complex non-stationarity into account

– Better techniques for upscaling information

– Multiscale modeling

– “feedback” – nature controls uncertainty

– Kalman filters to update model, not just state of the model

– Modeling the behavior of modelers (how does the modeler learn? Can this be quantified and extrapolated?)

Discussion Topics (Cont.)

• What would be the benefits of improved predictability?

– More confidence in predictions

– Avoiding falling into the “precise but inaccurate” trap

[Figure panels: Precise, Imprecise]

Discussion Topics (Cont.)

• What would be the contents of a predictability aware model?

Discussion Topic

• Numerical vs. Analytical Models

– What are strengths and weaknesses of each?

– Can analytical solutions provide nonparametric models of local ccdfs?

– How can the two approaches be used together to advantage?

Discussion Topic

• Other perspectives on UQ and predictability in earth and environmental sciences?

• Can we really develop full distributions of predictions, or can we only reasonably bound the possible outcomes?

Discussion Topic

• Data integration

– Local ccdfs can be conditioned to multiple data sources, and this is typically done with an “empirical” Bayesian approach

– Is a true Bayesian approach necessary?

– How sensitive are model predictions to data integration approach?

Discussion Topic

• Multiple conceptual models

– Is there an efficient way to carry along multiple conceptual models (physics, boundary conditions, domain geometry) during calculations?

– Is there a way to account for the possibility of a previously unconsidered model?

Research Areas

• Multipoint statistics

– Current spatial (geo) statistical approaches depend on a 2-point spatial covariance – cannot be used to define complex spatial patterns

– Pattern recognition techniques for extracting more information from “training images”

Multipoint

Geostatistical approaches based on a two-point spatial covariance model are not capable of describing many patterns found in nature: meandering streams, fracture networks, volcanic deposits, etc.

Example of a fracture network on a glacier formed around a collapse feature due to a volcano below the glacier

Research Areas

• Sample Support

– Increasingly data are being collected along transects

– Instruments recording continuously on mobile platforms

– For a property exhibiting spatial correlation, these data provide some amount of redundant information

Research Areas

• Properties and processes that do not follow the traditional statistical models

– Lévy distribution description of permeability and also of solute dispersion within porous media

Problem Space Summary

• Parameter uncertainty produces prediction uncertainty

• In Earth and Environmental Sciences, parameters often exhibit spatial correlation

– Need to express and sample joint uncertainty

• Conceptual models governing physics, and/or boundary conditions are often uncertain and can be unknown

Typical Problem Spaces

• Limited number of samples

• Need to expand information away from samples (“mapping” or “material modeling”)

• Incorporate uncertainty in that expansion

• Make decisions on mapped property that incorporate uncertainty

• Use mapped property as input to a predictive model

– Predictive models may be run stochastically

Modeling Materials

Sparse sampling of a site with environmental contamination is the basis for estimating (“best guess”) the contamination level at all sites, or predicting the probability of exceeding a contaminant threshold at all sites

Example from Sandia Lead Flyer site. Right image shows the probability of exceeding a 400 ppm threshold
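The slide does not say how the probability map was produced. One common approach, sketched here under that assumption, is to count the fraction of simulated realizations that exceed the threshold at each location; a multi-Gaussian ccdf could be used instead. The three realizations below are hypothetical lead concentrations, not data from the site.

```python
import numpy as np


def exceedance_probability(realizations, threshold):
    """Fraction of realizations exceeding `threshold` at each location.

    realizations: array-like whose first axis indexes the realizations,
    e.g. shape (n_realizations, n_locations) or (n_realizations, ny, nx).
    """
    return np.mean(np.asarray(realizations) > threshold, axis=0)


# Hypothetical lead concentrations (ppm): 3 realizations at 4 locations
R = [[350.0, 420.0, 500.0, 100.0],
     [390.0, 410.0, 380.0, 90.0],
     [450.0, 380.0, 520.0, 120.0]]
print(exceedance_probability(R, 400.0))
```

With many realizations, the resulting map can feed decisions directly, for example flagging cells where the exceedance probability is above an acceptable level.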