Dealing with Project Evaluation and Broader Impacts (An Interactive

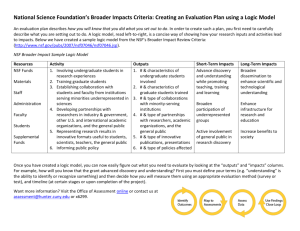


Most of the information presented in this workshop represents the presenters’ opinions and not an official NSF position.

Local facilitators will provide the link to the workshop slides at the completion of the webinar.
Participants may ask questions by “raising their virtual hand” during a question session. We will call on selected sites and enable their microphone so that the question can be asked.
Responses will be collected from a few sites at the end of each Exercise. At the start of the Exercise, we will identify these sites in the Chat Box and then call on them one at a time to provide their responses.

Learning must build on prior knowledge
◦ Some knowledge correct
◦ Some knowledge incorrect: misconceptions
Learning is
◦ Connecting new knowledge to prior knowledge
◦ Correcting misconceptions
Learning requires engagement
◦ Actively recalling prior knowledge
◦ Sharing new knowledge
◦ Forming a new understanding

Effective learning activities
◦ Recall prior knowledge -- actively, explicitly
◦ Connect new concepts to existing ones
◦ Challenge and alter misconceptions
Active & collaborative processes
◦ Think individually
◦ Share with partner
◦ Report to local and virtual groups
◦ Learn from program directors’ responses

Coordinate the local activities
Watch the time
◦ Allow for think, share, and report phases
◦ Reconvene on time -- 1 min warning slide
Ensure the individual think phase is devoted to thinking and not talking
Coordinate the asking of questions by local participants and the reporting of local responses to exercises

Long Exercise: 6 min
◦ Think individually: ~2 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Short Exercise: 4 min
◦ Think individually: ~2 min
◦ Report in local group: ~2 min
Individual Exercise: 2 min
Questions: 5 min
Reports to Virtual Group: 5 min

The session will enable you to collaborate more effectively with evaluation experts in preparing credible and comprehensive project evaluation plans. It will not make you an evaluation expert.

After the session, participants should be able to:
Discuss the importance of goals, outcomes, and questions in the evaluation process
◦ Cognitive and affective outcomes
Describe several types of evaluation tools
◦ Advantages, limitations, and appropriateness
Discuss data interpretation issues
◦ Variability, alternative explanations
Develop an evaluation plan in collaboration with an evaluator
◦ Outline a first draft of an evaluation plan

The terms evaluation and assessment have many meanings
◦ One definition
  ◦ Assessment is gathering evidence
  ◦ Evaluation is interpreting data and making value judgments
Examples of evaluation and assessment
◦ Individual’s performance (grading)
◦ Program’s effectiveness (ABET and regional accreditation)
◦ Project’s progress and success (monitoring and validating)
Session addresses project evaluation
◦ May involve evaluating individual and group performance, but in the context of the project
Project evaluation
◦ Formative: monitoring progress to improve approach
◦ Summative: characterizing and documenting final accomplishments

Project Goals, Expected Outcomes, and Evaluation Questions

Effective evaluation starts with carefully defined project goals and expected outcomes
Goals and expected outcomes related to:
◦ Project management
  ◦ Initiating or completing an activity
  ◦ Finishing a “product”
◦ Student behavior
  ◦ Modifying a learning outcome
  ◦ Modifying an attitude or a perception

Goals provide overarching statements of project intention
◦ What is your overall ambition?
◦ What do you hope to achieve?
Expected outcomes identify specific observable or measurable results for each goal
◦ How will achieving your “intention” be reflected by changes in student behavior?
◦ How will it change their learning and their attitudes?
Goals → Expected outcomes
Expected outcomes → Evaluation questions
Questions form the basis of the evaluation process
The evaluation process consists of the collection and interpretation of data to answer evaluation questions

Read the abstract -- Goal statement removed
Suggest two plausible goals
◦ One on student learning
  ◦ Cognitive behavior
◦ One on some other aspect of student behavior
  ◦ Affective behavior
Focus on what will happen to the students
◦ Do not focus on what the instructor will do
Long Exercise: 6 min
◦ Think individually: ~2 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Watch time and reconvene after 6 min
Use THINK time to think -- no discussion
Selected local facilitators report to virtual group

The goal of the project is ……
The project is developing computer-based instructional modules for statics and mechanics of materials. The project uses 3D rendering and animation software, in which the user manipulates virtual 3D objects in much the same manner as they would physical objects. Tools being developed enable instructors to realistically include external forces and internal reactions on 3D objects as topics are being explained during lectures. Exercises are being developed for students to be able to communicate with peers and instructors through real-time voice and text interactions.
The project is being evaluated by …
The project is being disseminated through …
The broader impacts of the project are …

Non-engineers should substitute:
◦ “Organic chemistry” for “statics and mechanics of materials”
◦ “Interactions” for “external forces and internal reactions”

One Minute

GOAL: To improve conceptual understanding and processing skills
In the context of the course
◦ Draw free-body diagrams for textbook problems
◦ Solve 3-D textbook problems
◦ Describe the effect(s) of external forces on a solid object orally
In a broader context
◦ Solve out-of-context problems
◦ Visualize 3-D problems
◦ Communicate technical problems orally
◦ Improve critical thinking skills
◦ Enhance intellectual development

GOAL: To improve
◦ Self-confidence
◦ Attitude about engineering as a career

Write one expected measurable outcome for each of the following goals:
◦ Improve the students’ understanding of the fundamental concepts in statics (cognitive)
◦ Improve the students’ self-confidence (affective)
Individual Exercise: ~2 min
◦ Individually write a response

One Minute

Understanding of the fundamentals
◦ Students will be better able to:
  ◦ Describe all parameters, variables, and elemental relationships
  ◦ Describe the governing laws
  ◦ Describe the effects of changing some variable in a simple problem
    ◦ Changes in the frictional force on a block when the angle of an inclined plane changes
    ◦ Changes in the forces in the members of a simple three-element truss when the connecting angles change
Self-Confidence
◦ Students will:
  ◦ Do more of the homework
  ◦ Have less test anxiety
  ◦ Express more confidence in their solutions
  ◦ Be more willing to discuss their solutions

Write an evaluation question for these expected measurable outcomes:
Understanding of the fundamentals
◦ Students will be better able to describe the effects of changing some variable in a simple problem
Self-Confidence
◦ Students will express more confidence in their solutions
Individually identify a question for each
Report to the group
One Minute

Understanding of the fundamentals
◦ Are the students better able to describe the effects of changing some variable in a simple problem?
◦ Are the students better able to describe the effects of changing some variable in a simple problem as a result of the intervention?
Self-Confidence
◦ Do the students express more confidence in their solutions?
◦ Do the students express more confidence in their solutions as a result of the intervention?

Tools for Evaluating Learning Outcomes

Surveys
◦ Forced choice or open-ended responses
Concept Inventories
◦ Multiple-choice questions to measure conceptual understanding
Rubrics for analyzing student products
◦ Guides for scoring student reports, tests, etc.
Interviews
◦ Structured (fixed questions) or in-depth (free flowing)
Focus groups
◦ Like interviews but with group interaction
Observations
◦ Actually monitor and evaluate behavior
Olds et al, JEE 94:13, 2005
NSF’s Evaluation Handbook

Observations
◦ Time & labor intensive
◦ Inter-rater reliability must be established
◦ Captures behavior that subjects are unlikely to report
◦ Useful for observable behavior
Surveys
◦ Efficient
◦ Accuracy depends on subject’s honesty
◦ Difficult to develop reliable and valid survey
◦ Low response rate threatens reliability, validity & interpretation
Olds et al, JEE 94:13, 2005

Use interviews to answer these questions:
◦ What does the program look and feel like?
◦ What do stakeholders know about the project?
◦ What are stakeholders’ and participants’ expectations?
◦ What features are most salient?
◦ What changes do participants perceive in themselves?
The 2002 User Friendly Handbook for Project Evaluation, NSF publication REC 99-12175

Originated in physics -- Force Concept Inventory (FCI)
Several are being developed in engineering fields
Series of multiple-choice questions
◦ Questions involve a single concept
  ◦ Formulas, calculations, or problem-solving skills not required
◦ Possible answers include distractors
  ◦ Common errors -- misconceptions
Developing a CI is involved
◦ Identify misconceptions and distractors
◦ Develop, test, and refine questions
◦ Establish validity and reliability of tool
◦ Language is a major issue

Pittsburgh Freshman Engineering Survey
◦ Questions about perception
  ◦ Confidence in their skills in chemistry, communications, engineering, etc.
  ◦ Impressions about engineering as a precise science, as a lucrative profession, etc.
Validated using alternate approaches:
◦ Item analysis
◦ Verbal protocol elicitation
◦ Factor analysis
Compared results for students who stayed in engineering to those who left
Besterfield-Sacre et al, JEE 86:37, 1997

Levels of Intellectual Development
◦ Students see knowledge, beliefs, and authority in different ways
  ◦ “Knowledge is absolute” versus “Knowledge is contextual”
Tools
◦ Measure of Intellectual Development (MID)
◦ Measure of Epistemological Reflection (MER)
◦ Learning Environment Preferences (LEP)
Felder et al, JEE 94:57, 2005

Suppose you were considering an existing tool (e.g., a concept inventory) for use in your project’s evaluation of learning outcomes
What questions would you consider in deciding if the tool is appropriate?
Long Exercise: 6 min
◦ Think individually: ~2 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Watch time and reconvene after 6 min
Use THINK time to think -- no discussion
Selected local facilitators report to virtual group

One Minute

Nature of the tool
◦ Is the tool relevant to what was taught?
◦ Is the tool competency based?
◦ Is the tool conceptual or procedural?
Prior validation of the tool
◦ Has the tool been tested?
◦ Is there information concerning its reliability and validity?
◦ Has it been compared to other tools?
◦ Is it sensitive? Does it discriminate between a novice and an expert?
Experience of others with the tool
◦ Has the tool been used by others besides the developer? At other sites? With other populations?
◦ Is there normative data?

Questions
Hold up your “virtual hand” to ask a question.

| Question or Concept | No. of Students, Comparison Group | No. of Students, Experimental Group | Percent with Correct Answer, Comparison Group | Percent with Correct Answer, Experimental Group |
|---|---|---|---|---|
| 1 | 25 | 30 | 29% | 23% |
| 2 | 24 | 32 | 34% | 65% |
| 3 | 25 | 31 | 74% | 85% |
| - | - | - | - | - |

Data suggest that the understanding of Concept #2 increased
One interpretation is that the intervention caused the change
List some alternative explanations
◦ Confounding factors
◦ Other factors that could explain the change
Individual Exercise: 2 min
◦ Individually write a response

One Minute

Students learned the concept out of class (e.g., in another course or in study groups with students not in the course)
Students answered with what they thought the instructor wanted rather than what they believed or “knew”
An external event distorted the pretest data
The instrument was unreliable
Other changes in the course, and not the intervention, were responsible for the improvement
The characteristics of the groups were not similar

Data suggest that the understanding of the concept tested by Q1 did not improve
One interpretation is that the intervention did cause a change that was masked by other factors
Think about alternative explanations
How would these alternative explanations (confounding factors) differ from the previous list?
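Before weighing alternative explanations for the comparison and experimental group results in the table above, it can help to ask whether each difference is larger than sampling variability alone would produce. The following is a minimal sketch, not the presenters' method: it assumes a pooled two-proportion z-test is an acceptable first check, and the function name `two_proportion_z_test` and the `TABLE` dictionary are hypothetical names introduced for illustration. The normal approximation is rough for groups of about 25 to 32 students, so the results are indicative only.

```python
import math


def two_proportion_z_test(p1, n1, p2, n2):
    """Return (z, two-sided p-value) for the difference p2 - p1.

    Assumption: pooled-proportion normal approximation, which is only
    approximate for groups as small as the ones in the slide table.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / std_err
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for a standard normal.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Values copied from the slide table:
# (comparison fraction correct, comparison n,
#  experimental fraction correct, experimental n)
TABLE = {
    1: (0.29, 25, 0.23, 30),
    2: (0.34, 24, 0.65, 32),
    3: (0.74, 25, 0.85, 31),
}

for concept, (p_comp, n_comp, p_exp, n_exp) in TABLE.items():
    z, p_value = two_proportion_z_test(p_comp, n_comp, p_exp, n_exp)
    print(f"Concept {concept}: z = {z:+.2f}, two-sided p = {p_value:.3f}")
```

Under these assumptions, only the Concept 2 difference falls below the conventional 0.05 threshold. A small p-value addresses variability only; it does not rule out any of the confounding factors listed above, such as dissimilar groups or other changes in the course.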
Evaluation Plan

List the topics that need to be addressed in the evaluation plan
Long Exercise: 6 min
◦ Think individually: ~2 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Watch time and reconvene after 6 min
Use THINK time to think -- no discussion
Selected local facilitators report to virtual group

One Minute

Name & qualifications of the evaluation expert
◦ Get the evaluator involved early in the proposal development phase
Goals, outcomes, and evaluation questions
Instruments for evaluating each outcome
Protocols defining when and how data will be collected
Analysis & interpretation procedures
Confounding factors & approaches for minimizing their impact
Formative evaluation techniques for monitoring and improving the project as it evolves
Summative evaluation techniques for characterizing the accomplishments of the completed project

Workshop on Evaluation of Educational Development Projects
◦ http://www.nsf.gov/events/event_summ.jsp?cntn_id=108142&org=NSF
NSF’s User Friendly Handbook for Project Evaluation
◦ http://www.nsf.gov/pubs/2002/nsf02057/start.htm
Online Evaluation Resource Library (OERL)
◦ http://oerl.sri.com/
Field-Tested Learning Assessment Guide (FLAG)
◦ http://www.wcer.wisc.edu/archive/cl1/flag/default.asp
Student Assessment of Their Learning Gains (SALG)
◦ http://www.salgsite.org/
Science education literature

Identify the most interesting, important, or surprising ideas you encountered in the workshop on dealing with project evaluation

Questions
Hold up your “virtual hand” to ask a question.
BREAK 15 min

BREAK 1 min

NSF proposals evaluated using two review criteria
◦ Intellectual merit
◦ Broader impacts
Most proposals
◦ Intellectual merit done fairly well
◦ Broader impacts done poorly

To increase the community’s ability to design projects that respond effectively to NSF’s broader impacts criterion

At the end of the workshop, participants should be able to:
◦ List categories for broader impacts
◦ List activities for each category
◦ Evaluate a proposed broader impacts plan
◦ Develop an effective broader impacts plan

Broader Impacts: Categories and Activities

TASK:
◦ What does NSF mean by broader impacts?
Individual Exercise: 2 min
◦ Individually write a response

One Minute

Every NSF solicitation has a set of questions that provide context for the broader impacts criterion
Suggested questions are a guide for considering broader impacts
Suggested questions are NOT
◦ A complete list of “requirements”
◦ Applicable to every proposal
◦ An official checklist

Will the project…
◦ Advance discovery - promote teaching & learning?
◦ Broaden participation of underrepresented groups?
◦ Enhance the infrastructure?
◦ Include broad dissemination?
◦ Benefit society?
NOTE: Broader impacts includes more than broadening participation

Will the project…
◦ Involve a significant effort to facilitate adaptation at other sites?
◦ Contribute to the understanding of STEM education?
◦ Help build and diversify the STEM education community?
◦ Have a broad impact on STEM education in an area of recognized need or opportunity?
◦ Have the potential to contribute to a paradigm shift in undergraduate STEM education?
TASK:
◦ Identify activities that “broadly disseminate results to enhance scientific and technological understanding”
◦ Pay special attention to activities that will help transport the approach to other sites
Long Exercise: 6 min
◦ Think individually: ~2 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Watch time and reconvene after 6 min
Use THINK time to think -- no discussion
Selected local facilitators report to virtual group

One Minute

Dissemination to general public
◦ Applies to research and education development proposals
◦ See handout
Dissemination to peers (other instructors)
◦ Education projects should include strategies for
  ◦ Making other instructors aware of material and methods
  ◦ Enabling other instructors to use material and methods

Partner with museums, nature centers, science centers, and similar institutions to develop exhibits in science, math, and engineering.
Involve the public or industry, where possible, in research and education activities.
Give science and engineering presentations to the broader community (e.g., at museums and libraries, on radio shows, and in other such venues).
Make data available in a timely manner by means of databases, digital libraries, or other venues such as CD-ROMs.

Publish in diverse media (e.g., non-technical literature, websites, CD-ROMs, press kits) to reach broad audiences.
Present research and education results in formats useful to policy-makers, members of Congress, industry, and broad audiences.
Participate in multi- and interdisciplinary conferences, workshops, and research activities.
Integrate research with education activities in order to communicate in a broader context.
Standard approaches
◦ Post material on website
◦ Present papers at conferences
◦ Publish journal articles
Consider other approaches
◦ NSDL
◦ Specialty websites and list servers (e.g., Connexions)
◦ Targeting and involving a specific sub-population
◦ Commercialization of products
◦ Beta test sites
Focus on active rather than passive approaches

Questions
Hold up your “virtual hand” to ask a question.

Reviewing a Project’s Broader Impacts

Review the Project Summary & the excerpts from the Project Description
Assume the proposal is a TUES Type 1 with a $200K budget and a 3-year duration and that the technical merit is considered to be meritorious
• Write the broader impacts section of a review
◦ Identify strengths and weaknesses
◦ Use a bullet format
(Extra) Long Exercise: 8 min
◦ Think individually: ~4 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Watch time and reconvene after 8 min
Use THINK time to think -- no discussion
Selected local facilitators report to virtual group

One Minute

Scope of activities
◦ Overall, very inclusive and good
◦ Well done but “standard things”
◦ Did not address the issue of quality
◦ No clear-cut plan
◦ Activities not justified by research base
Dissemination
◦ Limited to standard channels
◦ Perfunctory

Industrial advisory committee a strength
Collaboration with other higher education institutions
◦ Institutions appear to be quite diverse but use of diversity not explicit
◦ Interactions not clearly explained
◦ Sends mixed message: raises questions about effectiveness of partnership
High school outreach
◦ Real commitment not evident
◦ Passive -- not proactive
◦ High school counselors and teachers not involved

Modules are versatile
Broader (societal) benefits
◦ Need for materials not well described
◦ Value of the product not explained
◦ Not clear who will benefit and how much
Assessment of broader impacts not addressed

TASK:
◦ Identify desirable features of a broader impacts plan or strategy
◦ General aspects or characteristics
Long Exercise: 6 min
◦ Think individually: ~2 min
◦ Share with a partner: ~2 min
◦ Report in local group: ~2 min
Watch time and reconvene after 6 min
Use THINK time to think -- no discussion
Selected local facilitators report to virtual group

One Minute

Include strategy to achieve impact
◦ Have a well-defined set of expected outcomes
◦ Make results meaningful and valuable
◦ Make consistent with technical project tasks
◦ Have detailed plan for activities
◦ Provide rationale to justify activities
◦ Include evaluation of impacts
◦ Have a well-stated relationship to the audience or audiences

WRAP-UP

Use and build on NSF suggestions
◦ List of categories in solicitations
◦ Representative activities on website
Not a comprehensive checklist
Expand on these -- be creative
Develop activities to show impact
Integrate and align with other project activities

Help reviewers (and NSF program officers)
◦ Provide sufficient detail
  ◦ Include goals, objectives, strategy, evaluation
◦ Make broader impacts obvious
  ◦ Easy to find
  ◦ Easy to relate to NSF criterion

Make broader impacts credible
◦ Realistic and believable
  ◦ Include appropriate funds in budget
◦ Make broader impacts consistent with
  ◦ Project’s scope and objectives
  ◦ Institution’s or College’s mission and culture
  ◦ PI’s interest and experience
Assure agreement between content of Project Summary and Project Description

Identify the most interesting, important, or surprising ideas you encountered in the workshop on dealing with broader impacts

Grant Proposal Guide, Proposal & Award Policies & Procedures Guide
◦ http://www.nsf.gov/pubs/policydocs/pappguide/nsf11001/
Broader Impacts Activities
◦ http://www.nsf.gov/pubs/gpg/broaderimpacts.pdf

Questions
Hold up your “virtual hand” to ask a question.
To download a copy of the presentation, go to: http://www.step.eng.lsu.edu/nsf/participants/
Please complete the assessment survey at: http://www.step.eng.lsu.edu/nsf/participants/