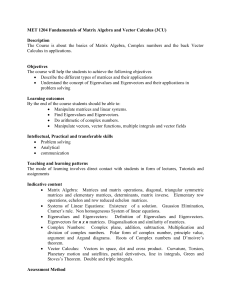

L8_Eigenvectors

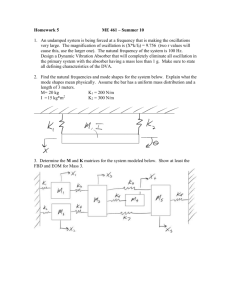

MA2213 Lecture 8

Eigenvectors

Application of Eigenvectors

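Vufoil 18, lecture 7: The Fibonacci sequence satisfies

$$s_1 = s_2 = 1,\quad s_3 = 2,\quad s_4 = 3,\quad s_5 = 5,\quad s_{n+1} = s_n + s_{n-1}$$

$$\begin{bmatrix} s_n \\ s_{n+1} \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix} \begin{bmatrix} s_{n-1} \\ s_n \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix}^{n-1} \begin{bmatrix} s_1 \\ s_2 \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix}^{n-1} \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

$$\begin{bmatrix} s_n \\ s_{n+1} \end{bmatrix} = c_1 \lambda_1^{n} \begin{bmatrix} 1 \\ \lambda_1 \end{bmatrix} + c_2 \lambda_2^{n} \begin{bmatrix} 1 \\ \lambda_2 \end{bmatrix}, \qquad \lambda_1 = \frac{1+\sqrt{5}}{2},\quad \lambda_2 = \frac{1-\sqrt{5}}{2}$$

$$\lim_{n \to \infty} \frac{s_{n+1}}{s_n} = \lim_{n \to \infty} \frac{c_1 \lambda_1^{n+1} + c_2 \lambda_2^{n+1}}{c_1 \lambda_1^{n} + c_2 \lambda_2^{n}} = \lambda_1 = \frac{1+\sqrt{5}}{2}$$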

Fibonacci Ratio Sequence
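
The convergence of the ratio sequence can be checked numerically. The following is a minimal Python sketch for illustration only; the function name `fibonacci_ratios` is hypothetical and not part of the lecture.

```python
import math

def fibonacci_ratios(count):
    """Return the ratios s_{n+1}/s_n for n = 1..count, with s_1 = s_2 = 1."""
    s_prev, s_curr = 1, 1
    ratios = []
    for _ in range(count):
        ratios.append(s_curr / s_prev)
        # Advance the recurrence s_{n+1} = s_n + s_{n-1}.
        s_prev, s_curr = s_curr, s_prev + s_curr
    return ratios

golden = (1 + math.sqrt(5)) / 2
ratios = fibonacci_ratios(30)

# Print the first few ratios and their distance from (1 + sqrt(5)) / 2.
for n, r in enumerate(ratios[:8], start=1):
    print(n, r, abs(r - golden))
```

The error shrinks roughly by the factor $|\lambda_2/\lambda_1|$ at each step, which is what the eigenvector expansion predicts.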

Another Biomathematics Application

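Leonardo da Pisa, better known as Fibonacci, invented his famous sequence to compute the reproductive success of rabbits*. Similar sequences $u_n$, $v_n$ describe frequencies in males, females of a sex-linked gene.

For genes (2 alleles) carried in the X chromosome**

$$\begin{bmatrix} u_{n+1} \\ u_{n+2} \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ \tfrac{1}{2} & \tfrac{1}{2} \end{bmatrix} \begin{bmatrix} u_n \\ u_{n+1} \end{bmatrix}, \quad n = 0, 1, 2, \ldots$$

Here $u_{n+1} = v_n$, so $u_1 = v_0$. The solution has the form

$$u_n = c_1 + c_2 \left(-\tfrac{1}{2}\right)^n \quad \text{where} \quad c_1 = \tfrac{1}{3}(u_0 + 2v_0),\quad c_2 = \tfrac{2}{3}(u_0 - v_0)$$

*page i, **pages 10-12 in The Theory of Evolution and Dynamical Systems, J. Hofbauer and K. Sigmund, 1984.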
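
As a sanity check, the recurrence $u_{n+2} = (u_n + u_{n+1})/2$ can be iterated and compared with the closed form $u_n = c_1 + c_2(-1/2)^n$. This Python sketch assumes $u_1 = v_0$; the function names and the starting frequencies 0.2 and 0.8 are hypothetical choices made for illustration.

```python
def gene_frequencies(u0, v0, steps):
    """Iterate u_{n+2} = (u_n + u_{n+1}) / 2 with u_0 = u0 and u_1 = v0."""
    seq = [u0, v0]
    for _ in range(steps - 1):
        seq.append(0.5 * (seq[-2] + seq[-1]))
    return seq[: steps + 1]  # u_0 .. u_steps

def closed_form(u0, v0, n):
    """Evaluate u_n = c1 + c2 * (-1/2)^n."""
    c1 = (u0 + 2 * v0) / 3
    c2 = 2 * (u0 - v0) / 3
    return c1 + c2 * (-0.5) ** n

u0, v0 = 0.2, 0.8  # illustrative starting frequencies (assumption)
seq = gene_frequencies(u0, v0, 10)
for n, u in enumerate(seq):
    print(n, u, closed_form(u0, v0, n))
```

Both columns agree, and the frequencies oscillate around the limit $c_1 = (u_0 + 2v_0)/3$ with amplitude halving each generation.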

Eigenvector Problem (pages 333-351)

Recall that if $A$ is a square matrix then a nonzero vector $v$ is an eigenvector corresponding to the eigenvalue $\lambda$ if

$$Av = \lambda v$$

Eigenvectors and eigenvalues arise in biomathematics, where they describe growth and population genetics.

They arise in the numerical solution of linear equations because they determine convergence properties.

They arise in physical problems, especially those that involve vibrations, in which eigenvalues are related to vibration frequencies.

Example 7.2.1 pages 333-334

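For

$$A = \begin{bmatrix} 1.25 & 0.75 \\ 0.75 & 1.25 \end{bmatrix}$$

the eigenvalue-eigenvector pairs are

$$\lambda_1 = 2,\ v^{(1)} = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \quad \text{and} \quad \lambda_2 = 0.5,\ v^{(2)} = \begin{bmatrix} -1 \\ 1 \end{bmatrix}$$

We observe that every (column) vector

$$x = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = c_1 v^{(1)} + c_2 v^{(2)}$$

where

$$c_1 = (x_1 + x_2)/2, \qquad c_2 = (x_2 - x_1)/2$$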

Therefore, since $x \mapsto Ax$ is a linear transformation,

$$Ax = A(c_1 v^{(1)} + c_2 v^{(2)}) = c_1 A v^{(1)} + c_2 A v^{(2)}$$

and since $v^{(1)}, v^{(2)}$ are eigenvectors,

$$c_1 A v^{(1)} + c_2 A v^{(2)} = c_1 \lambda_1 v^{(1)} + c_2 \lambda_2 v^{(2)}$$

We can repeat this process to obtain

$$A^2 x = A(c_1 \lambda_1 v^{(1)} + c_2 \lambda_2 v^{(2)}) = c_1 \lambda_1^2 v^{(1)} + c_2 \lambda_2^2 v^{(2)}$$

$$A^n x = c_1 \lambda_1^n v^{(1)} + c_2 \lambda_2^n v^{(2)} = c_1 2^n v^{(1)} + c_2 \left(\tfrac{1}{2}\right)^n v^{(2)}$$

Question What happens as $n \to \infty$ ?
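
The identity $A^n x = c_1 \lambda_1^n v^{(1)} + c_2 \lambda_2^n v^{(2)}$ can be checked against repeated multiplication. This is a minimal Python sketch for the matrix of Example 7.2.1; the function names and the test vector are hypothetical choices for illustration.

```python
A = [[1.25, 0.75], [0.75, 1.25]]

def mat_vec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]

def power_by_multiplication(x, n):
    """Compute A^n x by applying A to x a total of n times."""
    for _ in range(n):
        x = mat_vec(A, x)
    return x

def power_by_eigenvectors(x, n):
    """Compute A^n x from the eigenvector expansion of x."""
    c1 = (x[0] + x[1]) / 2  # coefficient of v1 = (1, 1), eigenvalue 2
    c2 = (x[1] - x[0]) / 2  # coefficient of v2 = (-1, 1), eigenvalue 0.5
    a, b = c1 * 2.0 ** n, c2 * 0.5 ** n
    return [a - b, a + b]   # a * v1 + b * v2

x = [3.0, 1.0]
by_mult = power_by_multiplication(x, 5)
by_eig = power_by_eigenvectors(x, 5)
print(by_mult, by_eig)
```

For large $n$ the component along $v^{(2)}$ dies out and $A^n x$ lines up with $v^{(1)}$, provided $c_1 \neq 0$.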

General Principle : If a vector v can be expressed as a

linear combination of eigenvectors of a matrix A, then it

is very easy to compute Av

It is possible to express every vector as a linear

combination of eigenvectors of an n by n matrix A iff

either of the following equivalent conditions is satisfied :

(i) there exists a basis consisting of eigenvectors of A

(ii) the sum of dimensions of eigenspaces of A = n

Question Does this condition hold for $J = \begin{bmatrix} 5 & 1 \\ 0 & 5 \end{bmatrix}$ ?

Question What special form does this matrix have ?

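The characteristic polynomial of $J = \begin{bmatrix} 5 & 1 \\ 0 & 5 \end{bmatrix}$ is

$$\det(zI - J) = \det \begin{bmatrix} z - 5 & -1 \\ 0 & z - 5 \end{bmatrix} = (z - 5)^2$$

so $\lambda = 5$ is the (only) eigenvalue; it has algebraic multiplicity 2.

$$\begin{bmatrix} 5 & 1 \\ 0 & 5 \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \end{bmatrix} = 5 \begin{bmatrix} v_1 \\ v_2 \end{bmatrix} \iff v_2 = 0$$

so the eigenspace for eigenvalue 5 has dimension 1;

the eigenvalue 5 is said to have geometric multiplicity 1.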

Question What are alg.&geom. mult. in Example 7.2.7 ?

Characteristic Polynomials pp. 335-337

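Example 7.2.2 (p. 335) The eigenvalue-eigenvector pairs of the matrix

$$A = \begin{bmatrix} 1.25 & 0.75 \\ 0.75 & 1.25 \end{bmatrix}$$

in Example 7.2.1 are obtained from the characteristic polynomial

$$\det(zI - A) = \det \begin{bmatrix} z - 1.25 & -0.75 \\ -0.75 & z - 1.25 \end{bmatrix} = z^2 - 2.5z + 1$$

whose roots are the eigenvalues $\sigma(A) = \{\lambda_1 = 2,\ \lambda_2 = 0.5\}$, with corresponding eigenvectors $v^{(1)}, v^{(2)}$. For $\lambda_1 = 2$,

$$\begin{bmatrix} 2 - 1.25 & -0.75 \\ -0.75 & 2 - 1.25 \end{bmatrix} \begin{bmatrix} v^{(1)}_1 \\ v^{(1)}_2 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \iff v^{(1)} = \alpha \begin{bmatrix} 1 \\ 1 \end{bmatrix},\ \alpha \in R$$

Question What is the equation for $v^{(2)}$ ?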

Eigenvalues of Symmetric Matrices

The following real symmetric matrices that we studied

$$\begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix}, \qquad \begin{bmatrix} 1.25 & 0.75 \\ 0.75 & 1.25 \end{bmatrix}$$

have real eigenvalues, and eigenvectors corresponding to distinct eigenvalues are orthogonal.

Question What are the eigenvalues of these matrices ?

Question What are the corresponding eigenvectors ?

Question Compute their scalar products $(u, v) = u^T v$

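Theorem 1. All eigenvalues of real symmetric matrices are real valued.

Proof For a matrix $M$ with complex (or real) entries let $\overline{M}$ denote the matrix whose entries are the complex conjugates of the entries of $M$.

Question Prove $M$ is real (all entries are real) iff $\overline{M} = M$

Question Prove $0 \neq v \in C^n \Rightarrow \overline{v}^T v > 0$

Assume that $A \in R^{n \times n}$, $A^T = A$, $0 \neq v \in C^n$, $\lambda \in C$, $Av = \lambda v$

and observe that $A\overline{v} = \overline{\lambda}\,\overline{v}$, therefore

$$\lambda\, \overline{v}^T v = \overline{v}^T A v = (A\overline{v})^T v = \overline{\lambda}\, \overline{v}^T v, \quad \text{and} \quad \overline{v}^T v > 0 \Rightarrow \lambda = \overline{\lambda} \Rightarrow \lambda \in R$$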

Theorem 2. Eigenvectors of a real symmetric matrix that correspond to distinct eigenvalues are orthogonal.

Proof Assume that $A \in R^{n \times n}$ is symmetric, $\lambda, \mu \in R$, $\lambda \neq \mu$, $v, w \in R^n$, $Av = \lambda v$, $Aw = \mu w$.

Then compute

$$\mu\, w^T v = (Aw)^T v = w^T A^T v = w^T A v = \lambda\, w^T v$$

and observe that

$$(\mu - \lambda)\, w^T v = 0 \Rightarrow (w, v) = w^T v = 0.$$

Orthogonal Matrices

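Definition A matrix $U \in R^{n \times n}$ is orthogonal if $U^T U = I$

If $U$ is orthogonal then

$$1 = \det I = \det(U^T U) = \det(U^T)\det(U) = [\det(U)]^2$$

therefore either $\det(U) = 1$ or $\det(U) = -1$, so $U$ is nonsingular and has an inverse $U^{-1}$, hence

$$U^T = U^T I = U^T (U U^{-1}) = (U^T U) U^{-1} = I U^{-1} = U^{-1}$$

so

$$U U^T = U U^{-1} = I.$$

Examples

$$\begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}, \qquad \begin{bmatrix} \cos 2\theta & \sin 2\theta \\ \sin 2\theta & -\cos 2\theta \end{bmatrix}$$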

Permutation Matrices

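Definition A matrix $M \in R^{n \times n}$ is called a permutation matrix if there exists a function (called a permutation)

$$p : \{1, 2, \ldots, n\} \to \{1, 2, \ldots, n\}$$

that is 1-to-1 (and therefore onto) such that

$$M_{i, p(i)} = 1, \qquad j \neq p(i) \Rightarrow M_{i, j} = 0$$

Examples

$$\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}, \quad \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}, \quad \begin{bmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad \begin{bmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{bmatrix}$$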

Question Why is every permutation matrix orthogonal ?
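
The definition translates directly into code. This Python sketch (helper names are hypothetical, and indices are 0-based rather than the 1-based indices of the definition) builds $M$ from a permutation $p$ and checks numerically that $M^T M = I$ for one example; it is a check, not a proof.

```python
def permutation_matrix(p):
    """Build M with M[i][p[i]] = 1 and zeros elsewhere (0-based indices)."""
    n = len(p)
    if sorted(p) != list(range(n)):
        raise ValueError("p must be a permutation of 0..n-1")
    return [[1 if j == p[i] else 0 for j in range(n)] for i in range(n)]

def transpose(M):
    return [list(col) for col in zip(*M)]

def mat_mul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

M = permutation_matrix([2, 0, 1])
product = mat_mul(transpose(M), M)
print(M)
print(product)
```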

Eigenvalues of Symmetric Matrices

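Theorem 7.2.4 pages 337-338 If $A \in R^{n \times n}$ is symmetric then there exists a set $\{\lambda_i, v^{(i)}\},\ 1 \le i \le n$ of $n$ eigenvalue-eigenvector pairs

$$A v^{(i)} = \lambda_i v^{(i)}, \quad 1 \le i \le n$$

Proof Uses Theorems 1 and 2 and a little linear algebra.

Choose eigenvectors so that $(v^{(i)})^T v^{(i)} = 1,\ 1 \le i \le n$, construct matrices

$$U = \begin{bmatrix} v^{(1)} & v^{(2)} & \cdots & v^{(n)} \end{bmatrix} \in R^{n \times n}, \qquad D = \begin{bmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{bmatrix} \in R^{n \times n}$$

and observe that

$$U^T U = I, \qquad AU = UD \Rightarrow A = U D U^{-1} = U D U^T$$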

MATLAB EIG Command

>> help eig

EIG Eigenvalues and eigenvectors.

E = EIG(X) is a vector containing the eigenvalues of a square

matrix X.

[V,D] = EIG(X) produces a diagonal matrix D of eigenvalues and a

full matrix V whose columns are the corresponding eigenvectors so

that X*V = V*D.

[V,D] = EIG(X,'nobalance') performs the computation with balancing

disabled, which sometimes gives more accurate results for certain

problems with unusual scaling. If X is symmetric, EIG(X,'nobalance')

is ignored since X is already balanced.

E = EIG(A,B) is a vector containing the generalized eigenvalues

of square matrices A and B.

[V,D] = EIG(A,B) produces a diagonal matrix D of generalized

eigenvalues and a full matrix V whose columns are the

corresponding eigenvectors so that A*V = B*V*D.

EIG(A,B,'chol') is the same as EIG(A,B) for symmetric A and symmetric

positive definite B. It computes the generalized eigenvalues of A and B

using the Cholesky factorization of B.

EIG(A,B,'qz') ignores the symmetry of A and B and uses the QZ algorithm.

In general, the two algorithms return the same result, however using the

QZ algorithm may be more stable for certain problems.

The flag is ignored when A and B are not symmetric.

See also CONDEIG, EIGS.

Example 7.2.3 page 336

>> A = [-7 13 -16;13 -10 13;-16 13 -7]

A=

-7 13 -16

13 -10 13

-16 13 -7

>> [U,D] = eig(A);

>> U

U=

-0.5774 0.4082 0.7071

0.5774 0.8165 -0.0000

-0.5774 0.4082 -0.7071

>> D

D=

-36.0000 0 0

0 3.0000 0

0 0 9.0000

>> A*U

ans =

20.7846 1.2247 6.3640

-20.7846 2.4495 -0.0000

20.7846 1.2247 -6.3640

>> U*D

ans =

20.7846 1.2247 6.3640

-20.7846 2.4495 -0.0000

20.7846 1.2247 -6.3640

Positive Definite Symmetric Matrices

Theorem 4 A symmetric matrix $A \in R^{n \times n}$ is [lec4,slide24] (semi) positive definite iff all of its eigenvalues satisfy $\lambda_i > (\ge)\ 0$

Proof Let $U, D \in R^{n \times n}$ be the orthogonal, diagonal matrices on the previous page that satisfy $A = U D U^T$

Then for every $w \in R^n$,

$$w^T A w = u^T D u = \sum_{i=1}^{n} \lambda_i u_i^2$$

where $u = U^T w$. Since $U$ is nonsingular, $u \neq 0 \iff w \neq 0$,

therefore $A$ is (semi) positive definite iff

$$u \neq 0 \Rightarrow \sum_{i=1}^{n} \lambda_i u_i^2 > (\ge)\ 0$$

Clearly this condition holds iff

$$\lambda_i > (\ge)\ 0, \quad 1 \le i \le n$$

Singular Value Decomposition

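Theorem 3 If $M \in R^{m \times n}$ and rank $M = r$ then there exist orthogonal matrices $U \in R^{m \times m}$, $V \in R^{n \times n}$ such that $M = U S V^T$ where $S \in R^{m \times n}$ has the form

$$S = \begin{bmatrix} \sigma_1 & & 0 & 0 \\ & \ddots & & \vdots \\ 0 & & \sigma_r & 0 \\ 0 & \cdots & 0 & 0 \end{bmatrix}$$

Singular Values $\sigma_j = \sqrt{\mathrm{eig}_j(M^T M)}$

Proof Outline Choose orthogonal $U \in R^{m \times m}$, $V \in R^{n \times n}$ so that

$$D = V^T M^T M V \quad \text{and} \quad E = U^T M M^T U$$

are diagonal, then $S = U^T M V$ satisfies

$$S D = S (S^T S) = (S S^T) S = E S$$

try to finish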

MATLAB SVD Command

>> help svd

SVD Singular value decomposition.

[U,S,V] = SVD(X) produces a diagonal matrix S, of the same

dimension as X and with nonnegative diagonal elements in

decreasing order, and unitary matrices U and V so that X =U*S*V'.

S = SVD(X) returns a vector containing the singular values.

[U,S,V] = SVD(X,0) produces the "economy size" decomposition.

If X is m-by-n with m > n, then only the first n columns of U are

computed and S is n-by-n.

See also SVDS, GSVD.

>> M = [ 0 1; 0.5 0.5 ]

M=

0 1.0000

0.5000 0.5000

>> [U,S,V] = svd(M)

U=

-0.8507 -0.5257

-0.5257 0.8507

S=

1.1441 0

0 0.4370

V=

-0.2298 0.9732

-0.9732 -0.2298

>> U*S*V'

ans =

0.0000 1.0000

0.5000 0.5000
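
The singular values 1.1441 and 0.4370 in this session can be cross-checked from $\sigma_j = \sqrt{\mathrm{eig}_j(M^T M)}$. This Python sketch handles only the 2-by-2 case, using the quadratic formula for the eigenvalues of $M^T M$; it is an illustration, not the algorithm MATLAB's `svd` uses, and the function name is hypothetical.

```python
import math

def singular_values_2x2(M):
    """Singular values of a real 2x2 matrix via the eigenvalues of M^T M."""
    (a, b), (c, d) = M
    # Entries of the symmetric matrix G = M^T M.
    g11 = a * a + c * c
    g12 = a * b + c * d
    g22 = b * b + d * d
    # Eigenvalues of G are the roots of z^2 - tr*z + det = 0.
    tr = g11 + g22
    det = g11 * g22 - g12 * g12
    disc = math.sqrt(max(tr * tr - 4 * det, 0.0))
    eig_hi = (tr + disc) / 2
    eig_lo = max((tr - disc) / 2, 0.0)
    return math.sqrt(eig_hi), math.sqrt(eig_lo)

M = [[0.0, 1.0], [0.5, 0.5]]
sigma1, sigma2 = singular_values_2x2(M)
print(sigma1, sigma2)
```

The product $\sigma_1 \sigma_2$ equals $|\det M| = 0.5$, which gives a second quick check.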

SVD Algebra

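$$M = U S V^T, \qquad S = \begin{bmatrix} \sigma_1 & 0 \\ 0 & \sigma_2 \end{bmatrix}, \qquad U = [u_1\ u_2], \qquad V = [v_1\ v_2]$$

$$M v_1 = U S V^T v_1 = U S \begin{bmatrix} 1 \\ 0 \end{bmatrix} = U \begin{bmatrix} \sigma_1 \\ 0 \end{bmatrix} = \sigma_1 u_1$$

$$M v_2 = U S V^T v_2 = U S \begin{bmatrix} 0 \\ 1 \end{bmatrix} = U \begin{bmatrix} 0 \\ \sigma_2 \end{bmatrix} = \sigma_2 u_2$$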

SVD Geometry

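[Figure: unit circle with the orthonormal vectors $v_1$, $v_2$]

$$\text{circle} = \{ x_1 v_1 + x_2 v_2 : x_1^2 + x_2^2 = 1 \}$$

[Figure: ellipse with semi-axes $\sigma_1 u_1$, $\sigma_2 u_2$]

$$M(\text{circle}) = \text{ellipse} = \left\{ y_1 u_1 + y_2 u_2 : \frac{y_1^2}{\sigma_1^2} + \frac{y_2^2}{\sigma_2^2} = 1 \right\}$$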

Square Roots

Theorem 5 A symmetric positive definite matrix $A \in R^{n \times n}$ has a symmetric positive definite ‘square root’.

Proof Let $U, D \in R^{n \times n}$ be the orthogonal, diagonal matrices on the previous page that satisfy $A = U D U^T$

Then construct the matrices

$$S = \begin{bmatrix} \sqrt{\lambda_1} & & 0 \\ & \ddots & \\ 0 & & \sqrt{\lambda_n} \end{bmatrix}, \qquad B = U S U^T$$

and observe that $B$ is symmetric positive definite and satisfies

$$B^2 = (U S U^T)(U S U^T) = U S^2 U^T = U D U^T = A$$
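
For a concrete instance, the construction $B = U S U^T$ can be applied to the matrix $A$ of Example 7.2.1, whose normalized eigenvectors are $(1,1)/\sqrt{2}$ and $(-1,1)/\sqrt{2}$ with eigenvalues 2 and 0.5. This is a minimal Python sketch with hypothetical helper names; the eigenpairs are taken from the example rather than computed.

```python
import math

def transpose(M):
    return [list(col) for col in zip(*M)]

def mat_mul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

r = 1 / math.sqrt(2)
U = [[r, -r],
     [r,  r]]                      # columns: normalized eigenvectors of A
S = [[math.sqrt(2.0), 0.0],
     [0.0, math.sqrt(0.5)]]        # square roots of the eigenvalues 2 and 0.5

B = mat_mul(mat_mul(U, S), transpose(U))
B_squared = mat_mul(B, B)
print(B)
print(B_squared)  # should reproduce A = [[1.25, 0.75], [0.75, 1.25]]
```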

Polar Decomposition

Theorem 6 Every nonsingular matrix $M \in R^{n \times n}$ can be factored as $M = B U$ where $B$ is symmetric and positive definite and $U$ is orthogonal.

Proof Construct $A = M M^T$ and observe that $A$ is symmetric and positive definite. Let $B$ be symmetric positive definite and satisfy $B^2 = A$ and construct $U = B^{-1} M$.

Then

$$U^T U = M^T B^{-1} B^{-1} M = M^T B^{-2} M = M^T A^{-1} M = M^T (M M^T)^{-1} M = I$$

and clearly $M = B U$.

Löwdin Orthonormalization

(1) Per-Olov Löwdin, On the Non-Orthogonality Problem Connected with the use of

Atomic Wave Functions in the Theory of Molecules and Crystals, J. Chem. Phys. 18,

367-370 (1950).

http://www.quantum-chemistry-history.com/Lowdin1.htm

Proof Start with $v_1, v_2, \ldots, v_n$ in an inner product space (assumed to be linearly independent), compute the Gram matrix $G$,

$$G_{ij} = (v_i, v_j), \quad 1 \le i, j \le n$$

Since $G$ is symmetric and positive definite, Theorem 5 gives (and provides a method to compute) a matrix $B$ that is symmetric and positive definite and $B^2 = G$.

Then

$$u_i = \sum_{j=1}^{n} (B^{-1})_{i,j}\, v_j, \quad 1 \le i \le n$$

are orthonormal.

The Power Method pages 340-345

Finds the eigenvalue with largest absolute value of a matrix $A \in R^{n \times n}$ whose eigenvalues satisfy

$$|\lambda_1| > |\lambda_2| \ge \cdots \ge |\lambda_n|$$

Step 1 Compute a vector with random entries $z^{(0)}$

Step 2 Compute $w^{(1)} = A z^{(0)}$ and $k = \arg\max_{1 \le i \le n} |z^{(0)}_i|$ and $\lambda_1^{(1)} = w^{(1)}_k / z^{(0)}_k$

Step 3 Compute $z^{(1)} = w^{(1)} / \|w^{(1)}\|_\infty$ (recall that $\|w^{(1)}\|_\infty = \max_{1 \le i \le n} |w^{(1)}_i|$)

Step 4 Compute $w^{(2)} = A z^{(1)}$ and $k = \arg\max_{1 \le i \le n} |z^{(1)}_i|$ and $\lambda_1^{(2)} = w^{(2)}_k / z^{(1)}_k$

Repeat

Then $(\lambda_1^{(m)}, w^{(m)}) \to (\lambda_1, v^{(1)})$ with $A v^{(1)} = \lambda_1 v^{(1)}$.
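
The steps above can be sketched in a few lines of Python. This is an illustrative sketch, not the textbook's code: the function name `power_method` is hypothetical, and a fixed starting vector replaces the random one so that the run is reproducible.

```python
def mat_vec(A, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def power_method(A, z, iterations):
    """Return (eigenvalue estimate, max-norm normalized vector)."""
    lam = None
    for _ in range(iterations):
        w = mat_vec(A, z)                                  # w = A z
        k = max(range(len(z)), key=lambda i: abs(z[i]))    # index of largest |z_i|
        lam = w[k] / z[k]                                  # eigenvalue estimate
        norm = max(abs(wi) for wi in w)                    # infinity norm of w
        z = [wi / norm for wi in w]                        # assumes w is nonzero
    return lam, z

A = [[1.25, 0.75], [0.75, 1.25]]       # matrix of Example 7.2.1
lam, vec = power_method(A, [1.0, 0.0], 30)
print(lam, vec)
```

For this matrix the estimate approaches $\lambda_1 = 2$ and the vector approaches a multiple of $(1, 1)$; the error shrinks by about $\lambda_2/\lambda_1 = 1/4$ per iteration.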

The Inverse Power Method

Result If $v$ is an eigenvector of $A \in R^{n \times n}$ corresponding to eigenvalue $\lambda$ and $\mu \in R$ then $v$ is an eigenvector of $A - \mu I$ corresponding to eigenvalue $\lambda - \mu$. Furthermore, if $\lambda - \mu \neq 0$ then $v$ is an eigenvector of $(A - \mu I)^{-1}$ corresponding to eigenvalue $(\lambda - \mu)^{-1}$.

Definition The inverse power method is the power method applied to the matrix $A^{-1}$.

It can find the eigenvalue-eigenvector pair if there is one eigenvalue that has smallest absolute value.

Inverse Power Method With Shifts

Computes eigenvalue $\lambda$ of $A$ closest to $\mu_1 \in R$ and a corresponding eigenvector $v$

Step 1 Apply 1 or more iterations of the power method using the matrix $(A - \mu_1 I)^{-1}$ to estimate an eigenvalue-eigenvector pair $\nu_1 \approx (\lambda - \mu_1)^{-1},\ v_1$

Step 2 Compute $\mu_2 = \mu_1 + \nu_1^{-1}$, a better estimate of $\lambda$

Step 3 Apply 1 or more iterations of the power method using the matrix $(A - \mu_2 I)^{-1}$ to estimate an eigenvalue-eigenvector pair $\nu_2 \approx (\lambda - \mu_2)^{-1},\ v_2$ and iterate. Then

$$\mu_k \to \lambda, \quad v_k \to v$$

with cubic rate of convergence !
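
A minimal Python sketch of the shifted iteration for a 2-by-2 matrix follows. Several things here are assumptions made for illustration: the names `solve_2x2` and `shifted_inverse_power`, the symbol `nu` for the estimate of $(\lambda - \mu)^{-1}$, the use of Cramer's rule in place of a general linear solver, and the starting shift 0.3. Applying $(A - \mu I)^{-1}$ is done by solving $(A - \mu I) w = z$ rather than forming the inverse.

```python
def solve_2x2(B, rhs):
    """Solve B w = rhs for a 2x2 matrix B by Cramer's rule."""
    (a, b), (c, d) = B
    det = a * d - b * c  # zero if the shift equals an eigenvalue exactly
    return [(d * rhs[0] - b * rhs[1]) / det,
            (a * rhs[1] - c * rhs[0]) / det]

def shifted_inverse_power(A, mu, z, outer_steps):
    """One power-method step on (A - mu I)^{-1} per shift update."""
    for _ in range(outer_steps):
        B = [[A[0][0] - mu, A[0][1]],
             [A[1][0], A[1][1] - mu]]
        w = solve_2x2(B, z)                          # w = (A - mu I)^{-1} z
        k = max(range(2), key=lambda i: abs(z[i]))
        nu = w[k] / z[k]                             # estimate of 1 / (lambda - mu)
        norm = max(abs(wi) for wi in w)
        z = [wi / norm for wi in w]
        mu = mu + 1.0 / nu                           # improved shift
    return mu, z

A = [[1.25, 0.75], [0.75, 1.25]]
mu, vec = shifted_inverse_power(A, 0.3, [1.0, 0.0], 4)
print(mu, vec)
```

Starting from the shift 0.3, four updates bring the estimate close to the eigenvalue 0.5 of Example 7.2.1, and the vector approaches a multiple of $(-1, 1)$. Running many more updates is not advisable in this sketch, since the shifted matrix becomes numerically singular once the shift matches the eigenvalue.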

Unitary and Hermitian Matrices

Definition The adjoint of a matrix $M \in C^{m \times n}$ is the matrix $M^* = \overline{M}^T$

Example

$$\begin{bmatrix} 5 & 1+i & 0 \\ 3 & 4-2i & 7 \end{bmatrix}^* = \begin{bmatrix} 5 & 3 \\ 1-i & 4+2i \\ 0 & 7 \end{bmatrix}$$

Definition A matrix $U \in C^{n \times n}$ is unitary if $U^* = U^{-1}$

Definition A matrix $H \in C^{n \times n}$ is hermitian (or self-adjoint) if $H^* = H$

Definition A matrix $P \in C^{n \times n}$ is (semi) positive definite if $v \neq 0 \Rightarrow v^* P v > (\ge)\ 0$

Super Theorem : All previous theorems true for complex matrices if orthogonal is replaced by unitary, symmetric by hermitian, and old with new (semi) positive definite.

Homework Due Tutorial 5 (Week 11, 29 Oct – 2 Nov)

1. Do Problem 1 on page 348.

2. Read Convergence of the Power Method (pages 342-346)

and do Problem 16 on page 350.

3. Do problem 19 on pages 350-351.

4. Estimate eigenvalue-eigenvector pairs of the matrix $M = \begin{bmatrix} 0 & 1 \\ 0.5 & 0.5 \end{bmatrix}$ using the power and inverse power methods – use 4 iterations and compute errors

5. Compute the eigenvalue-eigenvector pairs of the orthogonal matrix $O = \begin{bmatrix} \cos 2\theta & \sin 2\theta \\ \sin 2\theta & -\cos 2\theta \end{bmatrix}$

6. Prove that the vectors $u_i,\ 1 \le i \le n$ defined at the bottom of slide 29 are orthonormal by computing their inner products $(u_i, u_j)$

Extra Fun and Adventure

We have discussed several matrix decompositions :

LU

Eigenvector

Singular Value

Polar

Find out about other matrix decompositions. How are

they derived / computed ? What are their applications ?