today - Wharton Statistics Department


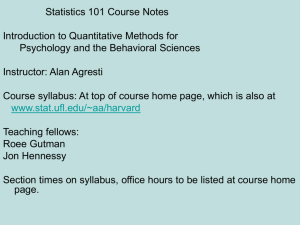

Statistics 111 - Lecture 18
Final review
June 2, 2008 Stat 111 - Lecture 18 - Review

Administrative Notes
• Pass in homework
• Final exam on Thursday
• Office hours today from 12:30-2:00pm

Final Exam
• Tomorrow from 10:40-12:10
• Show up a bit early: if you arrive after 10:40, you will be wasting time for your exam
• Calculators are definitely needed!
• Single 8.5 x 11 cheat sheet (two-sided) allowed
• Sample midterm is posted on the website
• Solutions for the midterm are posted on the website

Final Exam
• The test is going to be long: 6 or 7 questions
• Pace yourself
• Do NOT leave anything blank!
• I want to give you credit for what you know, so give me an excuse to give you points!
• The questions are NOT in order of difficulty
• If you get stuck on a question, put it on hold and try a different question

Outline
• Collecting Data (Chapter 3)
• Exploring Data - One variable (Chapter 1)
• Exploring Data - Two variables (Chapter 2)
• Probability (Chapter 4)
• Sampling Distributions (Chapter 5)
• Introduction to Inference (Chapter 6)
• Inference for Population Means (Chapter 7)
• Inference for Population Proportions (Chapter 8)
• Inference for Regression (Chapter 10)

Experiments
[Diagram: Population, Experimental Units, Treatment Group (Treatment), Control Group (No Treatment)]
• Try to establish the causal effect of a treatment
• Key is reducing presence of confounding variables
• Matching: ensure treatment/control groups are very similar on observed variables, e.g. race, gender, age
• Randomization: randomly dividing into treatment or control leads to groups that are similar on observed and unobserved confounding variables
• Double-Blinding: both subjects and evaluators don't know who is in treatment group vs. control group

Sampling and Surveys

Population ?
[Diagram: Parameter, Sampling, Sample, Inference, Estimation, Statistic]
• Just like in experiments, we must be cautious of potential sources of bias in our sampling results
• Voluntary response samples, undercoverage, nonresponse, untrue response, wording of questions
• Simple Random Sampling: less biased since each individual in the population has an equal chance of being included in the sample

Different Types of Graphs
• A distribution describes what values a variable takes and how frequently these values occur
• Boxplots are good for center, spread, and outliers but don't indicate the shape of a distribution
• Histograms are much more effective at displaying the shape of a distribution

Measures of Center and Spread
• Center: Mean
$\bar{x} = \frac{\sum x_i}{n} = \frac{x_1 + x_2 + \cdots + x_n}{n}$
• Spread: Standard Deviation
$s = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n-1}}$
• For outliers or asymmetry, median/IQR are better
• Center: Median - "middle number in distribution"
• Spread: Inter-Quartile Range IQR = Q3 - Q1
• We use mean and SD more since most distributions we use are symmetric with no outliers (e.g. Normal)

Relationships between continuous var.
• Scatterplot examines relationship between response variable (Y) and an explanatory variable (X)
[Scatterplot: Education and Mortality, r = -0.51]
• Positive vs. negative associations
• Correlation is a measure of the strength of linear relationship between variables X and Y
• r near 1 or -1 means strong linear relationship
• r near 0 means weak linear relationship
• Linear Regression: come back to later…

Probability
• Random process: outcome not known exactly, but have probability distribution of possible outcomes
• Event: outcome of a random process, with probability
P(A)
• Probability calculations: combinations of rules
  • Equally likely outcomes rule
  • Complement rule
  • Additive rule for disjoint events
  • Multiplication rule for independent events
• Random variable: a numerical outcome or summary of a random process
• Discrete r.v. has a finite (or countably infinite) number of distinct values
• Continuous r.v. has an uncountable number of values
• Linear transformations of variables

The Normal Distribution
• The Normal distribution has center $\mu$ and spread $\sigma^2$
[Figure: Normal curves N(0,1), N(-1,2), N(0,2), N(2,1)]
• Have tables for any probability from the standard normal distribution ($\mu = 0$ and $\sigma^2 = 1$)
• Standardization: converting X, which has a $N(\mu, \sigma^2)$ distribution, to Z, which has a $N(0,1)$ distribution:
$Z = \frac{X - \mu}{\sigma}$
• Reverse standardization: converting a standard normal Z into a non-standard normal X:
$X = \sigma Z + \mu$

Inference using Samples
[Diagram: Population ?, Parameters: $\mu$ or $p$, Sampling, Sample, Inference, Estimation, Statistics: $\bar{X}$ or $\hat{p}$]
• Continuous: pop. mean estimated by sample mean
• Discrete: pop. proportion estimated by sample proportion
• Key for inference: Sampling Distributions
• Distribution of values taken by statistic in all possible samples from the same population

Sampling Distribution of Sample Mean
• The center of the sampling distribution of the sample mean is the population mean: $\text{mean}(\bar{X}) = \mu$
• Over all samples, the sample mean will, on average, be equal to the population mean (no guarantees for 1 sample!)
• The standard deviation of the sampling distribution of the sample mean is $SD(\bar{X}) = \frac{\sigma}{\sqrt{n}}$
• As sample size increases, standard deviation of the sample mean decreases!
• Central Limit Theorem: if the sample size is large enough, then the sample mean $\bar{X}$ has an approximately Normal distribution

Binomial/Normal Dist.
For Proportions
• Sample count Y follows a Binomial distribution, which we can calculate from Binomial tables in small samples
• If the sample size is large ($np \geq 10$ and $n(1-p) \geq 10$), the sample count Y approximately follows a Normal distribution:
$\text{mean}(Y) = np$, $SD(Y) = \sqrt{np(1-p)}$
• If the sample size is large, the sample proportion $\hat{p}$ also approximately follows a Normal distribution:
$\text{mean}(\hat{p}) = p$, $SD(\hat{p}) = \sqrt{\frac{p(1-p)}{n}}$

Summary of Sampling Distribution

| Type of Data | Unknown Parameter | Statistic | Variability of Statistic | Distribution of Statistic |
| --- | --- | --- | --- | --- |
| Continuous | $\mu$ | $\bar{X}$ | $SD(\bar{X}) = \frac{\sigma}{\sqrt{n}}$ | Normal (if n large) |
| Count ($X_i$ = 0 or 1) | $p$ | $\hat{p}$ | $SD(\hat{p}) = \sqrt{\frac{p(1-p)}{n}}$ | Binomial (if n small), Normal (if n large) |

Introduction to Inference
• Use sample estimate as center of a confidence interval of likely values for population parameter
• All confidence intervals have the same form: Estimate ± Margin of Error
• The margin of error is always some multiple of the standard deviation (or standard error) of the statistic
• Hypothesis test: do the data support a specific hypothesis?
1. Formulate your Null and Alternative Hypotheses
2. Calculate the test statistic: difference between data and your null hypothesis
3. Find the p-value for the test statistic: how probable is your data if the null hypothesis is true?
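
As a rough illustration of "Estimate ± Margin of Error" and the three hypothesis-test steps, here is a minimal Python sketch for a single mean with a known population SD, using $SD(\bar{X}) = \sigma/\sqrt{n}$ from the sampling-distribution slides. The data values, the assumed known $\sigma = 0.3$, the null value 5.0, and the function names `z_interval` and `z_test` are all made up for illustration; they do not come from the course.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_interval(sample, sigma, level=0.95):
    """Confidence interval: estimate +/- z* times sigma / sqrt(n)."""
    n = len(sample)
    estimate = mean(sample)
    # z* is the standard normal critical value for the chosen level.
    z_star = NormalDist().inv_cdf(0.5 + level / 2)
    margin = z_star * sigma / sqrt(n)
    return estimate - margin, estimate + margin


def z_test(sample, sigma, mu0):
    """Two-sided test of H0: mu = mu0 against Ha: mu != mu0."""
    n = len(sample)
    # Step 2: test statistic = (estimate - null value) / SD of the estimate.
    z = (mean(sample) - mu0) / (sigma / sqrt(n))
    # Step 3: p-value = probability of a statistic at least this extreme under H0.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data, invented for this example only.
data = [5.1, 4.9, 5.6, 5.8, 5.2, 5.4, 5.0, 5.5, 5.3]
low, high = z_interval(data, sigma=0.3)
z, p = z_test(data, sigma=0.3, mu0=5.0)
print(f"95% CI: ({low:.3f}, {high:.3f})")
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

When the population SD is unknown, the same structure applies with the sample SD and a t critical value in place of the normal one; the Python standard library has no t distribution, so that case is left out of this sketch.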

Inference: Single Population Mean
• Known SD $\sigma$: confidence intervals and test statistics involve standard deviation and normal critical values
$\left(\bar{X} - Z^* \frac{\sigma}{\sqrt{n}},\ \bar{X} + Z^* \frac{\sigma}{\sqrt{n}}\right)$, $T = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}}$
• Unknown SD $\sigma$: confidence intervals and test statistics involve standard error and critical values from a t distribution with n-1 degrees of freedom
$\left(\bar{X} - t^*_{n-1} \frac{s}{\sqrt{n}},\ \bar{X} + t^*_{n-1} \frac{s}{\sqrt{n}}\right)$, $T = \frac{\bar{X} - \mu_0}{s/\sqrt{n}}$
• t distribution has wider tails (more conservative)

Inference: Comparing Means $\mu_1$ and $\mu_2$
• Known $\sigma_1$ and $\sigma_2$: two-sample Z statistic uses normal distribution
$Z = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}}$
• Unknown $\sigma_1$ and $\sigma_2$: two-sample T statistic uses t distribution with degrees of freedom $k = \min(n_1 - 1, n_2 - 1)$
$T = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}$
$\left(\bar{X}_1 - \bar{X}_2 - t^*_k \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}},\ \bar{X}_1 - \bar{X}_2 + t^*_k \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}\right)$
• Matched pairs: instead of difference of two samples $X_1$ and $X_2$, do a one-sample test on the difference d
$T = \frac{\bar{X}_d - 0}{s_d/\sqrt{n}}$
$\left(\bar{X}_d - t^*_{n-1} \frac{s_d}{\sqrt{n}},\ \bar{X}_d + t^*_{n-1} \frac{s_d}{\sqrt{n}}\right)$

Inference: Population Proportion p
• Confidence interval for p uses the Normal distribution and the sample proportion:
$\left(\hat{p} - Z^* \sqrt{\frac{\hat{p}(1-\hat{p})}{n}},\ \hat{p} + Z^* \sqrt{\frac{\hat{p}(1-\hat{p})}{n}}\right)$ where $\hat{p} = \frac{Y}{n}$
• Hypothesis test for $p = p_0$ also uses the Normal distribution and the sample proportion:
$Z = \frac{\hat{p} - p_0}{\sqrt{\frac{p_0(1-p_0)}{n}}}$

Inference: Comparing Proportions $p_1$ and $p_2$
• Hypothesis test for $p_1 - p_2 = 0$ uses Normal distribution and complicated test statistic
$Z = \frac{\hat{p}_1 - \hat{p}_2}{SE(\hat{p}_1 - \hat{p}_2)}$ where $\hat{p}_1 = \frac{Y_1}{n_1}$ and $\hat{p}_2 = \frac{Y_2}{n_2}$
with pooled standard error:
$SE(\hat{p}_1 - \hat{p}_2) = \sqrt{\hat{p}_p(1-\hat{p}_p)\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$ where $\hat{p}_p = \frac{Y_1 + Y_2}{n_1 + n_2}$
• Confidence interval for $p_1 - p_2$ also uses Normal distribution and sample proportions
$\hat{p}_1 - \hat{p}_2 \pm Z^* \sqrt{\frac{\hat{p}_1(1-\hat{p}_1)}{n_1} + \frac{\hat{p}_2(1-\hat{p}_2)}{n_2}}$

Linear Regression
• Use best fit line to summarize linear relationship between two continuous variables X and Y:
$Y_i = \alpha + \beta X_i$
• The slope ($b = r \cdot \frac{s_y}{s_x}$): the average
change you get in the Y variable if you increase the X variable by one
• The intercept ($a = \bar{Y} - b\bar{X}$): average value of the Y variable when the X variable is equal to zero
• Linear equation can be used to predict response variable Y for a value of our explanatory variable X

Significance in Linear Regression
• Does the regression line show a significant linear relationship between the two variables?
$H_0: \beta = 0$ versus $H_a: \beta \neq 0$
• Uses the t distribution with n-2 degrees of freedom and a test statistic calculated from JMP output
$T = \frac{b}{SE(b)}$
• Can also calculate confidence intervals using JMP output and t distribution with n-2 degrees of freedom
$b \pm t^*_{n-2}\, SE(b)$
$a \pm t^*_{n-2}\, SE(a)$

Good Luck on Final!
• I have office hours until 2pm today
• Might be able to meet at other times
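
For extra practice with the regression slides, the slope $b = r \cdot s_y/s_x$, intercept $a = \bar{Y} - b\bar{X}$, and test statistic $T = b/SE(b)$ can be sketched in Python as below. The slides take $SE(b)$ from JMP output; this sketch instead assumes the standard textbook formula $SE(b) = s_e / \sqrt{\sum (x_i - \bar{x})^2}$, where $s_e$ is the residual standard deviation. The data and the function name `fit_line` are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def fit_line(x, y):
    """Return intercept a, slope b, correlation r, T = b / SE(b), and df = n - 2."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    s_x, s_y = stdev(x), stdev(y)
    # Sample correlation r between x and y.
    r = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / ((n - 1) * s_x * s_y)
    b = r * s_y / s_x        # slope
    a = y_bar - b * x_bar    # intercept
    # Assumed formula (not from the slides): SE(b) = s_e / sqrt(sum((x - x_bar)^2)).
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    s_e = sqrt(sum(e * e for e in residuals) / (n - 2))
    se_b = s_e / (s_x * sqrt(n - 1))  # s_x * sqrt(n - 1) equals sqrt(sum((x - x_bar)^2))
    return a, b, r, b / se_b, n - 2


# Hypothetical data, invented for this example only.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
a, b, r, t_stat, df = fit_line(x, y)
print(f"fitted line: Y = {a:.2f} + {b:.2f} X, r = {r:.3f}")
print(f"T = {t_stat:.1f} on {df} degrees of freedom")
```

The resulting T would then be compared against a t table with n-2 degrees of freedom, as on the Significance in Linear Regression slide.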