Lecture 2

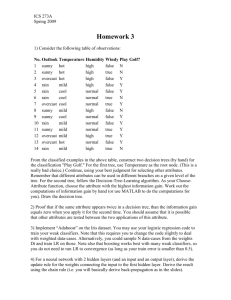

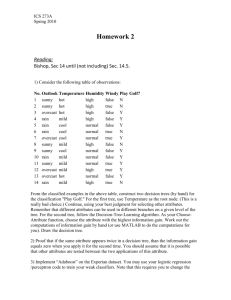

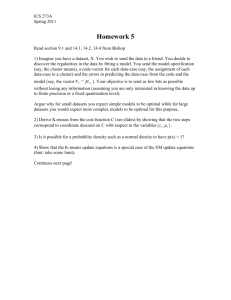

Databases and Data Mining
Lecture 2: Predictive Data Mining
Fall 2005
Peter van der Putten (putten_at_liacs.nl)

Course Outline

• Objective
  – Understand the basics of data mining
  – Gain understanding of the potential for applying it in the bioinformatics domain
  – Hands on experience
• Schedule

| Date | Time | Room | Activity |
|---|---|---|---|
| 4-11 | 13.45 - 15.30 | 174 | Lecture |
| 18-11 | 13.45 - 15.30 | 413 | Lecture |
| | 15.45 - 17.30 | 306/308 | Practical Assignments |
| 25-11 | 13.45 - 15.30 | 413 | Lecture |
| 2-12 | 13.45 - 15.30 | 413 | Lecture |
| | 15.45 - 17.30 | 306/308 | Practical Assignments |

• Evaluation
  – Practical assignment (2nd) plus take home exercise
• Website
  – http://www.liacs.nl/~putten/edu/dbdm05/

Agenda Today

• Recap Lecture 1
  – A short introduction to life
  – Data mining explained
• Predictive data mining concepts
  – Classification and regression
  – Bioinformatics applications
• Predictive data mining techniques
  – Logistic Regression
  – Nearest Neighbor
  – Decision Trees
  – Naive Bayes
  – Neural Networks
• Evaluating predictive models
• WEKA Demo (optional)
• Lab session
  – Predictive Modeling using WEKA

What is data mining?

Sources of (artificial) intelligence

• Reasoning versus learning
• Learning from data
  – Patient data
  – Customer records
  – Stock prices
  – Piano music
  – Criminal mug shots
  – Websites
  – Robot perceptions
  – Etc.

Some working definitions…

• 'Data Mining' and 'Knowledge Discovery in Databases' (KDD) are used interchangeably
• Data mining =
  – The process of discovery of interesting, meaningful and actionable patterns hidden in large amounts of data
• Multidisciplinary field originating from artificial intelligence, pattern recognition, statistics, machine learning, bioinformatics, econometrics, …

A short summary of life

Bio Building Blocks

Biotech Data Mining Applications

The Promise…
Discovering the structure of DNA

James Watson & Francis Crick - Rosalind Franklin

The structure of DNA

DNA Trivia

• DNA stores instructions for the cell to perform its functions
• Double helix, two interwoven strands
• Each strand is a sequence of so-called nucleotides
• Deoxyribonucleic acid (DNA) comprises 4 different types of nucleotides (bases): adenine (A), thymine (T), cytosine (C) and guanine (G)
  – Nucleotide uracil (U) doesn't occur in DNA
• Each strand is the reverse complement of the other
• Complementary bases
  – A with T
  – C with G

DNA Trivia

• Each nucleus contains 3 x 10^9 nucleotides
• Human body contains 3 x 10^12 cells
• Human DNA contains 26k expressed genes; each gene codes for a protein in principle
• DNA of different persons varies 0.2% or less
• Human DNA contains 3.2 x 10^9 base pairs
  – ΦX174 virus: 5,386
  – Salamander: 100 x 10^9
  – Amoeba dubia: 670 x 10^9

Primary Protein Structure

• Proteins are built out of peptides, which are polymer chains of amino acids
• Twenty amino acids are encoded by the standard genetic code shared by nearly all organisms and are called standard amino acids (100 amino acids exist in nature)

Protein Structure from Primary to Quaternary

[Figure: protein structure levels. Source: Wikipedia]

Proteins: 3D Structure

A representation of the 3D structure of myoglobin, showing coloured alpha helices. This protein was the first to have its structure solved by X-ray crystallography by Max Perutz and Sir John Cowdery Kendrew in 1958, which led to them receiving a Nobel Prize in Chemistry.
http://en.wikipedia.org/wiki/Protein

Proteins: 3D Structure

G Protein-Coupled Receptors (GPCR) represent more than half the current drug targets

From DNA to Proteins

Standard Genetic Code

• Each tri-nucleotide unit ('codon') codes for one amino acid
• This code is the same for nearly all living organisms

The Standard Genetic Code (by codon; source: Wikipedia)

| 1st base | 2nd base U | 2nd base C | 2nd base A | 2nd base G |
|---|---|---|---|---|
| U | UUU (Phe/F) Phenylalanine | UCU (Ser/S) Serine | UAU (Tyr/Y) Tyrosine | UGU (Cys/C) Cysteine |
| U | UUC (Phe/F) Phenylalanine | UCC (Ser/S) Serine | UAC (Tyr/Y) Tyrosine | UGC (Cys/C) Cysteine |
| U | UUA (Leu/L) Leucine | UCA (Ser/S) Serine | UAA Ochre (Stop) | UGA Opal (Stop) |
| U | UUG (Leu/L) Leucine, Start | UCG (Ser/S) Serine | UAG Amber (Stop) | UGG (Trp/W) Tryptophan |
| C | CUU (Leu/L) Leucine | CCU (Pro/P) Proline | CAU (His/H) Histidine | CGU (Arg/R) Arginine |
| C | CUC (Leu/L) Leucine | CCC (Pro/P) Proline | CAC (His/H) Histidine | CGC (Arg/R) Arginine |
| C | CUA (Leu/L) Leucine | CCA (Pro/P) Proline | CAA (Gln/Q) Glutamine | CGA (Arg/R) Arginine |
| C | CUG (Leu/L) Leucine, Start | CCG (Pro/P) Proline | CAG (Gln/Q) Glutamine | CGG (Arg/R) Arginine |
| A | AUU (Ile/I) Isoleucine, Start2 | ACU (Thr/T) Threonine | AAU (Asn/N) Asparagine | AGU (Ser/S) Serine |
| A | AUC (Ile/I) Isoleucine | ACC (Thr/T) Threonine | AAC (Asn/N) Asparagine | AGC (Ser/S) Serine |
| A | AUA (Ile/I) Isoleucine | ACA (Thr/T) Threonine | AAA (Lys/K) Lysine | AGA (Arg/R) Arginine |
| A | AUG (Met/M) Methionine, Start1 | ACG (Thr/T) Threonine | AAG (Lys/K) Lysine | AGG (Arg/R) Arginine |
| G | GUU (Val/V) Valine | GCU (Ala/A) Alanine | GAU (Asp/D) Aspartic acid | GGU (Gly/G) Glycine |
| G | GUC (Val/V) Valine | GCC (Ala/A) Alanine | GAC (Asp/D) Aspartic acid | GGC (Gly/G) Glycine |
| G | GUA (Val/V) Valine | GCA (Ala/A) Alanine | GAA (Glu/E) Glutamic acid | GGA (Gly/G) Glycine |
| G | GUG (Val/V) Valine, Start2 | GCG (Ala/A) Alanine | GAG (Glu/E) Glutamic acid | GGG (Gly/G) Glycine |

The Standard Genetic Code (by amino acid; source: Wikipedia)

| Amino acid | Code | Codons |
|---|---|---|
| Ala | A | GCU, GCC, GCA, GCG |
| Arg | R | CGU, CGC, CGA, CGG, AGA, AGG |
| Asn | N | AAU, AAC |
| Asp | D | GAU, GAC |
| Cys | C | UGU, UGC |
| Gln | Q | CAA, CAG |
| Glu | E | GAA, GAG |
| Gly | G | GGU, GGC, GGA, GGG |
| His | H | CAU, CAC |
| Ile | I | AUU, AUC, AUA |
| Leu | L | UUA, UUG, CUU, CUC, CUA, CUG |
| Lys | K | AAA, AAG |
| Met | M | AUG |
| Phe | F | UUU, UUC |
| Pro | P | CCU, CCC, CCA, CCG |
| Ser | S | UCU, UCC, UCA, UCG, AGU, AGC |
| Thr | T | ACU, ACC, ACA, ACG |
| Trp | W | UGG |
| Tyr | Y | UAU, UAC |
| Val | V | GUU, GUC, GUA, GUG |
| Start | | AUG, GUG |
| Stop | | UAG, UGA, UAA |

Importance of Combinatorial Gene Control

• Combinations of a few gene regulatory proteins can generate many different cell types during development

Some working definitions…

• Bioinformatics =
  – Bioinformatics is the research, development, or application of computational tools and approaches for expanding the use of biological, medical, behavioral or health data, including those to acquire, store, organize, archive, analyze, or visualize such data [http://www.bisti.nih.gov/].
  – Or more pragmatic: Bioinformatics or computational biology is the use of techniques from applied mathematics, informatics, statistics, and computer science to solve biological problems [Wikipedia Nov 2005]
• NCBI Tools for data mining:
  – Nucleotide sequence analysis
  – Protein sequence analysis
  – Structures
  – Genome analysis
  – Gene expression
• Data mining or not?

Bioinformatics and data mining

• From sequence to structure to function
• Genomics (DNA), Transcriptomics (RNA), Proteomics (proteins), Metabolomics (metabolites)
• Pattern matching and search
• Sequence matching and alignment
• Structure prediction
  – Predicting structure from sequence
  – Protein secondary structure prediction
• Function prediction
  – Predicting function from structure
  – Protein localization
• Expression analysis
  – Genes: microarray data analysis etc.
  – Proteins
• Regulation analysis

Bioinformatics and data mining

• Classical medical and clinical studies
• Medical decision support tools
• Text mining on medical research literature (MEDLINE)
• Spectrometry, imaging
• Systems biology and modeling biological systems
• Population biology & simulation
• Spin-off: biologically inspired computational learning
  – Evolutionary algorithms, neural networks, artificial immune systems

Data mining revisited

Genomic Microarrays – Case Study

• Problem:
  – Leukemia (different types of leukemia cells look very similar)
  – Given data for a number of samples (patients), can we
    • Accurately diagnose the disease?
    • Predict outcome for given treatment?
    • Recommend best treatment?
• Solution
  – Data mining on microarray data

Microarray data

• 50 most important genes
• Rows: genes
• Columns: samples / patients

Example: ALL/AML data

• 38 training patients, 34 test patients, ~7,000 patient attributes (microarray gene data)
• 2 classes: Acute Lymphoblastic Leukemia (ALL) vs Acute Myeloid Leukemia (AML)
• Use train data to build diagnostic model
• Results on test data: 33/34 correct; the 1 error may be a mislabeled sample

[Figure: microarray heat map with an ALL group and an AML group]

The Knowledge Discovery Process

[Figure: process cycle with the steps Problem Objectives; Data Mining Objectives & Design; Data Understanding; Data Preparation; Modeling; Evaluation; Deployment, Application & Monitoring]

Some working definitions…

• Concepts: kinds of things that can be learned
  – Aim: intelligible and operational concept description
  – Example: the relation between patient characteristics and the probability to be diabetic
• Instances: the individual, independent examples of a concept
  – Example: a patient, candidate drug etc.
• Attributes: measuring aspects of an instance
  – Example: age, weight, lab tests, microarray data etc.

Pattern or attribute space

Data mining tasks

• Predictive data mining
  – Classification: classify an instance into a category
  – Regression: estimate some continuous value
• Descriptive data mining
  – Matching & search: finding instances similar to x
  – Clustering: discovering groups of similar instances
  – Association rule extraction: if a & b then c
  – Summarization: summarizing group descriptions
  – Link detection: finding relationships
  – …

Data Mining Tasks: Classification

[Figure: instances plotted in attribute space; axes: age and weight]

• The goal of a classifier is to separate classes on the basis of known attributes
• The classifier can be applied to an instance with unknown class
• For instance, classes are healthy (circle) and sick (square); attributes are age and weight

Data Preparation for Classification

• On attributes
  – Attribute selection
  – Attribute construction
• On attribute values
  – Outlier removal / clipping
  – Normalization
  – Creating dummies
  – Missing values imputation
  – …
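The attribute value operations listed on the data preparation slide can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function names, the clipping range of 0 to 100 and the example values are hypothetical and not taken from the lecture, and mean imputation and min-max scaling are just one common choice for each step.

```python
from statistics import mean

def clip(values, low, high):
    """Outlier clipping: force known values into [low, high]; keep None as missing."""
    return [None if v is None else min(max(v, low), high) for v in values]

def impute_mean(values):
    """Missing value imputation: replace None by the mean of the known values."""
    known = [v for v in values if v is not None]
    fill = mean(known)
    return [fill if v is None else v for v in values]

def min_max_normalize(values):
    """Normalization: rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

def make_dummies(values):
    """Creating dummies: one 0/1 indicator column per distinct category."""
    categories = sorted(set(values))
    return {c: [1 if v == c else 0 for v in values] for c in categories}

# Hypothetical attribute columns: one missing age and one implausible age.
ages = [25, 40, None, 67, 130]
prepared = min_max_normalize(impute_mean(clip(ages, 0, 100)))
dummies = make_dummies(["male", "female", "female"])
print(prepared)
print(dummies)
```

Clipping is applied before imputation here so that the outlier does not distort the mean used to fill the missing value; other orders are possible and depend on the data.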
Examples of Classification Techniques

• Majority class vote
• Logistic Regression
• Nearest Neighbor
• Decision Trees, Decision Stumps
• Naive Bayes
• Neural Networks
• Genetic algorithms
• Artificial Immune Systems

Example classification algorithm: Logistic Regression

• Linear regression
  – For regression, not classification (outcome numeric, not symbolic class)
  – Predicted value is a linear combination of the inputs

$$y = a x_1 + b x_2 + c$$

• Logistic regression
  – Apply the logistic function to the linear regression formula
  – Scales output between 0 and 1
  – For binary classification use thresholding with a threshold t

$$y = \frac{1}{1 + e^{-(a x_1 + b x_2 + c)}}$$

$$y \geq t \Rightarrow c_1, \qquad y < t \Rightarrow c_2$$

Logistic Regression in attribute space

[Figure: classification in attribute space; axes: e.g. age and e.g. weight]

• Linear decision boundaries can be represented well with linear classifiers like logistic regression
• Non-linear decision boundaries cannot be represented well with linear classifiers like logistic regression
• Well known example: the XOR problem

Example classification algorithm: Nearest Neighbour

• Data itself is the classification model, so no model abstraction like a tree etc.
• For a given instance x, search the k instances that are most similar to x
• Classify x as the most frequently occurring class among the k most similar instances

Nearest Neighbor in attribute space

[Figure: classification of a new instance in attribute space; axes: e.g. age and e.g. weight]

• Any decision area possible
• Condition: enough data available

Example Classification Algorithm: Decision Trees

• 20000 patients: age > 67?
  – yes: 1200 patients: weight > 85 kg?
    • yes: 400 patients, diabetic (50%)
    • no: 800 patients, diabetic (10%)
  – no: 18800 patients: gender = male?
    • no: etc.

Building Trees: Weather Data example

| Outlook | Temperature | Humidity | Windy | Play? |
|---|---|---|---|---|
| sunny | hot | high | false | No |
| sunny | hot | high | true | No |
| overcast | hot | high | false | Yes |
| rain | mild | high | false | Yes |
| rain | cool | normal | false | Yes |
| rain | cool | normal | true | No |
| overcast | cool | normal | true | Yes |
| sunny | mild | high | false | No |
| sunny | cool | normal | false | Yes |
| rain | mild | normal | false | Yes |
| sunny | mild | normal | true | Yes |
| overcast | mild | high | true | Yes |
| overcast | hot | normal | false | Yes |
| rain | mild | high | true | No |

KDNuggets / Witten & Frank, 2000

Building Trees

• An internal node is a test on an attribute.
• A branch represents an outcome of the test, e.g., Color=red.
• A leaf node represents a class label or class label distribution.
• At each node, one attribute is chosen to split training examples into distinct classes as much as possible.
• A new case is classified by following a matching path to a leaf node.

Example tree for the weather data:

• Outlook
  – sunny: Humidity
    • high: No
    • normal: Yes
  – overcast: Yes
  – rain: Windy
    • true: No
    • false: Yes

Split on what attribute?

• Which is the best attribute to split on?
  – The one which will result in the smallest tree
  – Heuristic: choose the attribute that produces the best separation of classes (the "purest" nodes)
• Popular impurity measure: information
  – Measured in bits
  – At a given node, how much more information do you need to classify an instance correctly?
    • What if at a given node all instances belong to one class?
• Strategy
  – Choose the attribute that results in the greatest information gain

Which attribute to select?

• Candidate: outlook attribute
• What is the info for the leaves?
  – Info([2,3]) = 0.971 bits
  – Info([4,0]) = 0 bits
  – Info([3,2]) = 0.971 bits
• Total: take the average weighted by the number of instances
  – Info([2,3], [4,0], [3,2]) = 5/14 * 0.971 + 4/14 * 0 + 5/14 * 0.971 = 0.693 bits
• What was the info before the split?
  – Info([9,5]) = 0.940 bits
• What is the gain for a split on outlook?
  – Gain(outlook) = 0.940 - 0.693 = 0.247 bits

Which attribute to select?

[Figure: the four candidate splits with their information gain]

• Outlook: Gain = 0.247
• Humidity: Gain = 0.152
• Windy: Gain = 0.048
• Temperature: Gain = 0.029

Continuing to split

• gain("Humidity") = 0.971 bits
• gain("Temperature") = 0.571 bits
• gain("Windy") = 0.020 bits

The final decision tree

• Note: not all leaves need to be pure; sometimes identical instances have different classes
• Splitting stops when data can't be split any further

Computing information

• Information is measured in bits
  – When a leaf contains one class only, information is 0 (pure)
  – When the number of instances is the same for all classes, information reaches a maximum (impure)
• Measure: information value or entropy

$$\text{entropy}(p_1, p_2, \ldots, p_n) = -p_1 \log p_1 - p_2 \log p_2 - \ldots - p_n \log p_n$$

• Example (log base 2)
  – Info([2,3,4]) = -2/9 * log(2/9) - 3/9 * log(3/9) - 4/9 * log(4/9)

Decision Trees in attribute space

[Figure: decision areas in attribute space; axes: age and weight]

• The goal of the classifier is to separate classes (circle, square) on the basis of the attributes age and weight
• Each line corresponds to a split in the tree
• Decision areas are 'tiles' in attribute space

Example classification algorithm: Naive Bayes

• Naive Bayes = probabilistic classifier based on Bayes' rule
• Will produce a probability for each target / outcome class
• 'Naive' because it assumes independence between attributes (uncorrelated)

Bayes's rule

• Probability of event H given evidence E:

$$\Pr[H \mid E] = \frac{\Pr[E \mid H]\,\Pr[H]}{\Pr[E]}$$

• A priori probability of H: Pr[H]
  – Probability of event before evidence is seen
• A posteriori probability of H: Pr[H | E]
  – Probability of event after evidence is seen

From Bayes, "Essay towards solving a problem in the doctrine of chances" (1763). Thomas Bayes, born 1702 in London, England; died 1761 in Tunbridge Wells, Kent, England.

Naïve Bayes for classification

• Classification learning: what's the probability of the class given an instance?
  – Evidence E = instance
  – Event H = class value for instance
• Naïve assumption: evidence splits into parts (i.e. attributes) that are independent given the class

$$\Pr[H \mid E] = \frac{\Pr[E_1 \mid H]\,\Pr[E_2 \mid H] \cdots \Pr[E_n \mid H]\,\Pr[H]}{\Pr[E]}$$

Weather data example

Evidence E (a new instance with unknown class):

| Outlook | Temp. | Humidity | Windy | Play |
|---|---|---|---|---|
| Sunny | Cool | High | True | ? |
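The naive Bayes formula applied to this new instance can be sketched as follows. This is a minimal sketch, not the implementation used in WEKA: the function names `train` and `posterior` are hypothetical, the data are the 14 weather instances from the "Building Trees" slide, and probabilities are plain relative frequencies without any correction for zero counts.

```python
from collections import Counter, defaultdict

# The 14 weather instances (outlook, temperature, humidity, windy, play).
WEATHER = [
    ("sunny", "hot", "high", "false", "no"),
    ("sunny", "hot", "high", "true", "no"),
    ("overcast", "hot", "high", "false", "yes"),
    ("rain", "mild", "high", "false", "yes"),
    ("rain", "cool", "normal", "false", "yes"),
    ("rain", "cool", "normal", "true", "no"),
    ("overcast", "cool", "normal", "true", "yes"),
    ("sunny", "mild", "high", "false", "no"),
    ("sunny", "cool", "normal", "false", "yes"),
    ("rain", "mild", "normal", "false", "yes"),
    ("sunny", "mild", "normal", "true", "yes"),
    ("overcast", "mild", "high", "true", "yes"),
    ("overcast", "hot", "normal", "false", "yes"),
    ("rain", "mild", "high", "true", "no"),
]

def train(rows):
    """Count classes and, per (attribute index, class), the attribute values."""
    class_counts = Counter(row[-1] for row in rows)
    value_counts = defaultdict(Counter)
    for row in rows:
        for i, value in enumerate(row[:-1]):
            value_counts[(i, row[-1])][value] += 1
    return class_counts, value_counts

def posterior(instance, class_counts, value_counts):
    """Pr[class | instance] under the naive independence assumption."""
    total = sum(class_counts.values())
    likelihood = {}
    for cls, n_cls in class_counts.items():
        score = n_cls / total  # a priori probability Pr[H]
        for i, value in enumerate(instance):
            score *= value_counts[(i, cls)][value] / n_cls  # Pr[E_i | H]
        likelihood[cls] = score
    norm = sum(likelihood.values())  # plays the role of Pr[E]
    return {cls: score / norm for cls, score in likelihood.items()}

class_counts, value_counts = train(WEATHER)
probs = posterior(("sunny", "cool", "high", "true"), class_counts, value_counts)
print({cls: round(p, 3) for cls, p in probs.items()})
```

For this instance the sketch gives roughly 0.205 for "yes" and 0.795 for "no". Dividing by the sum of the class likelihoods avoids having to compute Pr[E] separately.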
Probability of class "yes" given the evidence E (the new instance Sunny, Cool, High, True):

$$\Pr[\text{yes} \mid E] = \frac{\Pr[\text{Outlook} = \text{Sunny} \mid \text{yes}] \times \Pr[\text{Temperature} = \text{Cool} \mid \text{yes}] \times \Pr[\text{Humidity} = \text{High} \mid \text{yes}] \times \Pr[\text{Windy} = \text{True} \mid \text{yes}] \times \Pr[\text{yes}]}{\Pr[E]} = \frac{\frac{2}{9} \times \frac{3}{9} \times \frac{3}{9} \times \frac{3}{9} \times \frac{9}{14}}{\Pr[E]}$$

Probabilities for weather data

| Attribute | Value | Count Yes | Count No | Fraction of Yes | Fraction of No |
|---|---|---|---|---|---|
| Outlook | Sunny | 2 | 3 | 2/9 | 3/5 |
| Outlook | Overcast | 4 | 0 | 4/9 | 0/5 |
| Outlook | Rainy | 3 | 2 | 3/9 | 2/5 |
| Temperature | Hot | 2 | 2 | 2/9 | 2/5 |
| Temperature | Mild | 4 | 2 | 4/9 | 2/5 |
| Temperature | Cool | 3 | 1 | 3/9 | 1/5 |
| Humidity | High | 3 | 4 | 3/9 | 4/5 |
| Humidity | Normal | 6 | 1 | 6/9 | 1/5 |
| Windy | False | 6 | 2 | 6/9 | 2/5 |
| Windy | True | 3 | 3 | 3/9 | 3/5 |
| Play | (all) | 9 | 5 | 9/14 | 5/14 |

The counts are taken from the 14 weather instances listed earlier ("Rainy" here is the value "rain" in that table).

• A new day: Outlook = Sunny, Temp. = Cool, Humidity = High, Windy = True, Play = ?
• Likelihood of the two classes
  – For "yes" = 2/9 × 3/9 × 3/9 × 3/9 × 9/14 = 0.0053
  – For "no" = 3/5 × 1/5 × 4/5 × 3/5 × 5/14 = 0.0206
• Conversion into a probability by normalization:
  – P("yes") = 0.0053 / (0.0053 + 0.0206) = 0.205
  – P("no") = 0.0206 / (0.0053 + 0.0206) = 0.795

Extensions

• Numeric attributes
  – Fit a normal distribution to calculate probabilities
• What if an attribute value doesn't occur with every class value? (e.g. "Humidity = high" for class "yes")
  – Probability will be zero!
  – A posteriori probability will also be zero! (No matter how likely the other values are!)

$$\Pr[\text{Humidity} = \text{High} \mid \text{yes}] = 0 \;\Rightarrow\; \Pr[\text{yes} \mid E] = 0$$

  – Remedy: add 1 to the count for every attribute value-class combination (Laplace estimator)
  – Result: probabilities will never be zero! (also: stabilizes probability estimates)

Source: Witten & Eibe

Naïve Bayes: discussion

• Naïve Bayes works surprisingly well (even if the independence assumption is clearly violated)
• Why? Because classification doesn't require accurate probability estimates as long as the maximum probability is assigned to the correct class
• However: adding too many redundant attributes will cause problems (e.g. identical attributes)

Naive Bayes in attribute space

[Figure: classification in attribute space; axes: e.g. age and e.g. weight]

• NB can model non…

Example classification algorithm: Neural Networks

• Inspired by neuronal computation in the brain (McCulloch & Pitts 1943 (!))
• Input (attributes) is coded as activation on the input layer neurons; activation feeds forward through a network of weighted links between neurons and causes activations on the output neurons (for instance diabetic yes/no)
• The algorithm learns to find optimal weights using the training instances and a general learning rule.

[Figure: network diagram; input: e.g. customer characteristics; output: e.g. response]

Neural Networks

• Example simple network (2 layers): the inputs age and body_mass_index are connected through the weights weight_age and weight_body_mass_index to one output, the probability of being diabetic

$$\Pr[\text{diabetic}] = f(\text{age} \cdot \text{weight}_{\text{age}} + \text{body\_mass\_index} \cdot \text{weight}_{\text{body\_mass\_index}})$$

Neural Networks in Pattern Space

[Figure: classification in pattern space; axes: e.g. age and e.g. weight]

• Simple network: only a line available (why?) to separate classes
• Multilayer network: any classification boundary possible

Evaluating Classifiers

• Root mean squared error (RMSE), Area Under the ROC Curve (AUC), confusion matrices, classification accuracy
  – Accuracy = 78% on test set means 78% of classifications were correct
• Hold out validation, n-fold cross validation, leave one out validation
  – Build a model on a training set, evaluate on a test set
  – Hold out: single test set (e.g. one third of the data)
  – n-fold cross validation
    • Divide into n groups
    • Perform n cycles, each cycle with a different fold as test set
  – Leave one out
    • Test set of one instance, cycle through all instances
• Investigating the sources of error
  – Bias variance decomposition
  – Informal definition
    • Bias: error due to limitations of the model representation (e.g. linear classifier on a non-linear problem); even with infinite data there will be bias
    • Variance: error due to instability of the classifier over different samples; error due to sample sizes, overfitting

Example Results Predicting Survival for Head & Neck Cancer

[Figure: results for TNM Symbolic and TNM Numeric. Average and standard deviation (SD) on the classification accuracy for all classifiers]

Example Results Head and Neck Cancer: Bias Variance Decomposition

• Quiz: What could be a strategy to improve these models?

What have we learned today

• A primer into biology concepts relevant for bioinformatics data mining
• An introduction into data mining in general
  – Definition, process, data mining tasks
• Various data mining techniques for predictive data mining
• This afternoon: hands on exercises with data mining tool WEKA
• Questions?
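
As a supplement to the "Evaluating Classifiers" slide, n-fold cross validation and leave one out validation can be sketched in plain Python. The sketch makes several assumptions: the helper names are hypothetical, folds are assigned round-robin rather than at random, and the model is the simplest technique from the list of classifiers, a majority class vote, applied to the Play? column of the weather data.

```python
from collections import Counter

def folds(n_instances, n_folds):
    """Assign instance indices to n_folds groups in round-robin order."""
    return [list(range(f, n_instances, n_folds)) for f in range(n_folds)]

def majority_class(labels):
    """Majority class vote: always predict the most frequent training class."""
    return Counter(labels).most_common(1)[0][0]

def cross_validate(labels, n_folds):
    """Return the accuracy per cycle, each cycle using a different fold as test set."""
    accuracies = []
    for test_idx in folds(len(labels), n_folds):
        test_set = set(test_idx)
        train_labels = [y for i, y in enumerate(labels) if i not in test_set]
        prediction = majority_class(train_labels)
        correct = sum(1 for i in test_idx if labels[i] == prediction)
        accuracies.append(correct / len(test_idx))
    return accuracies

# Play? column of the 14 weather instances, in table order.
PLAY = ["no", "no", "yes", "yes", "yes", "no", "yes",
        "no", "yes", "yes", "yes", "yes", "yes", "no"]

seven_fold = cross_validate(PLAY, 7)
leave_one_out = cross_validate(PLAY, len(PLAY))
print(sum(seven_fold) / len(seven_fold))
print(sum(leave_one_out) / len(leave_one_out))
```

Leave one out is simply the special case where the number of folds equals the number of instances. A real evaluation would plug in a learned classifier instead of the majority vote and would usually shuffle the instances before assigning folds.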