TUC SLO Results Poster


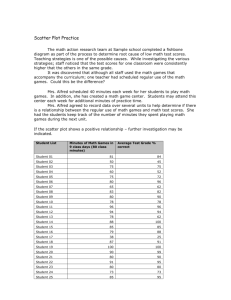

Analyzing Institutional Assessment Results Longitudinally (with nonparametric effect sizes)

Dr. Bradley Thiessen, Director of Institutional Research

Problem: How to analyze institutional SLO assessment results if:
- Different Colleges administer different tests to different students
- Each assessment has its own score scale (some pass-fail only)

Solution: Require all assessments to be scored on a common scale.
- (1) Below (2) Approaching (3) Meets (4) Exceeds expectations

___

Problem: How can we use this score scale for longitudinal analyses?
- A 4-point score scale provides little room for growth

Solution: Analyze changes in the % of students meeting expectations.
- Year 1: 75% met expectations; Year 2: 80% met expectations
- This would indicate an increase in student achievement

___

Problem: This is not a valid method for analyzing achievement growth.
- Changes in the “% of students meeting expectations” vary depending on the choice of cut-score.
- Each assessment within each program uses a different cut-score to define “meeting expectations.”

Assume we administer the same test at Time 1 and Time 2 and the average score increases from 550 to 600. If a cut-score of 500 represented “meeting expectations,” then 63% of students met expectations at Time 1 and 84% met expectations at Time 2. We would conclude achievement increased by 21%. If we had chosen a cut-score of 700, we would conclude achievement remained constant. If we had chosen a cut-score of 800, we would conclude achievement declined by 3%.

Which conclusion is correct? All three conclusions are correct, but our choice of cut-score will determine which conclusion we believe.

The test scores from Time 1 and Time 2 can also be displayed as cumulative distributions (cdf’s). For any given cut-score, the vertical distance between the cdf’s represents the change in the percentage of students scoring above that cut-score. For example, the vertical distance between the cdf’s at a cut-score of 500 is 21% (achievement increased). A cut-score of 700 shows no change in achievement and a cut-score of 800 shows achievement declined by 3%.

[Figure: cdf’s for Time 1 and Time 2. Annotations: +21% (achievement increased), 0% (achievement remained constant), -3% (achievement declined).]

A P-P Plot displays all the vertical gaps between two cdf’s, i.e., the change in the percentage of students scoring at or below all possible cut-scores. The vertical lines represent the same 3 cut-scores used throughout this example. The point (.16, .37) on the P-P plot shows that only 16% of students at Time 2 scored below the 37th percentile from the Time 1 distribution. This point is .21 units (measured vertically) away from the diagonal line, representing the “21% increase in student achievement.”

Since we’re interested in using all possible cut-scores, we’re interested in the area between the P-P Plot and the diagonal reference line.

[Figure: P-P plot with diagonal reference line. Annotations: +21%, -3%; Area = 0.611; V = 0.40.]

The area under the P-P Plot, calculated by

$$\int_0^1 F_1\!\left(F_2^{-1}(p)\right)\,dp = P(X_2 > X_1),$$

can be interpreted as the probability that a randomly selected test score from Time 2 is greater than a randomly selected test score from Time 1. In this example, the area is calculated to be 0.611. If we randomly select a student test score from Time 2, there is a 61% chance it will be greater than a randomly selected test score from Time 1. Thus, achievement increased.

If we assume test scores from Time 1 follow a standard normal distribution and test scores from Time 2 follow a normal distribution with unit variance, we can calculate the following summary statistic:

$$V = \sqrt{2}\,\Phi^{-1}\!\left[P(X_2 > X_1)\right]$$

This V statistic is a scale-invariant effect size of the trends in scores from Time 1 to Time 2. In this example, V is calculated to be approximately 0.40. Thus, scores on this test increased by 0.40 standard deviation units from Time 1 to Time 2.

With these effect sizes, we can use common meta-analysis methods to synthesize assessment results from different tests and programs.

___

Students in the 2010 cohort were assessed on applied knowledge at the developmental and, later, mastery levels. Here are the numbers of students below, approaching, meeting, and exceeding expectations:

| Level | # Below | # Approaching | # Meets | # Exceeds | % meets/exceeds |
|---|---|---|---|---|---|
| Developmental | 1 | 10 | 26 | 0 | 70.3% |
| Mastery | 2 | 8 | 23 | 2 | 71.4% |

It looks like achievement increased, but this is based on only one (semi-arbitrarily) chosen cut-score. We’re interested in using all possible cut-scores.

• Convert data to display the % of students scoring at or below each cut-score

Percentage of students at or below each cut-score:

| Level | Below | Approaching | Meets | Exceeds |
|---|---|---|---|---|
| Developmental | 2.70% | 29.73% | 100% | 100% |
| Mastery | 5.71% | 28.57% | 94.29% | 100% |

• Plot these points on a P-P Plot: (.0571, .0270); (.2857, .2973); (.9429, 1.0000)
• Interpolate a P-P Plot (using cubic splines)
• Estimate the area under the P-P Plot (using numerical integration) and convert to the V statistic.

[Figure: P-P plot with axes labeled Developmental Level and Mastery Level. Area = 0.567; V = 0.240.]

A randomly selected student at the Mastery Level has a 56.7% chance of outscoring a random student at the Developmental Level, even though expectations are higher at the Mastery Level. Performance (relative to expectations) increased by 0.24 standard deviation units. Achievement increased beyond expectations.
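
The dependence on cut-score in the Time 1 / Time 2 example can be checked numerically. The sketch below assumes normal score distributions. The means (550 and 600) come from the example, but the standard deviations (150 and 100) are not stated on the poster and were picked here only because they reproduce the quoted 63% and 84% at a cut-score of 500. The function names are illustrative.

```python
from statistics import NormalDist

# Assumed score distributions. The means (550, 600) come from the example;
# the standard deviations (150, 100) are NOT given on the poster and were
# chosen only because they reproduce the quoted 63% and 84%.
time1 = NormalDist(mu=550, sigma=150)
time2 = NormalDist(mu=600, sigma=100)


def pct_meeting(dist, cut_score):
    """Share of students scoring above the cut-score."""
    return 1.0 - dist.cdf(cut_score)


def change_at_cut(cut_score):
    """Change (Time 2 minus Time 1) in the share above the cut-score."""
    return pct_meeting(time2, cut_score) - pct_meeting(time1, cut_score)


def pp_area(n=10_000):
    """Midpoint-rule estimate of the area under the P-P plot,
    i.e. the integral of F1(F2^{-1}(p)) for p from 0 to 1."""
    total = 0.0
    for i in range(n):
        p = (i + 0.5) / n  # midpoints keep p strictly inside (0, 1)
        total += time1.cdf(time2.inv_cdf(p))
    return total / n


if __name__ == "__main__":
    for cut in (500, 700, 800):
        print(f"cut-score {cut}: change = {change_at_cut(cut):+.1%}")
    print(f"area under P-P plot = {pp_area():.3f}")
```

Under these assumed standard deviations, the changes come out near +21% at 500, zero at 700, and roughly -2.5% at 800, and the area is about 0.61, close to the 0.611 reported in the example. A different choice of standard deviations would shift these numbers.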
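
The link between the area under the P-P plot and the V statistic can be derived from the stated normality assumptions. This is a short sketch that additionally assumes the Time 1 and Time 2 scores are drawn independently. Here $\Phi$ is the standard normal cdf, and $\delta$ (a symbol introduced only for this sketch) is the mean shift from Time 1 to Time 2.

$$
\begin{aligned}
X_1 \sim N(0, 1),\quad X_2 \sim N(\delta, 1) \;&\Rightarrow\; X_2 - X_1 \sim N(\delta, 2) \\
P(X_2 > X_1) &= \Phi\!\left(\frac{\delta}{\sqrt{2}}\right) \\
V = \delta &= \sqrt{2}\,\Phi^{-1}\!\left[P(X_2 > X_1)\right]
\end{aligned}
$$

Under these assumptions V is the mean difference in standard deviation units, which is why an area of 0.5 (no shift) maps to V = 0.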
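
The first steps of the 2010 cohort analysis, and the final conversion to V, can be sketched as follows. The code converts the student counts to cumulative proportions, forms the P-P points, and converts the areas reported on the poster to V. It does not reproduce the cubic-spline interpolation or the numerical integration, since the resulting area depends on spline details the poster does not give. The function names are illustrative.

```python
from itertools import accumulate
from math import sqrt
from statistics import NormalDist

# Student counts from the 2010 cohort table:
# (Below, Approaching, Meets, Exceeds)
developmental = [1, 10, 26, 0]
mastery = [2, 8, 23, 2]


def cumulative_proportions(counts):
    """Proportion of students at or below each level."""
    total = sum(counts)
    return [running / total for running in accumulate(counts)]


def pp_points(time1_counts, time2_counts):
    """P-P points as (Time 2 proportion, Time 1 proportion) pairs,
    dropping the final level, where both proportions are always 1."""
    pairs = zip(cumulative_proportions(time2_counts),
                cumulative_proportions(time1_counts))
    return list(pairs)[:-1]


def area_to_v(area):
    """Convert an area under the P-P plot to V = sqrt(2) * Phi^{-1}(area)."""
    return sqrt(2) * NormalDist().inv_cdf(area)


if __name__ == "__main__":
    for x, y in pp_points(developmental, mastery):
        print(f"({x:.4f}, {y:.4f})")
    # Areas as reported on the poster (not recomputed here).
    for area in (0.611, 0.567):
        print(f"area {area:.3f} -> V = {area_to_v(area):.2f}")
```

The printed points match the three P-P points listed for the cohort, and the two reported areas convert to V values of about 0.40 and 0.24, in line with the figures quoted in the text.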