slides

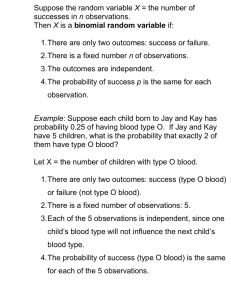


Empirical Evaluation (Ch 5)
• how accurate is a hypothesis/model/dec. tree?
• given 2 hypotheses, which is better?
• accuracy on training set is biased (optimistic)
  – error: $error_{train}(h) = \#misclassifications / |S_{train}|$
  – typically $error_D(h) \ge error_{train}(h)$
• could set aside a random subset of data for testing
  – sample error for any finite sample S drawn randomly from D is unbiased, but not necessarily the same as the true error
  – $error_S(h) \ne error_D(h)$ in general
• what we want is an estimate of the “true” accuracy over distribution D

Confidence Intervals
• put a bound on $error_D(h)$ based on the Binomial distribution
  – suppose sample error rate is $error_S(h) = p$
  – then the 95% CI for $error_D(h)$ is $p \pm 1.96\sqrt{p(1-p)/n}$
  – $E[error_D(h)] = error_S(h) = p$
  – $E[var(error_D(h))] = p(1-p)/n$
  – standard deviation $\sigma = \sqrt{var}$; $var = \sigma^2$
  – the factor 1.96 in $1.96\sigma$ comes from the confidence level (95%)

Binomial Distribution
• put a bound on $error_D(h)$ based on the Binomial distribution
  – suppose true error rate is $error_D(h) = p$
  – on a sample of size n, would expect $np$ errors on average, but the count could vary around that due to sampling
  – as a proportion, the error rate has mean $p$ and standard deviation $\sqrt{p(1-p)/n}$

Hypothesis Testing
• is $error_D(h) < 0.2$? (is the error rate of h less than 20%?)
  – example: is h better than the majority classifier? (suppose $error_{maj}(h) = 20\%$)
• if we approximate the Binomial as Normal, then $\mu \pm 2\sigma$ should bound 95% of the likely range for $error_D(h)$
• two-tailed test:
  – risk of true error being higher or lower is 5%
  – $Pr[\text{Type I error}] \le 0.05$
• restrictions: $n \ge 30$ or $np(1-p) \ge 5$

Gaussian Distribution
• $\pm 1.28\sigma$ covers 80% of the distribution
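The 95% interval from the Confidence Intervals slide can be sketched in a few lines of Python. This is a minimal illustration under the Normal approximation to the Binomial; the function name `error_confidence_interval` is made up for this example, and the inputs (10 errors on 100 test examples) are one of the cases worked in these slides.

```python
import math

def error_confidence_interval(errors, n, z=1.96):
    """Normal-approximation CI for the true error rate error_D(h).

    errors: misclassifications on the test sample
    n:      test sample size (the approximation assumes n >= 30 or so)
    z:      z-score for the confidence level (1.96 for a two-sided 95% CI)
    """
    p = errors / n                       # sample error rate error_S(h)
    sigma = math.sqrt(p * (1 - p) / n)   # std. dev. of the error-rate estimate
    return p - z * sigma, p + z * sigma

lo, hi = error_confidence_interval(errors=10, n=100)
print(f"95% CI for error_D(h): [{lo:.3f}, {hi:.3f}]")
```

Because $\sigma$ shrinks as $1/\sqrt{n}$, calling the same function with a larger test set at the same error rate gives a much narrower interval.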
• z-score: relative distance of a value x from the mean, $z = (x - \mu)/\sigma$
• for a one-tailed test, use the z value of the two-tailed interval that leaves the same area in each tail (a one-tailed 95% test uses the two-tailed 90% value, $z = 1.64$)
• for example:
  – suppose $error_S(h) = 0.19$ and $\sigma = 0.02$
  – suppose you want 95% confidence that $error_D(h) < 20\%$; then test whether $0.2 - error_S(h) > 1.64\sigma$
  – 1.64 is the z-score for a two-sided 90% interval
  – here $0.2 - 0.19 = 0.01 < 1.64 \times 0.02 \approx 0.033$, so the claim is not supported at 95% confidence
• notice that the confidence interval on the error rate tightens with larger sample sizes
  – example: compare 2 trials that have 10% error
  – test set A has 100 examples, h makes 10 errors:
    • $p = 10/100 = 0.1$, $\sigma = \sqrt{0.1 \times 0.9/100} = 0.03$
    • $CI_{95\%}(err(h)) = 10\% \pm 6\% = [4\%, 16\%]$
  – test set B has 100,000 examples, 10,000 errors:
    • $p = 10{,}000/100{,}000 = 0.1$, $\sigma = \sqrt{0.1 \times 0.9/100{,}000} = 0.00095$
    • $CI_{95\%}(err(h)) = 10\% \pm 0.19\% = [9.8\%, 10.2\%]$
• Comparing 2 hypotheses (decision trees)
  – test whether 0 is in the confidence interval of the difference
  – add variances
  – example...

Estimating the accuracy of a Learning Algorithm
• $error_S(h)$ is the error rate of a particular hypothesis, which depends on the training data
• what we want is an estimate of the error on any training set drawn from the distribution
• we could repeat the splitting of data into independent training/testing sets, build and test k decision trees, and take the average

k-fold Cross-Validation
• typically k=10
• note that this is a biased estimator: it probably under-estimates true accuracy because each tree is built from fewer examples
• this is a disadvantage of CV: building d-trees with only 90% of the data
• (and it takes 10 times as long)

k-fold Cross-Validation
partition the dataset D into k subsets of equal size (30), $T_1 .. T_k$
for i from 1 to k do:
  $S_i = D - T_i$ // training set, 90% of D
  $h_i = L(S_i)$ // build decision tree
  $e_i = error(h_i, T_i)$ // test d-tree on 10% held out
$\mu = \frac{1}{k}\sum_i e_i$
$\sigma = \sqrt{\frac{1}{k}\sum_i (e_i - \mu)^2}$
$SE = \sigma/\sqrt{k} \approx \sqrt{\frac{1}{k(k-1)}\sum_i (e_i - \mu)^2}$ (the second form uses the unbiased variance estimate, dividing by $k-1$)
$CI_{95} = \mu \pm t_{dof,\alpha}\, SE$ ($t_{dof,\alpha} \approx 2.26$ for $k = 10$, i.e. $dof = 9$, at 95% confidence; 2.23 is the value for $dof = 10$)

• what to do with 10 accuracies from CV?
  – accuracy of the algorithm is just the mean, $\frac{1}{k}\sum_i acc_i$
  – for the CI, use the “standard error” (SE):
    • $\sigma = \sqrt{\frac{1}{k}\sum_i (e_i - \mu)^2}$
    • $SE = \sigma/\sqrt{k} \approx \sqrt{\frac{1}{k(k-1)}\sum_i (e_i - \mu)^2}$
    • SE is the standard deviation of the estimate of the mean
  – 95% CI $= \mu \pm t_{dof,\alpha}\sqrt{\frac{1}{k(k-1)}\sum_i (e_i - \mu)^2}$
• Central Limit Theorem
  – we are estimating a “statistic” (parameter of a distribution, e.g. the mean) from multiple trials
  – regardless of the underlying distribution, the estimate of the mean approaches a Normal distribution
  – if the std. dev. of the underlying distribution is $\sigma$, then the std. dev. of the mean of n samples is $\sigma/\sqrt{n}$
• example: multiple trials of testing the accuracy of a learner, assuming true acc = 70% and $\sigma$ = 7%
  – there is intrinsic variability in accuracy between different trials
  – with more trials, the distribution converges to the underlying one (std. dev. stays around 7)
  – but the estimate of the mean (vertical bars, $\mu \pm 2\sigma/\sqrt{n}$) gets tighter
  – (figure panels, with increasing number of trials: est. of true mean = 71.0 ± 2.5; 70.5 ± 0.6; 69.98 ± 0.03)
• Student’s T distribution is similar to the Normal distr., but adjusted for small sample size; dof = k-1
  – example: $t_{9,0.05} \approx 2.26$ (cf. Table 5.6); 2.23 is the value for 10 degrees of freedom
• Comparing 2 Learning Algorithms
  – e.g. ID3 with 2 different pruning methods
• approach 1:
  – run each algorithm 10 times (using CV) independently to get a CI for the acc of each alg
    • acc(A), SE(A)
    • acc(B), SE(B)
  – T-test: statistical test of whether the difference of the means ≠ 0
    • d = acc(B) - acc(A)
  – problem: the variance is additive (unpooled)
  – example: suppose mean acc for A is 61% ± 2 and mean acc for B is 64% ± 2; then d = acc(B) - acc(A) has mean = 3%, SE = 3.7 (just a guess)
  – (figure: distribution of d = acc(B) - acc(A))
• approach 2: Paired T-test
  – run the algorithms in parallel on the same divisions of tests

| $acc(L_A, T_i)$ | $acc(L_B, T_i)$ | d = B - A |
|---|---|---|
| 58 | 59 | +2 |
| 62 | 63 | +1 |
| 59 | 63 | +4 |
| 60 | 63 | +3 |
| 62 | 64 | +2 |
| 59 | 62 | +3 |
| mean 60% | mean 63% | mean +3%, SE = 1% |

  – mean diff is the same, but B is systematically higher than A
  – test whether 0 is in the CI of the differences: $\bar{d} \pm t_{k-1,\alpha}\sqrt{\frac{1}{k(k-1)}\sum_i (d_i - \bar{d})^2}$
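The paired test can be sketched in Python as a confidence interval on the per-fold differences. This is an illustration only: the helper name `mean_confidence_interval` is invented for this example, the differences are the d = B - A column as listed in these slides (+2, +1, +4, +3, +2, +3), and the critical value 2.571 (two-sided 95%, 5 degrees of freedom) is hardcoded from a standard t table rather than computed. The slide's summary figures (+3%, SE = 1%) look like round numbers for illustration, so the values computed here need not match them.

```python
import math

def mean_confidence_interval(values, t_crit):
    """Mean, standard error, and CI of the mean for k paired trials.

    t_crit is the two-sided Student's t critical value for k-1 degrees
    of freedom (an input here; look it up in a t table).
    """
    k = len(values)
    mean = sum(values) / k
    # Standard error of the mean: sqrt( 1/(k(k-1)) * sum (v - mean)^2 )
    se = math.sqrt(sum((v - mean) ** 2 for v in values) / (k * (k - 1)))
    return mean, se, (mean - t_crit * se, mean + t_crit * se)

# Per-fold differences d_i = acc(L_B, T_i) - acc(L_A, T_i), in percentage points
d = [2, 1, 4, 3, 2, 3]
T_CRIT_5DOF_95 = 2.571  # two-sided 95% critical value for k - 1 = 5 dof

mean_d, se_d, (lo, hi) = mean_confidence_interval(d, T_CRIT_5DOF_95)
significant = not (lo <= 0 <= hi)  # B differs from A if 0 is outside the CI
print(f"mean d = {mean_d:.2f}, SE = {se_d:.2f}, 95% CI = [{lo:.2f}, {hi:.2f}]")
print("difference is significant" if significant else "cannot reject d = 0")
```

The same helper applies to the k error rates from cross-validation: pass the $e_i$ values and the critical value for k-1 degrees of freedom to get the CI on the algorithm's error.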