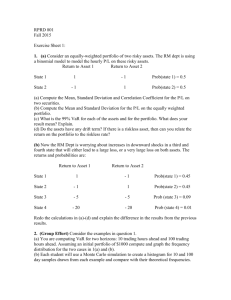

MCE3


Standard Statistical Distributions

Most elementary statistics books provide a survey of commonly used statistical distributions. The reasons we study these distributions are that:

- they provide a comprehensive range of distributions for modelling practical applications;
- their mathematical properties are known;
- they are described in terms of a few parameters, which have natural interpretations.


1. Bernoulli Distribution.

This is used to model a trial that gives rise to two outcomes: success/failure, male/female, 0/1. Let p be the probability that the outcome is one and q = 1 - p the probability that the outcome is zero:

$$\text{Prob}\{X = 1\} = p, \qquad \text{Prob}\{X = 0\} = q = 1 - p.$$

$$E[X] = p(1) + (1 - p)(0) = p$$

$$\mathrm{VAR}[X] = p(1)^2 + (1 - p)(0)^2 - E[X]^2 = p(1 - p).$$
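As a quick numerical check of these two formulas, the Python sketch below computes the mean and variance of a Bernoulli variable directly from its probability function. The helper names `bernoulli_pmf` and `moments` are illustrative choices, not part of any library.

```python
def bernoulli_pmf(k: int, p: float) -> float:
    """Prob{X = k} for a Bernoulli variable with success probability p."""
    if k == 1:
        return p
    if k == 0:
        return 1.0 - p
    return 0.0


def moments(pmf, support, **params):
    """Return (E[X], VAR[X]) of a discrete distribution by summing over its support."""
    mean = sum(k * pmf(k, **params) for k in support)
    second_moment = sum(k * k * pmf(k, **params) for k in support)
    return mean, second_moment - mean ** 2


p = 0.3
mean, var = moments(bernoulli_pmf, [0, 1], p=p)
print(mean, var)  # compare with p and p * (1 - p)
```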

2. Binomial Distribution.

Suppose that we are interested in the number of successes X in n independent repetitions of a Bernoulli trial, where the probability of success in an individual trial is p. Then

$$\text{Prob}\{X = k\} = \binom{n}{k} p^k (1 - p)^{n-k}, \qquad k = 0, 1, \ldots, n,$$

$$E[X] = np, \qquad \mathrm{VAR}[X] = np(1 - p).$$

This is the appropriate distribution to use in modelling the number of boys in a family of n = 4 children, the number of defective components in a batch of n = 10 components, and so on.

[Figure: binomial probabilities Prob{X = k} for n = 4, p = 0.2]
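The binomial probabilities and the formulas E[X] = np and VAR[X] = np(1 - p) can be checked by direct summation. This sketch uses Python's `math.comb` for nCk and takes n = 4, p = 0.2 as illustrative values.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Prob{X = k} = nCk p^k (1 - p)^(n - k)."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


n, p = 4, 0.2
probs = [binomial_pmf(k, n, p) for k in range(n + 1)]
mean = sum(k * q for k, q in enumerate(probs))
var = sum(k * k * q for k, q in enumerate(probs)) - mean ** 2

for k, q in enumerate(probs):
    print(k, round(q, 4))
print("mean", mean, "np =", n * p)
print("variance", var, "np(1 - p) =", n * p * (1 - p))
```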

3. Poisson Distribution.

The Poisson distribution arises as a limiting case of the binomial distribution, where $n \to \infty$ and $p \to 0$ in such a way that $np \to \lambda$ (a constant). Its density is

$$\text{Prob}\{X = k\} = \frac{\exp(-\lambda)\,\lambda^k}{k!}, \qquad k = 0, 1, 2, \ldots$$

Note that exp(x) stands for e to the power of x, where e is approximately 2.71828. Then

$$E[X] = \lambda, \qquad \mathrm{VAR}[X] = \lambda.$$

The Poisson distribution is used to model the number of occurrences of a certain phenomenon in a fixed period of time or space, as in the number of:

- particles emitted by a radioactive source in a fixed direction and period of time;
- telephone calls received at a switchboard during a given period;
- defects in a fixed length of cloth or paper;
- people arriving in a queue in a fixed interval of time;
- accidents that occur on a fixed stretch of road in a specified time interval.
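The limiting argument at the start of this section can be seen numerically. If np = λ is held fixed while n grows, the binomial probabilities approach the Poisson probabilities. The Python sketch below uses λ = 2, an arbitrary illustrative value. It also truncates the infinite Poisson sums at k = 60 when checking the mean and variance; the neglected tail is negligible for this λ.

```python
from math import comb, exp, factorial


def poisson_pmf(k: int, lam: float) -> float:
    """Prob{X = k} = exp(-lam) lam^k / k!."""
    return exp(-lam) * lam ** k / factorial(k)


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Prob{X = k} = nCk p^k (1 - p)^(n - k)."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


lam = 2.0
gaps = {}
for n in (10, 100, 10_000):
    p = lam / n  # keep n * p equal to lam as n grows
    gaps[n] = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(11))
    print(n, gaps[n])  # largest discrepancy over k = 0, ..., 10

# Mean and variance of the Poisson distribution, truncating the sums at k = 60.
mean = sum(k * poisson_pmf(k, lam) for k in range(60))
var = sum(k * k * poisson_pmf(k, lam) for k in range(60)) - mean ** 2
print(mean, var)  # both should be close to lam
```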


4. Geometric Distribution.

This arises as the “time”, or number of trials k, up to and including the first success in a series of independent Bernoulli trials. The density is

$$\text{Prob}\{X = k\} = p(1 - p)^{k-1}, \qquad k = 1, 2, \ldots$$

$$E[X] = \frac{1}{p}, \qquad \mathrm{VAR}[X] = \frac{1 - p}{p^2}.$$
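The geometric distribution can be simulated directly from its description: repeat Bernoulli trials until the first success and count the trials. The Python sketch below compares the sample mean and variance with 1/p and (1 - p)/p^2, and with a truncated sum over the density. The function names, p = 0.25 and the seed are arbitrary illustrative choices.

```python
import random


def geometric_pmf(k: int, p: float) -> float:
    """Prob{X = k} = p (1 - p)^(k - 1) for k = 1, 2, ..."""
    return p * (1 - p) ** (k - 1)


def trials_to_first_success(p: float, rng: random.Random) -> int:
    """Perform Bernoulli(p) trials until the first success; return how many were needed."""
    k = 1
    while rng.random() >= p:  # rng.random() < p counts as a success
        k += 1
    return k


p = 0.25
rng = random.Random(42)
samples = [trials_to_first_success(p, rng) for _ in range(100_000)]
sample_mean = sum(samples) / len(samples)
sample_var = sum((x - sample_mean) ** 2 for x in samples) / (len(samples) - 1)

# Truncating the infinite sum at k = 499 leaves a negligible tail for p = 0.25.
exact_mean = sum(k * geometric_pmf(k, p) for k in range(1, 500))
print(sample_mean, exact_mean, 1 / p)
print(sample_var, (1 - p) / p ** 2)
```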

5. Negative Binomial Distribution

This is used to model the number of failures k that occur before the rth success in a series of independent Bernoulli trials. The density is

$$\text{Prob}\{X = k\} = \binom{r + k - 1}{k} p^r (1 - p)^k, \qquad k = 0, 1, 2, \ldots$$

Note that

$$E[X] = \frac{r(1 - p)}{p}, \qquad \mathrm{VAR}[X] = \frac{r(1 - p)}{p^2}.$$
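The mean and variance formulas can be checked by summing over the density. The support is infinite, so the sketch below truncates the sum at k = 400. That cut-off is an assumption, but it is harmless for the illustrative values r = 3, p = 0.4 because the neglected tail is vanishingly small.

```python
from math import comb


def neg_binomial_pmf(k: int, r: int, p: float) -> float:
    """Prob{X = k} = (r+k-1)Ck p^r (1 - p)^k: k failures before the r-th success."""
    return comb(r + k - 1, k) * p ** r * (1 - p) ** k


r, p = 3, 0.4
ks = range(0, 400)  # truncated support
mean = sum(k * neg_binomial_pmf(k, r, p) for k in ks)
var = sum(k * k * neg_binomial_pmf(k, r, p) for k in ks) - mean ** 2
print(mean, r * (1 - p) / p)      # both close to 4.5
print(var, r * (1 - p) / p ** 2)  # both close to 11.25
```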

6. Hypergeometric Distribution

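Consider a population of M items, of which W are deemed to be successes. Let X be the number of successes that occur in a sample of size n, drawn without replacement from the population. The density is

$$\text{Prob}\{X = k\} = \frac{\binom{W}{k}\binom{M - W}{n - k}}{\binom{M}{n}}, \qquad k = 0, 1, \ldots, n,$$

with the convention that $\binom{a}{j} = 0$ when $j > a$, so that only values with $k \le W$ and $n - k \le M - W$ have non-zero probability. Then

$$E[X] = \frac{nW}{M}, \qquad \mathrm{VAR}[X] = \frac{nW(M - W)(M - n)}{M^2(M - 1)}.$$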
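Both moment formulas can be checked by summation. Sampling without replacement can also be imitated with `random.Random.sample`. The Python sketch below uses the illustrative values M = 50, W = 12, n = 10 and an arbitrary seed. Python's `math.comb` returns 0 when the lower argument exceeds the upper, which matches the convention above.

```python
import random
from math import comb


def hypergeom_pmf(k: int, M: int, W: int, n: int) -> float:
    """Prob{X = k} = WCk (M-W)C(n-k) / MCn; math.comb gives 0 when k > W or n - k > M - W."""
    return comb(W, k) * comb(M - W, n - k) / comb(M, n)


M, W, n = 50, 12, 10
ks = range(n + 1)  # keeps n - k non-negative, as math.comb requires
mean = sum(k * hypergeom_pmf(k, M, W, n) for k in ks)
var = sum(k * k * hypergeom_pmf(k, M, W, n) for k in ks) - mean ** 2
print(mean, n * W / M)
print(var, n * W * (M - W) * (M - n) / (M ** 2 * (M - 1)))

# Simulate drawing n items without replacement from W successes (1s) and M - W failures (0s).
rng = random.Random(1)
population = [1] * W + [0] * (M - W)
draws = [sum(rng.sample(population, n)) for _ in range(50_000)]
print(sum(draws) / len(draws))  # close to n W / M
```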

7. Uniform Distribution

A random variable X has a uniform distribution on the interval [a, b] if X has density

$$f(x) = \begin{cases} \dfrac{1}{b - a}, & a < x < b, \\ 0, & \text{otherwise.} \end{cases}$$

Then

$$E[X] = \frac{a + b}{2}, \qquad \mathrm{VAR}[X] = \frac{(b - a)^2}{12}.$$

Uniformly distributed random numbers occur frequently in simulation models. However, computer-based algorithms, such as linear congruential generators, can only approximate this distribution, so great care is needed in interpreting the output of simulation models.
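A linear congruential generator produces integers by the recurrence x_{i+1} = (multiplier · x_i + increment) mod modulus. It divides each one by the modulus to get a number in [0, 1). The Python sketch below assumes multiplier = 1664525, increment = 1013904223 and modulus = 2^32, a common textbook choice used here only for illustration. It compares the sample mean and variance with (a + b)/2 = 1/2 and (b - a)^2/12 = 1/12 for a = 0, b = 1. A deliberately tiny generator with modulus 16 then shows one reason for care: it can produce at most 16 distinct values before the sequence repeats.

```python
from itertools import islice


def lcg(seed: int, multiplier: int = 1664525, increment: int = 1013904223,
        modulus: int = 2 ** 32):
    """Yield pseudo-random numbers in [0, 1) from a linear congruential generator."""
    x = seed % modulus
    while True:
        x = (multiplier * x + increment) % modulus
        yield x / modulus


values = list(islice(lcg(seed=12345), 100_000))
mean = sum(values) / len(values)
var = sum((u - mean) ** 2 for u in values) / len(values)
print(mean, 0.5)    # (a + b) / 2 with a = 0, b = 1
print(var, 1 / 12)  # (b - a)^2 / 12

# A toy generator with modulus 16 cycles through at most 16 values.
toy = list(islice(lcg(seed=1, multiplier=5, increment=3, modulus=16), 100))
print(sorted(set(toy)))
```

Practical simulation work would normally rely on a well-tested generator instead of a hand-rolled one. Python's own `random` module, for instance, uses the Mersenne Twister.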

If X is a continuous random variable with frequency function f(x) and cumulative distribution function F(x), then the probability that X takes a value in the range [a, b] is the area under f(x) between these points:

$$\text{Prob}\{a < X < b\} = F(b) - F(a) = \int_a^b f(x)\,dx.$$

In practical work, these integrals are evaluated by looking up entries in statistical tables.
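When tables are not to hand, the integral can be approximated numerically. As an illustration, the Python sketch below applies Simpson's rule to the standard normal density described in the next section. Simpson's rule is a standard quadrature method, not one prescribed by the text. The result is compared with F(b) - F(a) computed from the error function `math.erf`.

```python
import math


def simpson(f, a: float, b: float, n: int = 1000) -> float:
    """Approximate the integral of f over [a, b] with Simpson's rule on n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def normal_density(x: float) -> float:
    """Standard normal frequency function (mean 0, standard deviation 1)."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


def normal_cdf(x: float) -> float:
    """F(x) for the standard normal, expressed with the error function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


a, b = -1.0, 1.0
area = simpson(normal_density, a, b)
print(area, normal_cdf(b) - normal_cdf(a))  # both about 0.6827
```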

9. Gaussian or Normal Distribution

A random variable X has a normal distribution with mean $\mu$ and standard deviation $\sigma$ if it has density

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{-\frac{(x - \mu)^2}{2\sigma^2}\right\}, \qquad -\infty < x < \infty.$$

Then

$$E[X] = \mu, \qquad \mathrm{VAR}[X] = \sigma^2.$$

As described below, the normal distribution arises naturally as the limiting distribution of the suitably standardised average of a set of independent, identically distributed random variables with finite variances. It plays a central role in sampling theory and is a good approximation to a large class of empirical distributions. For this reason, a default assumption in many empirical studies is that the distribution of each observation is approximately normal. Therefore, statistical tables of the normal distribution are of great importance in analysing practical data sets. X is said to be a standardised normal variable if $\mu = 0$ and $\sigma = 1$.

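A table of the normal distribution can be generated from the error function, since Φ(z) = ½[1 + erf(z/√2)] for a standardised normal variable. The Python sketch below prints a few table entries. It then illustrates the limiting behaviour described above by standardising averages of n = 12 uniform random numbers. The sample size, number of repetitions and seed are arbitrary choices.

```python
import math
import random
import statistics


def phi(z: float) -> float:
    """Standard normal cumulative distribution function, via math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# A miniature table of the standard normal distribution function.
for z in (0.0, 0.5, 1.0, 1.5, 1.96, 2.5, 3.0):
    print(f"z = {z:4.2f}   Phi(z) = {phi(z):.4f}")

# Averages of n independent Uniform(0, 1) variables, standardised with the
# uniform mean 1/2 and variance 1/12, should look approximately standard normal.
rng = random.Random(7)
n, reps = 12, 50_000
mu, sigma = 0.5, math.sqrt(1 / 12)
zs = []
for _ in range(reps):
    average = sum(rng.random() for _ in range(n)) / n
    zs.append((average - mu) / (sigma / math.sqrt(n)))

frac_below_1 = sum(z < 1.0 for z in zs) / reps
print(statistics.fmean(zs), statistics.stdev(zs))  # close to 0 and 1
print(frac_below_1, phi(1.0))                      # close to each other
```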

10. Gamma Distribution

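The Gamma distribution arises in queueing theory as the time to the arrival of the nth customer in a single-server queue, where the average arrival rate is $\lambda$. The frequency function is

$$f(x) = \begin{cases} \dfrac{\lambda (\lambda x)^{n-1} \exp(-\lambda x)}{(n - 1)!}, & x \ge 0,\ \lambda > 0,\ n = 1, 2, \ldots \\ 0, & \text{otherwise.} \end{cases}$$

Then

$$E[X] = \frac{n}{\lambda}, \qquad \mathrm{VAR}[X] = \frac{n}{\lambda^2}.$$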
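The queueing interpretation suggests a simulation check. Suppose, as is standard for a Poisson arrival stream, that inter-arrival times are independent exponential variables with rate λ; this assumption is not spelled out above. The time to the nth arrival is then their sum. The Python sketch compares its sample mean and variance with n/λ and n/λ², using `random.Random.expovariate` and the illustrative values n = 5, λ = 2.

```python
import math
import random


def gamma_pdf(x: float, n: int, lam: float) -> float:
    """Gamma density lam (lam x)^(n - 1) exp(-lam x) / (n - 1)! for x >= 0, else 0."""
    if x < 0:
        return 0.0
    return lam * (lam * x) ** (n - 1) * math.exp(-lam * x) / math.factorial(n - 1)


# Assumption: inter-arrival times are independent exponential variables with rate lam,
# so the arrival time of the n-th customer is the sum of n of them.
rng = random.Random(3)
n, lam, reps = 5, 2.0, 100_000
times = [sum(rng.expovariate(lam) for _ in range(n)) for _ in range(reps)]
mean = sum(times) / reps
var = sum((t - mean) ** 2 for t in times) / (reps - 1)
print(mean, n / lam)       # sample mean vs n / lam
print(var, n / lam ** 2)   # sample variance vs n / lam^2
```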

11. Exponential Distribution

This is a special case of the Gamma distribution with n = 1, and so is used to model the interarrival time of customers, or the time to the arrival of the first customer, in a simple queue. The frequency function is

$$f(x) = \begin{cases} \lambda \exp(-\lambda x), & x \ge 0,\ \lambda > 0, \\ 0, & \text{otherwise.} \end{cases}$$
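Setting n = 1 in the Gamma frequency function gives λ(λx)^0 exp(-λx)/0! = λ exp(-λx), which is the exponential density. The sketch below checks this pointwise. As an illustration, it also compares the mean of simulated interarrival times with the Gamma mean n/λ = 1/λ. The simulation uses `random.Random.expovariate` with the arbitrary rate λ = 1.5.

```python
import math
import random


def gamma_pdf(x: float, n: int, lam: float) -> float:
    """Gamma density lam (lam x)^(n - 1) exp(-lam x) / (n - 1)! for x >= 0, else 0."""
    if x < 0:
        return 0.0
    return lam * (lam * x) ** (n - 1) * math.exp(-lam * x) / math.factorial(n - 1)


def exponential_pdf(x: float, lam: float) -> float:
    """Exponential density lam exp(-lam x) for x >= 0, else 0."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0


lam = 1.5
for x in (0.0, 0.5, 1.0, 2.0, 5.0):
    print(x, gamma_pdf(x, 1, lam), exponential_pdf(x, lam))  # the two columns agree

# Mean interarrival time: the Gamma mean n / lam with n = 1.
rng = random.Random(9)
sample = [rng.expovariate(lam) for _ in range(100_000)]
print(sum(sample) / len(sample), 1 / lam)
```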

12. Chi-Square Distribution

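A random variable X has a chi-square distribution with n degrees of freedom (where n is a positive integer) if it has a Gamma distribution with $\lambda = 1/2$ and with n replaced by n/2, so its frequency function is

$$f(x) = \begin{cases} \dfrac{x^{n/2 - 1} \exp(-x/2)}{2^{n/2}\,\Gamma(n/2)}, & x > 0, \\ 0, & \text{otherwise.} \end{cases}$$

Here the factorial $(n/2 - 1)!$ of the Gamma density is written as $\Gamma(n/2)$, using the gamma function introduced with the Beta distribution below, because n/2 need not be an integer. This density is denoted $\chi^2_n(x)$.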

The chi-square distribution arises in two important applications:

- If $X_1, X_2, \ldots, X_n$ is a sequence of independently distributed standardised normal random variables, then the sum of squares $X_1^2 + X_2^2 + \cdots + X_n^2$ has a chi-square distribution with n degrees of freedom.
- If $x_1, x_2, \ldots, x_n$ is a random sample from a normal distribution with mean $\mu$ and variance $\sigma^2$, and we let $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$ and $S^2 = \frac{1}{\sigma^2}\sum_{i=1}^{n} (x_i - \bar{x})^2$, then $S^2$ has a chi-square distribution with n - 1 degrees of freedom, and the random variables $S^2$ and $\bar{x}$ are independent.
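Both results can be checked by simulation. The Python sketch below uses illustrative values n = 4, μ = 10, σ = 3 and an arbitrary seed. It relies on the standard fact, not stated above, that a chi-square variable with k degrees of freedom has mean k. The two sample means should therefore be close to n and n - 1 respectively. The sketch also codes the chi-square frequency function x^{n/2-1} exp(-x/2) / (2^{n/2} Γ(n/2)) for x > 0 using `math.gamma`.

```python
import math
import random


def chi2_pdf(x: float, n: int) -> float:
    """Chi-square density with n degrees of freedom: Gamma with lam = 1/2 and shape n/2."""
    if x <= 0:
        return 0.0
    return x ** (n / 2 - 1) * math.exp(-x / 2) / (2 ** (n / 2) * math.gamma(n / 2))


rng = random.Random(11)
n, reps = 4, 100_000

# First result: the sum of squares of n independent standard normal variables.
sums = [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n)) for _ in range(reps)]
mean_sums = sum(sums) / reps
print(mean_sums, n)  # chi-square with n degrees of freedom has mean n


# Second result: S^2 = sum (x_i - xbar)^2 / sigma^2 for a normal sample of size n.
def s_squared(mu: float, sigma: float) -> float:
    xs = [rng.gauss(mu, sigma) for _ in range(n)]
    xbar = sum(xs) / n
    return sum((x - xbar) ** 2 for x in xs) / sigma ** 2


s2 = [s_squared(mu=10.0, sigma=3.0) for _ in range(reps)]
mean_s2 = sum(s2) / reps
print(mean_s2, n - 1)  # chi-square with n - 1 degrees of freedom has mean n - 1
```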

13. Beta Distribution.

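A random variable X has a Beta distribution with parameters a > 0 and b > 0 if it has frequency function

$$f(x) = \begin{cases} \dfrac{\Gamma(a + b)}{\Gamma(a)\,\Gamma(b)}\, x^{a-1} (1 - x)^{b-1}, & 0 < x < 1, \\ 0, & \text{otherwise.} \end{cases}$$

Then

$$E[X] = \frac{a}{a + b}, \qquad \mathrm{VAR}[X] = \frac{ab}{(a + b)^2 (a + b + 1)}.$$

Here $\Gamma$ denotes the gamma function. If n is a positive integer,

$$\Gamma(n) = (n - 1)!, \qquad \Gamma\left(n + \tfrac{1}{2}\right) = \left(n - \tfrac{1}{2}\right)\left(n - \tfrac{3}{2}\right) \cdots \tfrac{1}{2}\,\Gamma\left(\tfrac{1}{2}\right),$$

with $\Gamma(1) = 1$ and $\Gamma(1/2) = \sqrt{\pi}$.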
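The Beta moments and the gamma-function identities can be verified numerically with `math.gamma` from Python's standard library. The sketch below integrates the Beta density with the midpoint rule, a method chosen only for simplicity, for the illustrative parameters a = 2, b = 5.

```python
import math


def beta_pdf(x: float, a: float, b: float) -> float:
    """Beta density Gamma(a + b) x^(a - 1) (1 - x)^(b - 1) / (Gamma(a) Gamma(b)) on (0, 1)."""
    if not 0.0 < x < 1.0:
        return 0.0
    norm = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return norm * x ** (a - 1) * (1 - x) ** (b - 1)


a, b = 2.0, 5.0
N = 100_000
xs = [(i + 0.5) / N for i in range(N)]  # midpoints of N equal subintervals of (0, 1)
dens = [beta_pdf(x, a, b) for x in xs]
total = sum(dens) / N
mean = sum(x * d for x, d in zip(xs, dens)) / N
var = sum(x * x * d for x, d in zip(xs, dens)) / N - mean ** 2
print(total)                                     # close to 1
print(mean, a / (a + b))
print(var, a * b / ((a + b) ** 2 * (a + b + 1)))

# Gamma-function identities.
print(math.gamma(5), math.factorial(4))                       # Gamma(n) = (n - 1)!
print(math.gamma(0.5), math.sqrt(math.pi))                    # Gamma(1/2) = sqrt(pi)
print(math.gamma(3.5), 2.5 * 1.5 * 0.5 * math.sqrt(math.pi))  # Gamma(n + 1/2) with n = 3
```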

14. Student’s t Distribution

A random variable X has a t distribution with n degrees of freedom ($t_n$) if it has density

$$f(x) = \frac{\Gamma\left(\frac{n+1}{2}\right)}{\sqrt{n\pi}\,\Gamma\left(\frac{n}{2}\right)} \left(1 + \frac{x^2}{n}\right)^{-(n+1)/2}, \qquad -\infty < x < \infty.$$

The t distribution is symmetrical about the origin, with

$$E[X] = 0 \ \ (n > 1), \qquad \mathrm{VAR}[X] = \frac{n}{n - 2} \ \ (n > 2).$$

For small values of n, the $t_n$ distribution is very flat, with heavy tails. As n is increased, the density assumes a bell shape. For values of $n \ge 25$, the $t_n$ distribution is practically indistinguishable from the standard normal curve.

- If X and Y are independent random variables, X has a standard normal distribution and Y has a $\chi^2_n$ distribution, then $X / \sqrt{Y/n}$ has a $t_n$ distribution.
- If $x_1, x_2, \ldots, x_n$ is a random sample from a normal distribution with mean $\mu$ and variance $\sigma^2$, and if we define $s^2 = \frac{1}{n - 1}\sum_{i=1}^{n} (x_i - \bar{x})^2$, then $(\bar{x} - \mu)/(s/\sqrt{n})$ has a $t_{n-1}$ distribution.
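The Python sketch below codes the $t_n$ density with `math.lgamma`, which avoids overflow of the gamma function for large n. It shows the peak height at x = 0 approaching the normal value as n grows. It then simulates the studentised mean (x̄ - μ)/(s/√n) for normal samples of size 6, which should follow $t_5$. The value 2.571 is the two-sided 5% point of $t_5$ from standard tables, so about 5% of the simulated values should exceed it in absolute value, compared with roughly 1% for a standard normal variable. The sample size, μ, σ and seed are arbitrary.

```python
import math
import random


def t_pdf(x: float, n: int) -> float:
    """Student's t density with n degrees of freedom (lgamma avoids overflow for large n)."""
    log_c = math.lgamma((n + 1) / 2) - math.lgamma(n / 2) - 0.5 * math.log(n * math.pi)
    return math.exp(log_c) * (1 + x * x / n) ** (-(n + 1) / 2)


def normal_pdf(x: float) -> float:
    """Standard normal density."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


# Height of the density at x = 0 approaches the normal value as n grows.
for n in (1, 5, 25, 100):
    print(n, round(t_pdf(0.0, n), 4), round(normal_pdf(0.0), 4))

# Studentised means from normal samples of size 6, which should follow t_5.
rng = random.Random(5)
mu, sigma, size, reps = 50.0, 4.0, 6, 50_000


def studentised_mean() -> float:
    xs = [rng.gauss(mu, sigma) for _ in range(size)]
    xbar = sum(xs) / size
    s = math.sqrt(sum((x - xbar) ** 2 for x in xs) / (size - 1))
    return (xbar - mu) / (s / math.sqrt(size))


ts = [studentised_mean() for _ in range(reps)]
frac = sum(abs(t) > 2.571 for t in ts) / reps
print(frac)  # roughly 0.05
```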

15. F Distribution

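A random variable X has an F distribution with m and n degrees of freedom if it has density

$$f(x) = \begin{cases} \dfrac{\Gamma\left(\frac{m+n}{2}\right) m^{m/2}\, n^{n/2}\, x^{m/2 - 1}}{\Gamma\left(\frac{m}{2}\right) \Gamma\left(\frac{n}{2}\right) (n + mx)^{(m+n)/2}}, & x > 0, \\ 0, & \text{otherwise.} \end{cases}$$

Then

$$E[X] = \frac{n}{n - 2} \quad \text{if } n > 2, \qquad \mathrm{VAR}[X] = \frac{2n^2(m + n - 2)}{m(n - 4)(n - 2)^2} \quad \text{if } n > 4.$$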

- If X and Y are independent random variables, X has a $\chi^2_m$ and Y a $\chi^2_n$ distribution, then $\dfrac{X/m}{Y/n}$ has an $F_{m,n}$ distribution.
- One consequence of this is that the F distribution represents the distribution of the ratio of certain independent quadratic forms which can be constructed from random samples drawn from normal distributions. If $x_1, x_2, \ldots, x_m$ ($m \ge 2$) is a random sample from a normal distribution with mean $\mu_1$ and variance $\sigma_1^2$, and $y_1, y_2, \ldots, y_n$ ($n \ge 2$) is an independent random sample from a normal distribution with mean $\mu_2$ and variance $\sigma_2^2$, then

  $$\frac{\sum_{i=1}^{m} (x_i - \bar{x})^2 \big/ \{(m - 1)\sigma_1^2\}}{\sum_{i=1}^{n} (y_i - \bar{y})^2 \big/ \{(n - 1)\sigma_2^2\}}$$

  has an $F_{m-1,\,n-1}$ distribution. When $\sigma_1 = \sigma_2$, this is simply the ratio of the two sample variances.
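The Python sketch below simulates the ratio [Σ(x_i - x̄)²/((m - 1)σ₁²)] / [Σ(y_i - ȳ)²/((n - 1)σ₂²)] for two independent normal samples. It uses illustrative sizes m = 8 and n = 12 and deliberately unequal means and variances. The average of the simulated ratios is compared with the F mean n'/(n' - 2) for n' = n - 1 = 11 denominator degrees of freedom. The sketch also codes the F density on the log scale with `math.lgamma` to avoid overflow in the gamma functions. The seed and parameter values are arbitrary.

```python
import math
import random


def f_pdf(x: float, m: int, n: int) -> float:
    """F density with m and n degrees of freedom, computed on the log scale."""
    if x <= 0:
        return 0.0
    log_f = (math.lgamma((m + n) / 2) - math.lgamma(m / 2) - math.lgamma(n / 2)
             + (m / 2) * math.log(m) + (n / 2) * math.log(n)
             + (m / 2 - 1) * math.log(x) - ((m + n) / 2) * math.log(n + m * x))
    return math.exp(log_f)


def sum_sq_dev(values) -> float:
    """Sum of squared deviations from the sample mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


# Two independent normal samples with different means and variances.
rng = random.Random(21)
m, n, reps = 8, 12, 50_000
mu1, sigma1, mu2, sigma2 = 0.0, 2.0, 5.0, 0.5


def variance_ratio() -> float:
    xs = [rng.gauss(mu1, sigma1) for _ in range(m)]
    ys = [rng.gauss(mu2, sigma2) for _ in range(n)]
    num = sum_sq_dev(xs) / ((m - 1) * sigma1 ** 2)
    den = sum_sq_dev(ys) / ((n - 1) * sigma2 ** 2)
    return num / den


ratios = [variance_ratio() for _ in range(reps)]
mean_ratio = sum(ratios) / reps
print(mean_ratio, (n - 1) / (n - 3))  # mean of F with (m - 1, n - 1) d.f. is (n - 1)/(n - 3)
```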