Document

advertisement

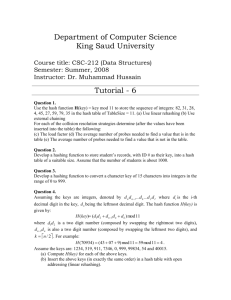

CS 3343: Analysis of Algorithms

Lecture 15: Hash tables

Hash Tables

• Motivation: symbol tables

– A compiler uses a symbol table to relate symbols to associated data

• Symbols: variable names, procedure names, etc.

• Associated data: memory location, call graph, etc.

– For a symbol table (also called a dictionary), we care about search, insertion, and deletion

– We typically don’t care about sorted order

Hash Tables

• More formally:

– Given a table T and a record x, with a key (= symbol) and associated satellite data, we need to support:

• Insert(T, x)

• Delete(T, x)

• Search(T, k)

– We want these to be fast, but don’t care about sorting the records

• The structure we will use is a hash table

– Supports all the above in O(1) expected time!

Hashing: Keys

• In the following discussion, we will consider all keys to be (possibly large) natural numbers

– When they are not, we have to interpret them as natural numbers.

• How can we convert ASCII strings to natural numbers for hashing purposes?

– Example: Interpret a character string as an integer expressed in some radix notation. Suppose the string is CLRS:

• ASCII values: C = 67, L = 76, R = 82, S = 83.

• There are 128 basic ASCII values, so use radix 128.

• So, CLRS = 67·128^3 + 76·128^2 + 82·128^1 + 83·128^0 = 141,764,947.
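The radix-128 interpretation above can be sketched in Python using Horner's rule, which avoids computing the powers of 128 explicitly. The function name `string_to_key` is hypothetical, and the sketch assumes every character is basic ASCII (code point below 128).

```python
def string_to_key(s: str, radix: int = 128) -> int:
    """Interpret s as a base-`radix` integer, most significant character first."""
    key = 0
    for ch in s:
        code = ord(ch)
        if code >= radix:
            raise ValueError(f"character {ch!r} is outside the radix-{radix} alphabet")
        # Horner's rule: shift the digits so far one place left, then add this digit
        key = key * radix + code
    return key


print(string_to_key("CLRS"))  # 141764947
```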

Direct Addressing

• Suppose:

– The range of keys is 0..m-1

– Keys are distinct

• The idea:

– Set up an array T[0..m-1] in which

• T[i] = x if x ∈ T and key[x] = i

• T[i] = NULL otherwise

– This is called a direct-address table

• Operations take O(1) time!

• So what’s the problem?
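A direct-address table can be sketched in Python as a plain list indexed by key. This is a minimal illustration assuming distinct integer keys in 0..m-1; the class names `Record` and `DirectAddressTable` are hypothetical.

```python
from typing import Any, List, Optional


class Record:
    """A record with an integer key and arbitrary satellite data."""
    def __init__(self, key: int, data: Any = None):
        self.key = key
        self.data = data


class DirectAddressTable:
    """Direct-address table T[0..m-1]; assumes keys are distinct and in 0..m-1."""
    def __init__(self, m: int):
        self.slots: List[Optional[Record]] = [None] * m  # every slot starts as NULL

    def insert(self, x: Record) -> None:
        self.slots[x.key] = x          # O(1): the key is the index

    def delete(self, x: Record) -> None:
        self.slots[x.key] = None       # O(1)

    def search(self, k: int) -> Optional[Record]:
        return self.slots[k]           # O(1); None means "not present"


table = DirectAddressTable(10)
r = Record(7, "seven")
table.insert(r)
print(table.search(7).data)  # seven
table.delete(r)
print(table.search(7))       # None
```

Note that `[None] * m` is exactly the Θ(m) initialization cost that becomes a problem when m is huge.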

The Problem With Direct Addressing

• Direct addressing works well when the range m of keys is relatively small

• But what if the keys are 32-bit integers?

– Problem 1: the direct-address table will have 2^32 entries, more than 4 billion

– Problem 2: even if memory is not an issue, the time to initialize the elements to NULL may be prohibitive

• Solution: map keys to a smaller range 0..m-1

• This mapping is called a hash function

Hash Functions

• U – Universe of all possible keys.

• Hash function h: mapping from U to the slots of a hash table T[0..m–1].

h : U → {0, 1, …, m–1}

• With direct addressing, key k maps to slot T[k].

• With hash tables, key k maps or “hashes” to slot T[h(k)].

• h(k) is the hash value of key k.

Hash Functions

[Figure: h maps the universe of keys U (with |U| >> |K| and |U| >> m) into table T[0..m–1]; the actual keys K = {k1, …, k5} land in slots h(k1), …, h(k4), and h(k2) = h(k5) is a collision.]

• Problem: collision

Resolving Collisions

• How can we solve the problem of collisions?

• Solution 1: chaining

• Solution 2: open addressing

Open Addressing

• Basic idea (details in Section 11.4):

– To insert: if the slot is full, try another slot (following a systematic and consistent strategy), …, until an open slot is found (probing)

– To search, follow the same sequence of probes as would be used when inserting the element

• If we reach an element with the correct key, return it

• If we reach an empty (NULL) slot, the element is not in the table

• Good for fixed sets (insertions but no deletions)

– Example: file names on a CD-ROM

• The table needn’t be much bigger than n
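The probing idea above can be sketched with linear probing, one possible "systematic and consistent strategy" (an assumption here; Section 11.4 discusses others). The class name `LinearProbingTable` and the choice h(k) = k mod m are hypothetical. As on the slide, the sketch supports insertion and search but no deletion.

```python
from typing import Iterator, List, Optional


class LinearProbingTable:
    """Open addressing with linear probing: probe h(k), h(k)+1, ... (mod m)."""
    def __init__(self, m: int):
        self.m = m
        self.slots: List[Optional[int]] = [None] * m

    def _probe(self, k: int) -> Iterator[int]:
        # Probe sequence for key k: start at h(k) = k mod m, then successive slots
        start = k % self.m
        for i in range(self.m):
            yield (start + i) % self.m

    def insert(self, k: int) -> int:
        for j in self._probe(k):
            if self.slots[j] is None or self.slots[j] == k:
                self.slots[j] = k
                return j
        raise OverflowError("hash table is full")

    def search(self, k: int) -> Optional[int]:
        for j in self._probe(k):
            if self.slots[j] is None:   # reached an empty slot: k is not in the table
                return None
            if self.slots[j] == k:      # found the key
                return j
        return None                     # probed every slot without finding k


t = LinearProbingTable(7)
for key in (10, 17, 24):   # all three hash to slot 3 (mod 7)
    t.insert(key)
print(t.slots)       # [None, None, None, 10, 17, 24, None]
print(t.search(24))  # 5
print(t.search(31))  # None
```

Search stops at the first empty slot precisely because nothing is ever deleted; deleting a key would break the probe chains of keys inserted after it.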

Chaining

• Chaining puts elements that hash to the

same slot in a linked list:

[Figure: keys k1, …, k8 from the universe U hash into table T; keys that hash to the same slot are stored in that slot's linked list, and empty slots hold NULL.]

Chaining

• How to insert an element?


Chaining

• How to delete an element?

– Use a doubly-linked list for efficient deletion


Chaining

• How to search for an element with a given key?


Hashing with Chaining

• Chained-Hash-Insert(T, x)

– Insert x at the head of list T[h(key[x])].

– Worst-case complexity: O(1).

• Chained-Hash-Delete(T, x)

– Delete x from the list T[h(key[x])].

– Worst-case complexity: proportional to the length of the list with singly-linked lists; O(1) with doubly-linked lists.

• Chained-Hash-Search(T, k)

– Search for an element with key k in list T[h(k)].

– Worst-case complexity: proportional to the length of the list.
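The three operations above can be sketched in Python with doubly-linked chains. This is a minimal illustration, not the textbook's code: the class names `Node` and `ChainedHashTable` are hypothetical, and the division-method hash k mod m is an assumed choice.

```python
from typing import Any, List, Optional


class Node:
    """A list element holding a key, satellite data, and prev/next links."""
    def __init__(self, key: int, data: Any = None):
        self.key = key
        self.data = data
        self.prev: Optional["Node"] = None
        self.next: Optional["Node"] = None


class ChainedHashTable:
    def __init__(self, m: int):
        self.m = m
        self.heads: List[Optional[Node]] = [None] * m  # heads[j] is the head of list T[j]

    def h(self, k: int) -> int:
        return k % self.m                              # division method, for illustration

    def insert(self, x: Node) -> None:
        """Chained-Hash-Insert: O(1), prepend x to list T[h(key[x])]."""
        j = self.h(x.key)
        x.prev = None
        x.next = self.heads[j]
        if self.heads[j] is not None:
            self.heads[j].prev = x
        self.heads[j] = x

    def delete(self, x: Node) -> None:
        """Chained-Hash-Delete: O(1) given x itself, thanks to the prev link."""
        if x.prev is not None:
            x.prev.next = x.next
        else:
            self.heads[self.h(x.key)] = x.next         # x was the head of its list
        if x.next is not None:
            x.next.prev = x.prev

    def search(self, k: int) -> Optional[Node]:
        """Chained-Hash-Search: time proportional to the length of list T[h(k)]."""
        node = self.heads[self.h(k)]
        while node is not None and node.key != k:
            node = node.next
        return node


T = ChainedHashTable(5)
a, b, c = Node(3, "a"), Node(8, "b"), Node(13, "c")  # all hash to slot 3
for x in (a, b, c):
    T.insert(x)
T.delete(b)                     # remove from the middle of the chain
print(T.search(13).data)        # c
print(T.search(8))              # None
```

Deletion takes the node x rather than a key, which is what makes O(1) possible; deleting by key would first require a search.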

Analysis of Chaining

• Assume simple uniform hashing: each key is equally likely to be hashed to any of the m slots, independently of where other keys have hashed

• Given n keys and m slots in the table, the load factor α = n/m = average number of keys per slot

• What will be the average cost of an unsuccessful search for a key?

– A: Θ(1 + α) (Theorem 11.1)

• What will be the average cost of a successful search?

– A: Θ(2 + α/2) = Θ(1 + α) (Theorem 11.2)
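An unsuccessful search scans the entire list T[h(k)], so under simple uniform hashing its expected scan length is the average chain length α. The sketch below uses Python's `random` module as a stand-in for simple uniform hashing (an assumption, not a real hash function); the function name `chain_lengths` is hypothetical. Because the chain lengths always sum to n, their mean over the m slots is exactly α.

```python
import random


def chain_lengths(n: int, m: int, seed: int = 0) -> list:
    """Throw n keys into m slots uniformly at random; return each chain's length."""
    rng = random.Random(seed)
    lengths = [0] * m
    for _ in range(n):
        lengths[rng.randrange(m)] += 1   # stand-in for h(k) under simple uniform hashing
    return lengths


n, m = 2000, 1000
lengths = chain_lengths(n, m)
alpha = n / m
avg = sum(lengths) / m                   # mean chain length = expected unsuccessful-search scan
print(alpha, avg)                        # 2.0 2.0
print(max(lengths))                      # the longest chain exceeds alpha, but stays small
```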

Analysis of Chaining Continued

• So the cost of searching is O(1 + α)

• If the number of keys n is proportional to the number of slots in the table, what is α?

• A: n = O(m) ⇒ α = n/m = O(1)

– In other words, we can make the expected cost of searching constant if we make α constant

Choosing A Hash Function

• Clearly, choosing the hash function well is crucial

– What will a worst-case hash function do?

– What will be the time to search in this case?

• What are desirable features of the hash function?

– Should distribute keys uniformly into slots

– Should not depend on patterns in the data

Hash Functions: The Division Method

• h(k) = k mod m

– In words: hash k into a table with m slots using the slot given by the remainder of k divided by m

– Example: m = 31 and k = 78, h(k) = 78 mod 31 = 16.

• Advantage: fast

• Disadvantage: the value of m is critical

– Bad if keys bear a relation to m

– Or if the hash does not depend on all bits of k

• What happens to elements with adjacent values of k?

– Elements with adjacent keys are hashed to different slots: good

• What happens if m is a power of 2 (say 2^p)?

• What if m is a power of 10?

• Pick m = a prime number not too close to a power of 2 (or 10)
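The power-of-2 question above can be checked directly. This Python sketch (the helper name `h_div` is hypothetical) reproduces the slide's example and then shows that with m = 2^4, k mod m keeps only the low 4 bits of k, so keys differing only in their high bits all collide; the prime m = 13 is an arbitrary illustrative choice.

```python
def h_div(k: int, m: int) -> int:
    """Division method: h(k) = k mod m."""
    return k % m


print(h_div(78, 31))                       # 16, the example above

# With m = 2**p, k mod m is just the low p bits of k.
keys = [0x1A, 0x2A, 0x3A, 0x4A]            # 26, 42, 58, 74: same low 4 bits (0xA)
print([h_div(k, 16) for k in keys])        # [10, 10, 10, 10]: every key collides
print([h_div(k, 13) for k in keys])        # [0, 3, 6, 9]: a prime m spreads them out
```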

Hash Functions: The Multiplication Method

• For a constant A, 0 < A < 1:

• h(k) = ⌊m (kA mod 1)⌋ = ⌊m (kA − ⌊kA⌋)⌋

What does the term (kA mod 1) represent?


• (kA mod 1) is the fractional part of kA

• Advantage: the value of m is not critical

• Disadvantage: relatively slower

• Choose m = 2^p, for easier implementation

How to choose A?

• The multiplication method works with any legal value of A.

• Choose A not too close to 0 or 1

• Knuth: a good choice is A = (√5 − 1)/2

• Example: m = 1024, k = 123, A ≈ 0.6180339887…

h(k) = ⌊1024 · (123 · 0.6180339887… mod 1)⌋

= ⌊1024 · 0.0181806…⌋ = ⌊18.6169…⌋ = 18.
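The worked example above can be reproduced with floating-point arithmetic. This is only a sketch: the helper name `h_mul` is hypothetical, and double-precision floats lose accuracy in the fractional part once k·A grows large, which is one reason to prefer the fixed-point implementation that follows.

```python
import math


def h_mul(k: int, m: int, A: float = (math.sqrt(5) - 1) / 2) -> int:
    """Multiplication method: h(k) = floor(m * (k*A mod 1)), using floats."""
    frac = (k * A) % 1.0          # fractional part of kA
    return math.floor(m * frac)


print(h_mul(123, 1024))  # 18, matching the worked example
```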

Multiplication Method Implementation

• Choose m = 2^p, for some integer p.

• Let the word size of the machine be w bits.

• Assume that k fits into a single word. (k takes w bits.)

• Let 0 < s < 2^w. (s takes w bits.)

• Restrict A to be of the form s/2^w.

• Let k·s = r1·2^w + r0.

• r1 holds the integer part of kA (⌊kA⌋), and r0/2^w is the fractional part of kA (kA mod 1 = kA − ⌊kA⌋).

• We don’t care about the integer part of kA.

– So, just use r0, and forget about r1.

Multiplication Method – Implementation

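[Figure: the w-bit key k is multiplied by s = A·2^w; the product splits into the high word r1 and the low word r0, with the binary point between them, and h(k) is the p most significant bits extracted from r0.]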

• We want ⌊m (kA mod 1)⌋.

• m = 2^p

• We could get that by shifting r0 to the left by p bits and then taking the p bits that were shifted to the left of the binary point.

• But we don’t need to shift: just take the p most significant bits of r0.
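The word-level recipe above can be sketched in Python, using masking and shifting to emulate w-bit machine arithmetic. The function name `h_mul_fixed` is hypothetical, and the default s = 2654435769 is an assumed choice (it is ⌊((√5 − 1)/2)·2^32⌋, i.e., Knuth's A scaled to w = 32).

```python
def h_mul_fixed(k: int, p: int, w: int = 32, s: int = 2654435769) -> int:
    """Fixed-point multiplication method with m = 2**p and A = s / 2**w."""
    if not (0 <= k < 2**w and 0 < s < 2**w and 0 < p <= w):
        raise ValueError("need 0 <= k < 2**w, 0 < s < 2**w, 0 < p <= w")
    product = k * s                      # 2w-bit product r1 * 2**w + r0
    r0 = product & ((1 << w) - 1)        # low word: the scaled fractional part of kA
    return r0 >> (w - p)                 # the p most significant bits of r0


print(h_mul_fixed(123, 10))      # 18, same as the floating-point example (m = 1024)
print(h_mul_fixed(123456, 14))   # 67
```

For k = 123456, k·s = 76300·2^32 + 17612864, so r1 = 76300 is discarded and the top 14 bits of r0 = 17612864 give 67.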

Hash Functions: Worst Case Scenario

• Scenario:

– You are given an assignment to implement hashing

– You will self-grade in pairs, testing and grading your partner’s implementation

– In a blatant violation of the honor code, your partner:

• Analyzes your hash function

• Picks a sequence of “worst-case” keys that all map to the same slot, causing your implementation to take O(n) time to search

– Exercise 11.2-5: when |U| > nm, for any fixed hash function, we can always choose n keys that all hash into the same slot.
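The adversary's strategy follows from the pigeonhole principle: enumerate keys, bucket them by their hash value, and stop once some bucket holds n keys. This Python sketch is illustrative; the function name `worst_case_keys` and the example hash k mod m are hypothetical choices.

```python
from collections import defaultdict
from typing import Callable, Iterable, List


def worst_case_keys(h: Callable[[int], int], universe: Iterable[int], n: int) -> List[int]:
    """Return n keys from `universe` that the fixed hash function h sends to one slot.

    By the pigeonhole principle this succeeds whenever |U| > (n - 1) * m,
    so in particular whenever |U| > n * m.
    """
    buckets = defaultdict(list)
    for k in universe:
        j = h(k)
        buckets[j].append(k)
        if len(buckets[j]) == n:
            return buckets[j]
    raise ValueError("universe too small to force n collisions")


m, n = 10, 5
keys = worst_case_keys(lambda k: k % m, range(n * m + 1), n)
print(keys)  # [0, 10, 20, 30, 40]
```

Any fixed, publicly known hash function is vulnerable to this enumeration, which motivates choosing the function at random.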

Universal Hashing

• When attempting to defeat a malicious adversary, randomize the algorithm

• Universal hashing: pick a hash function randomly in a way that is independent of the keys that are actually going to be stored

– Pick a hash function randomly when the algorithm begins (not upon every insert!)

– Guarantees good performance on average, no matter what keys the adversary chooses

– Need a family of hash functions to choose from

Universal Hashing

• Let ℋ be a (finite) collection of hash functions

– …that map a given universe U of keys…

– …into the range {0, 1, …, m − 1}.

• ℋ is said to be universal if:

– for each pair of distinct keys x, y ∈ U, the number of hash functions h ∈ ℋ for which h(x) = h(y) is at most |ℋ|/m

– In other words:

• With a random hash function from ℋ, the chance of a collision between x and y is at most 1/m (x ≠ y)

Universal Hashing

• Theorem 11.3 (modified from textbook):

– Choose h from a universal family of hash functions

– Hash n keys into a table of m slots, n ≤ m

– Then the expected number of collisions involving a particular key x is less than 1

– Proof:

• For each pair of keys x, y, let c_xy = 1 if x and y collide, 0 otherwise

• E[c_xy] ≤ 1/m (by the definition of universality)

• Let C_x be the total number of collisions involving key x; by linearity of expectation,

$$E[C_x] = \sum_{y \in T,\; y \neq x} E[c_{xy}] \le \frac{n-1}{m}$$

• Since n ≤ m, we have E[C_x] < 1

• Implication: the expected running time of insertion is Θ(1)
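The bound E[C_x] ≤ (n − 1)/m can be checked exhaustively for small parameters by averaging over every function in a universal family. This sketch assumes the family h_{a,b}(k) = ((ak + b) mod p) mod m that this lecture presents next; the function name `expected_collisions` and the values p = 17, m = 6 and the six keys are arbitrary illustrative choices.

```python
from itertools import product


def expected_collisions(x: int, others: list, p: int, m: int) -> float:
    """Average, over every h_{a,b}(k) = ((a*k + b) mod p) mod m, of the number
    of keys in `others` that collide with x: E[C_x] for a uniformly random h."""
    total, count = 0, 0
    for a, b in product(range(1, p), range(p)):
        hx = ((a * x + b) % p) % m
        total += sum(1 for y in others if ((a * y + b) % p) % m == hx)
        count += 1
    return total / count


p, m = 17, 6
x, others = 0, [1, 2, 3, 4, 5]           # n = 6 keys in a table of m = 6 slots
e = expected_collisions(x, others, p, m)
print(e, len(others) / m)                # E[C_x] and the bound (n - 1)/m
```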

A Universal Hash Function

• Choose a prime number p that is larger than all possible keys

• Choose table size m ≥ n

• Randomly choose two integers a, b, such that 1 ≤ a ≤ p − 1 and 0 ≤ b ≤ p − 1

• h_{a,b}(k) = ((ak + b) mod p) mod m

• Example: p = 17, m = 6

h_{3,4}(8) = ((3·8 + 4) mod 17) mod 6 = 11 mod 6 = 5
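Drawing a function from this family can be sketched in Python as follows. The helper names `h_ab` and `make_universal_hash` are hypothetical; the sketch assumes p is a prime larger than every key, and the function should be drawn once when the table is created, not on every insert.

```python
import random
from typing import Callable, Optional


def h_ab(a: int, b: int, k: int, p: int, m: int) -> int:
    """h_{a,b}(k) = ((a*k + b) mod p) mod m."""
    return ((a * k + b) % p) % m


def make_universal_hash(p: int, m: int,
                        rng: Optional[random.Random] = None) -> Callable[[int], int]:
    """Pick h_{a,b} uniformly at random from H_{p,m}."""
    rng = rng if rng is not None else random.Random()
    a = rng.randint(1, p - 1)   # 1 <= a <= p - 1 (randint is inclusive on both ends)
    b = rng.randint(0, p - 1)   # 0 <= b <= p - 1
    return lambda k: h_ab(a, b, k, p, m)


print(h_ab(3, 4, 8, 17, 6))     # 5, the example above
h = make_universal_hash(17, 6, random.Random(42))
print([h(k) for k in range(5)]) # slot indices in 0..5 for keys 0..4
```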

A universal hash function

• Theorem 11.5: The family of hash functions H_{p,m} = {h_{a,b}} defined on the previous slide is universal

• Proof sketch:

– Consider any two distinct keys x, y and a given h_{a,b}.

– Let r = (ax + b) mod p, s = (ay + b) mod p.

– It can be shown that r ≠ s, and that different (a, b) pairs result in different (r, s) pairs

– x and y collide only when r mod m = s mod m

– For a given r, the number of values s such that r mod m = s mod m and r ≠ s is at most (p − 1)/m

– For a given r, and s chosen uniformly at random from the p − 1 values other than r, prob(r ≠ s and r mod m = s mod m) ≤ ((p − 1)/m)/(p − 1) = 1/m