Descriptive Rubrics - Central New Mexico Community College

advertisement

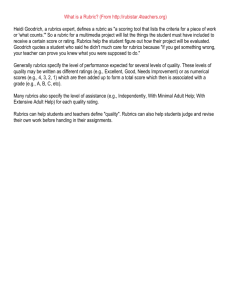

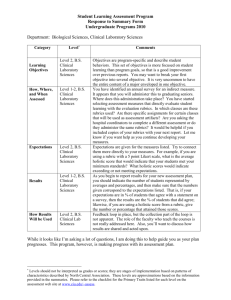

Descriptive Rubrics
Ursula Waln, Director of Student Learning Assessment
Central New Mexico Community College

Rubric: Just Another Word for Scoring Guide
• A rubric is any scoring guide that lists specific criteria, such as a checklist or a rating scale.
• Checklists are used for objective evaluation (the student either did it or did not).
• Rating scales are used for subjective evaluation (gradations of quality).
• Descriptive rubrics are rating scales that describe what constitutes each level of performance.
• If you don't like the word rubric, you can call them descriptive scoring guides.
• Most people who talk about rubrics are referring to descriptive rubrics, not to checklists or plain rating scales.

The Purpose of Descriptive Rubrics
• Descriptive rubrics lend objectivity to evaluations that are inherently subjective, e.g.:
  • Grading artwork, papers, performances, projects, speeches, etc.
  • Assessing overall student progress toward specific learning outcomes (course and/or program level)
  • Monitoring the developmental levels of individuals as they progress through a program ("developmental rubrics")
  • Conducting employee performance evaluations
  • Assessing group progress toward a goal
• When multiple evaluators use the same descriptive rubric, it can minimize differences in rater thresholds, especially if the raters have been normed (calibrated against one another).

Why Use Descriptive Rubrics in Class?
• When given with an assignment, a descriptive rubric can clarify the instructor's expectations and grading criteria for students:
  • Students can ask more informed questions about the assignment.
  • A clear sense of what is expected can inspire students to achieve more.
  • The rubric helps explain to students why they received the grade they did.
• Descriptive rubrics help instructors remain fair and consistent in scoring student work (more so than rating scales):
  • Scoring is easier and faster when descriptions clearly distinguish levels.
  • The effects of scoring fatigue (e.g., grading more generously toward the bottom of a stack as disappointment lowers expectations) are minimized.

Why Use Descriptive Rubrics for Assessment?
• Clearly identifying benchmark levels of performance and describing what learning looks like at each level establishes a solid framework for interpreting multiple measures of performance.
• Using a descriptive rubric as a central reference, instructors can interpret student performance on different types of assignments and at different points in the learning process.
• With rubrics that describe what goal achievement looks like, instructors can more readily identify, and assess the strength of, the connections between:
  • Course assignments and course goals
  • Course assignments and program goals

Two Common Types of Descriptive Rubrics
Holistic
• Each level of performance has a single comprehensive description.
• Descriptions may be organized in columns or rows.
• Useful for quick, general assessment and feedback.
Analytic
• Each level of performance has a description for each performance criterion.
• Descriptions are organized in a matrix.
• Useful for detailed assessment and feedback.

Example of a Holistic Rubric

| Performance Level | Description |
|---|---|
| Proficient (10 points) | Ideas are expressed clearly and succinctly. Arguments are developed logically and with sensitivity to audience and context. Original and interesting concepts and/or unique perspectives are introduced. |
| Intermediate (6 points) | Ideas are clearly expressed but not fully developed or supported by logic and may lack originality, interest, and/or consideration of alternative points of view. |
| Emerging (3 points) | Expression of ideas is either undeveloped or significantly hindered by errors in logic, grammatical and/or mechanical errors, and/or overreliance on jargon and/or idioms. |
Example of an Analytic Rubric
Delve, Mintz, and Stewart's (1990) Service Learning Model

| Developmental Variables | Phase 1: Exploration | Phase 2: Clarification | Phase 3: Realization | Phase 4: Activation | Phase 5: Internalization |
|---|---|---|---|---|---|
| Intervention: Mode | Group | Group (beginning to identify with group) | Group that shares focus or independently | Group that shares focus or independently | Individual |
| Intervention: Setting | Minimal community interaction; prefers on-campus activities | Trying many types of contact | Direct contact with community | Direct contact with community; intense focus on issue or cause | Frequent and committed involvement |
| Commitment: Frequency | One Time | Several Activities or Sites | Consistently at One Site | Consistently at One Site or with one issue | Consistently at One Site or focused on particular issues |
| Commitment: Duration | Short Term | Long Term Commitment to Group | Long Term Commitment to Activity, Site, or Issue | Lifelong Commitment to Issue (beginnings of Civic Responsibility) | Lifelong Commitment to Social Justice |
| Behavior: Needs | Participate in Incentive Activities | Identify with Group Camaraderie | Commit to Activity, Site, or Issue | Advocate for Issue(s) | Promote Values in self and others |
| Behavior: Outcomes | Feeling Good | Belonging to a Group | Understanding Activity, Site, or Issue | Changing Lifestyle | Living One's Values |
| Balance: Challenges | Becoming Involved; concern about new environments | Choosing from Multiple Opportunities/Group Process | Confronting Diversity and Breaking from Group | Questioning Authority/Adjusting to Peer Pressure | Living Consistently with Values |
| Balance: Supports | Activities are Nonthreatening and Structured | Group Setting, Identification, and Activities are Structured | Reflective: Supervisors, Coordinators, Faculty, and Other Volunteers | Reflective: Partners, Clients, and Other Volunteers | Community: Have Achieved a Considerable Inner Support System |

Another Example of an Analytic Rubric
AAC&U Ethical Reasoning VALUE Rubric

| Criterion | Capstone 4 | Milestones 3 | Milestones 2 | Benchmark 1 |
|---|---|---|---|---|
| Ethical Self-Awareness | Student discusses in detail/analyzes both core beliefs and the origins of the core beliefs, and the discussion has greater depth and clarity. | Student discusses in detail/analyzes both core beliefs and the origins of the core beliefs. | Student states both core beliefs and the origins of the core beliefs. | Student states either their core beliefs or articulates the origins of the core beliefs but not both. |
| Understanding Different Ethical Perspectives/Concepts | Student names the theory or theories, can present the gist of said theory or theories, and accurately explains the details of the theory or theories used. | Student can name the major theory or theories she/he uses, can present the gist of said theory or theories, and attempts to explain the details of the theory or theories used, but has some inaccuracies. | Student can name the major theory she/he uses, and is only able to present the gist of the named theory. | Student only names the major theory she/he uses. |
| Ethical Issue Recognition | Student can recognize ethical issues when presented in a complex, multilayered (gray) context AND can recognize cross-relationships among the issues. | Student can recognize ethical issues when issues are presented in a complex, multilayered (gray) context OR can grasp cross-relationships among the issues. | Student can recognize basic and obvious ethical issues and grasp (incompletely) the complexities or interrelationships among the issues. | Student can recognize basic and obvious ethical issues but fails to grasp complexity or interrelationships. |
| Application of Ethical Perspectives/Concepts | Student can independently apply ethical perspectives/concepts to an ethical question, accurately, and is able to consider full implications of the application. | Student can independently apply ethical perspectives/concepts to an ethical question, accurately, but does not consider the specific implications of the application. | Student can apply ethical perspectives/concepts to an ethical question, independently (to a new example), and the application is inaccurate. | Student can apply ethical perspectives/concepts to an ethical question with support (using examples, in a class, in a group, or a fixed-choice setting) but is unable to apply ethical perspectives/concepts independently (to a new example). |
| Evaluation of Different Ethical Perspectives/Concepts | Student states a position and can state the objections to, assumptions and implications of, and can reasonably defend against the objections to, assumptions and implications of different ethical perspectives/concepts, and the student's defense is adequate and effective. | Student states a position and can state the objections to, assumptions and implications of, and respond to the objections to, assumptions and implications of different ethical perspectives/concepts, but the student's response is inadequate. | Student states a position and can state the objections to, assumptions and implications of different ethical perspectives/concepts but does not respond to them (and ultimately objections, assumptions, and implications are compartmentalized by student and do not affect student's position). | Student states a position but cannot state the objections to and assumptions and limitations of the different perspectives/concepts. |

There Are No Rules for Developing Rubrics
• Form typically follows function, so how one sets up a descriptive rubric is usually determined by how one plans to use it.
• Performance levels are usually column headings but can function just as well as row headings.
• Performance levels can be arranged in ascending or descending order, and one can include as many levels as one wants.
• Descriptions can focus only on positive manifestations or include references to missing or negative characteristics.
• Some use grid lines while others do not.
Artifact Analyses
Descriptive rubrics can help pull together results from multiple measures for a more comprehensive picture of student learning.

Using the Model
• To pull together multiple measures for an overall assessment of student learning:
  • Take a random sample of student work from each assignment and re-score it using the rubric (instead of the grading criteria), or rate the students as a group based on their overall performance on each assignment.
  • Then combine the results, weighting their relative importance based on:
    • At what stage in the learning process the results were obtained
    • How well you think students understood the assignment or testing process
    • How closely the learning measured relates to the instructional objectives
    • Factors that could have biased the results
    • Your own observations, knowledge of the situations, and professional judgment

"Remember that when you do assessment, whether in the department, the general education program, or at the institutional level, you are not trying to achieve the perfect research design; you are trying to gather enough data to provide a reasonable basis for action. You are looking for something to work on."
Walvoord, B. E. (2010). Assessment clear and simple: A practical guide for institutions, departments, and general education. San Francisco, CA: Jossey-Bass.
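The "Using the Model" steps (sample, re-score, then combine with weights) amount to a weighted average of per-assignment rubric results. The following is a minimal Python sketch under stated assumptions: each assignment's re-scored sample is summarized as its mean rubric level, and the instructor has already translated the judgment factors into numeric weights. The function names, the 1-4 scale, the scores, and the weights are all hypothetical illustrations, not part of the original model.

```python
import random
from statistics import mean


def sample_work(submissions, k, seed=None):
    """Hypothetical helper: draw a simple random sample of up to k submissions
    (without replacement) so they can be re-scored against the rubric."""
    rng = random.Random(seed)  # the seed only makes the draw reproducible
    return rng.sample(submissions, min(k, len(submissions)))


def weighted_overall(results):
    """Hypothetical helper: combine per-assignment rubric results into one weighted mean.

    results: iterable of (mean_rubric_level, weight) pairs, where each weight is
    the instructor's judgment of how much that measure should count.
    """
    results = list(results)
    total_weight = sum(weight for _, weight in results)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(level * weight for level, weight in results) / total_weight


if __name__ == "__main__":
    # Hypothetical re-scored samples on a 1-4 rubric scale, paired with
    # hypothetical weights chosen by the instructor
    rescored = {
        "early quiz": ([2, 2, 3, 1], 1),        # early in the learning process
        "midterm paper": ([3, 2, 3, 3], 2),
        "capstone project": ([3, 4, 3, 4], 3),  # most closely tied to the outcome
    }
    results = [(mean(levels), weight) for levels, weight in rescored.values()]
    print(weighted_overall(results))  # 3.0
```

Keeping the weights explicit does not replace the professional judgment the slide calls for; it only makes those judgments visible and easy to revisit. If the class is instead rated as a group on each assignment, that group rating would simply take the place of the sample mean.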