Why forecasts differ - presentation


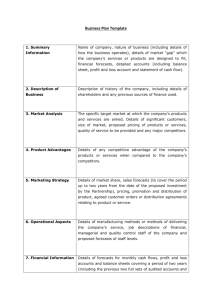

Why Forecasts Differ and Why Are They So Bad?

Roy Batchelor
Professor of Banking and Finance, Cass Business School

But back in the real world…
• Forecasters often look (and feel) stupid
• This is because:
  – sometimes they can't help it
  – sometimes they deliberately make biased forecasts
• The aim is to separate these causes of error, using evidence from panels of forecasters:
  – Consensus Economics
  – Blue Chip Financial Forecasts
• Payoff: which forecasters are worth listening to, and when?

Plan of lecture
• Insights from recession forecasts
• Reasons for biased forecasts
• Who is most biased?

Forecasting the 1991 recession
[Chart: monthly forecasts, Jan 1990 to Dec 1991, from Consensus, Mr. Brightside 1, Dismal Scientist 1 and HMT]

Forecasting the 2009 recession
[Chart: monthly forecasts, Jan 2008 to Dec 2009, from Consensus, Mr. Brightside 2, Dismal Scientist 2 and HMT]

Should we privatise GDP forecasts?
• "Ageing model leads Treasury astray" (Sunday Times, 9/2/92)
• "Time to take forecasting away from the Treasury" (Sunday Times, 12/4/92)
• What do you think?

Forecasts for 1990
[Chart: monthly forecasts, Jan 1989 to Dec 1990, from Consensus, Mr. Brightside 1, Dismal Scientist 1 and HMT]

Forecasts for 2008
[Chart: monthly forecasts, Jan 2007 to Dec 2008, from Consensus, Mr. Brightside 2, Dismal Scientist 2 and HMT]

Forecasts for 2010: … it's too soon to know
[Chart: monthly forecasts, Jan 2009 to Dec 2010, from Consensus, Mr. Brightside 2, Dismal Scientist 2 and HMT]

Recession forecasts in general
• Loungani, P., 2001, How accurate are private sector forecasts? Cross-country evidence from consensus forecasts of output growth, International Journal of Forecasting, 17, 419-432

Stylised facts about forecasts
• It's hard to forecast recessions: they are rare events, always with different causes.
• The consensus forecast usually starts close to the average growth rate, and then adjusts.
• Accuracy-improving information arrives only about 12-15 months before the end of the target year.
• Individual forecasts are distributed above and below the consensus in a consistent way: there are persistent optimists and pessimists.

Plan of lecture
• Insights from recession forecasts
• Reasons for biased forecasts
• Who is most biased?
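The last stylised fact (persistent optimists and pessimists) can be made concrete with a small calculation. The sketch below assumes a hypothetical panel of annual GDP growth forecasts; the forecaster names and numbers are invented for illustration and are not Consensus Economics data. It takes the consensus to be the simple mean of the panel and measures each forecaster's deviation from it year by year: a deviation that stays positive marks an optimist, one that stays negative marks a pessimist.

```python
from statistics import mean

# Hypothetical panel: forecasts[forecaster][target_year] = GDP growth forecast (%).
# Illustrative numbers only; not survey data.
forecasts = {
    "optimist": {2006: 2.9, 2007: 2.7, 2008: 1.9},
    "pessimist": {2006: 2.1, 2007: 1.9, 2008: 0.9},
    "middle": {2006: 2.5, 2007: 2.3, 2008: 1.4},
}


def consensus(panel, year):
    """Consensus forecast for a target year: the simple mean across forecasters."""
    return mean(f[year] for f in panel.values())


def deviations(panel):
    """Per-forecaster list of (own forecast - consensus), one entry per target year."""
    years = sorted(next(iter(panel.values())))
    return {
        name: [f[y] - consensus(panel, y) for y in years]
        for name, f in panel.items()
    }


def average_deviation(panel):
    """Mean deviation from consensus: > 0 suggests an optimist, < 0 a pessimist."""
    return {name: mean(d) for name, d in deviations(panel).items()}


if __name__ == "__main__":
    for name, dev in average_deviation(forecasts).items():
        print(f"{name:10s} {dev:+.2f}")
```

Looking at the sign of every yearly deviation, not just the average, is what separates a persistent optimist from a forecaster who was simply far too high in one year.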
Reasons for Bias
• Incompetence (unlikely, really…)
• Bias in the consensus:
  – learning about structural breaks
  – market incentives for bias
• Bias in individual forecasts:
  – market incentives for product differentiation

Learning about Structural Change
• Important
• Many countries (Japan, Germany, Italy, France) experienced a slowdown in trend growth
• Even the optimal forecast will be biased towards optimism while forecasters learn about the new trend
• Evidence supports this: forecasts for the US and UK, which are not on that list, are less biased

[Chart: US – Log(GDP) and Forecast (Non-) Bias]
[Chart: Italy – Log(GDP) and Forecast Bias]

Market incentives for bias
• Important for investment analysts
• Bias to optimism in earnings forecasts can arise from:
  – selection of firms/sectors you believe in
  – relationship building
  – trade generation
• However, regulatory changes (Sarbanes-Oxley) can change incentives…
• May also apply to some government forecasts

[Chart: Financial analysts: bias to optimism, 1990-2003]
[Chart: Financial analysts: bias to pessimism, 2003-]

Dispersion v Herding in Individual Forecasts
• There is evidence of herding (excessive convergence on the consensus) among financial analysts
• However, there is evidence of excessive dispersion among economic forecasters, who:
  – underweight information in the consensus forecast (Batchelor and Dua, 1992, J Forecasting)
  – maintain consistently optimistic or pessimistic priors from year to year (Batchelor and Dua, 1990, Int J Forecasting; Batchelor, 2007, Int J Forecasting)

Herding
• Financial forecasters have incentives to overweight consensus forecasts:
  – "Information cascade": forecasts are made sequentially, so each forecast becomes part of the next forecaster's prediction set. In aggregate, published forecasts are biased towards the early forecasts.
  – "Incentive concavity": the rewards for an accurate "bold" forecast are smaller than the penalties for an inaccurate bold forecast. Less experienced forecasters herd more, since their career prospects are at stake.
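The structural-change argument (an optimal forecast is biased to optimism while forecasters learn about a lower trend) can be illustrated with a deterministic toy model. Purely for illustration, the sketch assumes that trend growth falls from 3% to 1.5% and that the forecaster's estimate of trend is the mean of all past growth rates, a recursive-mean learning rule; the slides do not say which learning model is meant. Errors are actual minus forecast, the same convention as the bias measure used later in the lecture, so negative errors mean over-prediction.

```python
from statistics import mean


def learning_errors(pre=3.0, post=1.5, break_year=10, n_years=30):
    """Toy model of learning about a break in trend growth (illustrative assumption).

    Growth is `pre` before `break_year` and `post` afterwards, with no noise.
    Each year's forecast is the mean of all growth observed so far.
    Returns the errors (actual - forecast) for years 1 .. n_years - 1.
    """
    growth = [pre if t < break_year else post for t in range(n_years)]
    errors = []
    for t in range(1, n_years):
        forecast = mean(growth[:t])           # current estimate of trend growth
        errors.append(growth[t] - forecast)   # actual - forecast
    return errors


if __name__ == "__main__":
    BREAK = 10
    errs = learning_errors(break_year=BREAK)
    post_break = errs[BREAK - 1:]             # errors for years BREAK .. n_years - 1
    print("mean post-break error:", round(mean(post_break), 3))
    print("first / last post-break error:",
          round(post_break[0], 3), round(post_break[-1], 3))
```

In this model the error in year t after the break is -(pre - post) × break_year / t, here -15/t, so every post-break forecast is too high and the optimism fades only slowly, even though the forecaster is using all available data.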
Dispersion and Product Differentiation
• Hotelling "location model": don't set up your store right next to everyone else, but don't be too far out of town. So the profit-maximising strategy is to shade forecasts consistently away from the consensus (even if you believe the consensus is the best forecast)
• Batchelor and Dua (1990) and Batchelor (2007) find that individual forecasters persistently make optimistic or pessimistic forecasts relative to the consensus. This is interpreted as an attempt to differentiate their product and increase press coverage, book sales, speaking fees, etc.
• This does not harm accuracy, except in extreme cases

Evolution of Forecast Disagreement
• Lahiri, K., and X. Sheng, 2008, Evolution of Forecast Disagreement in a Bayesian Learning Model, Journal of Econometrics, 144, 325-340

News and Dispersion of Forecast Revisions
• Forecasts converge, but quarterly GDP releases give forecasters an opportunity to put different spins on the figures

Plan of lecture
• Insights from recession forecasts
• Reasons for biased forecasts
• Who is most biased?

Blue Chip Financial Forecasts: Method
• Forecasts from US Blue Chip Financial Forecasters:
  – TB3, TB30, RGDP, CPI
  – 1983-1997 (+ updating)
  – horizons from 15 months to 1 month
• Questionnaire on forecaster characteristics:
  – sent to 80 BCFF participants, Nov 1993 - Jan 1994
  – 43 usable responses
  – 25 with a full track record
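The claim that shading away from the consensus "does not harm accuracy, except in extreme cases" follows from a simple decomposition. If the consensus is unbiased with root mean squared error RMSE_c, a forecaster who always reports consensus + d has an error equal to the consensus error minus d, so RMSE(d) = sqrt(RMSE_c² + d²): a small shade barely moves the RMSE, a large one does. The sketch below checks this on a hypothetical, mean-zero set of consensus errors (invented numbers); in a finite sample the identity is exact only when the sample mean error is zero.

```python
import math


def rmse(errors):
    """Root mean squared error of a list of forecast errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def shaded_rmse(consensus_errors, d):
    """RMSE of a forecaster who always reports (consensus + d).

    Error = actual - (consensus + d) = consensus error - d.
    """
    return rmse([e - d for e in consensus_errors])


# Hypothetical consensus errors (actual - consensus) with mean zero: an unbiased consensus.
CONSENSUS_ERRORS = [-1.5, -0.5, 0.5, 1.5]

if __name__ == "__main__":
    base = rmse(CONSENSUS_ERRORS)
    for d in (0.0, 0.25, 0.5, 1.0, 2.0):
        r = shaded_rmse(CONSENSUS_ERRORS, d)
        print(f"shade {d:4.2f}: RMSE {r:.3f} ({100 * (r / base - 1):.1f}% worse)")
```

Because d enters squared, a shade of a fifth of RMSE_c costs only about 2% in RMSE, while a shade as large as RMSE_c itself raises it by about 41%.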
Ranking by Forecast Quality
For the 25 forecasters, we compute average ranks (over horizons) for each target variable by:
• Bias (actual - forecast; low rank = overprediction)
• Extremism (absolute deviation from the consensus forecast; high rank = far from consensus)
• Accuracy (RMSE; low rank = high accuracy)
We then calculate rank correlations with forecaster characteristics.

Individual Characteristics
Please provide the following information about you and your organisation:
• Type of Organisation:
• Location:
• Highest College Degree (tick): Bachelors / Masters / PhD
• Number of years' experience in forecasting
• Percentage of work time spent in forecasting
• Number of staff involved in forecasting at your organisation

Individual effects: Rank Correlations

| Variable | Bias TB3 | Bias TB30 | Bias RGDP | Bias CPI | Extremism TB3 | Extremism TB30 | Extremism RGDP | Extremism CPI | Accuracy TB3 | Accuracy TB30 | Accuracy RGDP | Accuracy CPI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FBANK | -0.33 | -0.36 | -0.27 | -0.04 | -0.35 | -0.43 | -0.16 | -0.32 | 0.12 | -0.10 | -0.32 | -0.12 |
| FNYDC | 0.13 | -0.18 | 0.15 | -0.06 | 0.20 | 0.12 | -0.07 | 0.01 | 0.04 | 0.02 | 0.07 | -0.04 |
| FDEG | 0.44 | 0.29 | -0.12 | 0.05 | 0.06 | -0.11 | -0.16 | 0.21 | -0.38 | -0.17 | 0.12 | 0.05 |
| FYRS | -0.17 | -0.27 | 0.24 | -0.56 | 0.29 | -0.08 | 0.28 | 0.15 | 0.22 | 0.19 | 0.19 | 0.32 |
| FPERCENT | 0.17 | 0.02 | 0.09 | -0.08 | 0.19 | 0.20 | -0.02 | 0.27 | 0.08 | 0.18 | 0.31 | 0.12 |
| FNOS | -0.06 | -0.11 | -0.18 | -0.02 | 0.21 | 0.19 | -0.31 | -0.14 | -0.01 | 0.01 | -0.19 | -0.22 |

• Banks made higher, less extreme, more accurate GDP forecasts
• Experienced forecasters made slightly more extreme forecasts of TB3 and RGDP, but there is no convincing evidence of extremism
• Location, education, attention and size of team are not significant

Clientele
Please indicate the relative importance of the following groups as users of your forecasts (weights should add to 100):
• Traders inside your organisation
• Other colleagues inside your organisation
• Clients of your organisation
• General public
• Other

Concentration measures
• We have constructed measures of the concentration of Clientele, Technique, Theory and Information weights:
  – a forecaster who only served external clients would have a high concentration measure (UCONC)
  – a forecaster who put equal weight on all types of user would have a low concentration measure
• The hypothesis is that low concentration may reduce extremism and improve accuracy

Strong Clientele Effects

| Variable | Bias TB3 | Bias TB30 | Bias RGDP | Bias CPI | Extremism TB3 | Extremism TB30 | Extremism RGDP | Extremism CPI | Accuracy TB3 | Accuracy TB30 | Accuracy RGDP | Accuracy CPI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UTRADERS | -0.42 | -0.42 | -0.13 | -0.30 | -0.29 | -0.20 | -0.36 | -0.17 | 0.38 | 0.06 | -0.32 | -0.12 |
| UINTERNAL | -0.05 | -0.12 | -0.33 | -0.14 | -0.26 | -0.52 | -0.12 | -0.18 | -0.34 | -0.41 | -0.34 | -0.13 |
| UCLIENTS | 0.33 | 0.36 | 0.26 | 0.36 | 0.45 | 0.54 | 0.35 | 0.21 | 0.02 | 0.25 | 0.48 | 0.21 |
| UPUBLIC | -0.12 | -0.04 | 0.11 | -0.11 | -0.36 | -0.29 | -0.23 | -0.10 | -0.18 | -0.04 | -0.30 | -0.28 |
| UOTHER | 0.20 | 0.13 | 0.26 | -0.24 | 0.07 | 0.07 | 0.18 | 0.32 | -0.06 | 0.02 | 0.27 | 0.16 |
| UCONC | 0.21 | 0.24 | 0.21 | 0.36 | 0.36 | 0.47 | 0.38 | 0.22 | 0.16 | 0.26 | 0.49 | 0.23 |

• Forecasters giving weight to traders, internal users, or the public made less extreme and more accurate forecasts
• Forecasters with external clients made more extreme and less accurate forecasts
• Concentration on one type of client also increases extremism

Forecast Techniques
In making forecasts of US interest rates 3 to 6 months ahead, what weight do you assign to the following forecast techniques? (weights should add to 100)
• Econometric Models (structural, regression)
• Time Series Models (Box-Jenkins, ARIMA, VAR)
• Exponential Smoothing methods
• Technical Analysis (Chart Analysis)
• Judgment
• Other
Also give weights for forecasting 1 year ahead and beyond, if different.
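The ranking and rank-correlation steps on the Ranking by Forecast Quality slide can be sketched as follows. This is a simplified, hypothetical implementation, not the study's code: it computes bias (mean of actual - forecast), extremism (mean absolute deviation from the panel mean, taken as the consensus) and accuracy (RMSE) at a single horizon rather than averaging ranks over horizons; ranks forecasters with tied values sharing an average rank; and computes Spearman's rank correlation as the Pearson correlation of the ranks. The p-value uses the large-sample approximation ρ·sqrt(n - 1) ~ N(0, 1) under no association; with 25 forecasters this puts the 5% two-sided threshold at roughly |ρ| ≈ 0.40, a useful yardstick when reading the correlation tables. All function names and data are illustrative.

```python
import math
from statistics import mean


def quality_measures(actuals, forecasts):
    """Bias, extremism and RMSE for each forecaster at one horizon.

    actuals: list of outcomes; forecasts: {name: list of forecasts aligned with actuals}.
    The consensus is assumed to be the simple mean of the panel.
    """
    names = list(forecasts)
    consensus = [mean(forecasts[f][t] for f in names) for t in range(len(actuals))]
    out = {}
    for f in names:
        fc = forecasts[f]
        out[f] = {
            "bias": mean(a - x for a, x in zip(actuals, fc)),  # negative => overprediction
            "extremism": mean(abs(x - c) for x, c in zip(fc, consensus)),
            "rmse": math.sqrt(mean((a - x) ** 2 for a, x in zip(actuals, fc))),
        }
    return out


def ranks(values):
    """Rank from 1 (smallest) upwards; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation = Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)


def approx_p_value(rho, n):
    """Two-sided p-value for no association, using rho * sqrt(n - 1) ~ N(0, 1)."""
    z = abs(rho) * math.sqrt(n - 1)
    return math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    # Hypothetical data: 4 forecasters, 3 target years, and a 0/1 "works at a bank" flag.
    actuals = [2.0, 1.0, 3.0]
    panel = {
        "A": [2.5, 1.5, 3.2],
        "B": [1.8, 0.9, 2.7],
        "C": [2.2, 1.4, 3.1],
        "D": [1.5, 0.5, 2.6],
    }
    bank = {"A": 1, "B": 0, "C": 1, "D": 0}
    q = quality_measures(actuals, panel)
    names = sorted(panel)
    bias = [q[f]["bias"] for f in names]
    print("bias ranks:", ranks(bias))
    print("rank correlation with bank flag:", round(spearman([bank[f] for f in names], bias), 2))
    print("approx. critical |rho| at 5%, n = 25:", round(1.959964 / math.sqrt(24), 3))
```

With only 25 forecasters and dozens of cells per table, a few correlations near 0.4 would be expected by chance alone, which is one reason to weight patterns that recur across variables more heavily than any single cell.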
Weak Technique effects

| Variable | Bias TB3 | Bias TB30 | Bias RGDP | Bias CPI | Extremism TB3 | Extremism TB30 | Extremism RGDP | Extremism CPI | Accuracy TB3 | Accuracy TB30 | Accuracy RGDP | Accuracy CPI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TECHSEC | -0.03 | -0.02 | -0.36 | 0.09 | 0.21 | 0.29 | -0.39 | 0.01 | -0.15 | 0.07 | -0.20 | 0.02 |
| TECHSTS | -0.26 | -0.45 | -0.34 | -0.29 | 0.28 | 0.06 | -0.17 | -0.14 | 0.09 | 0.09 | -0.14 | 0.16 |
| TECHSSM | -0.02 | -0.20 | 0.14 | -0.31 | -0.14 | 0.00 | 0.09 | 0.17 | -0.31 | -0.28 | -0.03 | 0.00 |
| TECHSTA | -0.25 | -0.22 | 0.23 | -0.26 | -0.19 | -0.15 | 0.00 | 0.01 | 0.08 | 0.03 | -0.18 | -0.14 |
| TECHSJT | 0.23 | 0.30 | 0.34 | 0.20 | -0.16 | -0.17 | 0.50 | 0.16 | 0.06 | -0.01 | 0.38 | 0.11 |
| TECHSOTH | 0.09 | 0.08 | 0.10 | 0.07 | -0.21 | -0.15 | -0.26 | -0.26 | 0.15 | -0.25 | -0.05 | -0.47 |
| TECHSCONC | 0.28 | 0.34 | 0.42 | 0.27 | -0.19 | -0.12 | 0.33 | 0.24 | 0.16 | 0.10 | 0.41 | 0.19 |

• Smoothing methods are associated with higher accuracy, but are little used
• Use of judgment, and concentration on one technique, increased extremism and reduced the accuracy of real GDP forecasts

Theory
If you use Econometric Models, what weight do you put on the following types of economic theory? (weights should add to 100)
• Keynesian
• Monetarist
• Rational Expectations
• Supply Side
• Business Cycle
• Other (specify)

Some Theory effects

| Variable | Bias TB3 | Bias TB30 | Bias RGDP | Bias CPI | Extremism TB3 | Extremism TB30 | Extremism RGDP | Extremism CPI | Accuracy TB3 | Accuracy TB30 | Accuracy RGDP | Accuracy CPI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| THKEYNES | 0.33 | 0.34 | 0.26 | 0.06 | -0.01 | 0.12 | 0.06 | -0.33 | -0.13 | -0.17 | -0.04 | -0.46 |
| THMONET | 0.21 | 0.33 | -0.40 | 0.46 | -0.47 | -0.43 | -0.07 | 0.27 | -0.33 | -0.24 | -0.19 | -0.01 |
| THRATEX | -0.23 | -0.11 | -0.64 | -0.10 | 0.13 | 0.07 | 0.21 | 0.28 | -0.05 | 0.14 | 0.00 | 0.24 |
| THSUPSIDE | -0.24 | -0.06 | -0.12 | 0.12 | 0.23 | -0.04 | -0.03 | -0.04 | 0.05 | -0.01 | 0.09 | 0.07 |
| THBUSCYC | -0.12 | -0.34 | 0.46 | -0.36 | 0.15 | 0.18 | 0.05 | -0.08 | 0.26 | 0.16 | 0.14 | 0.22 |
| THOTH | 0.02 | 0.05 | -0.10 | 0.25 | -0.19 | -0.14 | -0.48 | 0.11 | 0.02 | 0.07 | -0.14 | -0.16 |
| THCONC | -0.25 | -0.31 | 0.41 | -0.26 | -0.22 | -0.02 | -0.01 | -0.10 | 0.44 | 0.22 | 0.09 | -0.01 |

• Keynesians made low forecasts of interest rates
• Monetarists made low forecasts of interest rates and inflation, and high forecasts of real growth. Business Cycle theorists had the opposite biases
• RE theorists made consistently high forecasts of real growth
• Few differences in forecast accuracy, however

Judgment
If you use Judgment, what weight do you place on the following processes? (weights should add to 100)
• Own analysis of current events
• Group analysis within your organisation (meetings, surveys)
• Other (specify)

Some Judgment Process effects

| Variable | Bias TB3 | Bias TB30 | Bias RGDP | Bias CPI | Extremism TB3 | Extremism TB30 | Extremism RGDP | Extremism CPI | Accuracy TB3 | Accuracy TB30 | Accuracy RGDP | Accuracy CPI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JTOWN | 0.21 | 0.47 | 0.30 | 0.23 | 0.00 | 0.19 | 0.01 | 0.26 | 0.25 | 0.22 | 0.16 | 0.02 |
| JTGROUP | -0.08 | -0.36 | -0.30 | -0.12 | -0.17 | -0.27 | -0.03 | -0.20 | -0.38 | -0.39 | -0.13 | -0.07 |
| JTOTHER | -0.33 | -0.41 | -0.15 | -0.30 | 0.28 | 0.03 | 0.02 | -0.22 | 0.09 | 0.18 | -0.12 | 0.07 |

• Some evidence that group processes improve the accuracy of interest rate forecasts, while reliance on own judgment harms accuracy

Information
If you use Judgment, what weight do you place on the following pieces of information? (weights should add to 100)
• Current Official Economic Statistics (GDP, Inflation, …)
• Forecasts made by other organisations (e.g. Blue Chip Financial Forecasts)
• Surveys of Consumer and Business Confidence
• Other (specify)

Strong Information Source effects

| Variable | Bias TB3 | Bias TB30 | Bias RGDP | Bias CPI | Extremism TB3 | Extremism TB30 | Extremism RGDP | Extremism CPI | Accuracy TB3 | Accuracy TB30 | Accuracy RGDP | Accuracy CPI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| INFONEWS | 0.06 | 0.14 | 0.01 | 0.28 | 0.11 | 0.23 | -0.04 | 0.04 | 0.19 | 0.30 | 0.15 | -0.10 |
| INFOFORCS | 0.06 | -0.11 | -0.28 | -0.24 | -0.26 | -0.16 | -0.10 | -0.01 | -0.45 | -0.36 | -0.51 | -0.07 |
| INFOSURV | 0.13 | 0.11 | 0.08 | 0.15 | 0.09 | -0.13 | 0.07 | 0.02 | -0.08 | -0.15 | -0.14 | -0.14 |
| INFOOTH | -0.14 | -0.09 | 0.12 | -0.15 | 0.02 | -0.03 | 0.06 | -0.03 | 0.15 | 0.03 | 0.25 | 0.18 |
| INFOCONC | -0.04 | 0.09 | -0.03 | 0.16 | 0.24 | 0.27 | 0.00 | 0.07 | 0.28 | 0.36 | 0.33 | 0.12 |

• Forecasters who place a lot of weight on the forecasts of other forecasters are less extreme, and significantly more accurate on interest rate and real GDP forecasts
• Forecasters who rely heavily on one source of information tend to be less accurate

Who should you listen to?
• Less extreme:
  – work in a bank
  – serve the general public
• More accurate:
  – serve the general public
  – use any theory … except RE
  – pay attention to other forecasters
• More extreme:
  – rational expectations theory
  – listen to friends, boss
  – overweight news
• Less accurate:
  – limited range of users (e.g. only external clients)
  – doctrinaire use of theory (e.g. only Monetarism)
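Several of the tables include concentration measures (UCONC, TECHSCONC, THCONC, INFOCONC), and the two "less accurate" profiles above (a limited range of users, doctrinaire use of theory) are in effect high-concentration profiles. The slides do not give the formula used. One standard choice, adopted here purely as an assumption, is the Herfindahl-Hirschman index of a respondent's questionnaire weights: the sum of squared shares, which equals 1 when all weight goes to one category and 1/k when weight is spread equally over k categories. The normalised version rescales this to run from 0 (equal weights) to 1 (a single category). Function names and respondents are hypothetical.

```python
def herfindahl(weights):
    """Herfindahl-Hirschman index of questionnaire weights (assumed concentration measure).

    weights: non-negative numbers, e.g. percentages that should add to 100
    across Traders, Other colleagues, Clients, General public, Other.
    Returns a value between 1/k (equal weights over k categories) and 1.
    """
    total = sum(weights)
    if total <= 0 or any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative with a positive sum")
    return sum((w / total) ** 2 for w in weights)


def normalised_herfindahl(weights):
    """Rescale the index to [0, 1]: 0 = equal weights, 1 = all weight on one category."""
    k = len(weights)
    if k < 2:
        raise ValueError("need at least two categories")
    return (herfindahl(weights) - 1 / k) / (1 - 1 / k)


if __name__ == "__main__":
    # Hypothetical respondents answering the Clientele question (weights out of 100).
    respondents = [
        ("external only", [0, 0, 100, 0, 0]),
        ("equal weights", [20, 20, 20, 20, 20]),
        ("mixed", [40, 30, 20, 10, 0]),
    ]
    for label, w in respondents:
        print(f"{label:14s} H = {herfindahl(w):.2f}  "
              f"normalised = {normalised_herfindahl(w):.2f}")
```

Dividing by the total before squaring means the measure still works if a respondent's weights do not add up to exactly 100, which is common in survey returns.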