An Ensemble SVM Model for the Accurate Prediction of Non-Canonical MicroRNA Targets

Asish Ghoshal 1 , Ananth Grama 1 , Saurabh Bagchi 2 , Somali Chaterji 1

Purdue University, West Lafayette, IN

miRNA

MicroRNA (miRNA): “The genome’s guiding hand?”

• miRNAs are 22-nucleotide (nt) strings of RNA that base-pair with messenger RNA (mRNA) to cause mRNA degradation or translational repression

• Can be thought of as biology’s dark matter: small regulatory RNAs that are abundant and encoded in the genome

• Dysregulation of miRNA may contribute to diverse diseases

• Canonical (i.e., exact) matches involve the miRNA’s seed region (nt 2-7) and the 3’ untranslated region (UTR) of the mRNA, and were long thought to be the only form of interaction

• Recent high-throughput experimental studies have indicated a high preponderance of “non-canonical” miRNA targets

Background

• microRNA

• mRNA

• Argonaute

• RISC (complex)

“Avishkar”: Computational miRNA target prediction

• We are attempting computational prediction of miRNA-mRNA interactions

• Challenging because of:

– Large number of features in miRNAs and mRNAs

– Noisy and incomplete data sets from experimental approaches

– Wide variety of positive miRNA-mRNA interactions

• Prior computational approaches are deterministic and rely on perfect complementarity of the miRNA and mRNA nucleotides

Background : CLIP Technology and Non-Canonical miRNA-mRNA Interactions

CLIP (crosslinking and immunoprecipitation) is an experimental method to map the binding sites of RNA-binding proteins across the transcriptome. Proteins are crosslinked to RNA using ultraviolet light, and an antibody is used to specifically isolate the RNA-binding protein of interest together with its RNA interaction partners, which are then sequenced.

Our Contributions

• The Avishkar suite comprises four models:

– a global linear SVM model

– a global non-linear model

– an ensemble linear model

– an ensemble non-linear model (radial basis function kernel)

• Feature computation and transformation

– Spatial features at two resolutions

– Seed enrichment metric

• The linear classifier had a moderate amount of bias, so we switched to a non-linear, kernel-SVM classifier. It achieves a TPR of 76% at an FPR of 20%, and the AUC (ROC curve) for the ensemble non-linear model is 20% higher than for the simple linear model. This is an improvement of over 150% in the TPR over the best competitive protocol.

• Since training kernel SVMs is computationally expensive, we provide a general-purpose and efficient implementation of Cascade SVM on top of Apache Spark: https://bitbucket.org/cellsandmachines/kernelsvmspark

Reference: Hans Peter Graf, Eric Cosatto, Leon Bottou, Igor Durdanovic, Vladimir Vapnik. “Parallel Support Vector Machines: The Cascade SVM.” NIPS 2005.

Problem Statement

Predict if a miRNA targets an mRNA (segment)

[Figure: miRNAs and mRNA segments, with the edge label to predict: 1 or 0?]

Problem Statement

Ideally we would want some experimentally verified edge labels to train on.

[Figure: miRNAs and mRNA segments; edges with ground truth labels (0, 1, 1) and edges whose labels have to be predicted (1 or 0?)]

Problem Statement

• We have labels on vertices from CLIP-Seq experiments, i.e., which mRNA segments were targeted.

• We do not know which miRNA targeted a particular mRNA segment.

[Figure: miRNAs and mRNA segments; the mRNA segments carry labels (1, 0, 1, 0) and the edge labels are unknown (1 or 0?)]

Methods : Generating Ground Truth Data

• Consider only the most highly expressed miRNAs

– 20 in humans, 10 in mouse

• Add an edge if

– the binding between a miRNA and an mRNA segment is strong enough, i.e., ΔG is below a certain threshold, or

– there is at least a 6-mer seed match between the mRNA segment and the miRNA.
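The edge rule above can be sketched as follows. This is a minimal illustration, not the Avishkar code: the function names and the ΔG threshold of -20.0 are hypothetical, and the ΔG value is assumed to come from an external duplex-folding tool that is not shown.

```python
# Hypothetical helper names and threshold, for illustration only.
COMPLEMENT = {"A": "U", "U": "A", "G": "C", "C": "G"}

def seed_site(mirna, start=2, end=7):
    """Return the mRNA sequence (5'->3') that pairs perfectly with miRNA nt start..end."""
    seed = mirna[start - 1:end]  # positions are 1-based and inclusive
    return "".join(COMPLEMENT[nt] for nt in reversed(seed))

def has_seed_match(mirna, mrna_segment):
    """True if the segment contains a perfect 6-mer match to the seed (nt 2-7)."""
    return seed_site(mirna) in mrna_segment

def add_edge(mirna, mrna_segment, delta_g, dg_threshold=-20.0):
    """Add an edge if binding is strong (low delta G) or a 6-mer seed match exists."""
    return delta_g < dg_threshold or has_seed_match(mirna, mrna_segment)

let7a = "UGAGGUAGUAGGUUGUAUAGUU"
print(seed_site(let7a))                     # UACCUC
print(add_edge(let7a, "GGUACCUCGG", -5.0))  # True (seed match, weak binding)
```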

[Figure: miRNAs and mRNA segments; mRNA segments labeled 1, 0, 1, 0]

Methods : Generating Ground Truth Data

• Label the edge 1 (or 0) if it is incident on an mRNA segment that has label 1 (or 0).
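As a small sketch of this labeling step, each candidate edge simply inherits the CLIP-derived label of its mRNA segment. The data layout (pairs of IDs and a dictionary of segment labels) is an assumption made for illustration.

```python
def label_edges(edges, segment_labels):
    """Give every (miRNA, segment) edge the label of the segment it is incident on.

    edges: iterable of (mirna_id, segment_id) pairs.
    segment_labels: dict mapping segment_id to 0 or 1.
    """
    return {(m, s): segment_labels[s] for m, s in edges}

edges = [("let-7a", "seg1"), ("miR-320", "seg1"), ("let-7a", "seg2")]
labels = label_edges(edges, {"seg1": 1, "seg2": 0})
print(labels[("miR-320", "seg1")])  # 1
```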

[Figure: miRNAs and mRNA segments; edges labeled 1 or 0 according to the labels of their mRNA segments]

Methods : Schematic of Features (Feature Engineering)

• The alternating blue and green regions denote the thirteen consecutive windows around the miRNA target site (red). These are the windows where the average thermodynamic and sequence features are computed.

• We hypothesize that the curves are different for the positive and negative samples.

• The miRNA seed region is the six-nucleotide (hexamer) sequence at positions 2-7 from the 5’ end of the miRNA.

Methods : Capturing Spatial Interaction using Smooth Curves

• Compute thermodynamic interaction profile upstream and downstream of the target region

– Data is collected for fixed-size windows on both sides of the target region

– Averaging is done within each window

• Compute smooth curves to remove noise in the biological data set

– Used B-spline interpolation for smoothing out the points
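The window-averaging step might look like the sketch below. The function name, the 0-based half-open site coordinates, and the handling of windows that run off the sequence are assumptions; the slides do not specify them.

```python
from statistics import mean

def flanking_window_means(profile, site_start, site_end, window, k):
    """Average a per-nucleotide profile in k fixed-size windows on each side of the site.

    profile: list of per-position values (e.g. 1 for A/U, 0 for G/C).
    site_start, site_end: 0-based, half-open bounds of the target site.
    Returns 2*k values ordered 5'->3'. A window that partly leaves the sequence
    is averaged over what remains; a window fully outside yields None.
    """
    out = []
    for j in range(k, 0, -1):  # upstream windows, farthest from the site first
        lo, hi = site_start - j * window, site_start - (j - 1) * window
        chunk = profile[max(lo, 0):max(hi, 0)]
        out.append(mean(chunk) if chunk else None)
    for j in range(k):         # downstream windows, nearest to the site first
        lo, hi = site_end + j * window, site_end + (j + 1) * window
        chunk = profile[lo:hi]
        out.append(mean(chunk) if chunk else None)
    return out

print(flanking_window_means(list(range(20)), 8, 12, window=2, k=2))
# [4.5, 6.5, 12.5, 14.5]
```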

Methods : Capturing Spatial Interaction using Smooth Curves

• Compute interaction profiles for features

– Binding energy ΔG

– Accessibility ΔΔG

– Local AU content

• Compute interaction profiles at two different resolutions

– Window size of 40 and using the entire miRNA: “site” curves

– Window size of 9 and only using the seed region of the miRNA: “seed” curves

• Use coefficients of B-spline basis functions as features for classifier
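To make the last bullet concrete, here is a standard-library sketch that turns a sequence of window averages into B-spline coefficients. It uses a least-squares fit with a clamped cubic basis, which is an assumption: the slides mention B-spline smoothing but do not give the degree, knot placement, or number of basis functions, and all names here are hypothetical.

```python
def basis(i, k, t, x):
    """Cox-de Boor recursion: i-th B-spline basis function of degree k on knots t."""
    if k == 0:
        return 1.0 if t[i] <= x < t[i + 1] else 0.0
    left = right = 0.0
    if t[i + k] > t[i]:
        left = (x - t[i]) / (t[i + k] - t[i]) * basis(i, k - 1, t, x)
    if t[i + k + 1] > t[i + 1]:
        right = (t[i + k + 1] - x) / (t[i + k + 1] - t[i + 1]) * basis(i + 1, k - 1, t, x)
    return left + right

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x

def bspline_features(ys, n_basis=6, degree=3):
    """Least-squares B-spline coefficients for values ys sampled at window centers."""
    n = len(ys)
    xs = [(i + 0.5) / n for i in range(n)]  # window centers, strictly inside (0, 1)
    n_inner = n_basis - degree - 1
    inner = [(j + 1) / (n_inner + 1) for j in range(n_inner)]
    t = [0.0] * (degree + 1) + inner + [1.0] * (degree + 1)  # clamped knot vector
    B = [[basis(i, degree, t, x) for i in range(n_basis)] for x in xs]
    A = [[sum(B[r][i] * B[r][j] for r in range(n)) for j in range(n_basis)]
         for i in range(n_basis)]
    rhs = [sum(B[r][i] * ys[r] for r in range(n)) for i in range(n_basis)]
    return solve(A, rhs)  # normal equations: (B^T B) c = B^T y
```

With thirteen window averages as input, `bspline_features` returns six coefficients that could be appended to the feature vector; fewer basis functions than windows is what provides the smoothing in this sketch.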

Methods : Capturing all Possible Non-Canonical Matches

• Encode the alignment of the miRNA seed region with mRNA nucleotides using a string of 1s (matches), 2s (mismatches), 3s (gaps), and 4s (GU wobbles).

• Compute the probability that the alignment string (e.g., 1111121) is not a random occurrence.

• Define enrichment score for the seed match pattern as 1 – probability.

• The “seed enrichment metric” captures, in a single numeric feature, the relative efficacy of various kinds of seed matches in a single, unified metric.

• This in turn converts the categorical feature of seed match or non-canonical match into a numerical feature (probability value) that can be better handled by ML methods.
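The encoding in the first bullet can be illustrated with a short sketch. It covers only ungapped alignments, so code 3 (gaps) never appears, and it does not attempt the probability or enrichment computation, which the slides do not specify. The function name is hypothetical.

```python
WATSON_CRICK = {("A", "U"), ("U", "A"), ("G", "C"), ("C", "G")}
GU_WOBBLE = {("G", "U"), ("U", "G")}

def encode_seed_alignment(seed, site):
    """Encode an ungapped seed/site alignment as a string of 1, 2, and 4.

    seed: miRNA seed written 5'->3'.
    site: mRNA site written 5'->3', same length as the seed.
    The site is reversed because the two strands pair antiparallel.
    """
    if len(seed) != len(site):
        raise ValueError("ungapped sketch needs equal-length seed and site")
    codes = []
    for pair in zip(seed, reversed(site)):
        if pair in WATSON_CRICK:
            codes.append("1")  # match
        elif pair in GU_WOBBLE:
            codes.append("4")  # GU wobble
        else:
            codes.append("2")  # mismatch
    return "".join(codes)

print(encode_seed_alignment("GAGGUA", "UACCUC"))  # 111111
print(encode_seed_alignment("GAGGUA", "UACUUC"))  # 114111
```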

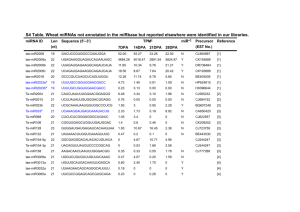

Methods : CLIP Data

| Data set (species) | # Positive examples (seed, seedless) | # Negative examples | # mRNA | # miRNA | Positive target sites in 3’ UTR | in CDS | in 5’ UTR |
|---|---|---|---|---|---|---|---|
| HITS-CLIP (Mouse) | 861,208 (6%, 94%) | 35,608,333 | 4,059 | 119 | 56% | 43% | 1% |
| PAR-CLIP (Human) | 141,109 (8%, 92%) | 2,659,748 | 1,211 | 35 | 57% | 39% | 4% |

Methods : Our Classifier

• Distributed linear SVM using gradient descent (Apache Spark)

• 9 Intel X86 nodes, 8 GB memory, 4 cores.
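For readers unfamiliar with the training procedure, the following is a single-machine sketch of a linear SVM trained by full-batch subgradient descent on the regularized hinge loss. It is not the Spark implementation: the hyperparameters and names are assumptions, and in a distributed setting the per-example gradient sum would presumably be computed per partition and then combined.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * |w|^2 + mean(hinge loss) by subgradient descent.

    X: list of feature lists; y: list of labels in {-1, +1}.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [lam * wj for wj in w], 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # only margin violators contribute to the subgradient
                for j in range(d):
                    gw[j] -= yi * xi[j] / n
                gb -= yi / n
        w = [wj - lr * g for wj, g in zip(w, gw)]
        b -= lr * gb
    return w, b

def predict(w, b, x):
    """Return +1 or -1 according to the side of the hyperplane."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1
```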

Methods : Validation Protocol used to Evaluate Avishkar

Results : ROC Curves

Results : Feature Importance

Methods : Improving Classification Performance

• The linear classifier suffers from high bias (large training error).

• Use a more complex learning model

– Kernel SVM

• SVMs suffer from a widely recognized scalability problem in both memory use and compute time.

• Kernel SVM computational cost: O(n^3), where n is the number of training examples

• Training does not scale beyond a few thousand examples for feature vectors of dimension ~150.

Methods : Distributed Training

• Cascading SVM [Graf et al. NIPS 2005]

• Key ideas:

– Train on partitions of the whole data set and do this in parallel

– Merge SVs from each partition in a hierarchical manner

– Final step is serial and it is hoped that the number of SVs is reasonably small at that stage

• Implemented on top of Apache Spark
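The hierarchical merge can be sketched in a few lines. The `cascade` function below takes any trainer that returns support vectors (SVs); `toy_1d_svs` is only a stand-in so the sketch runs without an SVM library. It assumes separable 1-D data with negatives below positives, where the hard-margin SVs are the largest negative and the smallest positive point. Both names are hypothetical and this is a single pass, not the Spark implementation.

```python
def cascade(partitions, train_svs):
    """Train per partition, then merge support vectors pairwise until one set remains.

    partitions: list of lists of (x, y) examples.
    train_svs: function mapping a list of examples to its support vectors.
    """
    level = [train_svs(p) for p in partitions]  # first layer: independent, parallelizable
    while len(level) > 1:
        merged = []
        for i in range(0, len(level), 2):
            pair = level[i] + (level[i + 1] if i + 1 < len(level) else [])
            merged.append(train_svs(pair))      # retrain on the union of two SV sets
        level = merged
    return level[0]

def toy_1d_svs(examples):
    """Stand-in trainer: SVs of a hard-margin SVM on separable 1-D data."""
    neg = [e for e in examples if e[1] == -1]
    pos = [e for e in examples if e[1] == 1]
    svs = []
    if neg:
        svs.append(max(neg))  # negative point closest to the boundary
    if pos:
        svs.append(min(pos))  # positive point closest to the boundary
    return svs
```

In this toy setting the cascade returns the same support vectors as training on the full data set, while each training call only sees a small subset.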

Distributed Training: Our Contributions

• Use non-linear SVM for classification since we observe high bias when using linear SVM, leading to lower accuracy

• But non-linear SVM does not scale well even with cascading SVM

• Biological insight: miRNAs within an miRNA family share structural similarities

• Therefore, we create a separate non-linear classifier for each miRNA family and, within each family, speed up the optimization using the cascading approach

• The models for the different miRNA families can be trained in parallel
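A minimal sketch of the per-family ensemble follows, assuming each example carries its miRNA family name. The trainer is passed in as a function; `majority_trainer` is a trivial stand-in for a kernel SVM so the sketch stays self-contained. All names are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def train_family_ensemble(examples, train):
    """Train one model per miRNA family, with the families trained concurrently.

    examples: iterable of (family, features, label).
    train: function mapping a list of (features, label) to a callable model.
    """
    by_family = defaultdict(list)
    for family, x, y in examples:
        by_family[family].append((x, y))
    with ThreadPoolExecutor() as pool:
        futures = {fam: pool.submit(train, data) for fam, data in by_family.items()}
        return {fam: fut.result() for fam, fut in futures.items()}

def predict(models, family, x):
    """Route the example to the model of its miRNA family."""
    return models[family](x)

def majority_trainer(data):
    """Stand-in trainer: always predicts the majority label of its training data."""
    ones = sum(y for _, y in data)
    label = 1 if 2 * ones > len(data) else 0
    return lambda x: label
```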

Results : Misclassification Rate for Linear and Non-Linear Classifier

• Five-fold cross-validation misclassification rate for different miRNA families.

• The mean test error of the non-linear SVM is lower than that of the corresponding linear model for each of the miRNA families.

• The benefit of the non-linear SVM is more pronounced for larger miRNA families: e.g., for the let-7 and miR-320 families, the improvements over the linear model are 50% and 69.9%, respectively.

• Insight : The non-linear model can remove the prediction bias inherent in the linear model.

Results

• ROC curves for the ensemble linear model and ensemble non-linear model, obtained by varying the probability threshold applied to the output of the SVM.

• The misclassification error, true positive rate, and false positive rate were computed using 5-fold stratified cross-validation for seedless sites in the human data set.

• At one possible operating point, an FPR of 0.2, the TPR for the linear model is 0.469, while the TPR for the non-linear model is 0.756.
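As an illustration of how such a curve is traced, the sketch below computes (FPR, TPR) points by sweeping a threshold over classifier scores. The function names and the toy scores are made up for the example; cross-validation is not shown.

```python
def roc_point(scores, labels, threshold):
    """Return (FPR, TPR) when scores >= threshold are predicted positive.

    labels are 0 or 1; both classes must be present.
    """
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    pos = sum(labels)
    neg = len(labels) - pos
    return fp / neg, tp / pos

def roc_curve(scores, labels):
    """ROC points from the strictest threshold to the most permissive one."""
    thresholds = sorted(set(scores), reverse=True)
    return [(0.0, 0.0)] + [roc_point(scores, labels, t) for t in thresholds]

scores = [0.9, 0.8, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0]
print(roc_point(scores, labels, 0.5))  # FPR 0.5, TPR 2/3
```

For the operating point quoted above, the relative TPR gain of the non-linear over the linear model is (0.756 - 0.469) / 0.469, roughly 61%.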

Results

Results

• Does the classification performance improve due to clustering by miRNA family or due to the use of a more complex model?

• The performance of the linear classifier does not improve when the data is clustered by miRNA family.

Take-Away Points

• We have developed “Avishkar”, a machine-learning model based on support vector machines, to predict both canonical and non-canonical miRNA-mRNA interactions

• Avishkar extracts thermodynamic and sequence features and smooths them through curve fitting, in order to extract enriched features from CLIP data

• We use a non-linear SVM to minimize bias and scale it up to large biological data sizes through a biologically driven parallelization strategy

• We achieve the best-in-class true positive rate, an improvement of over 150% over the best competitive protocol

• The code runs on Apache Spark and is open sourced on Bitbucket: https://bitbucket.org/cellsandmachines/avishkar

• We also provide a general-purpose, scalable implementation of kernel SVM using Apache Spark, which can be used to solve large-scale non-linear binary classification problems: https://bitbucket.org/cellsandmachines/kernelsvmspark

Thanks!

EXTRA + Notes

Background : RNA interference