The Philosophy of Data


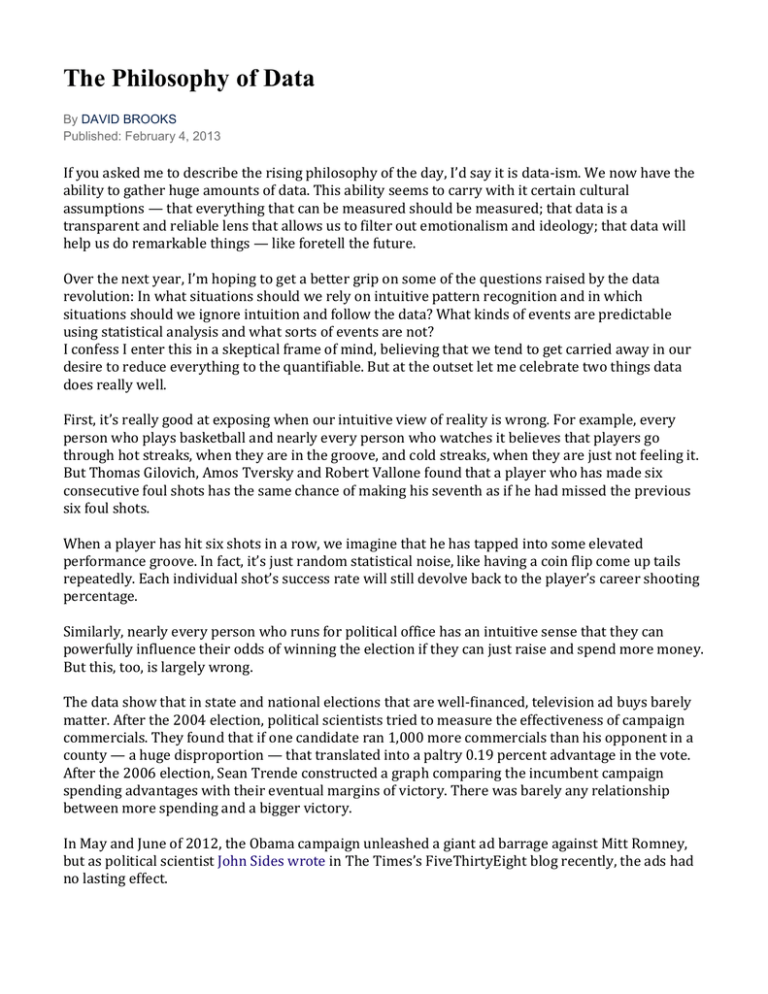

By DAVID BROOKS
Published: February 4, 2013

If you asked me to describe the rising philosophy of the day, I’d say it is data-ism. We now have the ability to gather huge amounts of data. This ability seems to carry with it certain cultural assumptions: that everything that can be measured should be measured; that data is a transparent and reliable lens that allows us to filter out emotionalism and ideology; that data will help us do remarkable things, like foretell the future.

Over the next year, I’m hoping to get a better grip on some of the questions raised by the data revolution. In what situations should we rely on intuitive pattern recognition, and in which situations should we ignore intuition and follow the data? What kinds of events are predictable using statistical analysis, and what sorts of events are not?

I confess I enter this in a skeptical frame of mind, believing that we tend to get carried away in our desire to reduce everything to the quantifiable. But at the outset let me celebrate two things data does really well.

First, it’s really good at exposing when our intuitive view of reality is wrong. For example, every person who plays basketball and nearly every person who watches it believes that players go through hot streaks, when they are in the groove, and cold streaks, when they are just not feeling it. But Thomas Gilovich, Amos Tversky and Robert Vallone found that a player who has made six consecutive foul shots has the same chance of making his seventh as if he had missed the previous six foul shots.

When a player has hit six shots in a row, we imagine that he has tapped into some elevated performance groove. In fact, it’s just random statistical noise, like having a coin flip come up tails repeatedly. Each individual shot’s chance of success still reverts to the player’s career shooting percentage.

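A small simulation can make the “random statistical noise” point concrete. What follows is a minimal Python sketch and not a re-analysis of the Gilovich, Tversky and Vallone data. It assumes a hypothetical shooter whose every attempt succeeds independently with a fixed 70 percent probability, and the function names are illustrative. If shots really are independent, the make rate after six straight makes and the make rate after six straight misses should both land near the overall rate. This holds even though runs of six makes are common: about 12 percent of shots (0.7^6) follow one.

```python
import random


def simulate_shots(n_shots: int, p_make: float, seed: int = 0) -> list[bool]:
    """Simulate independent shots: each succeeds with probability p_make,
    with no memory of earlier shots (the 'no hot hand' assumption)."""
    rng = random.Random(seed)
    return [rng.random() < p_make for _ in range(n_shots)]


def make_rate_after_streak(shots: list[bool], streak: int, made: bool) -> float:
    """Share of shots that go in, among shots immediately preceded by
    `streak` consecutive makes (made=True) or misses (made=False)."""
    hits = total = 0
    run_value, run_len = None, 0  # outcome and length of the current run
    for shot in shots:
        # The previous `streak` shots all equal `made` exactly when the
        # current run has that outcome and is at least `streak` long.
        if run_value == made and run_len >= streak:
            total += 1
            hits += shot
        if shot == run_value:
            run_len += 1
        else:
            run_value, run_len = shot, 1
    return hits / total if total else float("nan")


# Assumption: a hypothetical 70 percent shooter, one million attempts.
shots = simulate_shots(1_000_000, p_make=0.7, seed=42)
print(f"overall make rate:    {sum(shots) / len(shots):.3f}")
print(f"after six makes:      {make_rate_after_streak(shots, 6, True):.3f}")
print(f"after six misses:     {make_rate_after_streak(shots, 6, False):.3f}")
```

All three printed rates should come out close to 0.70. The after-six-misses figure rests on only several hundred qualifying shots (0.3^6 is roughly 0.07 percent of attempts), so it wobbles more than the others. The run-length counter avoids re-scanning a six-shot window at every position. Pooling every qualifying shot from one long sequence, instead of averaging per-game percentages, sidesteps the small-sample bias that per-sequence averages of streak outcomes can carry.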
Similarly, nearly every person who runs for political office has an intuitive sense that they can powerfully influence their odds of winning the election if they can just raise and spend more money. But this, too, is largely wrong. The data show that in well-financed state and national elections, television ad buys barely matter. After the 2004 election, political scientists tried to measure the effectiveness of campaign commercials. They found that if one candidate ran 1,000 more commercials than his opponent in a county (a huge disproportion), that translated into a paltry 0.19 percent advantage in the vote. After the 2006 election, Sean Trende constructed a graph comparing incumbents’ campaign spending advantages with their eventual margins of victory. There was barely any relationship between more spending and a bigger victory. In May and June of 2012, the Obama campaign unleashed a giant ad barrage against Mitt Romney, but as the political scientist John Sides wrote recently in The Times’s FiveThirtyEight blog, the ads had no lasting effect.

Likewise, many teachers have an intuitive sense that different students have different learning styles: some are verbal and some are visual; some are linear, some are holistic. Teachers imagine they will improve outcomes if they tailor their presentations to each student. But there’s no evidence to support this either.

Second, data can illuminate patterns of behavior we haven’t yet noticed. For example, I’ve always assumed that people who frequently use words like “I,” “me,” and “mine” are probably more egotistical than people who don’t. But as James Pennebaker of the University of Texas notes in his book, “The Secret Life of Pronouns,” when people are feeling confident, they are focused on the task at hand, not on themselves. High-status, confident people use fewer “I” words, not more. Pennebaker analyzed the Nixon tapes.
Nixon used few “I” words early in his presidency, but used many more after the Watergate scandal ravaged his self-confidence. Rudy Giuliani used few “I” words through his mayoralty, but used many more later, during the two weeks when his cancer was diagnosed and his marriage dissolved. Barack Obama, a self-confident person, uses fewer “I” words than any other modern president.

Our brains often don’t notice subtle verbal patterns, but Pennebaker’s computers can. Younger writers use more downbeat and past-tense words than older writers, who use more positive and future-tense words. Liars use more upbeat words like “pal” and “friend” but fewer excluding words like “but,” “except” and “without.” (When you are telling a false story, it’s hard to include the things you did not see or think about.) We think of John Lennon as the most intellectual of the Beatles, but, in fact, Paul McCartney’s lyrics had more flexible and diverse structures and George Harrison’s were more cognitively complex.

In sum, the data revolution is giving us wonderful ways to understand the present and the past. Will it transform our ability to predict and make decisions about the future? We’ll see.

Comments

Paul from Lower Manhattan, Downtown New York, NY (NYT Pick)

I've spent decades helping government organizations use data to improve operations, productivity, occasionally policy, and in recent years strategy. What I always encourage is using data as part of a feedback system to improve future decisions. When data are used only up front to determine a policy or strategy, that's insufficient. Continual data collection and analysis are essential to determine whether intended results are being achieved or whether changes are needed to improve results.
Corporations are already using "big data" to increase sales, and more powerful ways to analyze more massive amounts of data may indeed help us get better public policies in the future, though we should watch out for abuse and for cherry-picking data to fit predetermined ideological policies. But big data, even if not abused, will provide a relatively small advantage in making better policy choices up front. Much greater benefits will come from having more data of adequate quality flowing through well-designed feedback loops on a more timely basis, so users can keep improving strategies, policies, and operations for maximum public benefit.

ProfWombat, Andover, MA (NYT Pick)

1. The most interesting recent example of the use of data and its implications, far more than foul-shooting streaks, is Nate Silver's use of Bayesian statistical models to predict election outcomes successfully, and the right's vehement rejection of his work and shock at its accuracy after the fact, largely out of ideology and innumeracy. An odd omission, that, from a column about data, its applications and limitations.
2. Examples abound of quantifying the unquantifiable. The US News college rankings come to mind: a complex institution can't be ranked as greater than, less than or equal to another. Single measures such as IQ and Spearman's g can't quantify human intelligence, either.
3. Any given population gives rise to statistics. Any given individual within that population, at any given time, is doubly an anecdote.
4. Perhaps the largest body of data with the most significant policy implications arises from economics. Many economic models continue to exist despite a paucity of data for them. Again, an odd omission.

Daniel12, Wash. D.C. (NYT Pick)

Much of this is nonsense.
Basketball players (indeed people in many fields) have hot and cold streaks, inspired days and bad days. It is a bizarre interpretation to say a person's good days are just random, accidental, like a coin just coming up heads repeatedly at a particular time, and that we should look at a person's "career average" rather than judge by good days. Any fool knows that in the arts and sciences we do not go by any career average but by the high points: the Mona Lisa moments, the Wright brothers inventing the airplane, etc. Plenty of artists do only a couple of significant things; we judge by those and not by any "career average". I suppose a newspaper columnist, though, would prefer career average, the consistent output of average pieces, and would call all exceptional accomplishment "just random noise", "pure luck", "not the person's usual way of being", "a coin just coming up heads", "could have been me"...

As for students having different learning styles, obviously some people are more verbal or spatial than average; common sense demonstrates that. What we can argue about is "linear" and "holistic". Of course we have little evidence for those two as types of learning style, because what do these words signify in the first place? Are they as obvious as being verbal or spatial? Not at all.

The problem in general is correct interpretation of data. We need more people with brains. Data is increasing, but so often I see obvious errors in the interpretation of data.

Martin, New York (NYT Pick)

The idea that a conclusion is "objective" or reliable just because it appeals to measurable data is totally bogus. Our capacity for gathering data has expanded so exponentially that it's nearly meaningless, in the sense that I can find the data I want by crafting my question or search in a way that suits my ends. The fact that political battles are carried out in the realm of media and publicity intensifies this tendency.
That's why we see so many studies or investigations that ask not what conclusion is consistent with the data, but what data will serve a predetermined conclusion. And then when you inquire into anything that involves human behavior, you encounter the fact that humans change their behavior in response to being studied. Life is not just about measuring ourselves, but about deciding what we want to be.

Kurt, NY (NYT Pick)

One of the most valuable lessons I learned in grad school was the imprecision of data and its misuses. Mr. Brooks says we are living in an age being driven by data, but it is probably just as true that we are being driven by its misuse, in some ways as far from the facts as any superstition-ridden medieval society.

At the public level, we see summaries of academic studies with stark results, frequently pointing clearly to specific courses of action or analysis. But how much of the data providing such specificity rests on unproven assumptions or vague wording of which we know nothing? Were those assumptions or weightings changed infinitesimally, the results of the study could change dramatically, yet of that we know nothing, being spoon-fed only the preferred policy prescription, one that could strongly be inferred to have been the desired result all along. Often data is so specific that it lends a feeling of false control and precision when neither really exists, which is yet another problem.

This points out the need for transparency of process and peer review. And that process works well, so long as everyone has not already descended into groupthink. But confirmation bias is a well-noted phenomenon on both sides in politics. Is it really wise to believe it does not exist in other fields? And how much of what we are told and believe is a product of preexisting dispositions and prejudices, and how much is really susceptible to argument and evidence?
ralph varhaug, houston (NYT Pick)

Claude Shannon, the inventor of information theory, made a distinction between data and information: data was a fact, information was the basis of a decision. Data is not information until we put it in context. A red light is a fact; a red light over a street intersection is the basis for a decision and thus information. We seem to believe that all data are equally valuable, but data without context is useless.

Phyllis Kritek, Half Moon Bay, CA (NYT Pick)

Well done, Mr. Brooks. To add to the conversation, I offer this quote from Einstein: "Not everything that counts can be counted, and not everything that can be counted counts." By measuring things, we draw our attention to them, and hence away from other things. We may also signal, inadvertently, that the data we present are exhaustive, that is, that we have completed the exploration of this topic. Scientists often find their work summarized in the MSM in descriptions that ignore the fundamental tentativeness of all scientific findings. Good science always has explicit limitations and unveils the next looming unknown.

The most unsettling thing about data-ism, I think, is the assumption that by measuring something, we actually capture its essence. Yet what counts about it may not be something we can count. Easy test: Can you measure your love for your child?