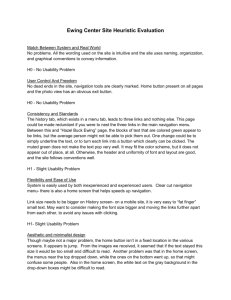

Understanding and Evaluating the User Experience with Information Spaces
- Andrew Dillon
- HCI Lab, Indiana University
- adillon@indiana.edu

Why does user experience matter?
- “The improvements in performance gained through usable interface design are 3 or 4 times larger than those gained through designing better search algorithms”
- Sue Dumais, Microsoft - invited presentation to IU’s Computer Science Horizon Day, March 2000.

Why do we need to test users?
- Bailey (1993) asked 81 designers to assess 4 interfaces for users like themselves

| Interface | A | B | C | D |
|---|---|---|---|---|
| Rating | 4 | 3 | 1 | 2 |
| Performance | 1 | 2 | 3 | 4 |

- NB: 95% of designers selected an interface other than the one they performed best on.

So what to test?
- Interaction Basics
  - User
  - Task
  - Tool
  - Context

Basic user tendencies:
- Users don’t estimate own performance well
- Users change over time
- Are impatient
- See things in their own way
- Seek to minimize cognitive effort

Traditional approach: usability engineering
- Usability defined:
  - Semantically
  - Featurally
  - Operationally

So what is usability?
- Semantic definitions
  - ‘user-friendliness’?
  - ‘ease-of-use’?
  - ‘ease-of-learning’?
  - ‘transparency’
- These tend to circularity, and provide little value to design practice
- However, the term captures something that people recognize as important

Usability as a collection of features
- Interface is usable if:
  - Links, search engine, nav bar, back button?
  - Graphical user interfaces (GUI)
  - Based on style guide recommendations?
  - Meets Nielsen’s or Shneiderman’s principles of design?

Attribution Fallacy
- The attribution fallacy suggests usability is a quality of an interface that is determined by the presence or absence of specific interface features.
- This attribution leads to an over-reliance on guidelines and prescriptive rules for design

Experience requires more than features
- Users’ experience is contextually determined by their needs, their tasks, their history and their location.
- Understanding this, and knowing how to evaluate experience, is the primary purpose of this talk

Operational definition
- Usability (of an application) refers to the effectiveness, efficiency, and satisfaction with which specified users can achieve specified goals in particular environments
- ISO Ergonomics requirements, ISO 9241 part 11: Guidance on usability specification and measures.
- Useful but overlooked, and still not the full story….

Effectiveness
- The extent to which users can achieve their task goals.
- Effectiveness measures the degree of accuracy and/or completion
- e.g., if desired task goal is to locate information on a web site then: Effectiveness = success of user in locating the correct data

Effectiveness can be a scale or an absolute value
- If the outcome is ALL or NOTHING then effectiveness is an absolute value
  - User either locates info or does not...
- If outcome can be graded (user can be partially right) then effectiveness should be measured as a scale
  - As a %, or a score from 1 (poor) to 5 (complete)
- Scale should be determined by evaluator in conjunction with developers and users

Quality?
- Some tasks do not have a definitive correct answer:
  - creative production (writing, design)
  - information retrieval
  - data analysis
  - management
  - Making a purchase…..
- Effectiveness alone misses something...

Efficiency
- Measures resources used to perform task, i.e., time, effort, cost
- In case of Web site use, efficiency might equal time taken to complete a task or the navigation path followed etc.

Efficiency of using a redesigned web site
- Time taken to complete task
  - Compared across tasks, across users or against a benchmark score
- Number of steps taken
- Number of deviations from ideal path
- Such variables are frequently highly positively correlated - but they needn’t be.
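The step-based efficiency measures listed above (steps taken, deviations from the ideal path) could be computed from logged navigation paths roughly as follows. This is a minimal Python sketch under stated assumptions, not the method used in the talk: the function names and the example paths are hypothetical, and a "deviation" is assumed to mean any step that lands on a page outside the ideal path.

```python
def steps(path: list[str]) -> int:
    """Number of navigation steps, i.e. transitions between consecutive pages."""
    return max(len(path) - 1, 0)


def deviations(path: list[str], ideal: list[str]) -> int:
    """Steps that land on a page outside the ideal path (assumed definition)."""
    on_ideal = set(ideal)
    return sum(1 for page in path[1:] if page not in on_ideal)


def step_ratio(path: list[str], ideal: list[str]) -> float:
    """Actual steps divided by ideal steps; 1.0 means no extra steps."""
    if steps(ideal) == 0:
        raise ValueError("ideal path needs at least two pages")
    return steps(path) / steps(ideal)


# Hypothetical paths for one task on a small site.
ideal = ["Home", "Classes", "Graduate", "Intro to HCI"]
actual = ["Home", "Papers", "Home", "Classes", "Undergrad",
          "Classes", "Graduate", "Intro to HCI"]

print(steps(ideal), steps(actual))          # 3 7
print(deviations(actual, ideal))            # 2
print(round(step_ratio(actual, ideal), 2))  # 2.33
```

Time on task would be recorded separately. Because steps, deviations and time need not move together, it is probably worth reporting each one rather than folding them into a single score.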
Efficiency in path analysis
- [Diagram: site tree with nodes Home page, Papers, Conference, Classes, Journal, Graduate, Undergrad, Intro to HCI, Office hours, Stats, HCI, Java]
- Ideal path: 3 steps

Efficiency in path analysis
- [Diagram: the same site tree with the user's actual route marked]
- Actual to ideal user navigation - 7:3 steps

But is it efficiency that users want?
- The push to efficiency is symptomatic of an engineering-oriented approach
- Who determines efficiency?
- Are path deviations always inefficient?
- Is time equally weighted by user, designer or owner?
- Suggests a need for negotiation beyond typical usability tests

Satisfaction
- Measures the affective reaction (likes, dislikes, attitudinal response) of users to the application
- Assumed to be influenced by, but not the same as, effectiveness or efficiency
  - e.g., 2 applications with equal effectiveness and efficiency may not be equally satisfying to use
  - or: what users like might not be what they need!

Basis for satisfaction?
- Positively influenced by effectiveness and efficiency
- Also:
  - Personal experience with other technologies?
  - Working style?
  - Manner of introduction?
  - Personality of user?
  - Aesthetics of product?

Satisfaction is important
- Good usability studies recognize this
- But satisfaction is not enough….
- People often like what they don’t use well
- What about empowerment, challenge etc?

Beyond usability: P-O-A
- User experience can be thought of at three levels:
  - Process
  - Outcome
  - Affect
- Full evaluation needs to cover these bases

Experiencing IT at 3 levels:
- User experience
  - Process: What user does
  - Outcome: What user attains
  - Affect: How user feels

Process: what the user does
- Navigation paths taken
- Use of back button or links
- Use of menus, help, etc.
- Focus of attention
- The emphasis is on tracking the user’s moves and attention through the information space

Outcome: what the user attains
- What constitutes the end of the interaction?
  - Purchase made?
  - Details submitted?
  - Information located?
- The emphasis is on observing what it means for a user to feel accomplishment or closure

Affect: how the user feels
- Beyond satisfaction, we need to know if user feels:
  - Empowered?
  - Annoyed, frustrated?
  - Enriched?
  - Unsure or wary?
  - Confident?
  - Willing to come back?
- The emphasis is on identifying what the interaction means for the user

User experience = behavior + result + emotion
- [Diagram: Behavior, Result, Emotion]

Interesting ‘new’ measures of UE
- Aesthetics
- Perceived usability
- Cognitive effort
- Perception of information shapes
- Acceptance level
- Self-efficacy
- UE proposes a range of measures not normally associated with usability testing

Aesthetics and user performance
- Dillon and Black (2000)
- Took 7 interface designs with known user performance data
- Asked 15 similar users to rate “aesthetics” and “likely usability” of each alternative design
- Compared ratings with performance data

Rankings of 7 interfaces

| Interface | Performance | Preference | Rating of Aesthetics | Perceived usability |
|---|---|---|---|---|
| A | 2 | 4 | 1 | 3 |
| B | 7 | 5 | 1 | 1 |
| C | 6 | 6 | 4 | 2 |
| D | 1 | 1 | 3 | 4 |
| E | 4 | 2 | 6 | 5 |
| F | 3 | 3 | 5 | 3 |
| G | 5 | 7 | 7 | 7 |

- R=.85, R=.83
- Correlation between aesthetics and performance = 0

Follow up study: 30 users
- Rated the aesthetics, likely usability and then used 4 web search interfaces
- Rated aesthetics and usability again
- No correlation with performance!

So what?
- Users respond to interface beauty
- Users do not predict their own performance (process and outcome) accurately
- Designers cannot usefully predict user response through introspection, theory or asking their colleagues!

Time matters...
- [Chart: Error Scores for Regular Users of Software; series "Friendly" and "Unfriendly"; x-axis: trial days 1 to 10; y-axis: 0 to 120]

So design stops being important?
- NO…it remains important….
- [Chart: series Friendly and Unfriendly over trials 1 to 12, with a transfer trial and a stress trial marked; y-axis: 0 to 120]

So what?
- User experience is dynamic
- User data is the best indicator of interaction quality….REPEAT THIS TO SELF DAILY!!!!!
- To be valid and reliable, the user data must reflect all aspects of the user experience: P-O-A
  - Most evaluations miss this
- The targets are moving….user experience is growing daily in web environments

Genres in information space
- Users have expectations of information spaces
- Documents have genres
- E-business is “shopping”
- A website is a website is a website….
- Expectations activate mental models which drive what users see and interpret

What does a home page look like?
- Dillon and Gushrowski (2000)
- We analyzed a sample of 100 home pages for features
- Then tested 8 candidate pages manipulating the most common or uncommon features of existing pages
- New users were asked to rate the pages they thought were ‘best’
- Significant positive correlation resulted

| Element | Total (of 57) | Total by % | % found on initial sampling |
|---|---|---|---|
| Title | 55 | 96 | 71 |
| E-mail address (M*) | 49 | 86 | 82 |
| Update date | 48 | 84 | 39 |
| Table of contents (L**) | 42 | 74 | 11 |
| Create date | 41 | 72 | 20 |
| External links (M) | 39 | 68 | 72 |
| Welcome message (M) | 38 | 67 | 51 |
| 1-4 Graphics (M) | 34 | 60 | 52 |
| Photographs | 32 | 56 | 42 |
| Brief bio | 32 | 56 | 49 |
| Text-only option (L) | 26 | 46 | 2 |
| 5-9 Graphics | 22 | 39 | 31 |
| Site map | 14 | 25 | 4 |
| Guestbook (L) | 11 | 19 | 16 |
| Lists | 9 | 1 | 33 |
| Animation | 8 | 14 | 37 |
| Tables | 7 | 12 | 37 |
| Frames (L) | 7 | 12 | 11 |
| Sound | 7 | 12 | 5 |
| Image map | 5 | 9 | 4 |
| Counter | 2 | 4 | 39 |
| Advertisements | 0 | 0 | 33 |
| Back to top button (L) | 0 | 0 | 17 |

- Also listed, each with a single value of 1: Thumbnails of images; 10 or more Graphics (M)
- */** Denotes inclusion in the most-used (M) / least-used (L) element exercise

User rankings of the 8 candidate pages

| Page # | M | Counts across ranks 1st to 8th (empty cells not recoverable) |
|---|---|---|
| 1 | 2.1 | 30, 7, 13, 2, 2 |
| 2 | 3.6 | 7, 14, 7, 10, 10, 2 |
| 3 | 3.5 | 7, 10, 11, 13, 9, 7 |
| 4 | 4.0 | 3, 9, 11, 15, 8, 8, 3 |
| 5 | 5.0 | 4, 8, 4, 7, 6, 13, 5, 10 |
| 6 | 4.5 | 5, 8, 8, 6, 8, 9, 9, 4 |
| 7 | 6.6 | 1, 3, 1, 8, 5, 21, 18 |
| 8 | 6.7 | 6, 6, 4, 18, 22 |

- Unplaced values from the slide: 1, 7, 1, 2
- Page # reflects features; number reflects user ranking
- Correlation between features and user ranking: r=0.95, d.f.=6, p<.01

Implications
- Expectations for digital information spaces are forming quickly
- Violation of expectancy impacts initial user ratings
- Full report online at:
http://memex.lib.indiana.edu/adillon/genre.html

Maximizing your evaluations:
- Measure the 3 aspects of UE
- Design user tests that capture multiple sources of data
  - Process, Outcome and Response
  - Protocols, screen capture, attitude, speed, freeform answers
- Don’t rely on gurus or guidelines!
- A little data goes a long way!

Example web site protocol
- 1.32: “What do I choose here?....looks like there is no direct link....and I don’t like the colors here, too bright...er....” (SELECTS TEACHING)..
- 1.39: “‘teaching and courses’ sounds right” (SCREEN CHANGES)..
- 1.41: “oh this is all about missions and stuff...hang on....” (HITS BACK BUTTON)
- 1.48: “well.....that looks the best of these, you know.”
- Callouts on the slide: User guesses; Negative comments; Navigation strategy

Biggest user complaints in our lab
- Poor content
- Slow loading
- Poor aesthetics
- Unclear menu options
  - Menus with example sub-items much preferred and lead to more efficient use
- Too much clicking and “forced” navigation
- No site map
- Poor search facilities
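
One way to act on the advice to capture several sources of data is to check whether what users say they prefer tracks how they actually perform, since the talk reports that the two can diverge. The sketch below computes Spearman's rank correlation for two rankings without ties, in plain Python. The function name and the sample rankings are hypothetical and are not data from the studies cited in the talk.

```python
def spearman_rho(rank_a: list[int], rank_b: list[int]) -> float:
    """Spearman's rank correlation for two rankings without ties.

    Uses rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1)), where d is the
    difference between the two ranks given to the same item.
    """
    if len(rank_a) != len(rank_b):
        raise ValueError("rankings must have the same length")
    n = len(rank_a)
    if n < 2:
        raise ValueError("need at least two items")
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical ranks for five interfaces (1 = best).
performance_rank = [1, 2, 3, 4, 5]   # from measured task results
preference_rank = [2, 1, 4, 3, 5]    # from user ratings

print(round(spearman_rho(performance_rank, preference_rank), 2))  # 0.8
```

A value near 1 would suggest that preference follows performance, and a value near 0 that the two are unrelated. With tied ranks this shortcut formula no longer holds, so a statistics library would be the safer choice.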