Lecture 13 – GPU Architectures

advertisement

CS6461 – Computer Architecture

Spring 2015

Morris Lancaster – Instructor

Adapted from Professor Stephen Kaisler’s Notes

Lecture 13

Graphics Processing Units

(GPUs)

Early Graphics

•

•

•

•

Vector graphics (1960s)

Based on the oscilloscope

Consist of electron gun + phosphor display

Examples:

– SketchPad

– Asteroids

– Pong

• Problems:

– No color

– Limited objects

– Crude images

3/22/2016

CSCI6461: Computer Architecture

13-2

Raster Displays

• Represent an image with a

“framebuffer”

– A 2D array of pixels

• Requires a RAMDAC:

– Random Access Memory

Digital-to-Analog Converter

• Translates the framebuffer into

a video signal

• Can be used to display any

image (in theory)

• Early Games:

– Sprite based rendering (late

1980s-early 1990s)

– 'Blit' rectangular subregions

quickly to animate objects

– Later games added scrolling

backgrounds

– Examples: Duke Nukem,

Commander Keen

3/22/2016

CSCI6461: Computer Architecture

13-3

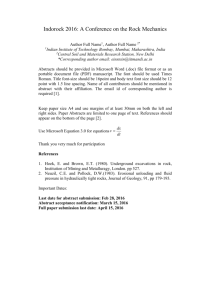

NVIDIA GPU Card

3/22/2016

CSCI6461: Computer Architecture

13-4

What is a GPU?

• A specialized processor oriented to performing

graphics operations very fast

–

–

–

–

Highly parallel vs. serial

Many execution units/slower clock vs. few EUs/higher clock

Much deeper pipelines

Mostly deterministic in their computations

• GPUs are fast!!

– 3.0 GHz dual-core Pentium4: 24.6 GFLOPS

– NVIDIA GeForceFX 7800: 165 GFLOPs

– Advanced memory interfaces for shifting data

• Growth:

– CPUs: 1.4× annual growth

– GPUs: 1.7×(pixels) to 2.3× (vertices) annual growth

3/22/2016

CSCI6461: Computer Architecture

13-5

Why GPU?

To provide a separate dedicated graphics computation

and rendering resource including a graphics processor

and memory.

To relieve some of the burden of the main system

resources, namely the Central Processing Unit, Main

Memory, and the System Bus, which would otherwise

get saturated with graphical operations and I/O requests.

3/22/2016

CSCI6461: Computer Architecture

13-6

Why Are GPUs So Fast?

• Entertainment Industry has driven the economy of these chips?

– Males age 15-35 buy $10B in video games / year

• Moore’s Law ++

• Simplified design (stream processing)

• Single-chip designs

3/22/2016

CSCI6461: Computer Architecture

13-7

GPU: a Multithreaded Coprocessor

Programming Model: Single Instruction

Multiple Thread (SIMT)

3/22/2016

CSCI6461: Computer Architecture

13-8

GPU: a Multithreaded Coprocessor

• Avoid divergent branches

• Threads of single SM must be executing the same

code

• Code that branches heavily and unpredictably will

execute slowly

• Threads shoud be independent as much as

possible

• Synchronization and communication can be done

efficiently only for threads of single multiprocessor

3/22/2016

CSCI6461: Computer Architecture

13-9

Modern GPU Pipeline

• Input Assembler

• Takes in 1D vertex data from

up to 8 input streams

• Converts data to a canonical

format

• Supports a mechanism that

allows the IA to effectively

replicate an object n times instancing

3/22/2016

CSCI6461: Computer Architecture

13-10

Modern GPU Pipeline - I

• Vertex Shader:

– Receives a stream of vertices in object space with all their

associated information (normals, texture coordinates, per vertex

color etc) from the CPU interface

– Outputs a stream of vertices in screen/clip space

– VS and other programmable stages share a common feature set

that includes:

• an expanded set of floating-point, integer, control, an

• memory read instructions allowing access to up to 128 memory

buffers (textures) and 16 parameter (constant) buffers - common

core

– No new vertices are created in this stage

– No vertices are discarded

3/22/2016

CSCI6461: Computer Architecture

13-11

Modern GPU Pipeline - II

• Geometry Shader:

– Geometry information becomes raster information (screen space

geometry is the input, pixels are the output)

– Takes the vertices of a single primitive (point, line segment, or

triangle) as input and generates the vertices of zero or more

primitives

– Additional vertices can be generated on-the-fly , allowing

displacement mapping

– Geometry shader has the ability to access the adjacency

information

– This enables implementation of some new powerful algorithms :

• Realistic fur rendering

• NPR rendering

3/22/2016

CSCI6461: Computer Architecture

13-12

Modern GPU Pipeline - III

• Clip/Project/PreRasterize

• Handles following operations:

- Clipping

– Copies a subset of the vertex

information to up to 4 1D

output buffers in sequential

order

– Data conversion and packing

can be implemented by a GS

program

– Prior to rasterization,

triangles that are backfacing

or are located outside the

viewing frustrum are rejected

– Some GPUs also do some

hidden surface removal at

this stage

3/22/2016

- Culling

- Perspective divide

- View port transform

- Primitive set-up

- Scissoring

- Depth offset

- Depth processing like

hierarchical-z

- Fragment generation

CSCI6461: Computer Architecture

13-13

Modern GPU Pipeline - IV

• Pixel Shader:

– Each fragment provided by Geometry Shader is fed into

fragment processing as a set of attributes (position, normal,

texcoord, etc), which are used to compute the final color for this

pixel

– The computations taking place here include texture mapping and

math operations

– Typically the bottleneck in modern applications

– Produces a single output fragment consisting of 1-8 attribute

values and an optional depth value

– If the fragment is supposed to be rendered, it is output to 8

render targets

– Each target represent a different representation of the scene

3/22/2016

CSCI6461: Computer Architecture

13-14

Modern GPU Pipeline

• Output Merger:

– Input is a fragment from the pixel shader

– Fragment colors provided by the previous stage are written to

the framebuffer

• Used to be the biggest bottleneck before fragment processing took

over

– Uses a single unified depth/stencil buffer to specify the bind

points for this buffer and 8 other render targets

• Degree of multiple rendering enhanced to 8

• Before the final write occurs, some fragments are rejected by the

zbuffer, stencil and alpha tests

– On modern GPUs, z and color are compressed to reduce

framebuffer bandwidth (but not size)

3/22/2016

CSCI6461: Computer Architecture

13-15

Vertex and Fragment Shaders: Example

3/22/2016

CSCI6461: Computer Architecture

13-16

GPU Applications: Bump/Displacement mapping

Height map

Diffuse light without bump

3/22/2016

Diffuse light with bump

CSCI6461: Computer Architecture

13-17

GPU Applications: Volume texture mapping

3/22/2016

CSCI6461: Computer Architecture

13-18

GPU Applications: Soft Shadows

3/22/2016

CSCI6461: Computer Architecture

13-19

Modern GPU

• Vector Processor

– Operates on 4 tuples

• Position

• Color

• Texture Coordinates

( x, y, z, w )

( red, green, blue, alpha )

( s, t, r, q )

– 4 Tuple Ops performed in 1 clock cycle

• How many processing units?

– Hundreds plus

• How many ALUs?

– Hundreds to Thousands

• Do you need a cache?

– No! Use space for ALUs

• What kind of memory?

– Lots, very fast.

3/22/2016

CSCI6461: Computer Architecture

13-20

NVIDIA GeForce 6800 3D Pipeline

Vertex

Triangle Setup

Z-Cull

Shader Instruction Dispatch

Fragment

L2 Tex

Fragment Crossbar

3/22/2016

Memory

Partition

Memory

Partition

Composite

Memory

Partition

CSCI6461: Computer Architecture

Memory

Partition

13-21

What Are GPUs Good For?

• Everything you can get it to do efficiently for you!!!

–

–

–

–

Physics

Collision Detection

AI

And yes, graphics too…

• GPUs mostly do stuff like rendering images.

• This is done through mostly floating point arithmetic

– the same stuff people use supercomputing for!

3/22/2016

CSCI6461: Computer Architecture

13-22

GPU versus Fastest Computers

ref: http://www.llnl.gov/str/JanFeb05/Seager.html

3/22/2016

CSCI6461: Computer Architecture

13-23

GPU vs. CPU: NVIDIA 280 vs. Intel i7 860

CPU1

GPU

Registers

16,384 (32-bit) /

multi-processor3

128 reservation stations

Peak memory bandwidth

141.7 Gb/sec

21 Gb/sec

Peak GFLOPs

562 (float)/

77 (double)

50 (double)

Cores

240

4/8 (hyperthreaded)

Processor Clock (MHz)

1296

2800

Memory

1Gb

16Gb

Shared memory

16Kb/TPC2

N/A

Virtual memory

None

3/22/2016

CSCI6461: Computer Architecture

13-24

GPU vs. CPU Performance

3/22/2016

CSCI6461: Computer Architecture

13-25

Graphics in the System

3/22/2016

CSCI6461: Computer Architecture

13-26

Programming GPUs

• GPUs – in the olden days – used to be hard to

program:

– using a graphics standard like OpenGL (which is mostly

meant for rendering), or

– getting fairly deep into the graphics rendering pipeline.

• To use a GPU to do general purpose number

crunching, you had to make your number crunching

pretend to be graphics.

– This was hard. So most people didn’t bother.

• The power and flexibility of GPUs makes them an

attractive platform for general-purpose computation

– Example applications range from in-game physics simulation

to conventional computational science

– Goal: make the inexpensive power of the GPU available to

developers as a sort of computational coprocessor

3/22/2016

CSCI6461: Computer Architecture

13-27

GPGPU programming model

• The GPU is viewed as a compute device that:

– Is a coprocessor (slave) to the CPU (host)

– Has its own DRAM (device memory) but no virtual memory

• Kernel is a CPU-callable function. Thread is an instance of a kernel.

• GPU runs many threads in parallel.

Device

Host

(CPU)

(GPU)

Kernel

Threads

(instances of

the kernel)

PCIe

Device

Memory

3/22/2016

CSCI6461: Computer Architecture

13-28

GPU <-> CPU Data Transfer

• GPUs and CPUs communicate via a PCIe bus

– This communication is expensive and should be minimized for target

applications

• Graphics applications usually require

– Initial data to be sent from CPU to GPU

– Single transfer of processed data from GPU to CPU

• General purpose computations usually require

– Multiple transfers between CPU and GPU (since conditional checks

on CPU)

– Possibility of saturating the PCIe bus and reducing the achievable

performance

3/22/2016

CSCI6461: Computer Architecture

13-29

GPU Threads vs. CPU Threads

• GPU threads:

– Lightweight, small creation and scheduling overhead,

extremely fast hardware context switching

– Need to issue 1000s of GPU threads to hide global memory

latencies (600-800 cycles)

• CPU threads:

– Heavyweight, large scheduling overhead, slow context

switching

• Multi-GPU usage requires invocation of multiple CPU

threads

– Each CPU thread creates a GPU context

– Context swapping is required for a CPU thread to access GPU

memory allocated by another CPU thread

3/22/2016

CSCI6461: Computer Architecture

13-30

GPU Memory Space

3/22/2016

CSCI6461: Computer Architecture

13-31

GPU Memory Space

• Each thread runs on a SP and has:

– R/W per-thread registers (on-chip)

• Limit usage (max 16K/MP)

– R/W per-thread local memory (off)

– R/W per-block shared memory (on)

• Need to avoid bank conflicts

– R/W per-grid global memory (off)

• Not cached, 600-800 cycle read

– Latency hidden by parallelism and fast context switches

• Main means for data transfer from host and device

– RO per-grid cached constant

and texture memory (off)

• The host can R/W global, constant and texture

memories (visible to all threads)

3/22/2016

CSCI6461: Computer Architecture

13-32

But, GPUs Are Difficult To Use as GPs

• GPUs designed for & driven by video games

– Programming model unusual; map general-purpose data

structures to graphics structures

– Programming idioms tied to computer graphics

– Programming environment tightly constrained

• Underlying architectures are:

–

–

–

–

Inherently parallel

Rapidly evolving (even in basic feature set!)

Largely proprietary

Lack random read/write to memory

• Not a Panacea!!

– Can’t simply “port” CPU code!

– GPUs are fast because they are specialized

• Poorly suited to sequential, “pointer-chasing” code

– Missing support for some basic functionality

• E.g. double precision, bitwise operations, indexed write

3/22/2016

CSCI6461: Computer Architecture

13-33

GPGPU Stream Operations

• Map: apply a function to every element in a

stream

• Reduce: use a function to reduce a stream to a

smaller stream (often 1 element)

• Scatter/gather: indirect read and write

• Filter: select a subset of elements in a stream

• Sort: order elements in a stream

• Search: find a given element, nearest neighbors,

etc

3/22/2016

CSCI6461: Computer Architecture

13-34

GPGPU Challenges

• The Killer App

• Programming models and

tools

• GPU in tomorrow’s

computer?

• Data conditionals

• Relationship to other

parallel hw/sw

3/22/2016

• Managing rapid change in

hw/sw (roadmaps)

• Performance evaluation and

cliffs

• Philosophy of faults and

lack of precision

• Broader toolbox for

computation / data

structures

• Wedding graphics and

GPGPU techniques

CSCI6461: Computer Architecture

13-35

Programming GPGPUs

• Proprietary programming language or extensions

– NVIDIA: Compute Unified Device Architecture (CUDA) (C/C++)

– AMD/ATI: StreamSDK/Brook+ (C/C++)

• OpenCL (Open Computing Language): an industry

standard for doing number crunching on GPUs.

• Portland Group Inc (PGI) Fortran and C compilers with

accelerator directives;

– PGI CUDA Fortran (Fortran 90 equivalent of NVIDIA’s CUDA C).

3/22/2016

CSCI6461: Computer Architecture

13-36

Processing Flow

3/22/2016

CSCI6461: Computer Architecture

13-37

Processing Flow

3/22/2016

CSCI6461: Computer Architecture

13-38

Processing Flow

3/22/2016

CSCI6461: Computer Architecture

13-39

To Compute, We Need To

• Allocate memory that will be used for the computation (variable

declaration and allocation)

• Read the data that we will compute on (input)

• Specify the computation that will be performed

• Write to the appropriate device the results (output)

But, the GPU is a specialized computing engine:

• Need to allocate space in the GPU’s memory for the variables.

• The GPU does not have I/O devices

– need to copy the input data from the memory in the host computer

into the memory in the GPU, using the variable allocated in the

previous step.

• Need to specify code to execute.

• Must copy the results back to the memory in the host computer.

3/22/2016

CSCI6461: Computer Architecture

13-40

What Executes on the GPU?

• Kernel:

– A simple C function

– Executes in parallel with other instances on other cores

– The keyword __global__ tells the compiler to make a function

a kernel (and compile it for the GPU, instead of the CPU)

• How to increase parallelism:

– More independent work within a thread (warp)

– More concurrent threads

3/22/2016

CSCI6461: Computer Architecture

13-41

Requirements for GPU Performance

• Expose sufficient parallelism

– Large number of independent instructions

– Arithmetic pipes should accommodate many independent

instructions

– Memory systems: keep many requests in flight to saturate

bandwidth

• Coalesce memory access

• Coherent execution within warp

3/22/2016

CSCI6461: Computer Architecture

13-42

What Do Programmers Need To Do?

• How is every instance of the kernel going to know which

piece of data it is working on?

• Programmers need to specify:

– The grid size: The size and shape of the data that the program

will be working on

– The block size: The block size indicates the sub-area of the

original grid that will be assigned to an MP (a set of stream

processors that share local memory)

3/22/2016

CSCI6461: Computer Architecture

13-43

Host

Device

Grid 1

Kernel

1

Block

(0, 0)

Block

(1, 0)

Block

(2, 0)

Block

(0, 1)

Block

(1, 1)

Block

(2, 1)

Grid 2

Kernel

2

Block (1, 1)

3/22/2016

Thread

(0, 0)

Thread

(1, 0)

Thread

(2, 0)

Thread

(3, 0)

Thread

(4, 0)

Thread

(0, 1)

Thread

(1, 1)

Thread

(2, 1)

Thread

(3, 1)

Thread

(4, 1)

Thread

(0, 2)

Thread

(1, 2)

Thread

(2, 2)

Thread

(3, 2)

Thread

(4, 2)

CSCI6461: Computer Architecture

Ref: “NVIDIA CUDA Programming Guide” version 1.1

Threads: Grids and Blocks

13-44

Threads: Grids and Blocks

• A kernel is executed as a grid of thread blocks (aka

blocks)

• A thread block is a batch of threads that can

cooperate with each other by:

– Synchronizing their execution

• Diverging execution results in performance loss

– Efficiently sharing data through a low latency shared

memory

• Two threads from two different blocks cannot

cooperate

Ref: “NVIDIA CUDA Programming Guide” version 1.1

3/22/2016

CSCI6461: Computer Architecture

13-45

Threads and blocks have IDs

So each thread can identify

what data they will operate on

Block ID: 1D or 2D

Thread ID: 1D, 2D, or 3D

Simplifies memory

addressing when processing

multidimensional data

Image processing

Solving PDEs on volumes

Other problems with

underlying 1D, 2D or 3D

geometry

3/22/2016

Device

Grid 1

Block

(0, 0)

Block

(1, 0)

Block

(2, 0)

Block

(0, 1)

Block

(1, 1)

Block

(2, 1)

Block (1, 1)

Thread

(0, 0)

Thread

(1, 0)

Thread

(2, 0)

Thread

(3, 0)

Thread

(4, 0)

Thread

(0, 1)

Thread

(1, 1)

Thread

(2, 1)

Thread

(3, 1)

Thread

(4, 1)

Thread

(0, 2)

Thread

(1, 2)

Thread

(2, 2)

Thread

(3, 2)

Thread

(4, 2)

CSCI6461: Computer Architecture

13-46

Ref: “NVIDIA CUDA Programming Guide” version 1.1

Block and Thread IDs

• GPU consists of “multiprocessors”, each of which has

many processors

• A kernel is executed as a grid of blocks

• Thread block is a batch of threads that cooperate with

each other by:

– Synchronizing their execution

• Diverging execution results in performance loss

– Efficiently sharing data through a low latency shared memory

• All threads of a block reside on the same multiprocessor

• Number of blocks a multiprocessor can process at once

depends on register and shared memory usage per

thread

3/22/2016

CSCI6461: Computer Architecture

13-47

Ref: “NVIDIA CUDA Programming Guide” version 1.1

Scheduling in GPUs

C Example

int A[2][4];

for(i=0;i<2;i++)

convert into CUDA

{

for(j=0;j<4;j++)

{

// define threads

A[i][j]++;

}

}

3/22/2016

int A[2][4];

kernelF<<<(2,1),(4,1)>>>(A);

__device__ kernelF(A)

{

i = blockIdx.x;

j = threadIdx.x;

A[i][j]++;

}

// all threads run same kernel

// each thread block has its id

// each thread has its id

// each thread has a different i

and j

CSCI6461: Computer Architecture

13-48

13-48

Thread Hierarchy

thread 3 of block 1 operates

on element A[1][3]

int A[2][4];

kernelF<<<(2,1),(4,1)>>>(A);

__device__ kernelF(A){

i = blockIdx.x;

j = threadIdx.x;

A[i][j]++;

}

3/22/2016

3/22/2016

// define threads

// all threads run same kernel

// each thread block has its id

// each thread has its id

// each thread has a

different i and j

CSCI6461:Computer

Computer Architecture

13-49

CSCI6461:

Architecture

13-49

Thread Execution

int A[2][4];

kernelF<<<(2,1),(4,1)>>>(A);

__device__ kernelF(A)

{

i = blockIdx.x;

j = threadIdx.x;

A[i][j]++;

}

mv.u32 %r0, %ctaid.x

mv.u32 %r1, %ntid.x

mv.u32 %r2, %tid.x

mad.u32 %r3, %r2, %r1, %r0

ld.global.s32 %r4, [%r3]

add.s32 %r4, %r4, 1

st.global.s32 [%r3], %r4

3/22/2016

// r0 = i = blockIdx.x

// r1 = "threads-per-block"

// r2 = j = threadIdx.x

// r3 = i * "threads-per-block" + j

// r4 = A[i][j]

// r4 = r4 + 1

// A[i][j] = r4

CSCI6461: Computer Architecture

13-50

NVidia’s CUDA

• Compute Unified Device Architecture-CUDA

– Elegant solution to problem of expressing parallelism

– Not all algorithms, but enough to matter

• CUDA’s design goals

– extend a standard sequential programming language,

specifically C/C++,

– focus on the important issues of parallelism—how to craft

efficient parallel algorithms—rather than grappling with the

mechanics of an unfamiliar and complicated language.

• minimalist set of abstractions for expressing parallelism

• highly scalable parallel code that can run across tens of thousands

of concurrent threads and hundreds of processor cores.

– A thread is associated with each data element

3/22/2016

CSCI6461: Computer Architecture

13-51

OpenCL

• Open Computing Language

• Open standard developed by Apple:

– Now, maintained by the Khronos Group, consortium of many

companies (including NVIDIA, AMD and Intel, but also lots of

others)

• Initial version of OpenCL standard released in Dec

2008.

• Many companies will create their own implementations.

• Apple was first to market, with an OpenCL

implementation included in Mac OS X v10.6 (“Snow

Leopard”) in 2009.

3/22/2016

CSCI6461: Computer Architecture

13-52

OpenCL vs. CUDA

• CUDA uses early code binding

– Code is compiled with normal C/C++/FORTRAN (beta)

source code

• Need CUDA occupancy calculator to determine number of threads

based on resource utilization

– Library support: BLAS & FFT & DPT

• OpenCL

– Late binding of OpenCL code to executable

• OpenCL compiler/linker embedded within application

• No need for CUDA occupancy calculator

– Only supports C

– No libraries

3/22/2016

CSCI6461: Computer Architecture

13-53

Matrix Multiplication

Ref: Multicores, Multiprocessors, and Clusters; University of Arizona

3/22/2016

CSCI6461: Computer Architecture

13-54

Matrix Multiplication

• For a 4096x4096 matrix multiplication

– Matrix C will require calculation of 16,777,216 matrix cells.

– On the GPU each cell is calculated by its own thread.

– On a general purpose processor we can only calculate one cell

at a time.

• We can have 23,040 active threads (GTX570), which

means we can have this many matrix cells calculated in

parallel.

• Each thread exploits the GPUs fine granularity by

computing one element of Matrix C.

• Sub-matrices are read into shared memory from global

memory to act as a buffer and take advantage of GPU

bandwidth.

3/22/2016

CSCI6461: Computer Architecture

13-55

Solving Systems of Equations

Ref: Multicores, Multiprocessors, and Clusters; University of Arizona

3/22/2016

CSCI6461: Computer Architecture

13-56

Additional Material

3/22/2016

CSCI6461: Computer Architecture

13-57

Simple Example

#include <stdio.h>

#define SIZEOFARRAY 64

extern void fillArray(int *a,int size);

/* The main program */

int main(int argc,char *argv[])

{

/* Declare the array that will be modified by the GPU */

int a[SIZEOFARRAY];

int i;

/* Initialize the array to 0s */

for(i=0;i < SIZEOFARRAY;i++) {

a[i]=i;

}

/* Print the initial array */

printf("Initial state of the array:\n");

for(i = 0;i < SIZEOFARRAY;i++) {

printf("%d ",a[i]);

}

printf("\n");

/* Call the function that will in turn call the function

in the GPU that will fill the array */

fillArray(a,SIZEOFARRAY);

/* Now print the array after calling fillArray */

printf("Final state of the array:\n");

for(i = 0; i < SIZEOFARRAY; i++) {

printf("%d ",a[i]);

}

printf("\n");

return 0;

}

3/22/2016

CSCI6461: Computer Architecture

13-58

Function in the GPU: Simple.cu

• simple.cu contains two functions

– fillArray(): A function that will be executed on the host and which

takes care of:

•

•

•

•

•

•

Allocating variables in the global GPU memory

Copying the array from the host to the GPU memory

Setting the grid and block sizes

Invoking the kernel that is executed on the GPU

Copying the values back to the host memory

Freeing the GPU memory

– cu_fillArray()

• This is the kernel that will be executed in every stream processor in

the GPU

• It is identified as a kernel by the use of the keyword: __global__

• This function uses the built-in variables

– blockIdx.x and

– threadIdx.x

to identify a particular position in the array

3/22/2016

CSCI6461: Computer Architecture

13-59

fillArray – Part 1

#define BLOCK_SIZE 32

extern "C" void fillArray(int *array,int arraySize)

{

/* a_d is the GPU counterpart of the array that exists on the host

memory */

int *array_d;

cudaError_t result;

/* allocate memory on device */

/* cudaMalloc allocates space in the memory of the GPU card */

result = cudaMalloc((void**)&array_d,sizeof(int)*arraySize);

/* copy the array into the variable array_d in the device */

/* The memory from the host is being copied to the corresponding

variable in the GPU global memory */

result = cudaMemcpy(

array_d,array

sizeof(int)*arraySize,

cudaMemcpyHostToDevice);

3/22/2016

CSCI6461: Computer Architecture

13-60

fillArray – Part 2

/* execution configuration... */

/* Indicate the dimension of the block */

dim3 dimblock(BLOCK_SIZE);

/* Indicate the dimension of the grid in blocks */

dim3 dimgrid(arraySize/BLOCK_SIZE);

/* actual computation: Call the kernel, the function that is */

/* executed by each and every processing element on the GPU card */

cu_fillArray<<<dimgrid,dimblock>>>(array_d);

/* read results back: */

/* Copy the results from the GPU back to the memory on the host */

result = cudaMemcpy(

array,

array_d,sizeof(int)*arraySize

cudaMemcpyDeviceToHost);

/* Release the memory on the GPU card */

cudaFree(array_d);

}

3/22/2016

CSCI6461: Computer Architecture

13-61

cu_fillArray

global__ void cu_fillArray(int *array_d)

{

int x;

/* blockIdx.x is a built-in variable in CUDA

that returns the blockId in the x axis

of the block that is executing this block of code

threadIdx.x is another built-in variable in CUDA

that returns the threadId in the x axis

of the thread that is being executed by this

stream processor in this particular block

*/

x=blockIdx.x*BLOCK_SIZE+threadIdx.x;

array_d[x]+=array_d[x];

}

3/22/2016

CSCI6461: Computer Architecture

13-62

// main routine that executes on the host

int main(void)

{

float *a_h, *a_d;

// Pointer to host & device arrays

const int N = 10;

// Number of elements in arrays

size_t size = N * sizeof(float);

a_h = (float *)malloc(size);

cudaMalloc((void **) &a_d, size);

// Allocate array on host

// Allocate array on device

// Initialize host array and copy it to CUDA device

for (int i=0; i<N; i++) a_h[i] = (float)i;

cudaMemcpy(a_d, a_h, size, cudaMemcpyHostToDevice);

// Do calculation on device:

int block_size = 4;

int n_blocks = N/block_size + (N%block_size == 0 ? 0:1);

square_array <<< n_blocks, block_size >>> (a_d, N);

// Retrieve result from device and store it in host array

cudaMemcpy(a_h, a_d, sizeof(float)*N, cudaMemcpyDeviceToHost);

// Print results

for (int i=0; i<N; i++) printf("%d %f\n", i, a_h[i]);

// Cleanup

free(a_h); cudaFree(a_d);

}

3/22/2016

CSCI6461: Computer Architecture

13-63

http://llpanorama.wordpress.com/200

8/05/21/my-first-cuda-program/

NVIDIA CUDA

OpenCL Example Part 1

// create a compute context with GPU device

context =

clCreateContextFromType(NULL, CL_DEVICE_TYPE_GPU, NULL, NULL,

NULL);

// create a command queue

queue = clCreateCommandQueue(context, NULL, 0, NULL);

// allocate the buffer memory objects

memobjs[0] = clCreateBuffer(context,

CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,

sizeof(float)*2*num_entries, srcA, NULL);

memobjs[1] = clCreateBuffer(context,

CL_MEM_READ_WRITE,

sizeof(float)*2*num_entries, NULL, NULL);

// create the compute program

program = clCreateProgramWithSource(context, 1,

&fft1D_1024_kernel_src, NULL, NULL);

3/22/2016

CSCI6461: Computer Architecture

13-64

OpenCL Example Part 2

// build the compute program executable

clBuildProgram(program, 0, NULL, NULL, NULL, NULL);

// create the compute kernel

kernel = clCreateKernel(program, "fft1D_1024", NULL);

// set the args values

clSetKernelArg(kernel, 0, sizeof(cl_mem), (void *)&memobjs[0]);

clSetKernelArg(kernel, 1, sizeof(cl_mem), (void *)&memobjs[1]);

clSetKernelArg(kernel, 2,

sizeof(float)*(local_work_size[0]+1)*16, NULL);

clSetKernelArg(kernel, 3,

sizeof(float)*(local_work_size[0]+1)*16, NULL);

// create N-D range object with work-item dimensions

// and execute kernel

global_work_size[0] = num_entries; local_work_size[0] = 64;

clEnqueueNDRangeKernel(queue, kernel, 1, NULL,

global_work_size, local_work_size, 0,

NULL, NULL);

3/22/2016

CSCI6461: Computer Architecture

13-65

OpenCL Example Part 3

// This kernel computes FFT of length 1024. The 1024 length

FFT is decomposed into calls to a radix 16 function,

another radix 16

// function and then a radix 4 function

__kernel void fft1D_1024 (__global float2 *in, __global

float2 *out,__local float *sMemx, __local float *sMemy)

{

int tid = get_local_id(0);

int blockIdx = get_group_id(0) * 1024 + tid;

float2 data[16];

// starting index of data to/from global memory

in = in + blockIdx;

out = out + blockIdx;

globalLoads(data, in, 64); // coalesced global reads

fftRadix16Pass(data); // in-place radix-16 pass

twiddleFactorMul(data, tid, 1024, 0);

3/22/2016

CSCI6461: Computer Architecture

13-66

OpenCL Example Part 4

// local shuffle using local memory

localShuffle(data, sMemx, sMemy, tid, (((tid & 15) * 65) +

(tid >> 4)));

// in-place radix-16 pass

fftRadix16Pass(data);

// twiddle factor multiplication

twiddleFactorMul(data, tid, 64, 4);

localShuffle(data, sMemx, sMemy, tid, (((tid >> 4) * 64) +

(tid & 15)));

// four radix-4 function calls

fftRadix4Pass(data);

// radix-4

fftRadix4Pass(data + 4); // radix-4

fftRadix4Pass(data + 8); // radix-4

fftRadix4Pass(data + 12); // radix-4

function

function

function

function

number

number

number

number

1

2

3

4

// coalesced global writes

globalStores(data, out, 64);

}

3/22/2016

CSCI6461: Computer Architecture

13-67

Dr. Dobb’s Rules for Multicore Programming

Think parallel.

• Approach all problems looking for the parallelism.

• Understand where parallelism is, and organize your

thinking to express it.

• Decide on the best parallel approach before other design

or implementation decisions. Learn to "Think Parallel."

3/22/2016

CSCI6461: Computer Architecture

13-68

Dr. Dobb’s Rules for Multicore Programming

Program using abstraction.

• Focus on writing code to express parallelism, but

avoid writing code to manage threads or processor

cores.

• Libraries, OpenMP, and Intel Threading Building

Blocks are all examples of using abstractions.

• Do not use raw native threads (pthreads, Windows

threads, Boost threads, and the like).

• Threads and MPI are the assembly languages for

parallelism. They offer maximum flexibility, but

require too much time to write, debug, and maintain.

• Your programming should be at a high-enough level

that your code is about your problem, not about

thread or core management.

3/22/2016

CSCI6461: Computer Architecture

13-69

Dr. Dobb’s Rules for Multicore Programming

Program in tasks (chores), not threads (cores).

• Leave the mapping of tasks to threads or processor

cores as a distinctly separate operation in your

program, preferably an abstraction you are using

that handles thread/core management for you.

• Create an abundance of tasks in your program, or a

task that can be spread across processor cores

automatically (such as an OpenMP loop).

• By creating tasks, you are free to create as many as

you can without worrying about oversubscription.

3/22/2016

CSCI6461: Computer Architecture

13-70

Dr. Dobb’s Rules for Multicore Programming

Design with the option to turn concurrency off.

• To make debugging simpler, create programs that can run without

concurrency. This way, when debugging, you can run programs first

with—then without—concurrency, and see if both runs fail or not.

• Debugging common issues is simpler when the program is not running

concurrently because it is more familiar and better supported by today's

tools.

• Knowing that something fails only when run concurrently hints at the

type of bug you are tracking down.

• If you ignore this rule and can't force your program to run in only one

thread, you'll spend too much time debugging. Since you want to have

the capability to run in a single thread specifically for debugging, it

doesn't need to be efficient.

• You just need to avoid creating parallel programs that require

concurrency to work correctly, such as many producer-consumer

models.

• MPI programs often violate this rule, which is part of the reason MPI

programs can be problematic to implement and debug.

3/22/2016

CSCI6461: Computer Architecture

13-71

Dr. Dobb’s Rules for Multicore Programming

Avoid using locks.

• Simply say "no" to locks. Locks slow programs, reduce

their scalability, and are the source of bugs in parallel

programs.

• Make implicit synchronization the solution for your

program.

• When you still need explicit synchronization, use atomic

operations.

• Use locks only as a last resort.

• Work hard to design the need for locks completely out of

your program.

3/22/2016

CSCI6461: Computer Architecture

13-72

Dr. Dobb’s Rules for Multicore Programming

Use tools and libraries designed to help with

concurrency.

• Don't "tough it out" with old tools.

• Be critical of tool support with regards to how it presents

and interacts with parallelism.

• Most tools are not yet ready for parallelism.

• Look for threadsafe libraries—ideally ones that are

designed to utilize parallelism themselves.

3/22/2016

CSCI6461: Computer Architecture

13-73

Dr. Dobb’s Rules for Multicore Programming

• Use scalable memory allocators.

• Threaded programs need to use scalable memory

allocators. Period.

• There are a number of solutions and I'd guess that

all of them are better than malloc().

• Using scalable memory allocators speeds up

applications by eliminating global bottlenecks,

reusing memory within threads to better utilize

caches, and partitioning properly to avoid cache line

sharing.

• SHK: Better yet, do not use a programming language

that does not have implicit memory allocation and

garbage collection, e.g., Java, CommonLisp, …

3/22/2016

CSCI6461: Computer Architecture

13-74

Dr. Dobb’s Rules for Multicore Programming

• Design to scale through increased workloads.

• The amount of work your program needs to handle

increases over time. Plan for that.

• Designed with scaling in mind, your program will handle

more work as the number of processor cores increase

• Every year, we ask our computers to do more and more.

• Your designs should favor using increases in parallelism

to give you advantages in handling bigger workloads in

the future.

3/22/2016

CSCI6461: Computer Architecture

13-75