Facebook - Data Freeway - Open Cloud Engine WIKI


Data Freeway :

Scaling Out to Realtime

Eric Hwang, Sam Rash

{ehwang,rash}@fb.com

Agenda

» Data at Facebook

» Data Freeway System Overview

» Realtime Requirements

» Realtime Components

› Calligraphus/Scribe

› HDFS use case and modifications

› Calligraphus: a ZooKeeper use case

› ptail

› Puma

» Future Work

Big Data, Big Applications / Data at Facebook

» Lots of data

› More than 500 million active users

› 50 million users update their statuses at least once each day

› More than 1 billion photos uploaded each month

› More than 1 billion pieces of content (web links, news stories, blog posts, notes, photos, etc.) shared each week

› Data rate: over 7 GB / second

» Numerous products can leverage the data

› Revenue related: Ads Targeting

› Product/User Growth related: AYML, PYMK, etc.

› Engineering/Operation related: Automatic Debugging

› Puma: streaming queries

Data Freeway System Diagram

Realtime Requirements

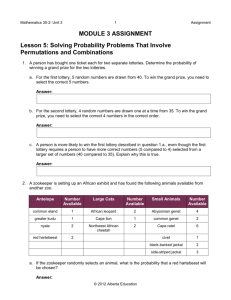

› Scalability: 10-15 GBytes/second

› Reliability: No single point of failure

› Data loss SLA: at most 0.01%

• For loss due to hardware, this means at most 1 out of 10,000 machines can lose data

› Delay of less than 10 sec for 99% of data

• Typically we see 2s

› Easy to use: as simple as 'tail -f /var/log/my-log-file'

Scribe

• Scalable distributed logging framework

• Very easy to use:

• scribe_log(string category, string message)

• Mechanics:

• Runs on every machine at Facebook

• Built on top of Thrift

• Collects log data into a set of destinations

• Buffers data on local disk if the network is down

• History:

• 2007: Started at Facebook

• 2008 Oct: Open-sourced
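
The buffering behaviour listed under Mechanics can be illustrated with a minimal sketch. This is not Scribe's implementation or its Thrift interface: `BufferingLogger`, the `send` callback, and the spool file layout are hypothetical names introduced here, and only the `scribe_log(category, message)` shape comes from the slide.

```python
import json
import os


class BufferingLogger:
    """Toy client-side logger: forward messages, spool to local disk when the network is down."""

    def __init__(self, send, spool_path):
        self.send = send              # hypothetical transport; raises ConnectionError when down
        self.spool_path = spool_path  # local file used as the on-disk buffer

    def scribe_log(self, category, message):
        """Same call shape as the slide's scribe_log(category, message)."""
        try:
            self.flush_spool()              # drain older entries first to keep ordering
            self.send(category, message)
        except ConnectionError:
            self._append(category, message)  # network down: buffer locally

    def _append(self, category, message):
        with open(self.spool_path, "a", encoding="utf-8") as f:
            f.write(json.dumps([category, message]) + "\n")

    def _rewrite(self, pending):
        with open(self.spool_path, "w", encoding="utf-8") as f:
            for entry in pending:
                f.write(json.dumps(entry) + "\n")

    def flush_spool(self):
        """Resend buffered entries; re-raises ConnectionError if the network is still down."""
        if not os.path.exists(self.spool_path):
            return
        with open(self.spool_path, encoding="utf-8") as f:
            pending = [json.loads(line) for line in f]
        while pending:
            category, message = pending[0]
            try:
                self.send(category, message)
            except ConnectionError:
                self._rewrite(pending)  # keep only what has not been delivered yet
                raise
            pending.pop(0)
        os.remove(self.spool_path)
```

In this sketch, messages logged while `send` fails accumulate in the spool file and are delivered in order on the first call after the transport recovers.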

Calligraphus

» What

› Scribe-compatible server written in Java

› emphasis on modular, testable code-base, and performance

» Why?

› extract simpler design from existing Scribe architecture

› cleaner integration with Hadoop ecosystem

• HDFS, ZooKeeper, HBase, Hive

» History

› In production since November 2010

› ZooKeeper integration since March 2011

HDFS : a different use case

» message hub

› add concurrent reader support and sync

› writers + concurrent readers form a pub/sub model

HDFS : add Sync

» Sync

› implemented in 0.20 (HDFS-200)

• partial chunks are flushed

• blocks are persisted

› provides durability

› lowers write-to-read latency

HDFS : Concurrent Reads Overview

» Without changes, stock Hadoop 0.20 does not allow access to the block being written

» Need to read the block being written for realtime apps in order to achieve < 10s latency

HDFS : Concurrent Reads Implementation

1. DFSClient asks Namenode for blocks and locations

2. DFSClient asks Datanode for length of block being written

3. DFSClient opens last block
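
The three steps above can be sketched with a toy in-memory model. The `Namenode` and `Datanode` classes and their method names are hypothetical stand-ins, not the HDFS client API. The model assumes the namenode knows the length of finalized blocks but reports `None` for the block still being written.

```python
from dataclasses import dataclass, field


@dataclass
class Datanode:
    """Toy datanode holding block bytes, including a block still being written."""
    blocks: dict = field(default_factory=dict)   # block_id -> bytearray

    def visible_length(self, block_id):
        return len(self.blocks[block_id])

    def read(self, block_id, length):
        return bytes(self.blocks[block_id][:length])


@dataclass
class Namenode:
    """Toy namenode: per file, an ordered list of (block_id, length or None if open)."""
    files: dict = field(default_factory=dict)       # path -> [(block_id, length), ...]
    locations: dict = field(default_factory=dict)   # block_id -> Datanode

    def get_block_locations(self, path):
        return [(b, n, self.locations[b]) for b, n in self.files[path]]


def read_file_being_written(namenode, path):
    # Step 1: ask the namenode for blocks and locations.
    located = namenode.get_block_locations(path)
    data = b""
    for block_id, length, datanode in located:
        if length is None:
            # Step 2: ask the datanode for the length of the block being written.
            length = datanode.visible_length(block_id)
        # Step 3: open the block (including the last one) and read up to that length.
        data += datanode.read(block_id, length)
    return data
```

Calling `read_file_being_written` again after the writer appends more bytes returns the longer content, which is the behaviour a tailing reader relies on.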

HDFS : Checksum Problem

» Issue: data and checksum updates are not atomic for last chunk

» 0.20-append fix:

› detect when data is out of sync with checksum using a visible length

› recompute checksum on the fly

» 0.22 fix

› last chunk data and checksum kept in memory for reads
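
The 0.20-append idea (detect a mismatch using the visible length, then recompute the checksum) can be sketched as follows. This is an illustration under stated assumptions, not the HDFS code: the function name `read_last_chunk` is hypothetical, and CRC32 via `zlib.crc32` is used here only as a convenient checksum.

```python
import zlib


def read_last_chunk(chunk_on_disk, stored_crc, visible_len):
    """Return (data, crc, recomputed) for the last, partially written chunk.

    chunk_on_disk: bytes of the last chunk currently on disk
    stored_crc:    checksum currently on disk (may lag or lead the data)
    visible_len:   number of bytes of this chunk that readers are allowed to see
    """
    # Never expose bytes past the visible length.
    visible = chunk_on_disk[:visible_len]
    if zlib.crc32(visible) == stored_crc:
        return visible, stored_crc, False
    # Data and checksum are out of sync because the writer did not update
    # them atomically: recompute the checksum on the fly for the visible bytes.
    return visible, zlib.crc32(visible), True
```

The `recomputed` flag is included only to make the two paths observable in this sketch.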

Calligraphus: Log Writer

[Diagram: Scribe categories (Category 1, Category 2, Category 3) → Calligraphus servers → HDFS]

How to persist to HDFS?

Calligraphus (Simple)

[Diagram: Scribe categories (Category 1, Category 2, Category 3) → Calligraphus servers → HDFS]

Number of categories × Number of servers = Total number of directories

Calligraphus (Stream Consolidation)

[Diagram: Scribe categories (Category 1, Category 2, Category 3) → Calligraphus servers, each with a Router and a Writer, coordinated via ZooKeeper → HDFS]

Number of categories = Total number of directories
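
A small worked example shows the difference between the two layouts. The category count uses the roughly 3000 categories quoted in the performance summary of this deck; the server count of 100 is a hypothetical figure chosen only for the arithmetic.

```python
def directories_simple(num_categories, num_servers):
    """Simple layout: every server writes its own directory per category."""
    return num_categories * num_servers


def directories_consolidated(num_categories):
    """Stream consolidation: one directory per category, regardless of server count."""
    return num_categories


categories = 3000   # order of magnitude quoted in the deck
servers = 100       # hypothetical, not from the source

print(directories_simple(categories, servers))   # 300000
print(directories_consolidated(categories))      # 3000
```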

ZooKeeper: Distributed Map

» Design

› ZooKeeper paths as tasks (e.g. /root/<category>/<bucket>)

› Canonical ZooKeeper leader elections under each bucket for bucket ownership

› Independent load management – leaders can release tasks

› Reader-side caches

› Frequent sync with policy db

[Diagram: ZooKeeper tree with Root at the top, category nodes A, B, C, D beneath it, and bucket nodes 1-5 under each category]
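
Per-bucket ownership through leader election can be sketched with an in-memory stand-in. No real ZooKeeper client is used here. The class `BucketElections` and its methods are hypothetical, and the rule that the lowest sequence number wins is borrowed from the standard ZooKeeper leader election recipe as an assumption about what "canonical" means on the slide.

```python
import itertools
from collections import defaultdict


class BucketElections:
    """In-memory model of one leader election per /root/<category>/<bucket> path."""

    def __init__(self):
        self._seq = itertools.count()
        # path -> {sequence number: writer name}, mimicking sequential child nodes
        self._candidates = defaultdict(dict)

    @staticmethod
    def _path(category, bucket):
        return f"/root/{category}/{bucket}"

    def join(self, category, bucket, writer):
        """Writer volunteers for a bucket and receives a sequence number."""
        seq = next(self._seq)
        self._candidates[self._path(category, bucket)][seq] = writer
        return seq

    def leave(self, category, bucket, writer):
        """Writer fails or releases the task, e.g. for load management."""
        path = self._path(category, bucket)
        self._candidates[path] = {
            s: w for s, w in self._candidates[path].items() if w != writer
        }

    def owner(self, category, bucket):
        """The candidate with the lowest sequence number owns the bucket."""
        cands = self._candidates[self._path(category, bucket)]
        return cands[min(cands)] if cands else None
```

When the current owner leaves, the next candidate in sequence order becomes the owner without any central coordinator, which is the property the design list relies on.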

ZooKeeper: Distributed Map

» Real-time Properties

› Highly available

› No centralized control

› Fast mapping lookups

› Quick failover for writer failures

› Adapts to new categories and changing throughput

Distributed Map: Performance Summary

» Bootstrap (~3000 categories)

› Full election participation in 30 seconds

› Identify all election winners in 5-10 seconds

› Stable mapping converges in about three minutes

» Election or failure response usually <1 second

› Worst case bounded in tens of seconds

Canonical Realtime Application

» Examples

› Realtime search indexing

› Site integrity: spam detection

› Streaming metrics

Parallel Tailer

• Why?

• Access data in 10 seconds or less

• Data stream interface

• Command-line tool to tail the log

• Easy to use: ptail -f cat1

• Support checkpoint: ptail -cp XXX cat1
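
The checkpoint idea can be illustrated on a single local file. This is a sketch of the concept only, not ptail itself: the function `tail_from_checkpoint` and the JSON checkpoint layout are hypothetical, and real ptail reads from HDFS rather than from a local path.

```python
import json
import os


def tail_from_checkpoint(log_path, checkpoint_path):
    """Return complete lines added since the last checkpoint and advance the checkpoint."""
    offset = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path, encoding="utf-8") as f:
            offset = json.load(f)["offset"]

    with open(log_path, "rb") as f:
        f.seek(offset)
        chunk = f.read()

    # Consume only complete lines; a partial trailing line waits for the next call.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("utf-8").splitlines()

    # Persist the new position so a restarted reader resumes where it left off.
    with open(checkpoint_path, "w", encoding="utf-8") as f:
        json.dump({"offset": offset + end}, f)
    return lines
```

A consumer that crashes and restarts with the same checkpoint file picks up at the stored offset, so it neither skips nor re-reads complete lines.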

Canonical Realtime ptail Application

Puma Overview

» realtime analytics platform

» metrics

› count, sum, unique count, average, percentile

» uses ptail checkpointing for accurate calculations in the case of failure

» Puma nodes are sharded by keys in the input stream

» HBase for persistence
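
Sharding by key and the simpler metrics listed above (count, sum, unique count, average) can be sketched in a few lines. This is not Puma's code: `shard_for`, `ShardAggregator`, and `process` are hypothetical names, CRC32 is used as the hash only for illustration, and state is kept in memory rather than in HBase.

```python
import zlib
from collections import defaultdict


def shard_for(key, num_shards):
    """Stable key -> shard mapping, so all events for a key reach the same node."""
    return zlib.crc32(key.encode("utf-8")) % num_shards


class ShardAggregator:
    """Running metrics for the keys owned by one shard."""

    def __init__(self):
        self.count = defaultdict(int)
        self.total = defaultdict(float)
        self.users = defaultdict(set)

    def add(self, key, value, user):
        self.count[key] += 1
        self.total[key] += value
        self.users[key].add(user)

    def metrics(self, key):
        n = self.count[key]
        return {
            "count": n,
            "sum": self.total[key],
            "unique": len(self.users[key]),
            "average": self.total[key] / n if n else None,
        }


def process(events, num_shards):
    """Route (key, value, user) events to their shard and aggregate them."""
    shards = [ShardAggregator() for _ in range(num_shards)]
    for key, value, user in events:
        shards[shard_for(key, num_shards)].add(key, value, user)
    return shards
```

Because each key lives on exactly one shard, per-key metrics need no cross-node coordination in this sketch.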

Puma Write Path

Puma Read Path

Summary - Data Freeway

» Highlights:

› Scalable: 4-5 GBytes/second

› Reliable: No single point of failure; < 0.01% data loss with hardware failures

› Realtime: delay < 10 sec (typically 2s)

» Open-Source

› Scribe, HDFS

› Calligraphus/Continuous Copier/Loader/ptail (pending)

» Applications

› Realtime Analytics

› Search/Feed

› Spam Detection/Ads Click Prediction (in the future)

Future Work

» Puma

› Enhance functionality: add application-level transactions on HBase

› Streaming SQL interface

» Seekable Compression format

› for large categories, the files are 400-500 MB

› need an efficient way to get to the end of the stream

› Simple Seekable Format

• container with compressed/uncompressed stream offsets

• contains data segments which are independent virtual files
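
One way to picture such a container is a blob of independently compressed segments plus an index of compressed and uncompressed offsets. The sketch below is an assumption about the general shape, not the format the authors built: `write_segments`, `read_from`, and the index layout are hypothetical, and zlib stands in for whatever codec is used.

```python
import zlib


def write_segments(segments):
    """Compress each segment independently and build an offset index.

    Returns (blob, index) where index entries are
    (uncompressed_offset, compressed_offset, compressed_length).
    """
    blob = bytearray()
    index = []
    uncompressed_offset = 0
    for seg in segments:
        comp = zlib.compress(seg)
        index.append((uncompressed_offset, len(blob), len(comp)))
        blob += comp
        uncompressed_offset += len(seg)
    return bytes(blob), index


def read_from(blob, index, uncompressed_pos):
    """Return data from uncompressed_pos to the end, skipping earlier segments."""
    out = b""
    for i, (u_off, c_off, c_len) in enumerate(index):
        next_u = index[i + 1][0] if i + 1 < len(index) else None
        if next_u is not None and next_u <= uncompressed_pos:
            continue  # segment lies entirely before the position: no decompression
        data = zlib.decompress(blob[c_off:c_off + c_len])
        out += data[max(0, uncompressed_pos - u_off):]
    return out
```

A reader that wants the end of a large file only decompresses the final segment or segments, which is the efficiency the bullet list asks for.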

Fin

» Questions?