Colorless green ideas

advertisement

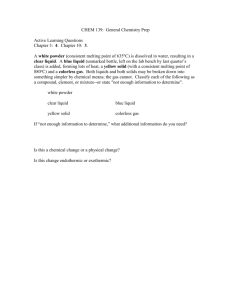

LING/C SC 581: Advanced Computational Linguistics Lecture Notes Jan 29th Today's Topics • • • • Britney Spears edit distance More on n-gram models Tregex class exercise Tregex homework Britney Spears edit distance • Data: Many ways to overfit the homework: • negative edit distance for doubling the 't' • 'a' for 'e' lowers cost • etc. • there is no simple and general formula … More on n-gram models Colorless green ideas • examples – (1) colorless green ideas sleep furiously – (2) furiously sleep ideas green colorless • Chomsky (1957): – . . . It is fair to assume that neither sentence (1) nor (2) (nor indeed any part of these sentences) has ever occurred in an English discourse. Hence, in any statistical model for grammaticalness, these sentences will be ruled out on identical grounds as equally `remote' from English. Yet (1), though nonsensical, is grammatical, while (2) is not. • idea One piece of supporting evidence: – (1) is syntactically valid, – (2) is word salad (1) pronounced with normal intonation (2) pronounced like a list of words … Colorless green ideas • Sentences: – (1) colorless green ideas sleep furiously – (2) furiously sleep ideas green colorless • Statistical Experiment (Pereira 2002) bigram language model wi-1 wi Part-of-Speech (POS) Tag Sequence • Chomsky's example: – colorless green ideas sleep furiously – JJ JJ NNS VBP RB (POS Tags) • Similar but grammatical example: – revolutionary new ideas appear infrequently – JJ JJ NNS VBP RB LSLT pg. 146 Penn Treebank (PTB) Corpus How is it used? • One million words of 1989 Wall Street Journal (WSJ) material • nearly 50,000 sentences (25 sections) • Training: (2–21) 39,832 sentences • Evaluation: (23) 2,416 sentences • Standard practice 0 training sections 2–21 90% evaluation 23 24 Stanford Parser • Stanford Parser: – a probabilistic PS parser trained on the Penn Treebank Stanford Parser • Stanford Parser: a probabilistic PS parser trained on the Penn Treebank Penn Treebank (PTB) • Corpus: word frequencies: Word POS colorless green Frequency Word POS 0 revolutionary JJ Frequency 6 NNP 33 NNP 2 JJ 19 NN 2 NN 5 JJ 1795 ideas NNS 32 NNP 1459 sleep VB 5 NNPS 2 NN 4 NN 1 VBP 2 ideas NNS 32 NNP 1 appear VB 55 RB 2 VBP 41 furiously new infrequently 0 Stanford Parser • Structure of NPs: – colorless green ideas – revolutionary new ideas Phrase [NP JJ JJ NNS] Frequency 1073 [NP NNP JJ NNS] 61 An experiment • examples – (1) colorless green ideas sleep furiously – (2) furiously sleep ideas green colorless • Question: – Is (1) the most likely permutation of these particular five words? Parsing Data • All 5! (=120) permutations of – colorless green ideas sleep furiously . Parsing Data • The winning sentence was: ① furiously ideas sleep colorless green . • after training on sections 02-21 (approx. 40,000 sentences) • sleep selects for ADJP object with 2 heads • adverb (RB) furiously modifies noun Parsing Data • The next two highest scoring permutations were: ② Furiously green ideas sleep colorless . ③ Green ideas sleep furiously colorless . sleep takes NP object sleep takes ADJP object Parsing Data • (Pereira 2002) compared Chomsky’s original minimal pair: 23. colorless green ideas sleep furiously 36. furiously sleep ideas green colorless Ranked #23 and #36 respectively out of 120 Parsing Data But … • graph (next slide) shows how arbitrary these rankings are • when trained on randomly chosen sections covering 14K- 31K sentences Example: #36 furiously sleep ideas green colorless outranks #23 colorless green ideas sleep furiously (and the top 3) over much of the training space Example: Chomsky's original sentence #23 colorless green ideas sleep furiously outranks both the top 3 and #36 just briefly at one data point Sentence Rank vs. Amount of Training Data 120 Best three sentences 100 ⬅︎Rank 80 60 #1 #2 #3 40 20 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Amount of training data ➠ 16 17 18 19 20 21 Sentence Rank vs. Amount of Training Data 120 #23 colorless green ideas sleep furiously #36 furiously sleep ideas green colorless 100 ⬅︎Rank 80 60 #23 #36 40 20 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Amount of training data ➠ 16 17 18 19 20 21 Sentence Rank vs. Amount of Training Data 120 #23 colorless green ideas sleep furiously #36 furiously sleep ideas green colorless 100 ⬅︎Rank 80 #1 60 #2 #3 #23 40 #36 20 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Amount of training data ➠ 16 17 18 19 20 21 Break tregex class exercise tregex class exercise • Other things: 1. nonstandard associative rule without round brackets ( … ) 2. @ basic category 3. boolean operators: ! (negation) ? (optional) & (and) | (or) [ … ] 4. segment : 5. naming a node: =name 6. groups inside regex for nodes: /regex/#1%name tregex class exercise • Look at TREEBANK_3/docs/prsguid1.pdf – on page 63 tregex class exercise • Search for NP trace relative clauses as defined below: tregex homework • One PDF file. • Due by email before next class tregex homework Section 16: Clefts (pp.268—270) • Examples from the guide: – (Subject) It is the clumsy child who sustains the worst injuries – (Direct object) It is this conclusion that we challenge – others… Do these exist in the PTB WSJ corpus? • Search for them and categorize by type, e.g. subject/object etc. • Supply frequency counts. – (Make sure you don't include it-extraposition examples, section 17.) • Supply your regex search strings • How about wh-clefts (16.2)? – (More difficult.)