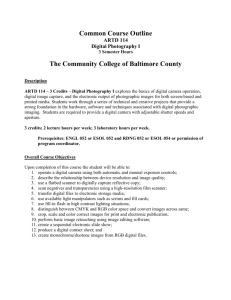

Thesis_AAK_Correction_4.20.13
