
The Bootstrap’s Finite Sample Distribution

An Analytical Approach

Lawrence C. Marsh

Department of Economics and Econometrics

University of Notre Dame

Midwest Econometrics Group (MEG)

Northwestern University

October 15 – 16, 2004


This is the first of three papers:

(1.) Bootstrap’s Finite Sample Distribution (today!!!)

(2.) Bootstrapped Asymptotically Pivotal Statistics

(3.) Bootstrap Hypothesis Testing and Confidence Intervals

Traditional approach in econometrics:

Analytical problem → Analogy principle (Manski), GMM (Hansen) → Empirical process

Approach used in this paper:

Empirical process → Bootstrap’s Finite Sample Distribution → Analytical solution

4

bootstrap procedure

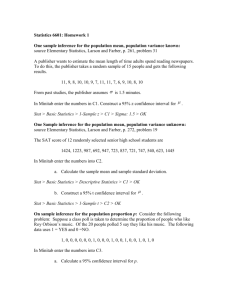

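Start with a sample of size $n$: $\{X_i : i = 1, \dots, n\}$.

Draw a bootstrap sample of size $m$, $\{X_j^* : j = 1, \dots, m\}$, where $m < n$, $m = n$, or $m > n$.

Define $M_i$ as the frequency of drawing each $X_i$, i.e. the number of times $X_i$ appears in the bootstrap sample, so that $M_1 + \cdots + M_n = m$.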

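$$\sum_{j=1}^{m} X_j^* = \sum_{i=1}^{n} M_i X_i$$

$$E_M\Big[\frac{1}{m}\sum_{j=1}^{m} X_j^*\Big] = E_M\Big[\frac{1}{m}\sum_{i=1}^{n} M_i X_i\Big]$$

$$\mathrm{Var}_M\Big[\frac{1}{m}\sum_{j=1}^{m} X_j^*\Big] = \mathrm{Var}_M\Big[\frac{1}{m}\sum_{i=1}^{n} M_i X_i\Big]$$

$$\vdots$$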
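The following is a minimal Python sketch of the resampling step and of the identity $\sum_j X_j^* = \sum_i M_i X_i$. It assumes integer-valued illustrative data and uses Python's `random` module as the source of the multinomial draws. `bootstrap_draw` is a hypothetical helper and is not taken from the paper.

```python
import random
from collections import Counter


def bootstrap_draw(x, m, rng):
    """Draw m indices with replacement from range(len(x)).

    Returns the drawn indices and the frequencies M_i, i.e. how many
    times each original observation x[i] appears in the bootstrap sample.
    """
    idx = [rng.randrange(len(x)) for _ in range(m)]
    counts = Counter(idx)
    M = [counts[i] for i in range(len(x))]
    return idx, M


rng = random.Random(2004)      # fixed seed so the run is reproducible
x = [3, 1, 4, 1, 5]            # illustrative original sample, n = 5
for m in (3, 5, 8):            # m < n, m = n, m > n
    idx, M = bootstrap_draw(x, m, rng)
    x_star = [x[i] for i in idx]
    # sum_j X_j* equals sum_i M_i X_i, and the frequencies add up to m
    assert sum(x_star) == sum(Mi * xi for Mi, xi in zip(M, x))
    assert sum(M) == m
    print(f"m = {m}: M = {M}")
```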

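$$E_M[M_i] = \frac{m}{n}, \qquad E_M[M_i^2] = \frac{m(m-1) + nm}{n^2}, \qquad E_M[M_i M_k] = \frac{m^2 - m}{n^2} \quad \text{for } i \neq k$$

$$E_M\Big[\Big(\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big)^2\Big] = \sum_{M_1+\cdots+M_n=m} \frac{m!}{M_1!\cdots M_n!}\,\frac{1}{n^m}\Big(\frac{1}{m}\sum_{i=1}^{n} M_i f(X_i)\Big)^2$$

$$= \frac{1}{m^2}\sum_{i=1}^{n}\Big(\sum_{M_1+\cdots+M_n=m}\frac{m!}{M_1!\cdots M_n!}\,\frac{1}{n^m}\,M_i^2\Big) f(X_i)^2 + \frac{2}{m^2}\sum_{i<k}\Big(\sum_{M_1+\cdots+M_n=m}\frac{m!}{M_1!\cdots M_n!}\,\frac{1}{n^m}\,M_i M_k\Big) f(X_i) f(X_k)$$

$$= \frac{1}{m^2}\Big[\frac{m(m-1)+nm}{n^2}\sum_{i=1}^{n} f(X_i)^2 + 2\,\frac{m^2-m}{n^2}\sum_{i<k} f(X_i) f(X_k)\Big]$$

where $\sum_{i<k}$ runs over the $(n^2-n)/2$ pairs with $i < k$.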
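The multinomial moments and the second-moment expression can be checked by brute force. The sketch below enumerates every frequency vector together with its exact multinomial probability, using `fractions.Fraction` so that the comparisons are exact rather than approximate. It does this for small n and m. The helper names (`multinomial_outcomes`, `check_moments`, `second_moment_of_mean`) and the test values are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for every frequency vector M = (M_1, ..., M_n) with
    M_1 + ... + M_n = m; prob = m!/(M_1!...M_n!) * (1/n)**m."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            coef = factorial(m) // prod(factorial(k) for k in M)
            yield M, Fraction(coef, n ** m)


def check_moments(n, m):
    """Compare enumerated E[M_1], E[M_1^2], E[M_1 M_2] with the closed forms (n >= 2)."""
    e1 = e2 = e12 = Fraction(0)
    for M, p in multinomial_outcomes(n, m):
        e1 += p * M[0]
        e2 += p * M[0] ** 2
        e12 += p * M[0] * M[1]
    return (e1 == Fraction(m, n)
            and e2 == Fraction(m * (m - 1) + n * m, n ** 2)
            and e12 == Fraction(m * m - m, n ** 2))


def second_moment_of_mean(f, m):
    """E_M[((1/m) sum_i M_i f_i)^2] by enumeration and by the closed form."""
    n = len(f)
    enum = sum(p * Fraction(sum(Mi * fi for Mi, fi in zip(M, f)), m) ** 2
               for M, p in multinomial_outcomes(n, m))
    diag = sum(Fraction(fi) ** 2 for fi in f)
    cross = sum(Fraction(f[i]) * f[k] for i in range(n) for k in range(i + 1, n))
    closed = (Fraction(m * (m - 1) + n * m, n ** 2) * diag
              + 2 * Fraction(m * m - m, n ** 2) * cross) / m ** 2
    return enum, closed


assert all(check_moments(n, m) for n, m in [(2, 3), (3, 3), (4, 2), (3, 5)])
enum, closed = second_moment_of_mean([2, -1, 5], 4)   # illustrative f values
assert enum == closed
```

Full enumeration grows as $(m+1)^n$, so this check is only practical for very small samples. That growth is part of why the closed forms are useful.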


Applied Econometrician:

The bootstrap treats the original sample as if it were the population and induces multinomially distributed randomness.

$$\mathrm{Var}_M\Big[\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big] = \frac{n-1}{mn^2}\sum_{i=1}^{n} f(X_i)^2 - \frac{2}{mn^2}\sum_{i<k} f(X_i) f(X_k)$$
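The sketch below checks this variance formula exactly for a small illustrative case by enumerating all multinomial outcomes. The function names are hypothetical. The sketch also confirms the equivalent form $\frac{1}{mn}\sum_i \big(f(X_i) - \bar f\big)^2$, where $\bar f = \frac{1}{n}\sum_i f(X_i)$ is notation introduced only for this check. In words, the bootstrap variance equals the plug-in variance of f divided by m.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for all M with sum(M) == m under Multinomial(m; 1/n, ..., 1/n)."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            yield M, Fraction(factorial(m) // prod(factorial(k) for k in M), n ** m)


def var_boot_mean_enum(f, m):
    """Var_M[(1/m) sum_j f(X_j*)] by brute-force enumeration of the M's."""
    n = len(f)
    mean = sq = Fraction(0)
    for M, p in multinomial_outcomes(n, m):
        s = Fraction(sum(Mi * fi for Mi, fi in zip(M, f)), m)
        mean += p * s
        sq += p * s * s
    return sq - mean ** 2


def var_boot_mean_formula(f, m):
    """(n-1)/(m n^2) sum_i f_i^2 - 2/(m n^2) sum_{i<k} f_i f_k."""
    n = len(f)
    diag = sum(Fraction(fi) ** 2 for fi in f)
    cross = sum(Fraction(f[i]) * f[k] for i in range(n) for k in range(i + 1, n))
    return Fraction(n - 1, m * n * n) * diag - Fraction(2, m * n * n) * cross


f = [2, -1, 5]                      # illustrative values f(X_1), f(X_2), f(X_3)
for m in (1, 2, 3, 5):
    v = var_boot_mean_formula(f, m)
    assert var_boot_mean_enum(f, m) == v
    # same quantity written as (1/(m n)) * sum_i (f_i - fbar)^2
    fbar = Fraction(sum(f), len(f))
    assert v == sum((fi - fbar) ** 2 for fi in f) / (m * len(f))
```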


Econometric theorist: What does this buy you?

Find out under the joint distribution of the bootstrap-induced randomness and the randomness implied by the original sample data:

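$$\mathrm{Var}_{M,X}\Big[\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big] = \frac{1}{n^2}\sum_{i=1}^{n}\mathrm{Var}_X[f(X_i)] + \frac{2}{n^2}\sum_{i<k}\mathrm{Cov}_X[f(X_i), f(X_k)] + \frac{n-1}{mn^2}\sum_{i=1}^{n} E_X[f(X_i)^2] - \frac{2}{mn^2}\sum_{i<k} E_X[f(X_i) f(X_k)]$$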
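This check shows that the joint-distribution formula needs no independence across observations. The sketch enumerates both sources of randomness for a small, deliberately dependent joint distribution of $(f(X_1), f(X_2))$, chosen purely for illustration, with f taken as the identity. As the bootstrap does, it assumes the multinomial draw is independent of the data. The helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for all M with sum(M) == m under Multinomial(m; 1/n, ..., 1/n)."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            yield M, Fraction(factorial(m) // prod(factorial(k) for k in M), n ** m)


def joint_var_enum(joint, m):
    """Var_{M,X}[(1/m) sum_i M_i f(X_i)], enumerating data vectors and M's.

    `joint` maps each possible vector (f(X_1), ..., f(X_n)) to its probability;
    the multinomial draw is taken to be independent of the data."""
    n = len(next(iter(joint)))
    mean = sq = Fraction(0)
    for x, px in joint.items():
        for M, pm in multinomial_outcomes(n, m):
            s = Fraction(sum(Mi * xi for Mi, xi in zip(M, x)), m)
            mean += px * pm * s
            sq += px * pm * s * s
    return sq - mean ** 2


def joint_var_formula(joint, m):
    """(1/n^2) sum Var + (2/n^2) sum_{i<k} Cov
    + (n-1)/(m n^2) sum E[f^2] - 2/(m n^2) sum_{i<k} E[f_i f_k]."""
    n = len(next(iter(joint)))

    def E(func):                                   # expectation over the data
        return sum(px * func(x) for x, px in joint.items())

    mu = [E(lambda x, i=i: x[i]) for i in range(n)]
    Efk = {(i, k): E(lambda x, i=i, k=k: x[i] * x[k]) for i in range(n) for k in range(n)}
    pairs = [(i, k) for i in range(n) for k in range(i + 1, n)]
    var_sum = sum(Efk[i, i] - mu[i] ** 2 for i in range(n))
    cov_sum = sum(Efk[i, k] - mu[i] * mu[k] for i, k in pairs)
    sq_sum = sum(Efk[i, i] for i in range(n))
    cross_sum = sum(Efk[i, k] for i, k in pairs)
    nn = n * n
    return (Fraction(1, nn) * var_sum + Fraction(2, nn) * cov_sum
            + Fraction(n - 1, m * nn) * sq_sum - Fraction(2, m * nn) * cross_sum)


# Illustrative, deliberately dependent joint distribution for n = 2
joint = {(0, 0): Fraction(1, 2), (1, 1): Fraction(1, 4), (0, 2): Fraction(1, 4)}
for m in (1, 2, 3):
    assert joint_var_enum(joint, m) == joint_var_formula(joint, m)
```

The first two terms are $\mathrm{Var}_X$ of the bootstrap mean's conditional expectation $\frac{1}{n}\sum_i f(X_i)$. The last two terms are $E_X$ of the applied econometrician's conditional variance. Together they follow the usual decomposition of a variance into a variance of a conditional mean plus a mean of a conditional variance.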

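For example, let $f(X_j^*) = (X_j^* - \bar X)^2$.

Applied Econometrician:

$$\mathrm{Var}_M\Big[\frac{1}{m}\sum_{j=1}^{m}(X_j^* - \bar X)^2\Big] = \frac{n-1}{mn^2}\sum_{i=1}^{n}(X_i - \bar X)^4 - \frac{2}{mn^2}\sum_{i<k}(X_i - \bar X)^2(X_k - \bar X)^2$$

Econometric theorist:

$$\mathrm{Var}_{M,X}\Big[\frac{1}{m}\sum_{j=1}^{m}(X_j^* - \bar X)^2\Big] = \frac{1}{n^2}\sum_{i=1}^{n}\mathrm{Var}_X\big[(X_i - \bar X)^2\big] + \frac{2}{n^2}\sum_{i<k}\mathrm{Cov}_X\big[(X_i - \bar X)^2, (X_k - \bar X)^2\big] + \frac{n-1}{mn^2}\sum_{i=1}^{n}E_X\big[(X_i - \bar X)^4\big] - \frac{2}{mn^2}\sum_{i<k}E_X\big[(X_i - \bar X)^2(X_k - \bar X)^2\big]$$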
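The applied formula can also be compared with what brute-force resampling produces. This Monte Carlo sketch computes the closed-form $\mathrm{Var}_M$ for $f(X_j^*) = (X_j^* - \bar X)^2$ and a simulation estimate of the same quantity. The sample, the seed and the 20,000 replications are assumptions of the example. The two numbers should agree up to simulation noise. The function names are hypothetical.

```python
import random
from statistics import fmean, pvariance


def exact_var_sq_dev(x, m):
    """(n-1)/(m n^2) sum (x_i - xbar)^4 - 2/(m n^2) sum_{i<k} (x_i - xbar)^2 (x_k - xbar)^2."""
    n = len(x)
    xbar = fmean(x)
    d2 = [(xi - xbar) ** 2 for xi in x]
    cross = sum(d2[i] * d2[k] for i in range(n) for k in range(i + 1, n))
    return (n - 1) / (m * n * n) * sum(d * d for d in d2) - 2 / (m * n * n) * cross


def simulated_var_sq_dev(x, m, reps, rng):
    """Resample m values, compute (1/m) sum_j (X_j* - xbar)^2, repeat `reps` times,
    and return the variance of the replicates (a Monte Carlo estimate of Var_M)."""
    xbar = fmean(x)
    stats = []
    for _ in range(reps):
        draw = rng.choices(x, k=m)                 # one bootstrap sample of size m
        stats.append(fmean((xj - xbar) ** 2 for xj in draw))
    return pvariance(stats)


x = [2.0, 3.5, 1.0, 6.0, 4.5, 3.0]                 # illustrative sample, n = 6
m = 6
exact = exact_var_sq_dev(x, m)
approx = simulated_var_sq_dev(x, m, 20000, random.Random(7))
print(exact, approx)   # should agree up to simulation noise
```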

The Wild Bootstrap


Multiply each bootstrapped value by plus one or minus one, each with a probability of one-half (Rademacher distribution).

Use the binomial distribution to impose the Rademacher distribution:

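$$P(W_i \mid M_i) = \binom{M_i}{W_i}(0.5)^{W_i}(1-0.5)^{M_i-W_i}$$

$$E_M E_{W|M}\Big[\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big] = E_M E_{W|M}\Big[\frac{1}{m}\sum_{i=1}^{n}\big(W_i - (M_i - W_i)\big) f(X_i)\Big]$$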

$W_i$ = number of positive ones out of $M_i$, which, in turn, is the number of times $X_i$ is drawn in $m$ multinomial draws.
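The sketch below illustrates the sign-flipping step, using Python's `random` for the Rademacher signs. It attaches a ±1 sign to every draw and recovers $M_i$ and $W_i$ by counting. It then confirms that the signed sum over draws equals $\sum_i (W_i - (M_i - W_i)) f(X_i)$. Finally, it evaluates $E_{W|M}$ of that sum exactly with the binomial probabilities, which gives zero. The function names and data are hypothetical.

```python
import random
from collections import Counter
from fractions import Fraction
from math import comb


def wild_from_signs(idx, f, rng):
    """Attach an independent Rademacher sign (+1 or -1, prob 1/2 each) to every
    bootstrapped value f[idx[j]]; return the signed sum, the M_i and the W_i."""
    signs = [rng.choice((1, -1)) for _ in idx]
    total = sum(s * f[i] for s, i in zip(signs, idx))
    M = Counter(idx)
    W = Counter(i for s, i in zip(signs, idx) if s == 1)   # positive ones per X_i
    n = len(f)
    return total, [M[i] for i in range(n)], [W[i] for i in range(n)]


def conditional_mean(M, f):
    """E_{W|M}[sum_i (W_i - (M_i - W_i)) f_i] with W_i ~ Binomial(M_i, 0.5)."""
    total = Fraction(0)
    for Mi, fi in zip(M, f):
        total += fi * sum(Fraction(comb(Mi, w), 2 ** Mi) * (w - (Mi - w))
                          for w in range(Mi + 1))
    return total


rng = random.Random(11)
f = [4, -2, 7, 1]                                  # illustrative f(X_i) values, n = 4
idx = [rng.randrange(len(f)) for _ in range(6)]    # m = 6 multinomial draws
total, M, W = wild_from_signs(idx, f, rng)
# the signed sum over draws equals the W/M representation over distinct X_i
assert total == sum((w - (Mi - w)) * fi for w, Mi, fi in zip(W, M, f))
assert conditional_mean(M, f) == 0
```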


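Applied Econometrician:

$$\mathrm{Var}_{W,M}\Big[\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big] = \frac{1}{mn}\sum_{i=1}^{n} f(X_i)^2$$

Econometric Theorist:

$$\mathrm{Var}_{W,M,X}\Big[\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big] = \frac{1}{mn}\sum_{i=1}^{n}\mathrm{Var}_X[f(X_i)]$$

under the zero-mean assumption $E_X[f(X_i)] = 0$.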
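The applied wild-bootstrap variance can be verified exactly for small cases. The sketch enumerates M and then $W \mid M$, treating the $W_i$ as independent Binomial$(M_i, 1/2)$ given M, which is what independent signs imply. The values are illustrative and the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import comb, factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for all M with sum(M) == m under Multinomial(m; 1/n, ..., 1/n)."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            yield M, Fraction(factorial(m) // prod(factorial(k) for k in M), n ** m)


def wild_var_enum(f, m):
    """Var_{W,M}[(1/m) sum_i (W_i - (M_i - W_i)) f_i], enumerating M and then W | M."""
    n = len(f)
    mean = sq = Fraction(0)
    for M, pM in multinomial_outcomes(n, m):
        for W in product(*(range(Mi + 1) for Mi in M)):
            pW = prod(Fraction(comb(Mi, w), 2 ** Mi) for Mi, w in zip(M, W))
            s = Fraction(sum((2 * w - Mi) * fi for w, Mi, fi in zip(W, M, f)), m)
            mean += pM * pW * s
            sq += pM * pW * s * s
    return sq - mean ** 2


def wild_var_formula(f, m):
    """(1/(m n)) sum_i f_i^2."""
    return Fraction(sum(fi * fi for fi in f), m * len(f))


f = [3, -1, 2]                                     # illustrative f(X_i) values
for m in (1, 2, 3, 4):
    assert wild_var_enum(f, m) == wild_var_formula(f, m)
```

Unlike the ordinary bootstrap variance, there are no cross-product terms here, because the random signs make the contributions of distinct $X_i$ uncorrelated.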

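$$q\Big(\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big) = q\Big(\frac{1}{m}\sum_{i=1}^{n} M_i f(X_i)\Big)$$

$$E_M\Big[q\Big(\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big)\Big] = E_M\Big[q\Big(\frac{1}{m}\sum_{i=1}^{n} M_i f(X_i)\Big)\Big]$$

$$\mathrm{Var}_M\Big[q\Big(\frac{1}{m}\sum_{j=1}^{m} f(X_j^*)\Big)\Big] = \mathrm{Var}_M\Big[q\Big(\frac{1}{m}\sum_{i=1}^{n} M_i f(X_i)\Big)\Big]$$

$$\vdots$$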
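For a nonlinear q, these moments are generally not simple closed forms. For small n and m, however, they can still be computed exactly by enumeration. The sketch does this for an arbitrary q and checks the case $q(s) = s^2$, where $E_M[q]$ must equal $\mathrm{Var}_M$ of the bootstrap mean plus the squared mean of the $f(X_i)$. The helper names and the values of f and m are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for all M with sum(M) == m under Multinomial(m; 1/n, ..., 1/n)."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            yield M, Fraction(factorial(m) // prod(factorial(k) for k in M), n ** m)


def expect_q(q, f, m):
    """E_M[q((1/m) sum_i M_i f_i)] by enumerating every multinomial outcome."""
    return sum(p * q(Fraction(sum(Mi * fi for Mi, fi in zip(M, f)), m))
               for M, p in multinomial_outcomes(len(f), m))


def var_q(q, f, m):
    """Var_M[q((1/m) sum_i M_i f_i)] from the first two enumerated moments."""
    return expect_q(lambda s: q(s) ** 2, f, m) - expect_q(q, f, m) ** 2


f, m = [2, -1, 5], 4                               # illustrative values
n = len(f)
fbar = Fraction(sum(f), n)
var_mean = sum((fi - fbar) ** 2 for fi in f) / (m * n)   # Var_M of the bootstrap mean
# q(s) = s^2: E_M[q] = Var_M[mean*] + fbar^2
assert expect_q(lambda s: s * s, f, m) == var_mean + fbar ** 2
# q(s) = s recovers the mean and variance of the bootstrap mean itself
assert expect_q(lambda s: s, f, m) == fbar
assert var_q(lambda s: s, f, m) == var_mean
```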

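$\mu = E(X)$, where $X$ is a $p \times 1$ vector.

$\theta_o = g(\mu)$, a nonlinear function of $\mu$.

$\{X_i : i = 1, \dots, n\}$, $\quad \bar X = \frac{1}{n}\sum_{i=1}^{n} X_i$

$\{X_j^* : j = 1, \dots, m\}$, $\quad \bar X^* = \frac{1}{m}\sum_{j=1}^{m} X_j^*$

Horowitz (2001) approximates the bias of $\theta_n = g(\bar X)$ as an estimator of $\theta_o = g(\mu)$ for a smooth nonlinear function $g$:

$$B_n^* = \frac{1}{2}E_M\big[(\bar X^* - \bar X)' G_2(\bar X)(\bar X^* - \bar X)\big] + O(n^{-2})$$

almost surely, where $G_2(\bar X)$ is the matrix of second partial derivatives of $g$.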

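$$E_M\big[\bar X^{*\prime} G_2(\bar X)\,\bar X^*\big] = E_M\Big[\bar X^{*\prime} G_2(\bar X)\,\frac{1}{m}\sum_{j=1}^{m} X_j^*\Big] = E_M\Big[\bar X^{*\prime} G_2(\bar X)\,\frac{1}{m}\sum_{i=1}^{n} M_i X_i\Big]$$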

Horowitz (2001) uses bootstrap simulations to approximate the first term on the right-hand side of the bias approximation:

$$B_n^* = \frac{1}{2}E_M\big[(\bar X^* - \bar X)' G_2(\bar X)(\bar X^* - \bar X)\big] + O(n^{-2})$$
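The next sketch follows the simulation route for the first term under illustrative assumptions. It takes p = 2 and $g(\mu) = \mu_1\mu_2$, so that $G_2$ is the constant matrix [[0, 1], [1, 0]]. The sample of six observations is made up, and the run uses 20,000 resamples with a fixed seed. For this particular g the term equals the bootstrap covariance of the two components of $\bar X^*$, which gives an exact value to compare against. The function names are hypothetical.

```python
import random
from statistics import fmean


def quad(d, G):
    """d' G d for a vector d and a square matrix G (lists of lists)."""
    k = len(d)
    return sum(d[r] * G[r][c] * d[c] for r in range(k) for c in range(k))


def simulated_bias_term(X, G2, m, reps, rng):
    """Monte Carlo estimate of (1/2) E_M[(Xbar* - Xbar)' G2 (Xbar* - Xbar)].

    X is a list of p-vectors; G2 is the Hessian of g evaluated at Xbar."""
    p = len(X[0])
    xbar = [fmean(row[c] for row in X) for c in range(p)]
    total = 0.0
    for _ in range(reps):
        draw = rng.choices(X, k=m)                 # one bootstrap sample of size m
        xbar_star = [fmean(row[c] for row in draw) for c in range(p)]
        d = [a - b for a, b in zip(xbar_star, xbar)]
        total += 0.5 * quad(d, G2)
    return total / reps


# Hypothetical example: g(mu) = mu_1 * mu_2, whose Hessian is constant
G2 = [[0.0, 1.0], [1.0, 0.0]]
X = [[1.0, 2.0], [2.0, 2.5], [3.0, 5.0], [4.0, 4.0], [5.0, 7.5], [6.0, 6.0]]
n = m = len(X)
est = simulated_bias_term(X, G2, m, 20000, random.Random(5))
# For this g the term is Cov_M(Xbar*_1, Xbar*_2) = (1/m)(1/n) sum (x_i1 - xbar_1)(x_i2 - xbar_2)
x1bar, x2bar = fmean(r[0] for r in X), fmean(r[1] for r in X)
exact = sum((r[0] - x1bar) * (r[1] - x2bar) for r in X) / (n * m)
print(est, exact)
```

Because this g is quadratic, the Taylor expansion behind the approximation has no remainder. The only discrepancy between `est` and `exact` is simulation noise.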

Exact finite sample solution:

$$B_n^* = \frac{n+m-1}{2mn^2}\sum_{i=1}^{n} X_i' G_2(\bar X) X_i + \frac{m-1}{mn^2}\sum_{i<k} X_i' G_2(\bar X) X_k - \frac{1}{2}\bar X' G_2(\bar X)\bar X + O(n^{-2})$$
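The exact expression can be checked against brute-force enumeration of all multinomial outcomes. The sketch assumes a small illustrative sample (n = 3, p = 2) and uses an arbitrary symmetric matrix in place of $G_2(\bar X)$. It checks only the quadratic term, i.e. everything except the $O(n^{-2})$ remainder. The names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for all M with sum(M) == m under Multinomial(m; 1/n, ..., 1/n)."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            yield M, Fraction(factorial(m) // prod(factorial(k) for k in M), n ** m)


def quad(a, G, b):
    """a' G b for vectors a, b and a square matrix G."""
    return sum(a[r] * G[r][c] * b[c] for r in range(len(a)) for c in range(len(b)))


def bias_enum(X, G2, m):
    """(1/2) E_M[(Xbar* - Xbar)' G2 (Xbar* - Xbar)] by exact enumeration."""
    n, p = len(X), len(X[0])
    xbar = [Fraction(sum(row[c] for row in X), n) for c in range(p)]
    total = Fraction(0)
    for M, prob in multinomial_outcomes(n, m):
        xs = [Fraction(sum(Mi * row[c] for Mi, row in zip(M, X)), m) for c in range(p)]
        d = [a - b for a, b in zip(xs, xbar)]
        total += prob * quad(d, G2, d) / 2
    return total


def bias_formula(X, G2, m):
    """(n+m-1)/(2 m n^2) sum_i X_i'G2 X_i + (m-1)/(m n^2) sum_{i<k} X_i'G2 X_k
    - (1/2) Xbar' G2 Xbar."""
    n, p = len(X), len(X[0])
    xbar = [Fraction(sum(row[c] for row in X), n) for c in range(p)]
    diag = sum(quad(Xi, G2, Xi) for Xi in X)
    cross = sum(quad(X[i], G2, X[k]) for i in range(n) for k in range(i + 1, n))
    return (Fraction(n + m - 1, 2 * m * n * n) * diag
            + Fraction(m - 1, m * n * n) * cross
            - quad(xbar, G2, xbar) / 2)


X = [[1, 2], [3, -1], [0, 4]]          # illustrative n = 3 observations, p = 2
G2 = [[2, 1], [1, 4]]                  # symmetric stand-in for G2(Xbar)
for m in (1, 2, 3, 4):
    assert bias_enum(X, G2, m) == bias_formula(X, G2, m)
```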

Separability Condition

Definition:

Any bootstrap statistic, $\theta_n^*$, that is a function of the elements of the set $\{f(X_j^*) : j = 1, \dots, m\}$ and satisfies the separability condition

$$\theta_n^*\big(\{f(X_j^*) : j = 1, \dots, m\}\big) = \sum_{i=1}^{n} g(M_i)\, h\big(f(X_i)\big),$$

where $g(M_i)$ and $h(f(X_i))$ are independent functions and where the expected value $E_M[g(M_i)]$ exists, is a “directly analyzable” bootstrap statistic.
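One way to read "directly analyzable" is through linearity: $E_M[\theta_n^*] = \sum_i E_M[g(M_i)]\,h(f(X_i))$. Each $M_i$ has the same Binomial$(m, 1/n)$ marginal, so only $E_M[g(M_i)]$ is needed. The sketch below checks this for the hypothetical choice $g(M) = M^2$, $h(y) = y$. It compares full multinomial enumeration with a calculation that uses only the single-observation marginal. The names and data are illustrative.

```python
from fractions import Fraction
from itertools import product
from math import comb, factorial, prod


def multinomial_outcomes(n, m):
    """Yield (M, prob) for all M with sum(M) == m under Multinomial(m; 1/n, ..., 1/n)."""
    for M in product(range(m + 1), repeat=n):
        if sum(M) == m:
            yield M, Fraction(factorial(m) // prod(factorial(k) for k in M), n ** m)


def expect_separable(g, h, f, m):
    """E_M[sum_i g(M_i) h(f_i)] using the full joint multinomial law of (M_1, ..., M_n)."""
    return sum(p * sum(g(Mi) * h(fi) for Mi, fi in zip(M, f))
               for M, p in multinomial_outcomes(len(f), m))


def expect_g_marginal(g, n, m):
    """E[g(M_i)] from the Binomial(m, 1/n) marginal of a single M_i."""
    return sum(Fraction(comb(m, k) * (n - 1) ** (m - k), n ** m) * g(k)
               for k in range(m + 1))


# Hypothetical choice: g(M) = M^2 and h(y) = y, i.e. the statistic sum_i M_i^2 f(X_i)
def g(k):
    return k * k


def h(y):
    return y


f, m = [2, -1, 5], 4                               # illustrative values
n = len(f)
lhs = expect_separable(g, h, f, m)
rhs = expect_g_marginal(g, n, m) * sum(h(fi) for fi in f)
assert lhs == rhs
assert expect_g_marginal(g, n, m) == Fraction(m * (m - 1) + n * m, n ** 2)
```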

$X$ is an $n \times 1$ vector of original sample values.

$X^*$ is an $m \times 1$ vector of bootstrapped sample values.

$X^* = HX$, where the rows of $H$ are all zeros except for a one in the position corresponding to the element of $X$ that was randomly drawn.

$E_H[H] = \frac{1}{n}1_m1_n'$, where $1_m$ and $1_n$ are column vectors of ones.

Setup for empirical process: $X_o^* = H_o X$

Taylor series expansion:

$$\theta_m^* = g(X^*) = g(HX) = g(X_o^*) + [G_1(X_o^*)]'(X^* - X_o^*) + \tfrac{1}{2}(X^* - X_o^*)'[G_2(X_o^*)](X^* - X_o^*) + R^*$$
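The next sketch shows the selection-matrix representation, with plain Python lists standing in for matrices. It builds H from a sequence of draw indices and checks $X^* = HX$. It then averages H over all $n^m$ equally likely draw sequences to confirm $E_H[H] = \frac{1}{n}1_m1_n'$. The helper names and data are illustrative.

```python
from fractions import Fraction
from itertools import product


def selection_matrix(idx, n):
    """m x n matrix H with a single 1 per row, in the column of the drawn element."""
    return [[1 if c == j else 0 for c in range(n)] for j in idx]


def matvec(H, x):
    """Matrix-vector product H x for lists of lists."""
    return [sum(h * xi for h, xi in zip(row, x)) for row in H]


def expected_H(n, m):
    """E_H[H] by averaging over all n**m equally likely draw sequences."""
    total = [[Fraction(0)] * n for _ in range(m)]
    for idx in product(range(n), repeat=m):
        H = selection_matrix(idx, n)
        for r in range(m):
            for c in range(n):
                total[r][c] += Fraction(H[r][c], n ** m)
    return total


x = [7, 2, 9]                      # illustrative original sample, n = 3
idx = (2, 0, 2, 1)                 # one possible sequence of draws, m = 4
H = selection_matrix(idx, len(x))
assert matvec(H, x) == [x[i] for i in idx]                          # X* = H X
assert expected_H(3, 4) == [[Fraction(1, 3)] * 3 for _ in range(4)]  # (1/n) 1_m 1_n'
```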

Setup for analytical solution: with $H_o = E_H[H] = \frac{1}{n}1_m1_n'$, so that $X_o^* = H_oX$,

$$\theta_m^* = g\big(\tfrac{1}{n}1_m1_n'X\big) + \big[G_1\big(\tfrac{1}{n}1_m1_n'X\big)\big]'\big(H - \tfrac{1}{n}1_m1_n'\big)X + \tfrac{1}{2}X'\big(H - \tfrac{1}{n}1_m1_n'\big)'\big[G_2\big(\tfrac{1}{n}1_m1_n'X\big)\big]\big(H - \tfrac{1}{n}1_m1_n'\big)X + R^*$$

Now ready to determine exact finite moments, et cetera.
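When g is quadratic the remainder $R^*$ is zero, which makes the expansion easy to check exactly. This sketch uses the hypothetical statistic g(v) = (mean of v)², for which $G_1(v) = \frac{2}{m}\,\mathrm{mean}(v)\,1_m$ and $G_2 = \frac{2}{m^2}1_m1_m'$. It verifies the expansion around $H_oX$ for one illustrative draw.

```python
from fractions import Fraction


def g(v):
    """Hypothetical smooth statistic: the squared mean of an m-vector."""
    return (Fraction(sum(v)) / len(v)) ** 2


def grad_g(v):
    """G_1(v): every component equals 2 * mean(v) / m."""
    m = len(v)
    return [2 * (Fraction(sum(v)) / m) / m] * m


def hess_g(m):
    """G_2: the constant m x m matrix (2 / m^2) 1_m 1_m'."""
    return [[Fraction(2, m * m)] * m for _ in range(m)]


def matvec(A, x):
    """Matrix-vector product for lists of lists."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


x = [7, 2, 9]                                        # illustrative X, n = 3
idx = [2, 0, 2, 1]                                   # illustrative draws, m = 4
n, m = len(x), len(idx)
H = [[1 if c == j else 0 for c in range(n)] for j in idx]
Ho = [[Fraction(1, n)] * n for _ in range(m)]        # E_H[H] = (1/n) 1_m 1_n'
x_star, x_o = matvec(H, x), matvec(Ho, x)
d = [a - b for a, b in zip(x_star, x_o)]             # (H - Ho) X
G2 = hess_g(m)
taylor = (g(x_o)
          + sum(gi * di for gi, di in zip(grad_g(x_o), d))
          + Fraction(1, 2) * sum(d[r] * G2[r][c] * d[c] for r in range(m) for c in range(m)))
assert taylor == g(x_star)   # R* = 0 because g is quadratic
```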

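For the regression model $Y = X\beta + \varepsilon$:

$$\hat\beta = (X'X)^{-1}X'Y, \qquad \hat Y = X\hat\beta, \qquad e = Y - \hat Y = \big(I_n - X(X'X)^{-1}X'\big)\varepsilon$$

$$e = \{e_1, e_2, \dots, e_n\} \quad\longrightarrow\quad e^* = \{e_1^*, e_2^*, \dots, e_n^*\}$$

$$A = I_n - \tfrac{1}{n}1_n1_n'$$

$$Y^* = X\hat\beta + Ae^*, \qquad \hat\beta^* = (X'X)^{-1}X'Y^*$$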

$e^* = He$, with $E_H[H] = \frac{1}{n}1_n1_n'$.

No restrictions on the covariance matrix for the errors.
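The sketch below performs one residual-bootstrap draw as set up on these slides. It assumes a design with exactly two columns, so that $(X'X)^{-1}$ can be written out by hand. `ols` and `residual_bootstrap` are hypothetical helpers and the data are made up. With centering, $A = I_n - \frac{1}{n}1_n1_n'$, the average of many $\hat\beta^*$ draws should sit close to $\hat\beta$.

```python
import random


def ols(X, y):
    """beta_hat = (X'X)^{-1} X'y for a design with exactly two columns.
    The 2 x 2 inverse is written out by hand (illustrative, p = 2 only)."""
    a = sum(r[0] * r[0] for r in X)
    b = sum(r[0] * r[1] for r in X)
    d = sum(r[1] * r[1] for r in X)
    det = a * d - b * b
    u = sum(r[0] * yi for r, yi in zip(X, y))      # first element of X'y
    v = sum(r[1] * yi for r, yi in zip(X, y))      # second element of X'y
    return [(d * u - b * v) / det, (a * v - b * u) / det]


def residual_bootstrap(X, y, rng, center=True):
    """One draw of beta_hat* = (X'X)^{-1} X'(X beta_hat + A e*), with e* = H e.
    center=True uses A = I_n - (1/n) 1_n 1_n'; center=False uses A = I_n."""
    beta = ols(X, y)
    fitted = [r[0] * beta[0] + r[1] * beta[1] for r in X]
    e = [yi - fi for yi, fi in zip(y, fitted)]
    e_star = rng.choices(e, k=len(e))              # resample residuals: e* = H e
    if center:
        mean_star = sum(e_star) / len(e_star)
        e_star = [v - mean_star for v in e_star]   # A e*
    y_star = [fi + v for fi, v in zip(fitted, e_star)]
    return ols(X, y_star)


X = [[1.0, float(t)] for t in range(8)]            # intercept + trend (illustrative)
y = [1.0, 2.9, 5.2, 7.1, 8.8, 11.2, 13.1, 14.8]
rng = random.Random(42)
draws = [residual_bootstrap(X, y, rng) for _ in range(2000)]
avg = [sum(b[k] for b in draws) / len(draws) for k in range(2)]
print(ols(X, y), avg)
```

Setting `center=False` corresponds to $A = I_n$.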

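Applied Econometrician:

$$\mathrm{Cov}_H(\hat\beta^* \mid \varepsilon) = (X'X)^{-1}X'A\Big[I_n\frac{e'e}{n} + \frac{1}{n^2}\big(1_n1_n' - I_n\big)(1_n' \otimes 1_n')\,\mathrm{vec}(ee')\Big]A'X(X'X)^{-1} - \frac{1}{n^2}(X'X)^{-1}X'A\,1_n1_n'ee'1_n1_n'A'X(X'X)^{-1}$$

where $A = I_n$ or $A = I_n - \frac{1}{n}1_n1_n'$. In the second case $A1_n1_n' = 0$ and $1_n1_n'A = 0$, so the last term vanishes.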
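The applied covariance formula can be verified exactly for a tiny case by enumerating all $n^n$ equally likely H matrices. The sketch assumes n = 3 and a two-column design without an intercept, so that $1_n'e$ is generally nonzero and the extra terms matter. It uses exact rational arithmetic and checks both $A = I_n$ and the centering choice of A. All names and data are illustrative.

```python
from fractions import Fraction
from itertools import product


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inv2(S):
    """Exact inverse of a 2 x 2 matrix (the illustrative X has p = 2 columns)."""
    (a, b), (c, d) = S
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def q_matrix(X, A):
    """Q = (X'X)^{-1} X'A, so that beta_hat* - beta_hat = Q H e."""
    Xt = transpose(X)
    return matmul(matmul(inv2(matmul(Xt, X)), Xt), A)


def cov_enum(X, e, A):
    """Cov_H(beta_hat* | e) by enumerating all n**n equally likely draw sequences."""
    n, Q = len(e), q_matrix(X, A)
    draws = [[row[0] for row in matmul(Q, [[e[i]] for i in idx])]
             for idx in product(range(n), repeat=n)]
    N = len(draws)
    mu = [sum(d[k] for d in draws) / N for k in range(2)]
    return [[sum((d[r] - mu[r]) * (d[c] - mu[c]) for d in draws) / N
             for c in range(2)] for r in range(2)]


def cov_formula(X, e, A):
    """Q[(e'e/n) I + ((1'e)^2/n^2)(11' - I)]Q' - ((1'e)^2/n^2)(Q1)(Q1)'."""
    n, Q = len(e), q_matrix(X, A)
    ete = sum(Fraction(v) * v for v in e)
    s1 = sum(Fraction(v) for v in e) ** 2          # (1'e)^2 = (1' kron 1') vec(ee')
    middle = [[ete / n if r == c else s1 / n ** 2 for c in range(n)] for r in range(n)]
    first = matmul(matmul(Q, middle), transpose(Q))
    q1 = [sum(row) for row in Q]                   # Q 1_n
    return [[first[r][c] - s1 / n ** 2 * q1[r] * q1[c] for c in range(2)]
            for r in range(2)]


n = 3
X = [[1, 2], [0, 1], [2, 1]]                       # illustrative design, no intercept
y = [3, 1, 4]
Xt = transpose(X)
beta = matmul(matmul(inv2(matmul(Xt, X)), Xt), [[v] for v in y])
e = [y[i] - sum(X[i][k] * beta[k][0] for k in range(2)) for i in range(n)]
I_n = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
A_c = [[I_n[r][c] - Fraction(1, n) for c in range(n)] for r in range(n)]
for A in (I_n, A_c):
    assert cov_enum(X, e, A) == cov_formula(X, e, A)
```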

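Econometric theorist:

$$\mathrm{Cov}_{\varepsilon,H}(\hat\beta^*) = \mathrm{Cov}(\hat\beta) + (X'X)^{-1}X'\Big(I_n - \frac{1}{n}1_n1_n'\Big)X(X'X)^{-1}\Big[\frac{1}{n}\mathrm{tr}(\Omega^*) - \frac{1}{n^2}(1_n' \otimes 1_n')\,\mathrm{vec}(\Omega^*)\Big]$$

where $\mathrm{Cov}(\hat\beta) = (X'X)^{-1}X'E[\varepsilon\varepsilon']X(X'X)^{-1}$ and

$$\Omega^* = \big(I_n - X(X'X)^{-1}X'\big)E[\varepsilon\varepsilon']\big(I_n - X(X'X)^{-1}X'\big)'.$$

No restrictions on $E[\varepsilon\varepsilon']$.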
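The theorist's expression can likewise be checked exactly if the error distribution is discrete. The sketch assumes independent errors equal to +1 or -1 with probability one-half each. Then $E[\varepsilon\varepsilon'] = I_n$, $\mathrm{Cov}(\hat\beta) = (X'X)^{-1}$, and $(I_n - X(X'X)^{-1}X')E[\varepsilon\varepsilon'](I_n - X(X'X)^{-1}X')'$ reduces to $I_n - X(X'X)^{-1}X'$. It also assumes the centering choice of A and a made-up design with n = 3. It enumerates all $2^n \cdot n^n$ equally likely $(\varepsilon, H)$ pairs. This is only one special case, since the formula itself places no restriction on $E[\varepsilon\varepsilon']$. The names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inv2(S):
    """Exact inverse of a 2 x 2 matrix (illustrative design with p = 2)."""
    (a, b), (c, d) = S
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def joint_cov_enum(X, beta, A):
    """Cov_{eps,H}(beta_hat*) with eps_i independent, equally likely +1/-1
    (so E[eps eps'] = I_n), enumerating every (eps, H) pair exactly."""
    n = len(X)
    Xt = transpose(X)
    P = matmul(inv2(matmul(Xt, X)), Xt)                    # (X'X)^{-1} X'
    Q = matmul(P, A)                                       # (X'X)^{-1} X'A
    draws = []
    for eps in product((-1, 1), repeat=n):
        y = [sum(X[i][k] * beta[k] for k in range(2)) + eps[i] for i in range(n)]
        bhat = [row[0] for row in matmul(P, [[v] for v in y])]
        e = [y[i] - sum(X[i][k] * bhat[k] for k in range(2)) for i in range(n)]
        for idx in product(range(n), repeat=n):            # e* = H e
            shift = matmul(Q, [[e[i]] for i in idx])
            draws.append([bhat[k] + shift[k][0] for k in range(2)])
    N = len(draws)
    mu = [sum(d[k] for d in draws) / N for k in range(2)]
    return [[sum((d[r] - mu[r]) * (d[c] - mu[c]) for d in draws) / N
             for c in range(2)] for r in range(2)]


def joint_cov_formula(X):
    """(X'X)^{-1} + (X'X)^{-1}X'(I - 11'/n)X(X'X)^{-1} [tr(Om)/n - 1'Om1/n^2],
    i.e. the theorist's formula with E[eps eps'] = I_n, where Om = I - X(X'X)^{-1}X'."""
    n = len(X)
    Xt = transpose(X)
    XtX_inv = inv2(matmul(Xt, X))
    P = matmul(XtX_inv, Xt)
    hat = matmul(X, P)                                     # X(X'X)^{-1}X'
    Om = [[int(r == c) - hat[r][c] for c in range(n)] for r in range(n)]
    scalar = sum(Om[i][i] for i in range(n)) / n - sum(map(sum, Om)) / n ** 2
    A_c = [[Fraction(int(r == c)) - Fraction(1, n) for c in range(n)] for r in range(n)]
    middle = matmul(matmul(P, A_c), transpose(P))          # (X'X)^{-1}X' A X(X'X)^{-1}
    return [[XtX_inv[r][c] + scalar * middle[r][c] for c in range(2)] for r in range(2)]


X = [[1, 2], [0, 1], [2, 1]]                               # illustrative design, n = 3
beta = [1, -2]                                             # illustrative true coefficients
n = len(X)
A_c = [[Fraction(int(r == c)) - Fraction(1, n) for c in range(n)] for r in range(n)]
assert joint_cov_enum(X, beta, A_c) == joint_cov_formula(X)
```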

This is the first of three papers:

(1.) Bootstrap’s Finite Sample Distribution (today!!!)

(2.) Bootstrapped Asymptotically Pivotal Statistics (basically done)

(3.) Bootstrap Hypothesis Testing and Confidence Intervals (almost done)

Thank you!