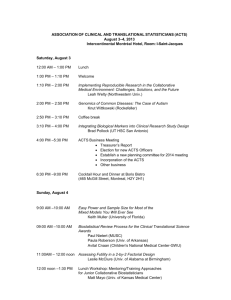


Lecture 24: NLP for IR
Principles of Information Retrieval
Prof. Ray Larson
University of California, Berkeley, School of Information
IS 240 – Spring 2013, 2013.04.01

Final Term Paper
• Should be about 8-12 pages on:
  – some area of IR research (or practice) that you are interested in and want to study further
  – Experimental tests of systems or IR algorithms
  – Build an IR system, test it, and describe the system and its performance
• Due May 6th (first day of Final Exam Week, or any time before)

Today
• Review - Filtering and TDT
• Natural Language Processing and IR
  – Based on Papers in Reader and on
    • David Lewis & Karen Sparck Jones “Natural Language Processing for Information Retrieval” Communications of the ACM, 39(1) Jan. 1996
    • Information from Junichi Tsujii, University of Tokyo
    • Text summarization: Lecture from Ed Hovy (USC)
• Watson and Jeopardy

Natural Language Processing and IR
• The main approach in applying NLP to IR has been to attempt to address
  – Phrase usage vs individual terms
  – Search expansion using related terms/concepts
  – Attempts to automatically exploit or assign controlled vocabularies

NLP and IR
• Much early research showed that (at least in the restricted test databases tested)
  – Indexing documents by individual terms corresponding to words and word stems produces retrieval results at least as good as when indexes use controlled vocabularies (whether applied manually or automatically)
  – Constructing phrases or “pre-coordinated” terms provides only marginal and inconsistent improvements
• It is not clear why intuitively plausible improvements to document representation have had little effect on retrieval results when compared to statistical methods
  – E.g. use of syntactic role relations between terms has shown no improvement in performance over “bag of words” approaches

General Framework of NLP
Slides from Prof. J. Tsujii, Univ of Tokyo and Univ of Manchester
Example: John runs.
• Morphological and Lexical Processing: John run+s. (John: P-N; run+s: V 3-pre or N plu)
• Syntactic Analysis: S → NP VP; NP → P-N (John); VP → V (run)
• Semantic Analysis: Pred: RUN, Agent: John
• Context processing, Interpretation: John is a student. He runs.

General Framework of NLP: processing steps
• Morphological and Lexical Processing: Tokenization, Part of Speech Tagging, Inflection/Derivation, Compounding
• Term recognition (Ananiadou)
• Syntactic Analysis
• Semantic Analysis
• Context processing, Interpretation: Domain Analysis (Appelt:1999)

Difficulties of NLP
(1) Robustness: Incomplete Knowledge
  – Morphological and Lexical Processing: Incomplete Lexicons
    • Open class words
    • Terms (term recognition)
    • Named Entities: company names, locations, numerical expressions
  – Syntactic Analysis: Incomplete Grammar
    • Syntactic Coverage
    • Domain Specific Constructions
    • Ungrammatical Constructions
  – Semantic Analysis, Context processing, Interpretation: Incomplete Domain Knowledge, Interpretation Rules
    • Predefined Aspects of Information
(2) Ambiguities: Combinatorial Explosion
  – Morphological and Lexical Processing: most words in English are ambiguous in terms of their parts of speech
    • runs: v/3pre, n/plu
    • clubs: v/3pre, n/plu and two meanings
  – Syntactic Analysis: Structural Ambiguities
  – Semantic Analysis: Predicate-argument Ambiguities

Structural Ambiguities
(1) Attachment Ambiguities
  – John bought a car with large seats.
  – John bought a car with $3000.
  – The manager of Yaxing Benz, a Sino-German joint venture
  – The manager of Yaxing Benz, Mr. John Smith
(2) Scope Ambiguities
  – young women and men in the room
(3) Analytical Ambiguities
  – Visiting relatives can be boring.

Semantic Ambiguities
(1) John bought a car with Mary. $3000 can buy a nice car.
(2) Every man loves a woman.
• Co-reference Ambiguities

Note: Ambiguities vs Robustness
• More comprehensive knowledge (big dictionaries, comprehensive grammar): More Robust
• More comprehensive knowledge: More ambiguities
• Adaptability: Tuning, Learning

Framework of IE
• IE as compromise NLP

Techniques in IE
(1) Domain Specific Partial Knowledge: Knowledge relevant to information to be extracted
(2) Ambiguities: Ignoring irrelevant ambiguities; Simpler NLP techniques
(3) Robustness: Coping with incomplete dictionaries (open class words); Ignoring irrelevant parts of sentences
(4) Adaptation Techniques: Machine Learning, Trainable systems

General Framework of NLP: techniques used in IE
• Part of Speech Tagger: FSA rules, Statistic taggers (95 %)
• Open class words: Named entity recognition (e.g. Locations, Persons, Companies, Organizations, Position names)
  – Local Context, Statistical Bias
  – F-Value 90, Domain Dependent
• Domain specific rules: <Word><Word>, Inc.; Mr. <Cpt-L>. <Word>
• Machine Learning: HMM, Decision Trees
• Rules + Machine Learning

FASTUS
Based on finite state automata (FSA)
1. Complex Words: Recognition of multi-words and proper names
2. Basic Phrases: Simple noun groups, verb groups and particles
3. Complex Phrases: Complex noun groups and verb groups
4. Domain Events: Patterns for events of interest to the application. Basic templates are to be built.
5. Merging Structures: Templates from different parts of the texts are merged if they provide information about the same entity or event.

Using NLP
• Strzalkowski (in Reader)
[Diagram: Text → NLP → repres → Dbase search; NLP: TAGGER → PARSER → TERMS]

Using NLP
INPUT SENTENCE
The former Soviet President has been a local hero ever since a Russian tank invaded Wisconsin.
TAGGED SENTENCE
The/dt former/jj Soviet/jj President/nn has/vbz been/vbn a/dt local/jj hero/nn ever/rb since/in a/dt Russian/jj tank/nn invaded/vbd Wisconsin/np ./per
TAGGED & STEMMED SENTENCE
the/dt former/jj soviet/jj president/nn have/vbz be/vbn a/dt local/jj hero/nn ever/rb since/in a/dt russian/jj tank/nn invade/vbd wisconsin/np ./per
PARSED SENTENCE
[assert [[perf [have]][[verb[BE]] [subject [np[n PRESIDENT][t_pos THE] [adj[FORMER]][adj[SOVIET]]]] [adv EVER] [sub_ord[SINCE [[verb[INVADE]] [subject [np [n TANK][t_pos A] [adj [RUSSIAN]]]] [object [np [name [WISCONSIN]]]]]]]]]
EXTRACTED TERMS & WEIGHTS
President 2.623519
soviet 5.416102
President+soviet 11.556747
president+former 14.594883
Hero 7.896426
hero+local 14.314775
Invade 8.435012
tank 6.848128
Tank+invade 17.402237
tank+russian 16.030809
Russian 7.383342
wisconsin 7.785689

Same Sentence, different sys
INPUT SENTENCE
The former Soviet President has been a local hero ever since a Russian tank invaded Wisconsin.
TAGGED SENTENCE (using uptagger from Tsujii)
The/DT former/JJ Soviet/NNP President/NNP has/VBZ been/VBN a/DT local/JJ hero/NN ever/RB since/IN a/DT Russian/JJ tank/NN invaded/VBD Wisconsin/NNP ./.
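
A rough sketch of how a tagged sentence like the one above could yield head+modifier phrase terms such as hero+local or tank+russian. This is a simplification that uses only runs of adjacent adjective and noun tags; it is not the parser-based method from the Strzalkowski paper, it produces no weights, and the function names are made up for illustration.

```python
# Assumption: Penn Treebank style "word/TAG" tokens, as in the tagged sentence above.
TAGGED = ("The/DT former/JJ Soviet/NNP President/NNP has/VBZ been/VBN a/DT "
          "local/JJ hero/NN ever/RB since/IN a/DT Russian/JJ tank/NN "
          "invaded/VBD Wisconsin/NNP ./.")


def parse_tagged(text):
    """Split whitespace-separated 'word/TAG' tokens into (word, tag) pairs."""
    return [tuple(token.rpartition("/")[::2]) for token in text.split()]


def head_modifier_pairs(tagged):
    """Pair the last word of each adjective/noun run (the head) with its modifiers.

    Hypothetical heuristic: a maximal run of JJ*/NN* tags is treated as a
    simple noun group whose final word is the head.
    """
    pairs, run = [], []
    # A sentinel token flushes a run that reaches the end of the sentence.
    for word, tag in tagged + [("", "END")]:
        if tag.startswith(("JJ", "NN")):
            run.append(word.lower())
            continue
        if len(run) > 1:
            head = run[-1]
            pairs.extend(f"{head}+{modifier}" for modifier in run[:-1])
        run = []
    return pairs


phrase_terms = head_modifier_pairs(parse_tagged(TAGGED))
print(phrase_terms)
```

Terms such as tank+invade need the subject/verb relations from a real parse, which this heuristic does not attempt.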

Same Sentence, different sys
CHUNKED Sentence (chunkparser – Tsujii)
(TOP (S (NP (DT The) (JJ former) (NNP Soviet) (NNP President) ) (VP (VBZ has) (VP (VBN been) (NP (DT a) (JJ local) (NN hero) ) (ADVP (RB ever) ) (SBAR (IN since) (S (NP (DT a) (JJ Russian) (NN tank) ) (VP (VBD invaded) (NP (NNP Wisconsin) ) ) ) ) ) ) (. .) ) )

Same Sentence, different sys
Enju Parser

| Word | Base | POS | Base POS | Position | Relation | Arg word | Arg base | Arg POS | Arg base POS |
|---|---|---|---|---|---|---|---|---|---|
| ROOT | ROOT | ROOT | ROOT | -1 | ROOT | been | be | VBN | VB |
| been | be | VBN | VB | 5 | ARG1 | President | president | NNP | NN |
| been | be | VBN | VB | 5 | ARG2 | hero | hero | NN | NN |
| a | a | DT | DT | 6 | ARG1 | hero | hero | NN | NN |
| a | a | DT | DT | 11 | ARG1 | tank | tank | NN | NN |
| local | local | JJ | JJ | 7 | ARG1 | hero | hero | NN | NN |
| The | the | DT | DT | 0 | ARG1 | President | president | NNP | NN |
| former | former | JJ | JJ | 1 | ARG1 | President | president | NNP | NN |
| Russian | russian | JJ | JJ | 12 | ARG1 | tank | tank | NN | NN |
| Soviet | soviet | NNP | NNP | 2 | MOD | President | president | NNP | NN |
| invaded | invade | VBD | VB | 14 | ARG1 | tank | tank | NN | NN |
| invaded | invade | VBD | VB | 14 | ARG2 | Wisconsin | wisconsin | NNP | NN |
| has | have | VBZ | VB | 4 | ARG1 | President | president | NNP | NN |
| has | have | VBZ | VB | 4 | ARG2 | been | be | VBN | VB |
| since | since | IN | IN | 10 | MOD | been | be | VBN | VB |
| since | since | IN | IN | 10 | ARG1 | invaded | invade | VBD | VB |
| ever | ever | RB | RB | 9 | ARG1 | since | since | IN | IN |

NLP & IR
• Indexing
  – Use of NLP methods to identify phrases
    • Test weighting schemes for phrases
  – Use of more sophisticated morphological analysis
• Searching
  – Use of two-stage retrieval
    • Statistical retrieval
    • Followed by more sophisticated NLP filtering

NLP & IR
• Lewis and Sparck Jones suggest research in three areas
  – Examination of the words, phrases and sentences that make up a document description and express the combinatory, syntagmatic relations between single terms
  – The classificatory structure over the document collection as a whole, indicating the paradigmatic relations between terms and permitting controlled vocabulary indexing and searching
  – Using NLP-based methods for searching and matching

NLP & IR Issues
• Is natural language indexing using more NLP knowledge needed?
• Or should controlled vocabularies be used?
• Can NLP in its current state provide the improvements needed?
• How to test?

NLP & IR
• New “Question Answering” track at TREC has been exploring these areas
  – Usually statistical methods are used to retrieve candidate documents
  – NLP techniques are used to extract the likely answers from the text of the documents

Mark’s idle speculation
From Mark Sanderson, University of Sheffield
• What people think is going on always
• What’s usually actually going on
[Figure labels on both slides: Keywords, NLP]

What we really need is…
• The reason NLP fails to help is that the machine lacks the human flexibility of interpretation and knowledge of context and content
• So what about AI?
  – There are many debates on whether human-like AI is or is not possible
    • “the question of whether machines can think is no more interesting than the question of whether submarines can swim” – Edsger Dijkstra

Building Watson and the Jeopardy Challenge
Slides based on the article by David Ferrucci, et al. “Building Watson: An Overview of the DeepQA Project” in AI Magazine, Fall 2010

The Challenge
• “the open domain QA is attractive as it is one of the most challenging in the realm of computer science and artificial intelligence, requiring a synthesis of information retrieval, natural language processing, knowledge representation and reasoning, machine learning and computer-human interfaces.”
  – “Building Watson: An overview of the DeepQA Project”, AI Magazine, Fall 2010

Technologies
• Parsing
• Question Classification
• Question Decomposition
• Automatic Source Acquisition and Evaluation
• Entity and Relation detection
• Logical form generation
• Knowledge representation
• Reasoning

Goals
• “To create general-purpose, reusable natural language processing (NLP) and knowledge representation and reasoning (KRR) technology that can exploit as-is natural language resources and as-is structured knowledge rather than to curate task-specific knowledge as resources”

Excluded Jeopardy categories
• Audiovisual questions (where part of the clue is a picture, recording, or video)
• Special Instruction Questions (where the category or clues require a special verbal explanation from the host)
• All others, including “puzzle” clues, are considered fair game

Approaches
• Tried adapting and combining systems used for the TREC QA task, but these never worked adequately for the Jeopardy tests
• Started a collaborative effort with academic QA researchers called “Open Advancement of Question Answering” (OAQA)

DeepQA
• The DeepQA system finally developed (and continuing to be developed) is described as:
  – A massively parallel probabilistic evidence-based architecture
  – Uses over 100 different techniques for analyzing natural language, identifying sources, finding and generating hypotheses, finding and scoring evidence, and merging and ranking hypotheses
  – What is important is how these are combined

DeepQA
• Massive parallelism: Exploits massive parallelism in the consideration of multiple interpretations and hypotheses
• Many Experts: Facilitates the integration, application and contextual evaluation of a wide range of loosely coupled probabilistic question and content analytics
• Pervasive confidence estimation: No component commits to an answer; all components produce features and associated confidences, scoring different question and content interpretations.
  – An underlying confidence-processing substrate learns how to stack and combine the scores.
• Integrate shallow and deep knowledge: Balance the use of strict semantics and shallow semantics, leveraging many loosely formed ontologies

DeepQA
[Figure: DeepQA High-Level Architecture, from “Building Watson”, AI Magazine, Fall 2010]

Question Analysis
• Attempts to discover what kind of question is being asked (usually meaning the desired type of result, or LAT: Lexical Answer Type)
  – E.g. “Who is…” needs a person, “Where is…” needs a location.
• DeepQA uses a number of experts and combines the results using the confidence framework

Hypothesis Generation
• Takes the results of Question Analysis and produces candidate answers by searching the system’s sources and extracting answer-sized snippets from the search results.
• Each candidate answer plugged back into the question is considered a hypothesis
• A “lightweight scoring” is performed to trim down the hypothesis set
  – What is the likelihood of the candidate answer being an instance of the LAT from the first stage?
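
A toy sketch of the hypothesis generation step described above: each candidate is plugged back into the clue, and candidates unlikely to be an instance of the LAT are trimmed. The type-confidence table, its values, the clue text and the 0.5 threshold are all invented for illustration; this is not how DeepQA computes these likelihoods.

```python
from dataclasses import dataclass

# Hypothetical evidence: estimated likelihood that a candidate is an instance
# of a given answer type. All values here are made up for the example.
TYPE_CONFIDENCE = {
    ("Edsger Dijkstra", "person"): 0.95,
    ("Alan Turing", "person"): 0.90,
    ("Sheffield", "person"): 0.05,
    ("Sheffield", "location"): 0.90,
}


@dataclass
class Hypothesis:
    candidate: str
    statement: str         # the clue with the candidate plugged back in
    lat_confidence: float  # likelihood that the candidate matches the LAT


def generate_hypotheses(clue, lat, candidates, threshold=0.5):
    """Lightweight scoring: keep candidates likely to be an instance of the LAT."""
    kept = []
    for candidate in candidates:
        confidence = TYPE_CONFIDENCE.get((candidate, lat), 0.0)
        if confidence >= threshold:
            statement = clue.replace("___", candidate)
            kept.append(Hypothesis(candidate, statement, confidence))
    return sorted(kept, key=lambda h: h.lat_confidence, reverse=True)


hypotheses = generate_hypotheses(
    "___ compared thinking machines to swimming submarines",
    lat="person",
    candidates=["Sheffield", "Alan Turing", "Edsger Dijkstra"],
)
for h in hypotheses:
    print(f"{h.lat_confidence:.2f}  {h.statement}")
```

Only the surviving hypotheses would go on to the more expensive evidence scoring stage.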

Hypothesis and Evidence Scoring
• Candidate answers that pass the lightweight scoring then undergo a rigorous evaluation process that involves gathering additional supporting evidence for each candidate answer, or hypothesis, and applying a wide variety of deep scoring analytics to evaluate the supporting evidence
• This involves more retrieval and scoring (one method used involves IDF scores of common words between the hypothesis and the source passage)

Final Merging and Ranking
• Based on the deep scoring, the hypotheses and their supporting sources are ranked and merged to select the single best-supported hypothesis
• Equivalent candidate answers are merged
• After merging, the system must rank the hypotheses and estimate confidence based on their merged scores. (A machine-learning approach using a set of known training answers is used to build the ranking model)

Running DeepQA
• A single question on a single-processor implementation of DeepQA typically could take up to 2 hours to complete
• The Watson system used a massively parallel version of the UIMA framework and Hadoop (both open source from Apache now :) that was running 2500 processors in parallel
• They won the public Jeopardy Challenge (easily it seemed)
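
One of the evidence scorers mentioned under Hypothesis and Evidence Scoring uses IDF scores of the words shared between a hypothesis and a source passage. A minimal sketch of that idea follows, assuming whitespace tokenization and a plain log(N/df) IDF computed over the passages themselves; the passages and hypothesis are invented, and the actual DeepQA scorer is not described in these slides.

```python
import math


def idf_table(passages):
    """Compute log(N / df) for every term in a small passage collection."""
    n = len(passages)
    doc_freq = {}
    for passage in passages:
        for term in set(passage.lower().split()):
            doc_freq[term] = doc_freq.get(term, 0) + 1
    return {term: math.log(n / df) for term, df in doc_freq.items()}


def overlap_score(hypothesis, passage, idf):
    """Sum the IDF of terms that occur in both the hypothesis and the passage."""
    shared = set(hypothesis.lower().split()) & set(passage.lower().split())
    return sum(idf.get(term, 0.0) for term in shared)


# Made-up passages and hypothesis, only to exercise the functions.
passages = [
    "dijkstra wrote about submarines and machines",
    "the tank invaded wisconsin",
    "machines can think said the speaker",
]
hypothesis = "dijkstra compared machines to submarines"

idf = idf_table(passages)
scores = [overlap_score(hypothesis, passage, idf) for passage in passages]
best = max(range(len(passages)), key=lambda i: scores[i])
print(passages[best])
```

Rare shared words (here "dijkstra", "submarines") contribute more than common ones, so a passage that shares only frequent words scores low.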