the slides

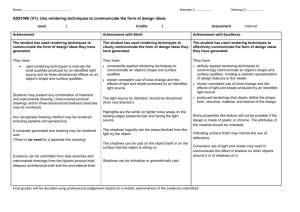

Contribution to the study of visual, auditory and haptic rendering of information of contact in virtual environments
9/12/2008
Jean Sreng
Advisors: Claude Andriot, Anatole Lécuyer
Director: Bruno Arnaldi

Introduction
• Context: manipulation of solid objects in Virtual Reality
• Example applications: industrial virtual assembly / disassembly / maintenance
• Focus: perception, simulation and rendering of contacts between virtual objects

Outline
• State of the art on perception, simulation and rendering of contact
• Contributions
  - Integrated 6DOF multimodal rendering approach
  - Visual rendering of multiple contacts
  - Spatialized haptic rendering of contact
• Conclusion

Human perception of contact
• Visual perception of contact:
  - Stereoscopy (Hu et al. 2000)
  - Motion parallax (Wanger et al. 1992)
  - Shadows (Wanger et al. 1992, Hu et al. 2002)
• Auditory perception of contact:
  - Contact properties can be directly perceived (Gaver 1993)
  - Contact sounds convey information about shape and material (Klatzky 2000, Rocchesso 2001)

Haptic perception
• Haptic perception provides an intuitive way to feel the contact (Loomis et al. 1993):
  - Tactile perception (patterns at the surface of the skin)
  - Kinesthetic perception (position and forces)
• Physical properties can be perceived through the contact (Klatzky et al. 2003):
  - Shape / Texture / Temperature
  - Weight / Contact forces
• Perception of contact features through vibrations:
  - Material (Okamura et al. 1998)
  - Texture (Lederman et al. 2001)

Multimodal perception of contact
• Known interactions between modalities:
  - Visual-auditory interaction. Ex: sound can shift the perception of impact (Sekuler et al. 1997)
  - Visual-haptic interaction. Ex: pseudo-haptic feedback (Lécuyer et al. 2002)
  - Auditory-haptic interaction. Ex: sound can modulate the perception of roughness (Peeva et al. 1997)
[Figure labels: “Stiff”, “Soft”]

Simulation of contact
• Multiple contact models (impact / friction):
  - Rigid (Newton/Huygens impact law, Coulomb/Amontons friction law)
  - Locally deformable (Hunt-Crossley impact law, LuGre friction law)
• Multiple simulation methods:
  - Collision detection: VPS (McNeely 1999), LMD (Johnson 2003)
  - Physical simulation: constraint-based (Baraff 1989), penalty (Moore 1988)

Visual rendering
• Visual rendering of the information of contact:
  - Proximity: color (McNeely et al. 2006)
  - Contact: color (Kitamura et al. 1998), glyph (Redon et al. 2002)
  - Force: glyph (Lécuyer et al. 2002)

Auditory rendering
• Realistic rendering of contact sounds:
  - Specific: impact / friction / rolling
  - Different techniques: FEM (O’Brien 2003), modal synthesis (Van den Doel 2001)
• Symbolic rendering (Richard et al. 1994, Massimino 1995, Lécuyer et al. 2002):
  - Associate a piece of information with a sound effect
  - Information: distances / contact / forces
  - Sound effect: amplitude / frequencies

Haptic display of contact
• Haptic devices (Burdea 1996):
  - Force feedback
  - Tactile feedback
• Haptic rendering of contact:
  - Closed loop (McNeely et al. 1999, Johnson 2003): trade-off between stability and stiffness
  - Open loop (Kuchenbecker et al. 2006): improves the realism of impacts
[Figure labels: Device, Impact, Virtual Env.]

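To make the contact laws named under "Simulation of contact" more concrete, here is a minimal Python sketch of a penalty-style contact force that combines a Hunt-Crossley normal force with kinetic Coulomb friction. It is an illustration under stated assumptions, not the simulation used in the thesis: the function names, the stiffness `k`, the damping coefficient, the exponent `n = 1.5` and the friction coefficient `mu` are all hypothetical placeholder values.

```python
import math


def hunt_crossley_normal_force(depth, depth_rate, k=1.0e4, damping=1.5e2, n=1.5):
    """Normal force magnitude from the Hunt-Crossley law.

    depth      -- penetration depth (> 0 when the objects overlap)
    depth_rate -- time derivative of the penetration depth
    k, damping, n -- hypothetical material parameters (assumed values)
    """
    if depth <= 0.0:
        return 0.0  # no overlap, no contact force
    force = k * depth**n + damping * depth**n * depth_rate
    return max(force, 0.0)  # clamp so the contact never pulls the objects together


def coulomb_friction_force(normal_force, tangential_velocity, mu=0.3, eps=1e-9):
    """Kinetic Coulomb friction: magnitude mu * N, opposing the sliding direction."""
    speed = math.sqrt(sum(c * c for c in tangential_velocity))
    if speed < eps:
        # Sticking case is not modelled in this sketch.
        return tuple(0.0 for _ in tangential_velocity)
    scale = -mu * normal_force / speed
    return tuple(scale * c for c in tangential_velocity)


if __name__ == "__main__":
    normal = hunt_crossley_normal_force(depth=0.002, depth_rate=0.05)
    friction = coulomb_friction_force(normal, (0.1, 0.0))
    print(normal, friction)
```

Because the damping term is scaled by `depth**n`, the force starts from zero at the first instant of contact, which is the usual motivation for preferring this law over a plain spring-damper in penalty-based simulation.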
Objectives of this thesis
• Improve the simulation, rendering and perception of contacts in virtual environments
• Protocol:
  - Integrated 6DOF approach for multisensory rendering techniques (integrated 6DOF multimodal rendering)
  - Study of rendering techniques to improve the perception of contact position (visual rendering of multiple contacts, spatialized haptic rendering)
    - Hypothesis of improvement
    - Experimental implementation
    - Experimental evaluation

Outline
• Integrated 6DOF multimodal rendering
• Visual rendering of multiple contacts
• Spatialized haptic rendering

Integrated 6DOF multimodal rendering: Objectives
• Multiplicity of techniques:
  - Contact simulation
  - Sensory rendering
• How can we integrate all techniques seamlessly, independently of the simulation?
• Contribution / Overview:
  - Contact formulation (states / events)
  - Examples of contact rendering based on this formulation: visual, auditory, tactile, force feedback

Contact formulation
• Simple contact formulation based on:
  - Proximity points a, b
  - Force f
• From this formulation:
  - Contact states
  - Temporal evolution of contact states (such as events):
    - Higher-level information
    - Adapted to many specific rendering techniques
[Figure labels: Free motion, Impact, Contact, Detachment]

Determination of states and events
• The contact condition:
• The events are defined by:
  - Impact:
  - Detachment:
[Figure labels: Impact, Contact, Detachment, Local linear velocity, Normal]

Multimodal rendering architecture
• Superimpose state and event information

Example of visual rendering
[Figure panels: Impact, Contact, Detachment]

Example of auditory rendering

Multimodal rendering platform
• Multimodal:
  - Visual
  - Auditory
  - Tactile
  - Force feedback
© Hubert Raguet / CNRS photothèque

Preliminary conclusion
• We proposed a contact formulation (proximity / force):
  - Contact states and events
• We developed a multimodal rendering architecture:
  - Visual (particles / pen)
  - Auditory (modal synthesis / spatialized)
  - Tactile (modal synthesis)
  - 6DOF haptic enhancement (open loop)

Visual rendering of multiple contacts: Objectives
• Context: complex-shaped objects
  - Multiple contacts
  - Difficult interaction
• Help the user by providing position information
• Contribution / Overview:
  - Display the information of proximity / contact / forces
    - Glyphs
    - Lights
  - Subjective evaluation

Visual rendering of multiple contacts
• Visualizing the positions of multiple proximities / contacts / forces
[Figure: scale running from proximity (FAR to NEAR) through contact to contact forces (LOW to HIGH)]

Visual rendering using glyphs
[Figure: glyphs along the same scale, from proximity (FAR to NEAR) through contact to contact forces (LOW to HIGH)]

Glyph filtering
• Reduce the number of displayed glyphs
• Determine the “relevance” of each glyph based on the movement
• The relevance is determined by comparing:
  - The local velocity v
  - The local normal d

Visual rendering using lights
• Two types of lights:
  - Spherical lights
  - Conical lights

Subjective evaluation
• Objective: determine the users’ preferences among the different techniques
• Procedure: participants were asked to perform an industrial assembly operation
  - Without visual cues
  - With each visual cue
• Conducted on 18 subjects
• Participants filled in a subjective questionnaire:
  - Which effect: glyph / light / color change / size change / deformation
  - For which information: forces / distances / blocking / focus of attention

Results
• The visual effects were globally well appreciated
[Figure: mean ranking (lower is better, scale 0 to 5) of the Size, Color, Deformation and Lights effects for Distance, Force and Focus information]
• Significant effects:
  - Glyph size effect globally appreciated (distance / force)
  - Glyph deformation effect appreciated for providing force information
  - Light effect appreciated for attracting visual attention

Preliminary conclusion
• We proposed a visual rendering technique to display multiple contact information:
  - Display proximity / contact / force
  - Using glyphs / lights
• We presented a filtering technique to reduce the number of glyphs displayed
• We conducted a subjective evaluation:
  - Glyph size: proximity / force
  - Glyph deformation: force
  - Lights: focus the visual attention

Spatialized haptic rendering: Objectives
• Context: complex-shaped objects
• Help the user by providing position information
• Contact position information is already provided using:
  - Visual rendering (particles / glyphs / lights)
  - Auditory rendering (spatialized sound)
• Can we provide this position information using haptic rendering?
• Contribution / Overview:
  - Haptic rendering technique based on vibrations
    - Perceptive evaluation in a 1DOF case
  - 6DOF haptic rendering technique
    - Perceptive evaluation to determine rendering parameters
    - Subjective evaluation in a 6DOF case

Haptic rendering of contact position
• An impact between objects produces:
  - A contact reaction force
  - High-frequency transient vibrations
• These high-frequency transient vibrations depend on:
  - The object’s material (Okamura et al. 1998)
  - The object’s geometry
  - The impact position
• Is it possible to perceive the impact position using these vibrations?

Vibrations depending on impact positions
• Examine the vibrations produced by a simple object: a cantilever beam
• The vibrations depend on the impact position

Simulation of vibrations (Euler-Bernoulli)
• General solution: Euler-Bernoulli model (EB)

Simplified vibration patterns
• Simplified patterns based on the physical behavior:
  - Possibly easier to perceive?
  - Simplified computation
• Chosen model: exponentially damped sinusoid
  - Amplitude changes with impact position
  - Frequency changes with impact position
  - Both amplitude and frequency change
[Figure: Am, Fr, AmFr (consistent) and AmCFr (conflicting) patterns for a near impact and a far impact]

Evaluation
• Objective: “Determine whether it is possible to perceive the impact position using vibration”
• Population: 15 subjects
• Apparatus:
  - Virtuose6D device
  - Sound-blocking noise headphones

Procedure
• Task: perform two successive impacts. “Between these two impacts, which one was closest to the hand?”
• 6 models:
  - 2 realistic models (Euler-Bernoulli): EB1, EB2
  - 4 simplified models: Am, Fr, AmFr, AmCFr
• 4 impact positions
• 8 random repetitions
• Total of 576 trials (40 min)

Results of quantitative evaluation
• “How well was the subject able to determine the impact position by sensing the vibration?”
• Overall performance:
  - ANOVA significant (p < 0.007)
  - Paired t-tests (p < 0.05): Am, EB1, EB2, AmCFr, Fr, EB1, EB2
[Figure: performance per model; legend: realistic (Euler-Bernoulli), simplified]

Results of qualitative evaluation
• “How was the subjective feeling of realism?”
• Rating of the impact realism:
  - Paired t-tests (p < 0.05): Am, EB1, EB2, Fr, AmFr, EB2, Fr, AmCFr
[Figure: realism rating per model; legend: realistic (Euler-Bernoulli), simplified]

Quantitative evaluation and inversion
• Many participants inverted the interpretation of the vibrations
[Figure: sensed vs. perceived position; panels: Normal, Inverted]

Discussion
• Weak overall inter-subject correlation:
  - Each subject seems to have his/her own interpretation (inversion or not)
• Strong intra-subject consistency:
  - Each subject seems very consistent within his/her own interpretation
• Several strong inter-subject correlations between models:
  - Several models were interpreted the same way
• Vibrations can be used to convey impact position information

6DOF spatialized haptic rendering
• Generalize the previous result to 6DOF manipulation:
  - Virtual beam model

Manipulation point and circle of confusion
• Different impact positions can generate the same haptic feedback

Perceptive evaluation
• Objective: “Is it possible to perceive such complex vibrations? Is it possible to perceive the vibration direction?”
• Determine the optimal amplitude / frequency vibration parameters allowing good direction discrimination
• Population: 10 subjects
• Test among: (4 amplitudes a) x (4 frequencies f)

Procedure and plan
• “On which axis was the vibration applied?”
• 15 blocks of 4 x 4 x 3 = 48 vibrations each, 720 trials in total (35 min)

Perceptive evaluation: results
• Best performances:
  - Low frequencies
  - High amplitudes
• Strategy:
  - Most participants relied on intuitive perception
[Figure: performance over 4 frequencies f and 4 amplitudes a]

Subjective evaluation
• Objective: evaluation in a real case
• Population: 10 subjects
• Test without and with vibrations
• Subjective ratings:
  - Impact realism
  - Impact position
  - Comfort
• This evaluation provides encouraging results

Preliminary conclusion
• We proposed a method to provide impact position information directly on the haptic channel, using vibrations based on a vibrating beam
• We conducted a 1DOF study and perceptive evaluation (“realistic” / “simplified” models)
  - Simplified models achieved better performance
• We extended this method to 6DOF manipulation:
  - Perceptive study on the perception of vibration direction: low frequencies / high amplitudes are better
  - Subjective study on a “real case”: better subjective perception of impact position

Conclusion
• Contributions:
  - Integrated 6DOF approach for multisensory rendering techniques (integrated 6DOF multimodal rendering)
  - Study of rendering techniques to improve the perception of contact position (visual rendering of multiple contacts, spatialized haptic rendering)
    - Visual rendering technique to display multiple contacts
    - Haptic rendering technique to provide position information using vibrations

Perspective and future work
• Computer side:
  - Higher level of information of contact (mobility)
  - Rendering improvements (visual, auditory, tactile, force feedback)
  - Deeper investigation of vibratory tactile rendering
• Human side:
  - Perceptive studies: multimodal perceptive effects / pseudo-haptics
  - Quantitative evaluation of the rendering techniques in 6DOF manipulations

Publications
• Jean Sreng, Anatole Lécuyer, Christine Mégard, and Claude Andriot. Using Visual Cues of Contact to Improve Interactive Manipulation of Virtual Objects in Industrial Assembly/Maintenance Simulations. IEEE Transactions on Visualization and Computer Graphics, 12(5):1013–1020, 2006.
• Jean Sreng, Florian Bergez, Jérémie Legarrec, Anatole Lécuyer, Claude Andriot. Using an event-based approach to improve the multimodal rendering of 6DOF virtual contact. In Proceedings of ACM Symposium on Virtual Reality Software and Technology, pages 165–173, 2007.
• Jean Sreng, Anatole Lécuyer, Claude Andriot. Using Vibration Patterns to Provide Impact Position Information in Haptic Manipulation of Virtual Objects. In Proceedings of EuroHaptics, pages 589–598, 2008.
• Jean Sreng, Anatole Lécuyer, Claude Andriot. Spatialized Haptic Rendering: Improving 6DOF Haptic Simulations with Virtual Impact Position Information. In Proceedings of IEEE Virtual Reality, 2009. Accepted paper.
• Jean Sreng, Legarrec, Anatole Lécuyer, Claude Andriot. Approche Evénementielle pour l’Amélioration du Rendu Multimodal 6DDL de Contact Virtuel. Actes des journées de l’Association Française de Réalité Virtuelle, pages 97–104, 2007.
• Jean Sreng, Anatole Lécuyer. Perception tactile de la localisation spatiale des contacts. Sciences et Technologies pour le Handicap, 3(1), 2009. Invited paper.

Thank you. Questions?
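
As a supplementary illustration of the simplified vibration patterns from the 1DOF study (Am, Fr, AmFr, AmCFr), the following Python sketch generates an exponentially damped sinusoid whose amplitude and/or frequency depends on a normalized impact position. Everything numeric here is an assumption made for the example: the amplitude and frequency ranges, the decay rate, the linear mapping, and in particular the reading of "consistent" (both parameters vary in the same direction) versus "conflicting" (frequency mapping reversed) are hypothetical and are not taken from the slides.

```python
import math


def lerp(lo, hi, u):
    """Linear interpolation between lo and hi for u in [0, 1]."""
    return lo + (hi - lo) * u


def pattern_parameters(model, position, amp_range=(0.2, 1.0), freq_range=(50.0, 200.0)):
    """Return (amplitude, frequency_hz) for a normalized impact position.

    position -- 0.0 = impact near the hand, 1.0 = far impact (assumed convention)
    amp_range, freq_range -- hypothetical ranges, chosen only for illustration
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie in [0, 1]")
    mid_amp = lerp(amp_range[0], amp_range[1], 0.5)
    mid_freq = lerp(freq_range[0], freq_range[1], 0.5)
    if model == "Am":      # only the amplitude depends on the position
        return lerp(amp_range[0], amp_range[1], position), mid_freq
    if model == "Fr":      # only the frequency depends on the position
        return mid_amp, lerp(freq_range[0], freq_range[1], position)
    if model == "AmFr":    # both vary in the same direction (assumed "consistent")
        return (lerp(amp_range[0], amp_range[1], position),
                lerp(freq_range[0], freq_range[1], position))
    if model == "AmCFr":   # frequency mapping reversed (assumed "conflicting")
        return (lerp(amp_range[0], amp_range[1], position),
                lerp(freq_range[0], freq_range[1], 1.0 - position))
    raise ValueError(f"unknown model: {model}")


def vibration(t, model, position, decay_rate=60.0):
    """Exponentially damped sinusoid sampled at time t (seconds after impact)."""
    amplitude, frequency_hz = pattern_parameters(model, position)
    return amplitude * math.exp(-decay_rate * t) * math.sin(2.0 * math.pi * frequency_hz * t)


if __name__ == "__main__":
    for model in ("Am", "Fr", "AmFr", "AmCFr"):
        near = pattern_parameters(model, 0.0)
        far = pattern_parameters(model, 1.0)
        print(model, "near:", near, "far:", far)
```

Sampling `vibration` at the update rate of the haptic device would give the open-loop transient to superimpose on the contact force; the mapping direction could be flipped per user, given that many participants inverted their interpretation.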