
Telecommunications Engineering

Topic 3: Modulation and FDMA

James K Beard, Ph.D.

(215) 204-7932

jkbeard@temple.edu

http://astro.temple.edu/~jkbeard/

February 14, 2005

Topic 3

[Chart: Attendance by class date, 19-Jan through 27-Apr, scale 0 to 25]

Topics

The Survey
Homework
  Problem 3.30, p. 177, adjacent channel interference
  Problem 3.35, p. 178: look at part (a); part (b) was done in class
  Problem 3.36, p. 178: an intermediate-difficulty problem in bit error rate using MSK
Topics
  Why follow sampling with coding?
  Shannon's information theory


Survey Thumbnail

Nine completed surveys
Six incomplete or just looks
Five did not open the survey
Does selection bias the results?
  Only 45% of the class gave complete results
  The other 55% could be better, worse, or more of the same


Multiple Choice Questions

All replies are on a 1-5 scale
No answers were 1 or 5
Questions
  My background is appropriate
  I understand sampled time/frequency domains
  I am comfortable with readings and text


Multiple Choice Summary

[Chart: percentage of responses for Readings, Time/Frequency, and Background, 0% to 100%]

Study Difficulties Reported

Problems
  2.5, p. 29
  2.6, p. 33
  2.14, p. 67
  2.20, p. 77
  2.22, p. 81
Examples
  2.17, p. 75
  Theme example 1, p. 82


Suggestions

More examples and homework problems worked through (already in progress)
Go over more difficult homework problems before they are assigned
Warn about and correct wrong answers (AIP)
Discuss WHY as well as HOW


Survey Summary

Everybody is OK
  Based on 40% sample
  But nobody answered 5's
Need
  More coverage of how and why
  Fill in for prerequisites


Homework

Problem 3.30, p. 177, adjacent channel interference
Problem 3.35, p. 178: look at part (a); part (b) was done in class
Problem 3.36, p. 178: an intermediate-difficulty problem in bit error rate using MSK


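Problem 3.30, Page 177

Adjacent channel interference
  G(f): power spectral density of the input
  H(f): frequency response of the channel filter
  Δf: channel separation

$$\mathrm{ACI}(\Delta f) = \frac{\int_{-\infty}^{\infty} G(f)\,|H(f-\Delta f)|^2\,df}{\int_{-\infty}^{\infty} G(f)\,|H(f)|^2\,df}$$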


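Solution

Power of the signal in the correct filter
$$P_{CC} = \int_{-\infty}^{\infty} G(f)\,|H(f)|^2\,df$$
Power of the signal in the adjacent channel
$$P_{AC} = \int_{-\infty}^{\infty} G(f)\,|H(f-\Delta f)|^2\,df$$
so that ACI(Δf) = P_AC / P_CC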
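The ratio P_AC / P_CC can be evaluated numerically for any assumed spectrum and filter. This is a minimal sketch under illustrative assumptions not taken from the problem: unit-rate rectangular pulses, so G(f) = sinc²(f) with T = 1, and an ideal filter with |H(f)|² = 1 for |f| ≤ 0.5. The helper names `sinc2`, `brick`, and `aci` are hypothetical.

```python
import math

def sinc2(f):
    """sinc^2(f) = (sin(pi f) / (pi f))^2: PSD of unit rectangular pulses (T = 1, assumed)."""
    if f == 0.0:
        return 1.0
    s = math.sin(math.pi * f) / (math.pi * f)
    return s * s

def brick(f, half_width=0.5):
    """|H(f)|^2 of a hypothetical ideal filter passing |f| <= half_width."""
    return 1.0 if abs(f) <= half_width else 0.0

def aci(delta_f, psd=sinc2, h2=brick, f_max=50.0, n=100_000):
    """ACI(delta_f) = P_AC / P_CC, both integrals by the midpoint rule on [-f_max, f_max]."""
    df = 2.0 * f_max / n
    p_ac = p_cc = 0.0
    for i in range(n):
        f = -f_max + (i + 0.5) * df
        g = psd(f)
        p_ac += g * h2(f - delta_f)   # power leaking into the filter offset by delta_f
        p_cc += g * h2(f)             # power in the correct filter
    return p_ac / p_cc                # the common factor df cancels

for spacing in (1.0, 2.0, 4.0):
    print(f"delta_f = {spacing}: ACI = {10 * math.log10(aci(spacing)):.1f} dB")
```

A realistic channel filter (raised cosine, Gaussian) can be swapped in through the `h2` argument; the midpoint rule is crude but adequate for these smooth integrands.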


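Problem 3.35, p. 178, part (a)

Formulas in Table 3.4, page 159
Begin with BPSK:
$$P_e(\gamma) = \frac{1}{2}\operatorname{erfc}\left(\sqrt{\gamma}\right), \qquad \gamma = \frac{E_b}{N_0}$$
Integrate over the distribution of γ under Rayleigh fading:
$$p(\gamma) = \frac{1}{\gamma_0}\exp\left(-\frac{\gamma}{\gamma_0}\right), \qquad \gamma \ge 0$$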


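Evaluate the Integral

Average BER is
$$P = \int_0^{\infty} P_e(\gamma)\,p(\gamma)\,d\gamma = \frac{1}{2}\left(1 - \sqrt{\frac{\gamma_0}{1+\gamma_0}}\right) \approx \frac{1}{4\gamma_0} \quad \text{for large } \gamma_0$$
Evaluation of the integral is left as ETR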
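The closed form can be checked numerically without doing the integral by hand. This sketch assumes, as on the slide, coherent BPSK with P_e(γ) = ½ erfc(√γ) and an exponential pdf of γ with mean γ0; the function names are illustrative.

```python
import math

def pe_bpsk(gamma):
    """Bit error probability of coherent BPSK at a fixed Eb/N0 = gamma."""
    return 0.5 * math.erfc(math.sqrt(gamma))

def avg_ber_numeric(gamma0, n=100_000):
    """Average pe_bpsk over p(gamma) = exp(-gamma/gamma0) / gamma0.

    With u = exp(-gamma/gamma0) the integral becomes the integral of
    pe_bpsk(-gamma0 * ln u) over u in (0, 1), done here by the midpoint rule."""
    total = 0.0
    for i in range(n):
        u = (i + 0.5) / n
        total += pe_bpsk(-gamma0 * math.log(u))
    return total / n

def avg_ber_closed(gamma0):
    """Closed form 0.5 * (1 - sqrt(gamma0 / (1 + gamma0)))."""
    return 0.5 * (1.0 - math.sqrt(gamma0 / (1.0 + gamma0)))

for g0_db in (10, 20, 30):
    g0 = 10 ** (g0_db / 10)
    print(g0_db, avg_ber_numeric(g0), avg_ber_closed(g0), 1 / (4 * g0))
```

The last column shows how quickly the 1/(4γ0) approximation becomes accurate as the mean SNR grows.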


Problem 3.36, p. 178

Use MSK with a BER of 10^-4 or better
AWGN
  Use Table 3.4 or Figure 3.32, pp. 159-160
  SNR requirement is about 8.4 dB
Rayleigh fading
  Use Table 3.4, p. 159
  Solve for an SNR of about 34 dB
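Both requirements can be reproduced by solving the BER expressions for the SNR. This sketch assumes coherent MSK has the same bit error probability as BPSK, ½ erfc(√(Eb/N0)), and inverts the exact Rayleigh average from Problem 3.35; the results agree with the Question 5 spreadsheet values (8.398 dB and 2499.25). Function names are illustrative.

```python
import math

def ebn0_awgn(p_target):
    """Linear Eb/N0 at which 0.5 * erfc(sqrt(Eb/N0)) equals p_target (bisection)."""
    lo, hi = 0.0, 100.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if 0.5 * math.erfc(math.sqrt(mid)) > p_target:
            lo = mid          # BER still too high: more Eb/N0 is needed
        else:
            hi = mid
    return 0.5 * (lo + hi)

def snr_rayleigh(p_target):
    """Mean Eb/N0 solving 0.5 * (1 - sqrt(g / (1 + g))) = p_target exactly."""
    r = 1.0 - 2.0 * p_target  # r = sqrt(g / (1 + g))
    return r * r / (1.0 - r * r)

def db(x):
    return 10.0 * math.log10(x)

print(f"AWGN: {db(ebn0_awgn(1e-4)):.2f} dB, Rayleigh: {db(snr_rayleigh(1e-4)):.2f} dB")
```

The roughly 25 dB gap between the two results is the fading penalty that diversity and coding are meant to recover.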


Coding Follows Sampling

Sampling
  Simply converts the base signal to an elementary modulation form
  Formatting for performance is left to coding
Coding
  Source coding: removal of redundancy
  Channel coding: error detection and correction capability added


Shannon's Information Theory

First published in a BSTJ article in 1948
  Builds on Nyquist sampling theory
  Adds BER concepts to find the maximum flow of bits through a channel limited by
    Bandwidth
    SNR
Maximum channel capacity is
$$C_{\text{bits/s}} = BW \log_2\left(1 + \frac{P_T}{P_{\text{Noise}}}\right)$$
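The capacity formula is easy to evaluate directly. The 200 kHz bandwidth and 20 dB SNR below are hypothetical values chosen for illustration; `min_snr_db` simply inverts the formula for a required bit rate.

```python
import math

def capacity_bps(bandwidth_hz, p_t, p_noise):
    """Shannon capacity C = BW * log2(1 + P_T / P_Noise), in bits per second."""
    return bandwidth_hz * math.log2(1.0 + p_t / p_noise)

def min_snr_db(bit_rate, bandwidth_hz):
    """Smallest SNR (dB) at which bit_rate does not exceed the capacity of bandwidth_hz."""
    return 10.0 * math.log10(2.0 ** (bit_rate / bandwidth_hz) - 1.0)

# Hypothetical 200 kHz channel at 20 dB SNR (P_T / P_Noise = 100)
print(capacity_bps(200e3, 100.0, 1.0))
# Theoretical minimum SNR to carry 271 kbit/s in 200 kHz
print(min_snr_db(271e3, 200e3))
```

These are Shannon-limit figures; any real modem needs more SNR than `min_snr_db` reports.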


Other Important Results

Channel-coding theorem
  Given
    A channel capacity C (bits/s)
    A channel bit rate less than the channel capacity
  Then
    There exists a coding scheme that achieves an arbitrarily low BER
Rate distortion theory: sampling and data compression losses are exempt from the channel-coding theorem


Concept of Entropy

Definition: average information content per symbol
Importance
  Fundamental limit on the average number of bits per source symbol
  Channel-coding theorem is stated in terms of entropy


Equation for Entropy

  S: source alphabet set
  L: average number of bits per symbol
  K: number of symbols in the alphabet
  p_k: probability of symbol k in the message
$$H(S) = \sum_{k=0}^{K-1} p_k \log_2\left(\frac{1}{p_k}\right) \qquad \text{(entropy)}$$
$$\eta = \frac{H(S)}{L} \qquad \text{(coding efficiency)}$$
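Both formulas take only a few lines. The four-symbol source and its codeword lengths below are hypothetical; for this source (all probabilities powers of 1/2) the variable-length prefix code reaches η = 1, while a fixed 2-bit code does not.

```python
import math

def entropy_bits(probs):
    """H(S) = sum_k p_k * log2(1 / p_k) in bits per symbol; p_k = 0 terms contribute nothing."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def coding_efficiency(probs, code_lengths):
    """eta = H(S) / L, where L = sum_k p_k * (length of codeword k)."""
    avg_len = sum(p * n for p, n in zip(probs, code_lengths))
    return entropy_bits(probs) / avg_len

p = [0.5, 0.25, 0.125, 0.125]                 # hypothetical source
print(entropy_bits(p))                         # 1.75 bits/symbol
print(coding_efficiency(p, [1, 2, 3, 3]))      # variable-length prefix code: 1.0
print(coding_efficiency(p, [2, 2, 2, 2]))      # fixed 2-bit code: 0.875
```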


Study Problems and Reading Assignments

Reading assignments
  Read Section 4.6, Cyclic Redundancy Checks
  Read Section 4.7, Error-Control Coding
Study examples
  Example 4.1, page 197
  Problem 4.1, page 197


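Problem 2.4, p. 28

4 GHz microwave link
Towers 100 m and 50 m tall, 3 km apart
Midway between them, a tower 70 m tall
Radius of the first Fresnel zone, eq. (2.38), p. 27
$$r_1 = \sqrt{\frac{\lambda d_1 d_2}{d_1 + d_2}}$$
Distances d1 = d2 = 1.5 km
$$r_1 = \sqrt{\frac{0.075\,\text{m} \times (1500\,\text{m})^2}{3000\,\text{m}}} = 7.5\,\text{m}$$
Raise both towers: the line of sight passes 75 m - 70 m = 5 m above the middle tower, so both towers need about 7.5 m - 5 m = 2.5 m more height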
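The clearance check can be scripted as below. It assumes a flat earth (no earth-bulge correction), full first-Fresnel-zone clearance as the criterion, and c = 3 × 10^8 m/s; `fresnel_radius` is an illustrative name.

```python
import math

C = 3.0e8  # speed of light, m/s (assumed)

def fresnel_radius(wavelength, d1, d2):
    """First Fresnel-zone radius r1 = sqrt(lambda * d1 * d2 / (d1 + d2)), eq. (2.38)."""
    return math.sqrt(wavelength * d1 * d2 / (d1 + d2))

wavelength = C / 4e9                      # 4 GHz -> 0.075 m
r1 = fresnel_radius(wavelength, 1500.0, 1500.0)
los_height = (100.0 + 50.0) / 2           # straight line-of-sight height midway (flat earth)
clearance = los_height - 70.0             # space above the 70 m middle tower
raise_by = max(0.0, r1 - clearance)       # extra height needed on both towers
print(f"r1 = {r1:.2f} m, clearance = {clearance:.1f} m, raise both towers {raise_by:.2f} m")
```

Raising both towers by the same amount raises the midpoint of the line of sight by that amount, which is why a single number suffices here.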


Problem 2.5, p. 29

Similar to 2.4, but the LOS is clearly obstructed
Fresnel-Kirchhoff diffraction parameter, eq. (2.39), is
$$v = h\sqrt{\frac{2(d_1 + d_2)}{\lambda d_1 d_2}} = 3.465$$
Diffraction loss is 24 dB
For 400 MHz, v = 1.096, loss = 16 dB
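Figure 2.10 gives the loss graphically. As a cross-check, this sketch uses the ITU-R P.526 single knife-edge approximation J(v) = 6.9 + 20 log10(√((v − 0.1)² + 1) + v − 0.1) dB, a substitute for reading the chart rather than the text's own method. At v = 3.465 it gives about 23.6 dB, in line with the 24 dB above; at v = 1.096 it gives about 14.5 dB, somewhat below the 16 dB chart reading, so treat either figure as approximate.

```python
import math

def diffraction_v(h, wavelength, d1, d2):
    """Fresnel-Kirchhoff parameter v = h * sqrt(2 (d1 + d2) / (lambda d1 d2)), eq. (2.39)."""
    return h * math.sqrt(2.0 * (d1 + d2) / (wavelength * d1 * d2))

def knife_edge_loss_db(v):
    """ITU-R P.526 approximation to single knife-edge loss in dB (valid for v > -0.78).

    Below that range this sketch treats the loss as negligible."""
    if v <= -0.78:
        return 0.0
    return 6.9 + 20.0 * math.log10(math.sqrt((v - 0.1) ** 2 + 1.0) + v - 0.1)

# v scales as 1/sqrt(lambda): going from 4 GHz to 400 MHz divides v by sqrt(10)
v_4ghz = 3.465
v_400mhz = v_4ghz / math.sqrt(10.0)
for v in (v_4ghz, v_400mhz):
    print(f"v = {v:.3f}: loss about {knife_edge_loss_db(v):.1f} dB")
```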


Term Projects

Areas for coverage
  Propagation and noise
    Free space
    Urban
  Modulation & FDMA
  Coding
  Demodulation and detection
Will deploy over Blackboard this week


Term Project Timeline

First week
  Parse and report your understanding
  Give estimated parameters, including the SystemView system clock rate
Second week
  Block out SystemView
    Signal generator
    Modulator
Through mid-April
  Flesh out as class topics are presented
Due date TBD

EE320 Digital Telecommunications

Quiz 1 Report

February 21, 2005


The Curve

[Chart: Quiz 1 scores, 0 to 100, for students 1 to 20]

The Answers

Questions 1, 4: see previous lectures/slides
Questions 2, 3, 5: see Excel spreadsheets
See Mathcad spreadsheet: Fresnel integrals for diffraction loss

Question 2

Name           Wavelength    Ae, Ex. 2.2 p. 16   Gain, Eq. (2.9) p. 16   Gain, dB
ABREFA-KODOM   0.06          3.534291735         12337.0055              40.91209758
APEHAYA        0.06122449    3.773838175         12651.5224              41.02142788
BARAKAT        0.0625        4.021238597         12936.2879              41.11809672
BIRCH          0.063829787   4.276493            13190.1835              41.20250836
BOADO          0.065217391   4.539601384         13412.2221              41.27500738
CARDONE        0.066666667   4.810563751         13601.5486              41.33588357
DO             0.068181818   5.089380099         13757.439               41.38537595
GEDZAH         0.069767442   5.376050428         13879.3012              41.423676
MADJAR         0.071428571   5.67057474          13966.6746              41.45093014
P H NGUYEN     0.073170732   5.972953033         14019.2302              41.46724168
T T NGUYEN     0.075         6.283185307         14036.7707              41.47267206
T H NGUYEN     0.076923077   6.601271563         14019.2302              41.46724168
PANG           0.078947368   6.927211801         13966.6746              41.45093014
PATEL          0.081081081   7.261006021         13879.3012              41.423676
ROIDAD         0.083333333   7.602654222         13757.439               41.38537595
SCHOLL         0.085714286   7.952156404         13601.5486              41.33588357
STRAKER        0.088235294   8.309512569         13412.2221              41.27500738
TANUI          0.090909091   8.674722715         13190.1835              41.20250836
TRAN           0.09375       9.047786842         12936.2879              41.11809672
ZAYZAY         0.096774194   9.428704952         12651.5224              41.02142788

Question 3

Name           i    Wavel 1    Wavel 2    Part I, Eq. (2.36) p. 27   h   Nu or v, Eq. (2.39) p. 28   Loss, Fig. 2.10 p. 29   Nu or v, 2nd freq   2nd Loss
ABREFA-KODOM   0    0.06       0.6        7.745966692                3   0.547722558                 -10.639                 0.173205            -7.501
APEHAYA        1    0.059761   0.59761    7.73052108                 3   0.548816909                 -10.64753               0.173551            -7.503895
BARAKAT        2    0.059524   0.595238   7.715167498                3   0.549909083                 -10.65605               0.173897            -7.506789
BIRCH          3    0.059289   0.592885   7.699905035                3   0.550999093                 -10.66458               0.174241            -7.509684
BOADO          4    0.059055   0.590551   7.684732794                3   0.55208695                  -10.67311               0.174585            -7.512579
CARDONE        5    0.058824   0.588235   7.669649888                3   0.553172667                 -10.68163               0.174929            -7.515474
DO             6    0.058594   0.585938   7.654655446                3   0.554256258                 -10.69016               0.175271            -7.518368
GEDZAH         7    0.058366   0.583658   7.639748605                3   0.555337735                 -10.69868               0.175613            -7.521263
MADJAR         8    0.05814    0.581395   7.624928517                3   0.55641711                  -10.70721               0.175955            -7.524158
P H NGUYEN     9    0.057915   0.579151   7.610194341                3   0.557494395                 -10.71574               0.176295            -7.527053
T T NGUYEN     10   0.057692   0.576923   7.595545253                3   0.558569602                 -10.72426               0.176635            -7.529947
T H NGUYEN     11   0.057471   0.574713   7.580980436                3   0.559642743                 -10.73279               0.176975            -7.532842
PANG           12   0.057252   0.572519   7.566499085                3   0.560713831                 -10.74132               0.177313            -7.535737
PATEL          13   0.057034   0.570342   7.552100405                3   0.561782876                 -10.74984               0.177651            -7.538632
ROIDAD         14   0.056818   0.568182   7.537783614                3   0.562849891                 -10.75837               0.177989            -7.541526
SCHOLL         15   0.056604   0.566038   7.523547939                3   0.563914887                 -10.76689               0.178326            -7.544421
STRAKER        16   0.056391   0.56391    7.509392615                3   0.564977876                 -10.77542               0.178662            -7.547316
TANUI          17   0.05618    0.561798   7.49531689                 3   0.566038868                 -10.78395               0.178997            -7.550211
TRAN           18   0.05597    0.559701   7.481320021                3   0.567097875                 -10.79247               0.179332            -7.553105
ZAYZAY         19   0.055762   0.557621   7.467401274                3   0.568154908                 -10.801                 0.179666            -7.556

Question 5

Name           BER        Qinv(BER)    Eb/N0, dB     Rayleigh SNR   Rayleigh SNR, dB
ABREFA-KODOM   0.000316   3.41734328   7.663472171   789.8195       28.97528
APEHAYA        0.000251   3.47953449   7.820122959   994.518        29.97613
BARAKAT        0.0002     3.54076432   7.971640442   1252.218       30.9768
BIRCH          0.000158   3.60107467   8.118342568   1576.643       31.97733
BOADO          0.000126   3.66050453   8.26051902    1985.071       32.97776
CARDONE        0.0001     3.71909027   8.398434441   2499.25        33.9781
DO             7.94E-05   3.77686584   8.532331217   3146.564       34.97837
GEDZAH         6.31E-05   3.83386302   8.66243188    3961.483       35.97858
MADJAR         5.01E-05   3.89011158   8.788941206   4987.406       36.97875
P H NGUYEN     3.98E-05   3.94563947   8.912048035   6278.966       37.97888
T T NGUYEN     3.16E-05   4.00047298   9.031926873   7904.944       38.97899
T J NGUYEN     2.51E-05   4.05463684   9.148739293   9951.929       39.97907
PANG           2E-05      4.10815439   9.262635176   12528.93       40.97914
PATEL          1.58E-05   4.16104764   9.373753798   15773.18       41.97919
ROIDAD         1.26E-05   4.2133374    9.482224805   19857.46       42.97924
SCHOLL         0.00001    4.26504337   9.588169073   24999.25       43.97927
STRAKER        7.94E-06   4.31618421   9.691699474   31472.39       44.9793
TANUI          6.31E-06   4.36677762   9.792921563   39621.58       45.97932
TRAN           5.01E-06   4.41684041   9.891934194   49880.81       46.97933
ZAYZAY         3.98E-06   4.46638855   9.988830071   62796.41       47.97935

The Quiz in the Text (1 of 2)

Question 1
  Text, pp. 3-5
  Lectures and slides, several times
Question 2
  Antenna gain equations (2.2), (2.3), p. 14
  Also equation (2.9) and Example 2.2, pp. 16-17
Question 3
  Section 2.3.2 and Example 2.3, pp. 24-29
  Two lectures, example worked in class, practice quiz


The Quiz in the Text (2 of 2)

Question 4
  Problem 3.30, p. 177
  Given in class
  The answer was to give the equation that was given in the problem statement
Question 5
  Problem 3.36, given in class
  Use Table 3.4 and Figure 3.33, pp. 159-161


EE320 Convolutional Codes

James K Beard, Ph.D.


Bonus Topic: Gray Codes

Sometimes called reflected codes
Defining property: only one bit changes between sequential codes
Conversion
  Binary to Gray
    Work from the LSB up
    XOR bits j and j+1 of the binary code to get bit j of the Gray code
    The bit past the MSB of the binary code is 0
  Gray to binary
    Work from the MSB down
    XOR bit j+1 of the binary code and bit j of the Gray code to get bit j of the binary code
    The bit past the MSB of the binary code is 0
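Both conversion rules reduce to shifts and XORs on integers. A minimal sketch, assuming nonnegative integers hold the codes:

```python
def binary_to_gray(b: int) -> int:
    """Bit j of the Gray code = bit j XOR bit j+1 of the binary code (bit past MSB is 0)."""
    return b ^ (b >> 1)

def gray_to_binary(g: int) -> int:
    """Working down from the MSB, binary bit j = binary bit j+1 XOR Gray bit j.

    Accumulating g ^ (g >> 1) ^ (g >> 2) ^ ... carries out that running XOR."""
    b = 0
    while g:
        b ^= g
        g >>= 1
    return b

for n in range(8):
    print(f"{n:03b} -> {binary_to_gray(n):03b}")
```

The printed table shows the reflected structure: the lower half of the 3-bit Gray sequence is the upper half mirrored, with the MSB set.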


Polynomials Modulo 2

Definition
  Coefficients are ones and zeros
  Values of the independent variable are one or zero
  The result of a computation is taken modulo 2: a one or zero
The theory
  Well developed to support many DSP applications
  Mathematical theory includes finite fields and other areas


Base Concept: Signal Polynomial

Pose data as a bit stream
Characterize the data as the impulse response of a filter with weights 1 and 0
Characterize it as a z transform
Substitute D for 1/z in the transfer function
Example
  Signal 11011001
  Filter is $1 + z^{-1} + z^{-3} + z^{-4} + z^{-7}$
  Signal polynomial is $1 + D + D^3 + D^4 + D^7$
Signal and filter polynomials provide a base method for understanding convolutional codes


Base Concept: Modulo-2 Convolutions

Scenario
  Bit stream into a convolution filter
  Filter weights are ones and zeros
  Output is taken modulo 2, i.e., a 1 or 0
Result: a modulo-2 convolution converts one bit stream into another


Benefits of Concept

Convolution is a product of polynomials
  Conventional multiplication of polynomials is isomorphic to convolution of the sequences of their coefficients
  Taking the resulting coefficients modulo 2 gives the output of a bit stream fed into a convolution filter with output modulo 2
These special polynomials have a highly developed mathematical basis
Implementation in hardware and software is very simple
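The isomorphism can be checked in a few lines: multiply coefficient lists as in ordinary polynomial multiplication, but add with XOR. The sketch uses the Example 4.1 message and path polynomials; the function names are illustrative.

```python
def gf2_poly_mul(a, b):
    """Multiply two polynomials with 0/1 coefficients, reducing each coefficient mod 2.

    a[i] is the coefficient of D**i.  The double loop is an ordinary
    convolution of the coefficient sequences; XOR is addition modulo 2."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        if ai:
            for j, bj in enumerate(b):
                out[i + j] ^= bj
    return out

def as_bits(poly):
    return "".join(str(c) for c in poly)

m = [1, 0, 0, 1, 1]                           # 10011 -> 1 + D^3 + D^4
print(as_bits(gf2_poly_mul(m, [1, 0, 1])))    # times 1 + D^2: 1011111
print(as_bits(gf2_poly_mul(m, [1, 1, 1])))    # times 1 + D + D^2: 1111001
```

Because coefficients cancel in pairs, (1 + D)² = 1 + D² here, one of the identities that makes the algebra of these codes simple.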


Error-Control Coding

Two categories of channel coding
  Forward error correction (EDAC)
  Automatic repeat request (handshake)
CRC codes
  Hash codes of the message
  Error detection, but not correction
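A CRC check word is the remainder of a modulo-2 polynomial division. This sketch uses an illustrative 3-bit generator D³ + D + 1 (written 1011, highest power first); standardized CRCs use longer generators and often add initial register values or bit reflection, none of which is modeled here.

```python
def crc_remainder(bits, generator):
    """Remainder of bits(D) * D**r divided by generator(D), modulo 2.

    bits and generator are 0/1 lists, highest power first;
    r = len(generator) - 1 is the number of check bits."""
    r = len(generator) - 1
    work = list(bits) + [0] * r              # multiply by D**r
    for i in range(len(bits)):
        if work[i]:                          # leading term present: subtract (XOR) generator
            for j, gbit in enumerate(generator):
                work[i + j] ^= gbit
    return work[-r:]

def crc_check(codeword, generator):
    """True when the received codeword (message + check bits) is divisible by the generator."""
    r = len(generator) - 1
    work = list(codeword)
    for i in range(len(codeword) - r):
        if work[i]:
            for j, gbit in enumerate(generator):
                work[i + j] ^= gbit
    return not any(work[-r:])

message = [1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0]
generator = [1, 0, 1, 1]                     # D^3 + D + 1 (illustrative)
check = crc_remainder(message, generator)
print(check, crc_check(message + check, generator))
```

Flipping any single bit of `message + check` makes `crc_check` return False, but the receiver learns only that an error occurred, not where: detection without correction.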


Topics in Convolutional Codes

The node diagram is a block diagram
Polynomial representations
  Represent signals, convolutions, and special polynomials and polynomial operations
  Give us a simple way to understand and analyze convolutions
Trellis diagrams
  Give us a mechanism to represent convolution operations as a finite state machine
  Provide a first step in visualization of the finite state machine
Node diagrams
  Provide a simple visualization of the finite state machine
  Provide a basis for very simple implementation


Convolutional Code Steps

Reduce the message to a bit stream
Operate using modulo-2 convolutions
  Convolution filter with a short binary mask
  Take the result modulo 2
Implemented with one-bit shift registers with a multiplexer (see Figure 4.6, p. 196)
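The shift-register-plus-multiplexer structure can be sketched as follows. The tap convention (tap k weights the input delayed by k clocks) and the function names are assumptions for illustration. Listing 1 + D + D² ahead of 1 + D² reproduces the output pairs (11, 10, 11, 11, 01, 01, 11) shown for Example 4.1; swapping the order swaps the two bits within each pair.

```python
def conv_encode(message, generators, flush=True):
    """Rate-1/n convolutional encoder built from a one-bit shift register.

    generators: tap lists; tap[k] weights the input delayed by k clocks,
    so [1, 0, 1] is 1 + D^2.  For each input bit the multiplexer emits one
    output bit per generator, in the order the generators are listed.
    flush=True appends K - 1 tail zeros to clear the register."""
    k = max(len(g) for g in generators)
    register = [0] * k                    # register[0] holds the newest bit
    stream = list(message) + ([0] * (k - 1) if flush else [])
    out = []
    for bit in stream:
        register = [bit] + register[:-1]  # clock the shift register
        for g in generators:              # multiplexer: one bit per path
            out.append(sum(t & r for t, r in zip(g, register)) % 2)
    return out

def pairs(bits):
    """Format a rate-1/2 output stream as comma-separated bit pairs."""
    return ",".join(f"{bits[i]}{bits[i + 1]}" for i in range(0, len(bits), 2))

# Example 4.1 message 10011, with 1 + D + D^2 listed before 1 + D^2
print(pairs(conv_encode([1, 0, 0, 1, 1], [[1, 1, 1], [1, 0, 1]])))   # 11,10,11,11,01,01,11
```

With `flush=False` and an all-ones tail, the same function also reproduces the Problem 4.6 and 4.7 streams worked later in these slides.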


Example 4.1, Page 197

[Figure: two-path encoder with two 1/z delays; Input, Path 1, Path 2, and multiplexed Output. Haykin & Moher, Figure 4.6, p. 196]


Example 4.1 (1 of 4)

Response of Path 1
$$g^{(1)}(D) = 1 + D^2$$
Response of Path 2
$$g^{(2)}(D) = 1 + D + D^2$$
Multiplex the outputs bit by bit
  One side's output, then the other
  Produces a longer bit stream


Example 4.1 (2 of 4)

Signal
  Message bit stream (10011)
  Message as a polynomial
$$m(D) = 1 + D^3 + D^4$$
Multiply the message polynomial by the Path 1 and Path 2 filter polynomials
Obtain two bit streams from the resulting polynomials
Multiplex (interleave) the results


Example 4.1 (3 of 4)

Polynomial multiplication results
$$c^{(1)}(D) = (1 + D^2)(1 + D^3 + D^4) = 1 + D^2 + D^3 + D^4 + D^5 + D^6$$
$$c^{(2)}(D) = (1 + D + D^2)(1 + D^3 + D^4) = 1 + D + D^2 + D^3 + D^6$$
Messages
  Path 1 (1011111)
  Path 2 (1111001)
Multiplexing them, with the Path 2 bit first in each pair: (11, 10, 11, 11, 01, 01, 11)


Example 4.1 (4 of 4)

Length of the coded message is
  Twice (the order of the product polynomials + 1)
  2 × (length of message + length of shift register - 1) = 2 × (5 + 3 - 1) = 2 × 7 = 14
Shift registers have memory
  The simplest way to clear them is to feed zeros
  The number of clocks is the number of stages
  Zeros between message words are tail zeros


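Problem 4.1

Signal and polynomial 1 (modulo-2 convolution)
$$c^{(1)} = (1,0,0,1,1) \ast (1,0,1) = (1,0,1,1,1,1,1)$$
Signal and polynomial 2
$$c^{(2)} = (1,0,0,1,1) \ast (1,1,1) = (1,1,1,1,0,0,1)$$
Result, with the c^(2) bit first in each pair
$$c = (11, 10, 11, 11, 01, 01, 11)$$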


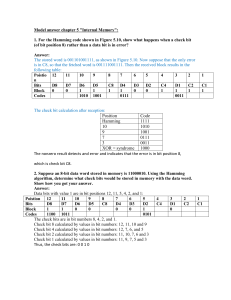

Modulo 2 Convolution Diagrams

[Figure: step-by-step alignment of the polynomials 101 and 111 against the signal 10011; the successive results are 1011111 for 101 and 1111001 for 111]

Trellis and State Diagrams

Trellis diagram, Figure 4.7, p. 198, and state table 4.2, p. 199
  Horizontal position of a node represents time
  Top line represents the input
  Each row represents a state of the two-path encoder, a finite state machine
Trace paths produced by input 1's and 0's
  Paths produced by 0's are solid
  Paths produced by 1's are dotted
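The trellis can be generated mechanically by enumerating every state and input bit. This sketch uses the conventional register-state definition (the K − 1 = 2 previous input bits, giving the four states that appear as nodes in the state diagram), lists the 1 + D + D² output first, and assumes the same tap convention as a shift-register encoder; the function name is illustrative.

```python
def state_table(generators):
    """Rows (state, input, next_state, outputs) for a feedforward rate-1/n encoder.

    A state is the K - 1 previous input bits, newest first; there are
    2**(K-1) of them.  Outputs follow the order of the generators."""
    k = len(generators[0])
    rows = []
    for s in range(2 ** (k - 1)):
        state = tuple((s >> (k - 2 - i)) & 1 for i in range(k - 1))
        for bit in (0, 1):
            register = (bit,) + state
            outputs = tuple(sum(t & r for t, r in zip(g, register)) % 2
                            for g in generators)
            rows.append((state, bit, register[:-1], outputs))
    return rows

for state, bit, nxt, outputs in state_table([[1, 1, 1], [1, 0, 1]]):
    style = "dotted" if bit else "solid"
    print(f"{state} --{bit} / {outputs}--> {nxt} ({style})")
```

Each printed line is one branch of the trellis between adjacent time steps; drawing all eight once per time step gives the full diagram.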


Advantages of Trellis and State Diagrams

Once drawn, the output for any message is simple to obtain
Allowed and non-allowed state transitions are explicit
State diagram follows directly
  Figure 4.8, p. 200
  Shows state transitions and their causes
  Coding the output of the state diagram is simpler than that of the trellis diagram


States of the Filter

We need the output states
  Ordered pair of bits from Path 1 and Path 2
  Objective is tracing through states to get outputs
Output states are not the same as register states
  Only four states can be defined from two outputs
  Total number of states is defined by the order of the convolution
  Current example is three taps
  Number of states is 2^order = 2^3 = 8
  We get to eight states by considering each pair of consecutive input bits


Drawing the Trellis Diagram

Begin with a state table
For each path's "state"
  For the last bit 0
    Draw the solid path for a 0
    Draw the dotted path for a 1
  For the last bit 1
    Draw the solid path for a 0
    Draw the dotted path for a 1
State table
  State    Paths 1, 2
  0 0 0    0 0
  1 0 1    0 0
  0 1 0    0 1
  1 1 1    0 1
  0 1 1    1 0
  1 1 0    1 0
  0 0 1    1 1
  1 0 0    1 1
This is 16 lines total


For Output State [0,0]

For the last bit 0

Adding a zero, solid path label is (0,0)

Adding a one, dotted path label is (0,1)

From {0,0,0} to {0,0,0}

Next state is [0,0]

From {0,0,0} to {1,0,0}

Next state is [1,1]

For the last bit 1

Adding a zero, solid path label is (1,0)

From {1,0,1} to {0,1,0}

Next state is [1,0]

Adding a one, dotted path label is (1,1)

From {1,0,1} to {1,1,0}

Next state is [0,1]


For Output State [0,1]

For the last bit 0

Adding a zero, solid path label is (0,0)

Adding a one, dotted path label is (0,1)

From {0,1,1} to {0,0,1}

Next state is [1,1]

From {0,1,1} to {1,0,1}

Next state is [0,0]

For the last bit 1

Adding a zero, solid path label is (1,0)

From {1,1,0} to {0,1,1}

Next state is [0,1]

Adding a one, dotted path label is (1,1)

From {1,1,0} to {1,1,1}

Next state is [1,0]


For Output State [1,0]

For the last bit 0

Adding a zero, solid path label is (0,0)

Adding a one, dotted path label is (0,1)

From {0,1,0} to {0,0,1}

Next state is [1,1]

From {0,1,0} to {1,0,1}

Next state is [0,0]

For the last bit 1

Adding a zero, solid path label is (1,0)

From {1,1,1} to {0,1,1}

Next state is [0,1]

Adding a one, dotted path label is (1,1)

From {1,1,1} to {1,1,1}

Next state is [1,0]


For Output State [1,1]

For the last bit 0

Adding a zero, solid path label is (0,0)

Adding a one, dotted path label is (0,1)

From {0,0,1} to {0,0,0}

Next state is [0,0]

From {0,0,1} to {1,0,0}

Next state is [1,1]

For the last bit 1

Adding a zero, solid path label is (1,0)

From {1,0,0} to {0,1,0}

Next state is [1,0]

Adding a one, dotted path label is (1,1)

From {1,0,0} to {1,1,0}

Next state is [0,1]


Drawn Trellis Diagram

[Figure: trellis diagram with branches labeled by the output pairs 0,0 / 0,1 / 1,0 / 1,1]

State Diagram

[Figure: state diagram with states [00], [01], [10], [11]; transitions labeled by the output pairs 0,0 / 0,1 / 1,0 / 1,1]

EE320 Decoding Convolutional Codes

James K Beard, Ph.D.


Where We Are Going

Exploit the channel-coding theorem
  For any required channel bit rate C_R less than the channel capacity C,
$$C_R < C = B \log_2\left(1 + \mathrm{SNR}\right)$$
  a coding exists that achieves an arbitrarily low BER
Method is error-correcting codes


Hamming Weight and Distance

Hamming weight
  A property of a single code word
  Equal to the number of 1's
Hamming distance
  Based on two code words
  Equal to the number of 1's in their XOR
Used in the definition of error correction
  An ECC makes the Hamming distance between characters > 2
  Overhead is the increase in required bit rate
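The definitions translate directly into code. The three-fold repetition code used below is a hypothetical example, not one from the text; its distance of 3 (> 2) is what lets it correct one bit error.

```python
from itertools import combinations

def hamming_weight(word):
    """Number of 1's in a code word (a sequence of 0/1)."""
    return sum(word)

def hamming_distance(a, b):
    """Number of 1's in the bitwise XOR of two equal-length code words."""
    if len(a) != len(b):
        raise ValueError("code words must have the same length")
    return hamming_weight([x ^ y for x, y in zip(a, b)])

def min_distance(codebook):
    """Smallest Hamming distance between any two distinct code words."""
    return min(hamming_distance(a, b) for a, b in combinations(codebook, 2))

# Hypothetical code: each 1-bit character repeated three times
code = [(0, 0, 0), (1, 1, 1)]
d = min_distance(code)
print(d, (d - 1) // 2)   # 3 1: distance 3 corrects one bit error
```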


Hamming Distance and Error Correction

Code error-correction capability
  Upper bound is half the Hamming distance between code vectors: at most ⌊(dfree - 1)/2⌋ errors, just under dfree/2
  The length extension due to convolutional codes can allow a larger Hamming distance between input code vectors
  NOTE: Gray codes are contrived to have a Hamming distance of 1 between adjacent characters
The constraint length K
  Is equal to the number of convolution delays plus 1
  Bounds the error-correction capability of two-convolution codes
  Table 4.3, p. 201
  Our example has K = 3 and dfree = 5, and can theoretically correct 2 bits
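The value dfree = 5 can be confirmed by brute force: for a linear code the distance between two code words is the weight of their XOR, itself a code word, so dfree is the smallest weight produced by any nonzero message. This sketch encodes every nonzero message up to `max_len` bits (an assumed search limit) with zero termination; being exhaustive, it only suits small K, and the names are illustrative.

```python
from itertools import product

def encode(message, generators):
    """Zero-terminated rate-1/n convolutional encoder; tap[k] weights the input delayed k clocks."""
    k = max(len(g) for g in generators)
    register = [0] * k
    out = []
    for bit in list(message) + [0] * (k - 1):
        register = [bit] + register[:-1]
        out.extend(sum(t & r for t, r in zip(g, register)) % 2 for g in generators)
    return out

def free_distance(generators, max_len=10):
    """Minimum code-word weight over all nonzero messages of up to max_len bits."""
    best = None
    for length in range(1, max_len + 1):
        for msg in product((0, 1), repeat=length):
            if msg[0] == 0:
                continue          # a leading zero only delays an existing code word
            w = sum(encode(msg, generators))
            best = w if best is None else min(best, w)
    return best

d_free = free_distance([[1, 1, 1], [1, 0, 1]])   # Example 4.1 code
print(d_free, (d_free - 1) // 2)                 # 5 2
```

The same search gives 3 for the systematic K = 2 code of Problem 4.6 and 6 for the K = 4 code of Problem 4.7, matching the corresponding Table 4.3 entries.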


Haykin & Moher Table 4.3, Page 201

Maximum Free Distance Attainable for Rate 1/2
Constraint Length K   Systematic Codes   Non-Systematic Codes
2                     3                  3
3                     4                  5
4                     4                  6
5                     5                  7
6                     6                  8
7                     6                  10
8                     7 (Note 1)         10
9                     Not Available      12
NOTE: (1) From example polynomials 400, 671 in a nonrecursive code


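Fundamentals of Maximum Likelihood: Multivariate PDF

Consider N Gaussian random variables z_i with mean zero and variance one,
$$p(z_i) = \frac{1}{\sqrt{2\pi}}\exp\left(-\frac{z_i^2}{2}\right), \qquad p_z(\mathbf{z}) = \frac{1}{(2\pi)^{N/2}}\exp\left(-\frac{1}{2}\mathbf{z}^T\mathbf{z}\right)$$
The covariance of z is the identity matrix I
$$E\left[\mathbf{z}\mathbf{z}^T\right] = \left[E\{z_i z_j\}\right] = \left[\delta_{i,j}\right] = \mathbf{I}$$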


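Identities and Definitions

Determinant of product and inverse
$$|\mathbf{A}\mathbf{B}| = |\mathbf{A}|\,|\mathbf{B}|, \qquad \left|\mathbf{A}^{-1}\right| = \frac{1}{|\mathbf{A}|}$$
Differential
$$d\mathbf{x} = dx_1\,dx_2 \cdots dx_N$$
Gradient
$$\frac{\partial \mathbf{y}}{\partial \mathbf{x}} = \left[\frac{\partial y_i}{\partial x_j}\right]$$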


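Variable Change to Correlated Variables

Consider the variable change
$$\mathbf{x} = \mathbf{A}\mathbf{z}$$
The pdf of x is found from the differential and the Jacobian determinant
$$p_z(\mathbf{z})\,d\mathbf{z} = p_z\left(\mathbf{A}^{-1}\mathbf{x}\right)\left|\frac{\partial \mathbf{z}}{\partial \mathbf{x}}\right| d\mathbf{x} = p_z\left(\mathbf{A}^{-1}\mathbf{x}\right)|\mathbf{A}|^{-1}\,d\mathbf{x} = p_x(\mathbf{x})\,d\mathbf{x}$$
The covariance R of x is
$$\mathbf{R} = E\left[\mathbf{x}\mathbf{x}^T\right] = \mathbf{A}\,E\left[\mathbf{z}\mathbf{z}^T\right]\mathbf{A}^T = \mathbf{A}\mathbf{A}^T$$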


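PDF of Correlated Variables

The pdf of x is
$$p_x(\mathbf{x}) = \frac{p_z(\mathbf{z})}{|\mathbf{A}|} = \frac{1}{(2\pi)^{N/2}\,|\mathbf{R}|^{1/2}}\exp\left(-\frac{1}{2}\mathbf{x}^T\mathbf{R}^{-1}\mathbf{x}\right)$$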


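With a Mean…

The pdf of a Gaussian vector x of N elements with covariance R and mean a is
$$p_x(\mathbf{x}) = \frac{1}{(2\pi)^{N/2}\,|\mathbf{R}|^{1/2}}\exp\left(-\frac{1}{2}(\mathbf{x}-\mathbf{a})^T\mathbf{R}^{-1}(\mathbf{x}-\mathbf{a})\right)$$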


Maximum Likelihood Estimators

Principle
  Given the pdf of a data vector y for a given set of parameters x, p(y|x)
  Find the set of parameters x̂ that maximizes this pdf for the given set of measurements y
Properties
  If an efficient estimator (one that attains the minimum possible variance) exists, this method will produce it
  If not, the variance will approach the theoretical minimum, the Cramér-Rao bound, as the amount of relevant data increases


Observations on Maximum Likelihood

All known minimum-variance estimators can be derived using the method of maximum likelihood; examples include
  Mean as the average of samples
  Proportion in the general population as the proportion in a sample
Statistics and error bounds on estimators are found as part of the derivation
The method is simple to use


Our Example

Given a message vector m, its code vector c, and a received vector r
Make an estimate m̂ of the message vector
Process
  From the noisy c received through the channel, estimate ĉ
  Select the message m̂ whose code vector c̃ has the shortest Hamming distance to ĉ
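For a binary symmetric channel with bit error probability below 1/2, choosing the code vector nearest in Hamming distance is the maximum-likelihood decision. A brute-force sketch for the Example 4.1 code, assuming zero-terminated encoding with 1 + D + D² listed first; the names are illustrative, and trying all 2^L messages is only practical for short messages.

```python
from itertools import product

GENERATORS = [[1, 1, 1], [1, 0, 1]]   # Example 4.1 code, 1 + D + D^2 listed first

def encode(message, generators=GENERATORS):
    """Zero-terminated rate-1/n convolutional encoder (tap[k] weights the input delayed k clocks)."""
    k = max(len(g) for g in generators)
    register = [0] * k
    out = []
    for bit in list(message) + [0] * (k - 1):
        register = [bit] + register[:-1]
        out.extend(sum(t & r for t, r in zip(g, register)) % 2 for g in generators)
    return out

def ml_decode(received, msg_len, generators=GENERATORS):
    """Return (message, distance) for the code vector nearest to received in Hamming distance."""
    best_msg, best_dist = None, None
    for msg in product((0, 1), repeat=msg_len):
        dist = sum(x ^ y for x, y in zip(encode(msg, generators), received))
        if best_dist is None or dist < best_dist:
            best_msg, best_dist = list(msg), dist
    return best_msg, best_dist

sent = encode([1, 0, 0, 1, 1])
received = sent[:]
received[0] ^= 1          # two channel bit errors
received[7] ^= 1
print(ml_decode(received, 5))   # ([1, 0, 0, 1, 1], 2)
```

Two errors are within the ⌊(5 − 1)/2⌋ = 2 correction capability, so the transmitted message is the unique nearest one.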


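The MLE for c

Data
$$\mathbf{y} = \mathbf{c} + \mathbf{n}$$
Log-likelihood function
$$\ln p(\mathbf{y} \mid \mathbf{c}) = -\frac{1}{2}\left[N\ln(2\pi) + \ln|\mathbf{R}| + (\mathbf{y}-\mathbf{c})^T\mathbf{R}^{-1}(\mathbf{y}-\mathbf{c})\right]$$
Solution is the nearest neighbor
$$\hat{\mathbf{c}} = \mathbf{y}$$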


Assignment

Read 4.7, 4.8, 4.10, 4.11, 4.16

Do problem 4.1 p. 197

Do problem 4.2 p. 198

Do encoding in your term project


Curve for Backup Quiz

[Chart: backup quiz scores, 0 to 100, for students 1 to 20]

Assignment

Read 5.2, 5.3, 5.5

Look at Problem 4.5 p. 243

Do problems 4.6, 4.7 p. 252


Interleaving and TDMA

The Viterbi method
Interleaving
  Scatters the code data stream
  Makes low-BER communication more robust through fading media and interference
Noise performance
TDMA


Viterbi Algorithm References

Viterbi, A. J., "Error bounds for convolutional codes and an asymptotically optimum decoding algorithm," IEEE Trans. Inform. Theory, Vol. IT-13, pp. 260-269, 1967.
Forney, G. D., Jr., "The Viterbi algorithm," Proceedings of the IEEE, Vol. 61, pp. 268-278, 1973.


The Viterbi Method

Use the trellis for decoding
Dynamic programming
  A method originally developed for a control theory problem by Richard Bellman
  Based on working the problem from the end back to the beginning
Uses the Hamming distance as the optimization criterion
Crops the growing decision tree by taking the steps backward in time a few at a time
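A compact hard-decision Viterbi sketch follows. It steps forward through the trellis and, at each state, keeps only the survivor path with the smallest accumulated Hamming distance (add, compare, select); the answer is read from the all-zero end state of the zero-terminated code. This is the dynamic-programming principle described above in its usual forward form. For simplicity it stores whole survivor paths rather than the fixed-depth traceback used in practical decoders; the tap convention and names are assumptions.

```python
def viterbi_decode(received, generators, msg_len):
    """Hard-decision Viterbi decoding of a zero-terminated rate-1/n convolutional code.

    received: list of 0/1 channel bits, n per trellis step.
    generators: tap lists (tap[k] weights the input delayed k clocks).
    Returns the decoded message bits and the Hamming distance of the best path."""
    n = len(generators)
    k = len(generators[0])
    n_states = 2 ** (k - 1)
    INF = float("inf")

    def branch(state, bit):
        # The state holds the K-1 previous inputs, newest in the most significant bit.
        register = [bit] + [(state >> (k - 2 - i)) & 1 for i in range(k - 1)]
        out = [sum(t & r for t, r in zip(g, register)) % 2 for g in generators]
        return (bit << (k - 2)) | (state >> 1), out

    metric = [0] + [INF] * (n_states - 1)    # the encoder starts in state 0
    survivor = [[] for _ in range(n_states)]
    for t in range(len(received) // n):
        r = received[t * n:(t + 1) * n]
        inputs = (0, 1) if t < msg_len else (0,)   # only tail zeros after the message
        new_metric = [INF] * n_states
        new_survivor = [[] for _ in range(n_states)]
        for s in range(n_states):
            if metric[s] == INF:
                continue
            for bit in inputs:
                nxt, out = branch(s, bit)
                m = metric[s] + sum(a ^ b for a, b in zip(out, r))   # add branch metric
                if m < new_metric[nxt]:                               # compare and select
                    new_metric[nxt] = m
                    new_survivor[nxt] = survivor[s] + [bit]
        metric, survivor = new_metric, new_survivor
    return survivor[0][:msg_len], metric[0]   # terminated code ends in state 0

# Example 4.1 code word for 10011 (1 + D + D^2 listed first) with two bit errors
received = [1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1]
print(viterbi_decode(received, [[1, 1, 1], [1, 0, 1]], 5))   # ([1, 0, 0, 1, 1], 2)
```

The work per step is proportional to the number of states, 2^(K−1), rather than to the 2^L messages a brute-force search must try.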


Interleaving

Based on the coherence time of a fading channel; fading due to motion leads to
$$T_{\text{COHERENCE}} \approx \frac{0.3}{2 f_D}, \qquad f_D = \frac{\text{maximum range rate}}{\lambda}$$
Interleaving operations
  Block data over times much larger than T_COHERENCE
  Reformat in smaller blocks
  Multiplex the smaller blocks into an interleaved data stream


Interleaver Parameters

Object of interleaving
  Data blackouts of T_COHERENCE cause the loss of fewer than dfree bits
  FEC EDAC coding can bridge these gaps
Make each row
  Consist of at least dfree bits
  Last T_COHERENCE or longer
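A block interleaver is one simple way to realize these operations. In this sketch (sizes hypothetical), data are written row by row and sent column by column, so a fade that wipes out up to `rows` consecutive channel bits leaves at most one error in each row after de-interleaving.

```python
def interleave(bits, rows, cols):
    """Block interleaver: write the data row by row, read it out column by column."""
    if len(bits) != rows * cols:
        raise ValueError("need exactly rows * cols bits")
    return [bits[r * cols + c] for c in range(cols) for r in range(rows)]

def deinterleave(bits, rows, cols):
    """Inverse operation at the receiver: write column by column, read row by row."""
    if len(bits) != rows * cols:
        raise ValueError("need exactly rows * cols bits")
    return [bits[c * rows + r] for r in range(rows) for c in range(cols)]

rows, cols = 4, 6                      # hypothetical sizes
data = [0] * (rows * cols)
sent = interleave(data, rows, cols)
for i in range(8, 8 + rows):           # a fade hits `rows` consecutive channel bits
    sent[i] ^= 1
hits = [i for i, b in enumerate(deinterleave(sent, rows, cols)) if b]
print(hits, sorted({i // cols for i in hits}))   # [2, 8, 14, 20] [0, 1, 2, 3]
```

The burst is spread into isolated single-bit errors spaced `cols` apart, which a code with adequate dfree can then correct.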


Interleaver Methods

Methods
  Simple sequential interleaving as just described
  Pseudorandom interleaving
Combine with codes
  Use a Viterbi interleaver
  Use the multiplexer of convolutional codes as an interleaver


Noise Performance

Compare AWGN channels using Figure 4.13, p. 213
Rayleigh fading performance is given in Figure 4.14, p. 214


Turbo Codes

Revolutionary methodology
  Emerged from 1993 through 1995
  Performance approaches the Shannon limit
Technique
  Encoding blocked out in Figure 4.15, p. 215
  Two systematic codes in parallel, one interleaved
  Excess parity bits trimmed or culled
  Decoding shown in Figure 4.17, p. 217
  Performance shown in Figure 4.18, p. 219


Next Time

TDMA
Chapter 4 examples
Quiz postponed one week to March 30
  Will cover Chapter 4
Some topics in CDMA


TDMA

Time-Division Multiple Access (TDMA)
  Multiplexes several users into one channel
  Alternative to FDMA
  A third alternative is CDMA, presented next
Advantages over FDMA
  Simultaneous transmit and receive aren't required
  Single-frequency operation for transmit and receive
  Can be combined with interleaving
  Can be overlaid on FDMA, CDMA


Types of TDMA

Wideband
  Used in links such as satellite communications
  Frequency channels several MHz wide
Medium band
  Global System for Mobile (GSM) telecommunications
  Several broadband links
Narrow band
  TIA/EIA/IS-54-C standard in use for US cell phones
  Single-frequency-channel TDMA


Advantages of TDMA Overlaying FDMA

Cooperative channel allocation between base stations with overlapping coverage (channel-busy avoidance)
Dropouts in some channels from frequency-dependent fading can be avoided
Equalization can mitigate frequency-dependent fading in medium- and broadband TDMA/FDMA


Global System for Mobile (GSM)

Internationally used TDMA/FDMA
From Haykin & Moher 4.17, pp. 236-239
  Overview given here
  Full description available on the WWW
  http://ccnga.uwaterloo.ca/~jscouria/GSM/gsmreport.html
Organization
  Time blocks
    Major frames are 60/13 = 4.615 milliseconds
    Eight time slots of 577 microseconds in each major frame
    156.25 bits per time slot; 271 kbps data rate
  Frequency channels
    124 channels 200 kHz wide, 200 kHz apart
    Frequency hopping over a maximum of 25 MHz


GSM Characteristics

Frequency allocation (Europe)
  Uplink (to base station) 890 MHz to 915 MHz
  Downlink (to handsets) 935 MHz to 960 MHz
Design features counter frequency-selective fading
  Channel separation matches fading notch width
  Frame length matches fading duration
  EDAC combined with multilayer interleaving
  Intrinsic latency is 57.5 milliseconds


Subframe Organization

Guard period of 8.25 bits begins the frame
Three tail bits end the guard and time slots
Three data blocks
  57 bits of data
  26 bits of "training data"
  57 bits of data
Flag bit precedes training and the second data block
  Defines speech vs. digital or training data
Overall efficiency about 75% data
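The timing and burst figures on these slides are mutually consistent, as a quick tally shows. The field counts follow this slide (two flag bits, two groups of three tail bits); the sketch checks sizes only, not the order of fields within the burst. It gives about 73% data, in line with "about 75%".

```python
# GSM timing and burst budget using the figures on these slides
frame_s = 60e-3 / 13            # major (TDMA) frame, about 4.615 ms
slot_s = frame_s / 8            # eight time slots per frame, about 577 us
bits_per_slot = 156.25
bit_rate = bits_per_slot / slot_s

burst = {"tail": 3 + 3, "data": 57 + 57, "flags": 1 + 1,
         "training": 26, "guard": 8.25}
total = sum(burst.values())
payload_fraction = burst["data"] / total

print(f"frame {frame_s * 1e3:.3f} ms, slot {slot_s * 1e6:.1f} us, "
      f"rate {bit_rate / 1e3:.2f} kbit/s")
print(f"burst total {total} bits, data fraction {payload_fraction:.1%}")
```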


GSM Coding

Complex speech coder/decoder (CODEC)
Concatenated convolutional codes
Multilayer interleaving
GMSK channel modulation
  About 40 dB adjacent channel rejection
  ISI effects are small
An international standard that defines affordable enabling technologies


Coherence Time Examples

Mobile terminal moving at 30 km/hr (19 mph)
  Frequency allocation about 1.9 GHz
    Problem 4.4, p. 210, answer is 9.6 ms (!!!)
    Coherence time about 2.84 ms
  Frequency of about 900 MHz
    European GSM allocation
    Coherence time of about 6 ms
At 900 MHz, a velocity of 39 km/hr (24 mph) gives a coherence time equal to the 4.62 ms frame time


Problems 4.6, 4.7

Message is 10111… (1's continue)
Codes are (see p. 253)
  Problem 4.6: (11)(10)
  Problem 4.7: (1111)(1101)
Find the output code stream by the polynomial method


Solution for Problem 4.6

Solution is simple enough to do by inspection: (11, 10, 11, 01, 01, 01, …)
Feed-through path embeds the signal in the code
  This makes the code systematic


Solution for Problem 4.7

Message polynomial is (the 1's continue)
$$m(x) = 1 + x^2 + x^3 + x^4 + \cdots$$
Generator polynomials are
$$g^{(1)}(x) = 1 + x + x^2 + x^3$$
$$g^{(2)}(x) = 1 + x + x^3$$


Solution for Problem 4.7 (concluded)

Code polynomials (modulo 2) are
$$c^{(1)}(x) = g^{(1)}(x)\,m(x) = 1 + x + x^3 + x^4$$
$$c^{(2)}(x) = g^{(2)}(x)\,m(x) = 1 + x + x^2 + x^3 + x^5 + x^6 + \cdots$$
Code bits are
  c^(1): 1, 1, 0, 1, 1, 0, 0, 0, …
  c^(2): 1, 1, 1, 1, 0, 1, 1, 1, …
Code output is
  11, 11, 01, 11, 10, 01, 01, …


Simulation of Problem 4.7 (1 of 2)

program main !Execute a convolution code
  implicit none
  !Generator taps stored in reverse order, so g(k) multiplies the newest bit
  integer,dimension(4)::g1=(/1,1,1,1/),g2=(/1,0,1,1/) !Reverse order
  !Three leading zeros (k-1) fill the shift register before the message 10111...
  integer,dimension(23)::message=(/0,0,0,1,0,1,1,1,1,1,1,1,1, &
       1,1,1,1,1,1,1,1,1,1/)
  integer::i,k=4
  do i=1,10
     print 1000,i,convolve(k,message,g1,i),convolve(k,message,g2,i)
  end do
1000 format(i3,": (",i2,",",i2,")")
contains
…

Simulation of Problem 4.7 (2 of 2)

  integer function convolve(k,m,g,i) !Convolve m(i:i+k-1) with g(1:k)
    integer,intent(IN)::k,m(*),g(*),i
    integer::j,sumc !j is local, so the caller's loop variables are untouched
    !Perform an ordinary convolution and take the result modulo 2
    sumc=0
    do j=1,k
       sumc=sumc+m(i+j-1)*g(j)
    end do
    convolve=modulo(sumc,2)
  end function convolve
end program main


Simulation Output

  1: ( 1, 1)
  2: ( 1, 1)
  3: ( 0, 1)
  4: ( 1, 1)
  5: ( 1, 0)
  6: ( 0, 1)
  7: ( 0, 1)
  8: ( 0, 1)
  9: ( 0, 1)
 10: ( 0, 1)

A few minutes with Fortran 95
Your choice of language will do.


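Interleaving and Coherence Time

Problem 4.14, page 254
Coherence time
$$T_{\text{COHERENCE}} \approx \frac{0.3}{2 f_D} = \frac{0.15\,\lambda}{\text{vehicle speed}}$$
$$\text{BitLossBlockLength} \approx T_{\text{COHERENCE}} \cdot \text{BitRate} = \frac{0.15\,\lambda \cdot \text{BitRate}}{\text{vehicle speed}}$$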
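The coherence-time rule and the bit-loss block length can be scripted as below. The sketch assumes c = 3 × 10^8 m/s and the 0.3/(2 f_D) rule used on these slides; it reproduces the 2.84 ms, 6 ms, and 4.62 ms figures from the Coherence Time Examples slide. Any bit rate passed in (for example GSM's 270.833 kbit/s) is only an illustration.

```python
C = 3.0e8   # speed of light, m/s (assumed)

def coherence_time_s(speed_kmh, freq_hz):
    """T_COHERENCE ~ 0.3 / (2 f_D) = 0.15 * lambda / speed, with f_D = speed / lambda."""
    wavelength = C / freq_hz
    f_doppler = (speed_kmh / 3.6) / wavelength   # maximum Doppler shift, Hz
    return 0.3 / (2.0 * f_doppler)

def bit_loss_block_length(speed_kmh, freq_hz, bit_rate):
    """Number of bits spanned by one coherence time: T_COHERENCE * BitRate."""
    return coherence_time_s(speed_kmh, freq_hz) * bit_rate

for speed, freq in ((30, 1.9e9), (30, 900e6), (39, 900e6)):
    t_ms = coherence_time_s(speed, freq) * 1e3
    print(f"{speed} km/hr, {freq / 1e6:.0f} MHz: {t_ms:.2f} ms")
```

At 30 km/hr and 900 MHz, a 270.833 kbit/s stream loses roughly 1625 consecutive bits per fade of one coherence time, which sets the scale the interleaver must span.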


Discussion Questions

One-channel TDMA
  What about both transmitting and receiving on the same frequency channel?
  Is it a good idea? Why?
What are the advantages and disadvantages of systematic and nonsystematic codes?


Assignment

Read 5.2, 5.3, 5.5

Look at problem 5.1 p. 262

Do problem 5.2 p. 263

Next time
  Spreading the spectrum
  Gold codes
  Galois fields for binary sequences
