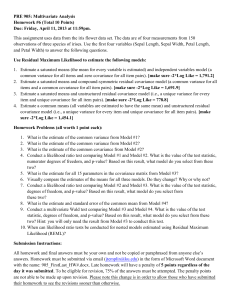

Opinion Detection by Transfer Learning
11-742 Information Retrieval Lab
Grace Hui Yang
Advised by Prof. Yiming Yang

Outline
• Introduction
• The Problem
• Transfer Learning by Constructing Informative Prior
• Datasets
• Evaluation Method
• Experimental Results
• Conclusion

Introduction
• TREC 2006 Blog Track
  – Opinion Detection Task

<num> Number: 851
<title> "March of the Penguins"
<desc> Description: Provide opinion of the film documentary "March of the Penguins".
<narr> Narrative: Relevant documents should include opinions concerning the film documentary "March of the Penguins". Articles or comments about penguins outside the context of this film documentary are not relevant.

Opinion Detection Literature Review
• Researchers in the Natural Language Processing (NLP) community
  – Turney (2002): groups online words by how close their pointwise mutual information is to "excellent" and "poor"
  – Riloff & Wiebe (2003): use a high-precision classifier to get high-quality opinions and non-opinions, then extract syntactic patterns; repeat this process to bootstrap
  – Pang et al. (2002): treat opinion and sentiment detection as a text classification problem
    • Naive Bayes, Maximum Entropy, SVM + unigram presence (82.9%)
  – Pang & Lee (2005): use minimum cuts to cluster sentences based on their subjectivity and sentiment orientation
• Researchers from the data mining community
  – Morinaga et al. (2002): use word polarity and syntactic pattern-matching rules to extract opinions, and PCA to create a correspondence between product names and keywords

Existing System
• Query Expansion
• Document Retrieval
• Binary Text Classification by Bayesian Logistic Regression

No Available Training Data
• Transfer Learning
  – Transfer knowledge across similar tasks in different domains
  – Generalize knowledge from limited training data
  – Discover underlying general structures across domains

Transfer Learning Literature Review
• Baxter (1997) and Thrun (1996): both used hierarchical Bayesian learning
• Lawrence and Platt (2004), Yu et al. (2005): also use hierarchical Bayesian models to learn the hyperparameters of Gaussian processes
• Ando and Zhang (2005): proposed a framework for Gaussian logistic regression for text classification
• Raina et al. (2006): continued this approach and built informative priors for Gaussian logistic regression

Transfer Learning
• The approach presented in this project is inspired by the work of Raina, Ng & Koller (2006) on text classification
• Transfer common knowledge (word dependence) across similar tasks by constructing an informative prior in a Bayesian logistic regression framework

Logistic Regression Framework
• Logistic regression assumes a sigmoid-like data distribution
• To avoid overfitting, a multivariate Gaussian prior is added on θ
• Maximum a posteriori (MAP) estimation

Non-diagonal Covariance
• Zero-mean, equal-variance prior
  – Cannot capture relationships among words
• Zero-mean, non-diagonal covariance prior
  – Models word dependency in the covariance matrix's off-diagonal entries

Pair-wise Covariance
• Covariance definition: Cov(θi, θj) = E[(θi − E[θi])(θj − E[θj])]
• Given zero mean, Cov(θi, θj) = E[θi θj]

Get Covariance by MCMC
• Markov Chain Monte Carlo (MCMC)
• Sample V (V=4) small vocabularies of size S (S=5), each containing the two words wi and wj corresponding to θi and θj
• From each vocabulary, sample T (T=4) training sets of size Z (Z=3) and train an ordinary logistic regression model on each labeled dataset

Get Covariance by MCMC (continued)
• Subtract a bootstrap estimate of the covariance that is due to the randomness of training set changes

Learning a Covariance Matrix
• Learning a single covariance for pairs of regression coefficients is NOT all we need
• Two challenges:
(1) Valid covariance matrix
  – A valid covariance matrix needs to be positive semi-definite (PSD)
  – Hermitian matrix (square, self-adjoint) with nonnegative eigenvalues
  – Project the matrix onto the PSD cone

Learning a Covariance Matrix (continued)
(2) Pair-wise calculations increase the complexity quadratically with vocabulary size
  – Represent the word dependence as a linear combination of underlying features
  – Learn the coefficients by least squared error

Learning a Covariance Matrix by Joint Minimization
• λ is the trade-off coefficient between the two objectives
  – As λ → 0, only care about the PSD cone
  – As λ → 1, only care about the word-pair relationship
  – Set to 0.6

Solve the Joint Minimization
• Convex problem, converges to the global minimum
• Fix Σ, minimize over ψ
  – Use a quadratic program (QP) solver
• Fix ψ, minimize over Σ
  – A special semi-definite program (SDP)
  – Eigendecomposition, keeping the nonnegative eigenvalues

Feature Design
• Model word dependency
  – WordNet synset
  – and?
• People do not always use the same general syntactic patterns to express opinion
  – "blah blah is good",
  – "awesome blah blah!"
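
The PSD projection step from the "Learning a Covariance Matrix" and "Solve the Joint Minimization" slides (eigendecomposition, keeping the nonnegative eigenvalues) can be sketched as follows. This is a minimal illustration rather than the project's code: the function name `project_to_psd` and the 3×3 matrix of raw pairwise estimates are made up for the example, and NumPy is an assumed dependency.

```python
import numpy as np

def project_to_psd(matrix):
    """Project a square matrix onto the PSD cone (nearest in Frobenius norm)."""
    # Pairwise estimates may be slightly asymmetric, so symmetrize first.
    sym = (matrix + matrix.T) / 2.0
    # eigh is the eigendecomposition routine for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(sym)
    # Keep the nonnegative eigenvalues and zero out the negative ones.
    clipped = np.clip(eigvals, 0.0, None)
    # Rebuild the matrix as V * diag(clipped) * V^T.
    return (eigvecs * clipped) @ eigvecs.T

# Hypothetical raw pairwise covariances: each entry is plausible on its own,
# but together they do not form a valid covariance matrix.
raw = np.array([[1.0, 0.9, -0.8],
                [0.9, 1.0, 0.9],
                [-0.8, 0.9, 1.0]])

sigma = project_to_psd(raw)
print("smallest eigenvalue before:", np.linalg.eigvalsh(raw).min())
print("smallest eigenvalue after: ", np.linalg.eigvalsh(sigma).min())
```

Estimating each pair independently gives no guarantee that the assembled matrix is a valid covariance, which is why the projection is needed before the matrix can serve as the prior Σ.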
Target-Opinion Word Pair
• Different opinion targets relate to different customary expressions
  – A person is knowledgeable
  – A computer processor is fast
  – A computer processor is knowledgeable (ill)
  – A person is fast (ill)
  – A computer processor is running like a horse (word polarity test fails)

Target-Opinion Word Pair (continued)
• From the training corpus, extract from a positive example
  – subject and object (excluding pronouns)
    • "Melvin, pig"
  – subject and BE-predicate
    • "lens, clear", "base, heavy"
  – modifier and subject
    • "good, coffee", "interesting, movie"

Word Synonym
• Bridge the vocabulary gap from training to testing
  – "This movie is good" in the training corpus
  – "The film is really good" in the testing corpus

Feature Vector
• Components: log co-occurrence, target-opinion pair, synonym

Datasets
• Training Corpus
  – Movie reviews [Pang & Lee from Cornell]
    • 10,000 sentences (5,000 opinions, 5,000 non-opinions)
  – Product reviews [Hu & Liu from UIC]
    • 4,000+ sentences (2,034 opinions, 2,173 non-opinions)
    • Digital camera, cell phone, DVD player, Jukebox, …

Datasets (continued)
• Test Corpus
  – TREC 2006 Blog corpus
  – 3,201,002 articles (TREC reports 3,215,171)
  – December 2005 to February 2006
  – Technorati, Bloglines, Blogpulse …
• For each topic, 5,000 passages are retrieved
  – Using Lemur as the search engine
  – 132,399 passages in total
  – 2,648 passages per topic on average
  – Each passage has 1-10 sentences (fewer than 100 words)

Evaluation Method
• Precision at 11-point recall levels
• Mean average precision (MAP)
• Answers are provided by TREC qrels
  – Document ids of documents containing an opinion
• Note that our system is developed for opinion detection at the sentence level
  – A retrieved passage is scored by averaging the scores of all its sentences
  – Extract unique document ids to compare with TREC qrels

Experimental Results
• Effects of Using Non-diagonal Prior Covariance
  – Baseline: use movie reviews to train the Gaussian logistic regression model with prior ~N(0, σ²)
  – Feature Selection: use the word features common to movie reviews and product reviews to train the Gaussian logistic regression model with prior ~N(0, σ²)
  – Informative Prior: use movie reviews to calculate the prior covariance, then train the Gaussian logistic regression model with the informative prior ~N(0, Σ)
• 32% improvement

Experimental Results (continued)
• Effects of Feature Design
  – Baseline: use movie reviews to train the Gaussian logistic regression model with prior ~N(0, σ²), bi-gram model
  – Transfer Learning Using Synonyms: informative prior ~N(0, Σ)
  – Transfer Learning Using Target-Opinion Pairs: informative prior ~N(0, Σ)
  – Transfer Learning Using Both: informative prior ~N(0, Σ)
• A good feature

Experimental Results (continued)
• Effects of External Dataset Selection
• Negative effect of transfer learning

Why Does the Negative Effect Occur?
• Movie reviews cover more general topics
• Product reviews share only 23% of the topics

Conclusion
• Applying transfer learning to opinion detection
• Transfer learning by informative prior improves on brute-force transfer learning by 32%
• Discovering a good feature for opinion detection
  – Target-Opinion pair
• Need to be careful when choosing external datasets to help

Thank You!
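
As a supplementary note on the two priors compared in the Experimental Results slides, here is a minimal sketch of MAP estimation for logistic regression under a zero-mean Gaussian prior N(0, Σ). It is an illustration under stated assumptions, not the system used in the experiments: the function `fit_map_logreg`, the three-word vocabulary ("good", "great", "camera"), the toy data, the 0.8 covariance, and the plain gradient-descent optimizer are all hypothetical choices made for the example.

```python
import numpy as np

def fit_map_logreg(X, y, prior_cov, steps=2000, lr=0.1):
    """MAP estimate of theta for labels y in {0, 1} with theta ~ N(0, prior_cov)."""
    precision = np.linalg.inv(prior_cov)
    theta = np.zeros(X.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-X @ theta))
        # Gradient of the negative log posterior:
        # data term X^T (p - y) plus prior term Sigma^{-1} theta.
        grad = X.T @ (p - y) + precision @ theta
        theta -= lr * grad / len(y)
    return theta

# Hypothetical vocabulary: column 0 = "good", 1 = "great", 2 = "camera".
# "great" never occurs in this toy training set.
X = np.array([[1.0, 0.0, 1.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0],
              [0.0, 0.0, 0.0]])
y = np.array([1.0, 1.0, 0.0, 0.0])  # 1 = opinion, 0 = non-opinion

# Equal-variance diagonal prior, N(0, sigma^2 I) with sigma^2 = 1.
theta_diag = fit_map_logreg(X, y, np.eye(3))

# Informative prior: assumed positive covariance between "good" and "great".
informative_cov = np.array([[1.0, 0.8, 0.0],
                            [0.8, 1.0, 0.0],
                            [0.0, 0.0, 1.0]])
theta_inf = fit_map_logreg(X, y, informative_cov)

print("diagonal prior:   ", theta_diag)
print("informative prior:", theta_inf)
```

Under the diagonal prior the weight for the unseen word "great" stays at zero, while the off-diagonal entry of the informative prior pulls it toward the weight learned for "good". This is the kind of word dependence the covariance matrix is meant to transfer.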