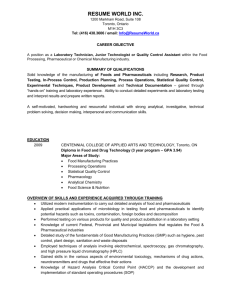

Reed Castle

CLEAR Pre-Conference Workshop
Testing Essentials
• Job Analysis: Reed A. Castle, PhD
• Item Writing: Steven S. Nettles, EdD
• Test Development: Julia M. Leahy, PhD
• Standard Setting: Paul D. Naylor, PhD
• Scaling/Scoring: Lauren J. Wood, PhD, LP
5 topics, 20 minutes and 20 minutes Q&A
Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003

Job Analysis
Reed A. Castle, Ph.D.
Schroeder Measurement Technologies, Inc.

What is a Job Analysis?
• An investigation of the ability requirements that go with a particular job (Credentialing Exam Context).
• It is the study that helps establish a link between test scores and the content of the profession.

The Joint Technical Standards, Standard 14.14
“The content domain to be covered by a credentialing test should be defined clearly and justified in terms of importance of the content for the credential-worthy performance in an occupation or profession. A rationale should be provided to support a claim that the knowledge or skills being assessed are required for credential-worthy performance in an occupation and are consistent with the purpose for which the licensing or certification program was instituted.”

Why Conduct a Job Analysis?
• Need to establish a validity link.
• Need to articulate a rationale for examination content.
• Need to reduce the threat of legal challenges.
• Need to determine what is relatively important practice.
• Need to understand the profession before we assess it.

Types of Job Analyses
• Focus Group
• Traditional Survey-Based
• Electronic Survey-Based
• Transportability

Focus Group
Need to identify the best group of SMEs possible
• Areas of Practice
• Geographic representation
• Demographically Balanced
• 8 to 12 SMEs

Focus Group: Prior to Meeting
• Comprehensive review of profession
  • Job Descriptions
  • Performance Appraisals
  • Curriculum
  • Other job-related documents
• Create a Master Task List
• Send list to SMEs prior to meeting to give them a chance to review

Focus Group: At Meeting
• Review Comprehensive Task List
• Determine which tasks are important
• Determine which tasks are performed with an appropriate level of frequency
• Determine which tasks are duplicative
• Identify and add missing tasks
• Organize into coherent outline

Focus Group: Advantages
• May be only solution for new/emerging professions
• Relatively quick
• Less expensive

Focus Group: Disadvantages
• Based on one group (results may not generalize)
• May be considered a weaker model when considering validation.
• May result in complaints from constituents about the content of the test.

Traditional Survey-Based
• First steps are similar to the focus group (i.e., task list is generated in same manner)
• After the task list is created, three more issues must be addressed to complete the first survey development meeting.

Traditional Survey-Based
First, demographic questions must be developed with two goals in mind.
• Questions should help describe the sample of respondents
• Some questions will be used in analyses to help generalize across groups (e.g., geographic regions)

Traditional Survey-Based
Second, rating scale(s) should be developed.
• Minimally, two pieces of information should be collected
  • Importance or significance
  • Frequency of performance
• Additional scales can be added but may take away from response rate.
• Shorter is sometimes better.

Traditional Survey-Based
Sample scale combining Importance and Frequency
• High correlation between Frequency and Importance ratings (.95 and higher)
Considering both the importance and frequency, how important is this task in relation to the safe, effective, and competent performance of a Testing Professional? If you believe the task is never performed by a Testing Professional, please select the 'Not performed' rating.
0 = Not performed
1 = Minimal importance
2 = Below average or low importance
3 = Average or medium importance
4 = Above average or high importance
5 = Extreme or critical importance

Traditional Survey-Based: Sampling
• One of the more important considerations is the sampling model employed.
• Surveys should be distributed to a sample that is reflective of the entire population.
• Demographic questions help describe the sample.
• One should anticipate a low response rate (20%) when planning for an appropriate number of responses.

Traditional Survey-Based: Mailing Surveys
• Enclose a postage paid return envelope.
• Plan well in advance for international mailings (can be logistically painful with different countries).
• When bulk mailed, plan extra time.
• Keep daily track of return volume.
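
The response-rate point on the Sampling slide implies a simple planning calculation. The sketch below is only an illustration: the function name `surveys_to_send` and the target of 400 usable responses are assumptions, and the 20% default is the planning figure quoted on the slide, not a guarantee.

```python
def surveys_to_send(target_responses, response_rate_pct=20):
    """Surveys to distribute so that the expected number of returns
    reaches target_responses (hypothetical helper, not from the slides).

    response_rate_pct is a whole-number percentage; integer arithmetic
    avoids floating-point surprises when rounding up.
    """
    if target_responses < 0:
        raise ValueError("target_responses must be non-negative")
    if not 0 < response_rate_pct <= 100:
        raise ValueError("response_rate_pct must be in 1..100")
    # Ceiling division: round up to a whole survey.
    return (target_responses * 100 + response_rate_pct - 1) // response_rate_pct


# Assumed target of 400 responses at the 20% rate from the slide.
print(surveys_to_send(400))  # 2000
```

If the sample is split across demographic groups, the same calculation could be run per group so that each subgroup analysis has enough responses.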

Electronic Survey-Based
• Identical to traditional, but delivery and return are different.
• Need Email addresses.
• Need profession with ready access to Internet.

Electronic Survey-Based: Advantages
• Faster response time.
• Data entry is no longer needed.
• Reduced processing time on R & D side.
• Possibly less expense (less admin costs).
• Can modify sampling and survey on the fly if needed
• Sample can be the population with little additional cost.

Electronic Survey-Based: Disadvantages
• Need Email addresses
• High rate of “bounce-back”
• Control for ballot stuffing
• Data compatibility

Transportability
• Using the results of other job analyses
• Determine compatibility or transportability
• Similar to Focus Group

Four Types Review
• Focus Group
• Traditional Survey-Based
• Electronic Survey-Based
• Transportability

Data
• Demographics
• Importance Ratings
• Frequency Ratings
• Composite
• Sub group Analyses
• Decision Rules
• Reliability
• Raters
• Instrument
• Survey Adequacy

Primary Demographics
• Geographic Region
• Years Experience
• Work Setting
• Position Role/Function
• Percent Time in certain activities

Mean Importance Ratings (3.0 criterion)
Task     Mean   Decision
Task 6   2.45   Out
Task 4   2.97   Out
Task 1   3.21   In
Task 5   3.85   In
Task 3   3.91   In
Task 7   4.25   In
Task 2   4.28   In

% Not Performed Ratings, Criterion 25% (75% perform)
Task     % NP   Decision
Task 6   38%    Out
Task 4   29%    Out
Task 1   26%    Out
Task 5   16%    In
Task 2   10%    In
Task 3   5%     In
Task 7   3%     In

Composite Ratings
• Composite ratings, using natural logs of the ratings (when multiple scales are used), can be calculated and combined based on some weighting scheme.
• For example, if you want to weight frequency 33.33% and importance 66.66%, you can adjust for this in the composite rating equation.
• Personal opinion is that you will likely end up in a very similar place if establishing decision criteria on each scale individually.
• In addition, using multiple decision rules is more conservative.

Mean Importance Sub-group Analyses
Task     Africa  Asia   Australia  Europe  N. America  S. America  <=3.0
Task 1   3.22    3.12   3.01       2.96    3.21        3.18        1
Task 2   4.21    4.08   3.85       3.84    4.51        4.38        0
Task 3   3.91    3.87   3.78       3.75    3.48        3.25        0
Task 4   2.95    2.99   3.03       3.1     2.91        2.89        4
Task 5   3.88    3.82   3.84       3.89    3.78        3.48        0
Task 6   2.41    2.85   2.14       2.47    2.85        2.35        6
Task 7   4.22    4.09   3.85       3.84    4.47        4.25        0

Assessment Type
SMEs are asked to determine which assessment type will best measure a given task
• Multiple choice
• Performance
• Essay/short answer

Cognitive Levels
• Each task on the content outline requires some level of cognition to perform
• 3 basic levels exist (from Bloom’s Taxonomy)
  • Knowledge/Recall
  • Application
  • Analysis
• Steve will discuss in next presentation

Cognitive Levels
• For the tasks remaining after the inclusion decision criteria are applied, SMEs are asked to rate them on a 3-point scale
• For each major content area, an average rating is calculated
• The average is applied to specific criteria to determine the number of items by cognitive level for each content area

Weighting
• Weighting is usually done with SMEs based on some type of data
• For example, average importance or composite rating for a given content area
• Applied to assessment type and cognitive levels.

Test Specifications/Weights
• Standard Exclusion/Inclusion criteria
• Test Specifications
• Assessment type/Cognitive levels
• Weights based on rational approach
• Reflect test-type
• Statistical
• Consensus

Item Writing
Steven S. Nettles, EdD
Applied Measurement Professionals, Inc.

Overview of Measurement
• Job Analysis
• Test Specifications
• Detailed Content Outline
• Item Writing
• Examination Development
• Standard Setting
• Administration and Scoring

Test Specifications & Detailed Content Outline
• Developed based on the judgment of an advisory committee as they interpreted job analysis results from many respondents.
• Guides item writing and examination development.
• Provides information to candidates.
• Required!

Item Writing Workshop Goals
• appropriate item content and cognitive complexity
• consistent style and format
• efficient examination committee work

A test item
• measures one unit of content.
• contains a stimulus (the question).
• prescribes a particular response form.
The response allows an inference about candidates’ abilities on the specific bit of content. When items are linked to job content, summing all correct item responses allows broader inferences about candidates’ abilities to do a job.
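
Looking back at the job analysis data slides earlier in the workshop, the two decision rules (the 3.0 mean-importance criterion and the 25% not-performed criterion) and the idea of weighting by average importance can be sketched in a few lines. The task values are the ones shown on the example slides; treating both criteria as inclusive boundaries, applying both rules jointly, and computing weights per task rather than per content area are assumptions made only for illustration.

```python
# Values transcribed from the example slides (tasks 1-7).
mean_importance = {1: 3.21, 2: 4.28, 3: 3.91, 4: 2.97, 5: 3.85, 6: 2.45, 7: 4.25}
pct_not_performed = {1: 26, 2: 10, 3: 5, 4: 29, 5: 16, 6: 38, 7: 3}

IMPORTANCE_CRITERION = 3.0     # keep tasks rated at or above this mean (assumed inclusive)
NOT_PERFORMED_CRITERION = 25   # keep tasks with at most this % "not performed" (assumed inclusive)


def retained_tasks(importance, not_performed):
    """Tasks that pass both decision rules (the more conservative approach)."""
    return sorted(
        task
        for task in importance
        if importance[task] >= IMPORTANCE_CRITERION
        and not_performed[task] <= NOT_PERFORMED_CRITERION
    )


def importance_weights(tasks, importance):
    """Percentage weight of each retained task, proportional to mean importance."""
    total = sum(importance[task] for task in tasks)
    return {task: 100 * importance[task] / total for task in tasks}


kept = retained_tasks(mean_importance, pct_not_performed)
weights = importance_weights(kept, mean_importance)
print("Retained tasks:", kept)
for task in kept:
    print(f"Task {task}: {weights[task]:.1f}% of the examination")
```

In practice the slides describe weighting as something SMEs settle by consensus with data like this in front of them, so the computed percentages would be a starting point for discussion rather than the final test specifications.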

Preparing to Write
• Each item must be linked to the prescribed
  • part of the Detailed Content Outline.
  • cognitive level (optional).
• Write multiple-choice items.
  • Three options better for similar ability groups.
  • Five options better for diverse groups.

Why multiple choice?
• Dichotomous (right/wrong) scoring encourages measurement precision.
• Validity is strongly supported because each item measures one specific bit of content.
• Many items sample the entire content area.
• The flexible format allows measurement of a variety of objectives.
• Examinees cannot bluff their way to receiving credit (although they can correctly guess). We will talk more about minimizing effective guessing.

Item components include
• stem.
• three to four options.
  • one key
  • two to three distractors.

Item Components: Stem
• The statement or question to which candidates respond.
• The stem can also include a chart, table, or graphic.
• The stem should clearly present one problem or idea.

Example Stems
• Direct question: Which of the following best describes the primary purpose of the Code of Federal Regulations?
• Incomplete statement: The primary purpose of the CFR includes
New writers tend to write clearer direct questions. If you are new to item writing, it may be best to concentrate on that type.

Among the options will be
• the key
  • With a positively worded stem, the key is the best or most appropriate of the available stem responses.
  • With a negatively worded stem, the key is the least appropriate or worst of the available stem responses.
  • Negatively written items are not encouraged!
• distractors: plausible yet incorrect responses to the stem

Cognitive levels
• Recall
• Application
• Analysis
Cognitive levels are designated because we recognize that varying dimensions of the job require varying levels of cognition. By linking items to cognitive levels, a test better represents the job, i.e., is more job-related.

Cognitive levels
Recall items
• use an effort of rote memorization.
• are NEVER situationally dependent.
• have options that frequently start with nouns.

Recall item
Which of the following beers is brewed in St. John’s?
A. Labatt
B. Molson
C. Moosehead

Cognitive levels
Application items
• use interpretation, classification, translation, or recognition of elements and relationships
• have keys that depend on the situation presented in the stem
  • Any item involving manipulations of formulas, no matter how simple, is application level.
  • Items using graphics or data tables will be at least at the application level.
  • If the key would be correct in any situation, then the item is probably just a dressed up recall item.
• have options that frequently start with verbs.

Application item
Which of the following is the best approach when trout-fishing in the Canadian Rockies?
A. Use a fly fishing system with a small insect lure.
B. Use a spinning system with a medium Mepps lure.
C. Use a bait casting system with a large nightcrawler.

Cognitive levels
Analysis items
• use information synthesis, problem solving, and evaluation of the best response.
• require candidates to find the problem from clues and act toward resolution.
• have options that frequently start with verbs.

Analysis item
Total parenteral nutrition (TPN) is initiated in a nondiabetic patient at a rate of 42 ml/hour. On the second day of therapy, serum and urine electrolytes are normal, urine glucose level is 3% and urine output exceeds parenteral intake. Which of the following is the MOST likely cause of these findings?
A. The patient has developed an acute glucose tolerance.
B. The patient’s renal threshold for glucose has been exceeded.
C. The patient is now a Type 2 diabetic requiring supplemental insulin.

Other Item Types: complex multiple-choice (CMC)
• are best for complex situations with multiple correct solutions.
• may incorporate a direct question or incomplete statement stem format.

CMC items
Which of the following lab test results have been associated with fibromyalgia or myofascial pain?
Elements
1. Elevated CPK
2. Elevated LDH isoenzyme subsets
3. White blood cell magnesium deficiency
4. EMG abnormalities
Options
A. 1 and 3 only
B. 1 and 4 only
C. 2 and 3 only
D. 2 and 4 only

K-Type Item
A child suffering from an acute exacerbation of rheumatic fever usually has
1. An elongated sedimentation rate
2. A prolonged PR interval
3. An elevated antistreptolysin O titer
4. Subcutaneous nodules
A. 1, 2, and 3 only
B. 1, 3 only
C. 2, 4 only
D. 4 only
E. All are correct
(From: Constructing Written Test Questions for the Basic and Clinical Sciences, Case & Swanson, 1996, NBME, Philadelphia, PA)

Other Item Types: negatively worded
• Avoid negative wording when a positively worded item (e.g., CMC type) can be used.
• Negative wording encourages measurement error when able candidates become confused.

Negatively worded items
A civil subpoena is valid for all of the following EXCEPT when it is
A. served by registered mail.
B. accompanied by any required witness fee.
C. accompanied by a written authorization from the patient.

Convert negatively worded items to CMC items
• If you find yourself writing a negatively worded item, finish it.
• Then consider rewriting it as a CMC item where 2-3 elements are true, and 1 or 2 elements are not included in the key.
• Don’t write all CMC items to have 3 true and 1 false element. Mix it up, e.g., 2 true and 2 false.

Things to do

Use an efficient and clear option format.
• List options on separate lines.
• Begin each option with a letter (i.e., A, B, C, D) to avoid confusion with numerical answers.
• Write options in similar lengths.
  • New item writers tend to produce keys that are longer and more detailed than the distractors.

Put as many words as possible into the stem.
The psychometrician should recommend
A. that the committee write longer, more difficult to read stems.
B. that the committee write distractors of length similar to the key.
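
The advice to write options in similar lengths lends itself to a quick automated check during item review. The sketch below is an illustration only: the function names, the word-count measure, the 1.5 threshold, and both sample items are assumptions, not part of the workshop materials.

```python
def key_length_ratio(options, key):
    """Word count of the key divided by the mean word count of the distractors."""
    key_words = len(options[key].split())
    distractor_counts = [
        len(text.split()) for label, text in options.items() if label != key
    ]
    if not distractor_counts:
        raise ValueError("an item needs at least one distractor")
    return key_words / (sum(distractor_counts) / len(distractor_counts))


def key_stands_out(options, key, max_ratio=1.5):
    """Flag items whose key is much longer than the distractors (assumed threshold)."""
    return key_length_ratio(options, key) > max_ratio


# Hypothetical items written only for this example.
balanced = {
    "A": "Use a random sample.",
    "B": "Use a convenience sample.",
    "C": "Use a volunteer sample.",
}
unbalanced = {
    "A": "Mail it.",
    "B": "Send the survey to a sample that reflects the entire population.",
    "C": "Skip it.",
}

print(key_stands_out(balanced, "B"))    # False
print(key_stands_out(unbalanced, "B"))  # True
```

A flag from a check like this is a prompt for the item writer to lengthen the distractors or tighten the key, not a verdict on the item.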

Write distractors with care
• Item difficulty largely depends on the quality of the distractors. The finer distinctions candidates must make, the more difficult the item.
• When writing item stems, you should do all you can to help candidates clearly understand the situation and the question.
• Distractors should be written with a more ruthless (but not tricky) attitude.

Write distractors you know some candidates will select.
• Use common misconceptions.
• Use candidates’ familiar language.
• Use impressive-sounding and technical words in the distractors.
• Use scientific and stereotyped phrases, and verbal associations.

Things to avoid

Avoid
• using “All of the above” or “None of the above” as options.
• using stereotypical or prejudicial language.
• overlapping data ranges.
• using humorous options.
• placing similar phrases in the stem and key, even including identical words.
• writing the key in far more technical, detailed language.
• producing items related to definitions.

Avoid
• using modifiers associated with
  • true statements (e.g., may, sometimes, usually) for keys.
  • false statements (e.g., never, all, none, always) for distractors.
• options having the same meaning. Test-wise candidates reason that both options must therefore be incorrect.
• using parallel options (mutually exclusive) unless balanced by another pair of parallel options.
• writing items with undirected stems
  • Use the “undirected stem test”
• writing items that allow test wise candidates to converge on the key.

Converging on the key
A. 2 pills qid
B. 4 pills bid
C. 2 pills qid
D. 6 pills tid

Are you test wise?
You are test wise if you can select the key based on clues given in the item without knowing the content. Please refer to your Pre-test Exercise.

Test Development
Julia M. Leahy, PhD
Chauncey Group International

Test Development (diagram labels)
Item Evaluation, Job Analysis, Test Plan, Test Content Experts, Pretest, Draft Items, Edit & Review

Validity
• Test specifications derived from job analysis
• Test items linked to job analysis and test specifications
• Test items measure content that is relevant to occupation or job.

Validity
Content validity refers to the degree to which the items on a licensure/certification examination are representative of the knowledge and/or skills that are necessary for competent performance

Validity
The specific use of test scores and/or the interpretations of the results.

Validity
Supports the appropriateness of the test content to the domain the test is intended to represent.

Test Form Assembly

Program Considerations
Factors to consider:
• Computer-Based Testing
  • Linear test forms versus pools of items
  • Continuous testing or windows
  • Issues of exposure
  • Item bank size: probably large
• Paper-and-Pencil Examinations
  • Single versus multiple administrations
  • Issues of exposure
  • Item bank size: small to large

Test Specifications
• What content is important to test?
• What content is necessary to be a minimally competent practitioner?
• How much emphasis should be placed on certain content categories?

Creating Test Specifications
Use data from Job Analysis. Determine the following:
• What content do we put in each test?
• What feedback do we give people who are not successful?
• What kinds of questions do we ask?
• How many of each kind do we ask?

Test Specifications
Should include:
• Purpose of the Test
• Intended Population
• Test Domain and Relative Emphasis
  • Content to be tested
  • Cognitive level to be assessed
• Mode of Assessment & Item Types
• Psychometric Characteristics

Test Specifications
Use test specifications
• Every time a test form is created
• To be certain each test form asks questions on important content
• Validity: passing the test is supposed to mean that a person knows enough to be considered proficient
• Fairness: it would be unfair if certain content were not on every form

Using Test Specifications for Item Development
You’re not flying blind!

Factors Influencing Numbers of Forms and Items Needed Annually
Test Modality
• Is the test a paper-and-pencil or a computer-based test?
• Are both methodologies used?
• If CBT, will the test be administered in windows or continuously?

Factors Influencing Numbers of Forms and Items Needed Annually
Test length
• How many items will be needed for one form?
• How many forms?
• How often will forms be changed?
• What is the allowable percentage of overlap?

Factors Influencing Numbers of Forms and Items Needed Annually
Number of test administrations per year
• Will each administration have a different form?
• What is the expected test volume per form?
• How are special test situations handled?

Factors Influencing Numbers of Forms and Items Needed Annually
Level of Security Needed:
• Is this a high-stakes examination for licensure or certification in an occupation or profession?
• Or is the examination for low-stakes certificates, such as with continuing education or self-assessment?

Factors Influencing Numbers of Forms and Items Needed Annually
Organizational Policies:
• When and under what circumstances can failing candidates repeat the examination?
• Must items be blocked for repeat candidates?
• Is there a minimum number of candidate responses required for new/pretest items?
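
The test-length questions above (items per form, number of forms, allowable overlap) can be turned into a rough planning number. The sketch below assumes one particular overlap model, which the slides do not specify: every form shares a single common block of items, and the rest of each form is unique. The function name and the example figures are hypothetical, and pretest items, blocked items for repeat candidates, and bank attrition are ignored.

```python
def unique_items_needed(form_length, n_forms, overlap_pct):
    """Distinct operational items required for n_forms forms.

    Assumed model: one common block of overlap_pct percent of each form
    is shared by all forms; the remaining items are unique to each form.
    """
    if form_length <= 0 or n_forms <= 0:
        raise ValueError("form_length and n_forms must be positive")
    if not 0 <= overlap_pct <= 100:
        raise ValueError("overlap_pct must be between 0 and 100")
    shared = form_length * overlap_pct // 100   # size of the common block
    return shared + n_forms * (form_length - shared)


# Hypothetical program: three 100-item forms with 20% allowable overlap.
print(unique_items_needed(100, 3, 20))  # 260
```

Other overlap designs (for example, chained overlap between consecutive forms) would give different totals, so the model should be matched to the program's actual equating and security policies.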

Using Test Specifications

Test Form Assembly
Select items
• Meet the test plan specifications
• Total number of items
• Correct distribution of items by domains or subdomains
• Preference to use items with known statistical performance
• Distribution of statistical parameters, such as difficulty and discrimination

Test Form Assembly
Select items
• Determine need to consider non-test plan parameters in form assembly
• Cognitive level
• Use automatic selection software, if possible
• Generate test forms that meet required and preferred parameters

Test Form Assembly
Consider Test Delivery: CBT
• For continuous CBT delivery, large numbers of equivalent forms are needed for security reasons
• Every form must meet the same detailed content and statistical specifications
• Quality assurance is vital; forms cannot vary in quality, content coverage, difficulty, or pacing
• Reproducibility and accuracy of scores and pass/fail decisions must be consistent across forms and over time

Test Form Assembly
Automatic Item Selection
• Consider multiple rules for selecting items, such as content codes and statistics
• Determine number of forms to reduce exposure
• Still need to evaluate selected form for overlap

5-Step Item Review Process
• Grammar
• Style
• Internal Expert Review
• Sensitivity/Fairness
• External/Client Review

Grammar
• Review items for spelling, punctuation, and grammar
• Usually done by an editor or trained test developer

Style
• Format items to conform with established style guidelines
• Use capitalization and bolding as appropriate to alert candidates to words such as:
  • Maximum, Minimum, and Except

Internal Review
Review by internal experts for verification of content accuracy
• Is the key correct?
• Is the key referenced (if applicable)?
• Are the distractors clearly wrong, yet plausible?
• Is the item relevant?

Sensitivity/Fairness
• Candidate Fairness: all candidates should be treated equally & fairly regardless of differences in personal characteristics that are not relevant to the test
• Acknowledge the multicultural nature of society and treat its diverse population with respect

Sensitivity/Fairness
• Review by test developers and/or external organizations
• Review items for references to gender, race, religion, or any possibly offensive terminology
• Use only when relevant to the item

Sensitivity/Fairness
ETS Sensitivity Review Guidelines and Procedures:
• Cultural diversity of the United States
• Diversity of background, cultural traditions and viewpoints
• Changing roles and attitudes toward groups in the US.
• Contributions of various groups
• Role of language in setting and changing attitudes towards various groups

Sensitivity/Fairness
Stereotypes:
• No population group should be depicted as either being inferior or superior
• Avoid inflammatory material
• Avoid inappropriate tone
• Appropriate tone reflects respect and avoids upsetting or otherwise disadvantaging a group of test takers.

Sensitivity/Fairness
Stereotypes: Examples:
• Men who are abusers
• Women who are depressed
• African Americans who live in depressed environments
• Adults 65 and older who are frail, elderly and unemployable

Sensitivity/Fairness
Stereotypes: Examples:
• People with disabilities who are nonproductive
• Using diagnoses or conditions as adjectives

Sensitivity/Fairness
How to Avoid Stereotypes: Examples:
• Instead of “A depressed patient”, use “The patient with depression”
• Instead of “A diabetic patient”, use “The patient with diabetes mellitus”
• Instead of “An elderly person”, use “A 72-year-old person”
• Instead of “A psychiatric patient”, use “A patient with paranoid schizophrenia”

Sensitivity/Fairness
How to Avoid Stereotypes: Examples:
• Instead of “A male who abuses women”, use “An individual with abusive tendencies” (avoid gender)
• Instead of “A housewife”, use “An individual who is a primary caretaker”
• Instead of “A Hispanic who speaks no English”, use “An individual who speaks English as a second language”
• Instead of “An Asian American who eats sushi”, use “An individual whose diet consists mainly of fish”

Sensitivity/Fairness
Population diversity: No one population group should be dominant:
• Ethnic balance: use ethnicity only when necessary.
• Gender balance: avoid male and female identification if at all possible.
Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003 Sensitivity/Fairness Ethnic group references: African American or Black; Caucasian or White; Hispanic American; Asian American Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003 Sensitivity/Fairness Inappropriate tone: Avoid highly inflammatory material that is inappropriate to content of examination Avoid material that is elitist, patronizing, sarcastic, derogatory or inflammatory Examples: lady lawyer; little woman; strongwilled male Avoid terminology that might be known to only one group Examples: stickball; country clubs; maven Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003 Differential Item Functioning Identifies items that function differently for two groups of examinees or candidates DIF is said to occur for an item when the performance on that item differs systematically for focal and reference group members with the same level of proficiency Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003 Differential Item Functioning Reference group: Majority group: In nursing, that is general white-females Focal groups: All minority groups Men Non-white ethnic groups Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003 Differential Item Functioning Use the Mantel-Haenszel (MH) procedure, which matches the reference and focal groups on some measure of proficiency, which generally is the total number right score on the test Requires a minimum number per focal group—can do with a minimum of 40-50 in focal group Presented at CLEAR’s 23rd Annual Conference Toronto, Ontario September, 2003 Differential Item Functioning Examples of content related issues: Negative category C DIF was noted for males on content related to woman’s health Positive category C DIF was noted for males on content involving the use of equipment and actions likely to be taken in emergencies Presented at CLEAR’s 23rd 

Differential Item Functioning
Negative category C DIF was noted for the focal minority groups on content involving references to/inferences about:
- assumptions regarding the nuclear family, childrearing, and dominant culture
- idiomatic use of language
- hypothetical situations requiring "role-playing"

External/Client Review
Review by external experts for verification of content accuracy
- Is the key correct?
- Is the key referenced (if applicable)?
- Are the distractors clearly wrong, yet plausible?
- Is the item relevant?

Review and Approval
• Item review can be an iterative process
• Yes, No, and Yes with modifications
• Who has final sign off on items?

Test Production
Paper-and-pencil forms
• Determine format
• One column; Two column
• Directions -- back page, if booklet sealed
• Answer sheets

Test Production
Computer-based tests
• Linear forms or test pools
• Tutorials
• Item appearance:
  • Top -- bottom
  • Side by side
• Survey forms
• Form review

Item/Pool Review
• Establishing a process for item review
• How item review relates to item approval
• Who should be involved in the item review
• How often should items be reviewed

Timing of Item/Pool Reviews
• Establish a schedule for item reviews
  Anticipate regulatory or industry changes
• Review and revise items for content accuracy
  Use candidate comments for feedback on items
• Review and revise items based on statistical information *
* To be discussed in the afternoon session

Setting The Cut-Score
Paul D. Naylor, Ph.D.
Psychometric Consultant

Standard Setting
- The process used to arrive at a passing score
- Lowest score that permits entry to the field
- Recommended standard

Standards
- Mandated
- Norm-referenced
- Criterion-referenced

Mandated Standards
- Often used in licensing
- Difficult to defend
- Not related to minimum qualification

Norm-referenced Standards
- Popular in schools
- Limits entry
- Inconsistent results

Criterion-referenced Standards
- Wide acceptance in professional testing
- Determines minimum qualification
- Not test population dependent
- Exam or item centered

Procedures
- Angoff (modified)
- Nedelsky
- Ebel
- Others

Minimally Competent Performance
- Minimum acceptable performance
- Minimal qualification
- Borderline
- It’s all relative

Angoff Method
- Judges
- Selection
- Training
- Probabilities
- Would vs Should
- Rater agreement
- Tabulation

AV       Ratings
         1       2       3       4       5       6
68.3     60      75      70      50      80      75
52.5     60      50      45      45      55      60
72.5     85      80      75      70      70      55
89.2     95      95      90      80      85      90
76.7     75      70      90      70      75      80
77.5     75      70      85      80      85      70
72.78    75.0    73.33   75.83   65.83   75.0    71.66

Application
- Adjustment
- Angoff Values
- Alternative Forms of Exam
- Passing Score

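The tabulation step of the Angoff method can be checked with a short script. The sketch below reuses the ratings from the table above and assumes that each row is one item and each column is one judge, which the slide does not state outright; the variable names are illustrative. Averaging across judges gives each item's Angoff value (the AV column), and averaging the item values gives the recommended percent-correct standard.

```python
# Ratings from the slide: assumed layout is one row per item, one column per judge.
ratings = [
    [60, 75, 70, 50, 80, 75],
    [60, 50, 45, 45, 55, 60],
    [85, 80, 75, 70, 70, 55],
    [95, 95, 90, 80, 85, 90],
    [75, 70, 90, 70, 75, 80],
    [75, 70, 85, 80, 85, 70],
]

# Angoff value per item: mean of the judges' probability estimates for that item.
item_means = [sum(row) / len(row) for row in ratings]

# Mean rating per judge, useful for spotting unusually lenient or severe judges.
judge_means = [sum(col) / len(col) for col in zip(*ratings)]

# Recommended standard: mean of the item Angoff values, as a percent correct.
cut_percent = sum(item_means) / len(item_means)

print("Item Angoff values:", [round(m, 1) for m in item_means])
print("Judge means:", [round(m, 2) for m in judge_means])
print("Recommended cut score (% correct):", round(cut_percent, 2))
```

The computed item values and the overall mean agree with the slide (72.78). The last judge's mean rounds to 71.67, where the slide shows the truncated 71.66.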

References
- Livingston, S.A. & Zieky, M.J. (1982). Passing Scores. ETS.
- Cizek, G.J. Standard setting guidelines. Educational Measurement: Issues and Practice, 15(1), 13-21, 12.
- CLEAR Exam Review (Winter 2001, Summer 2001 and others)

Presentation Follow-up
Please pick up a handout from this presentation
-AND/OR-
Please give me your business card to receive an e-mail of the presentation materials
-OR-
Presentation materials will be posted on CLEAR’s website

QUESTIONS AND ANSWERS
THANK YOU!

Scaling and Scoring
Lauren J. Wood, PhD, LP
Director of Test Development
Experior Assessments: A Division of Capstar

Scaling and Scoring
Scaling and Scoring Objectives:
-Describe a number of types of scores that you may wish to report
-Define “scaled scores” and describe the scaling process

Scaling and Scoring
Back to the Basics
Public Protection versus Candidate Fairness

Scoring
- Scoring of the examination needs to be considered long before the examination is administered.
- It is important to the examinees that the decision (pass/fail) and the scoring (raw, scaled) be reported in simple, clear language.

Scaling and Scoring
Scoring: Who gets the score reports?

Scoring: What do you report?
Types of scores:
-Score outcome: pass/fail
-Score in comparison to a criterion
-Score in comparison to others

Scoring: What do you report?
- Helpful to candidates to report subscore or section information
  - Strengths and weaknesses
  - Plan for remediation
- Need to take care that each subscore or section score is meaningful
  - Number of subscores/questions
- Can be graphical rather than numeric

Scoring: When/where do you report the scores?
- Delayed: Mailed to examinee’s home
  -group comparisons
  -scoring/scaling
- Immediate: At the test site

Scoring
Score Reporting:
-Compute examinee scores directly (raw scores)
-Compute scaled or derived scores

Scaling and Scoring
Three Candidates’ Experience:
Exam: Residential Plumbing Exam (Theory)
Administration date: August 11, 2003
Cut score: 70% correct

Scaling and Scoring
Three Candidates’ Score Reports
Candidate #2
The Occupational and Professional Licensing Division regrets to inform you that you did not attain a satisfactory grade on the Residential Plumbing examination that you took on August 11, 2003.
Your examination grade is: 74 Fail
You may retake the examination…

Scaling and Scoring
Three Candidates’ Score Reports
Candidate #3
The Occupational and Professional Licensing Division is pleased to inform you that you attained a satisfactory grade on the Residential Plumbing examination that you took on August 11, 2003.
Your examination grade is: 66 Pass
Congratulations!
You may apply for licensure by…

Scaling and Scoring
Three Candidates’ Score Reports
               Score   Result
Candidate #1   68      Fail
Candidate #2   74      Fail
Candidate #3   66      Pass

Scaling and Scoring
Three Candidates’ Score Reports
What happens next?

Scaling and Scoring
Three Candidates’ Score Reports
Candidate #1
The Occupational and Professional Licensing Division regrets to inform you that you did not attain a satisfactory grade on the Residential Plumbing examination that you took on August 11, 2003.
Your examination raw score is: 68 Fail
You may retake the examination…

Scaling and Scoring
Three Candidates’ Score Reports
Residential Plumber Examination
- Form #1
- Form #2
- Form #3

Scaling
Why develop multiple forms of the same examination?
1) Exam security
2) Examination and question content changes over time

Scaling and Scoring
How do the forms of the Examination Differ?
- Item content differs, though the content of the items remains true to the exam content outline
- Item difficulty, discrimination, etc. differ across the different forms of the examination

Scaling and Scoring
Three Candidates’ Score Reports
Residential Plumber Examination
- Form #1: P-value=.66
- Form #2: P-value=.72
- Form #3: P-value=.70

Scaling and Scoring
Equating: The design and statistical procedure that permits scores on one form of a test to be comparable to scores on an alternative form of an examination

Scaling and Scoring
Why equate forms?
- Adjust for unintended differences in form difficulty
- Ease in candidate-to-candidate score interpretation
- Maintain candidate fairness in the testing process

Scaling and Scoring
How is this done?
- There are a number of methods used to equate examination scores
- Statistical conversions of the scores are applied and the resulting scores are often called “scaled scores” or “derived scores”

Scaling and Scoring
Three Candidates’ Score Reports
               Raw Score   Scaled Score   Status
Candidate #1   68          67             Fail
Candidate #2   74          66             Fail
Candidate #3   66          70             Pass

Scaling and Scoring
Three Candidates’ Score Reports
Candidate #1
The Occupational and Professional Licensing Division regrets to inform you that you did not attain a satisfactory grade on the Residential Plumbing examination that you took on August 11, 2003.
Your examination scaled score is: 67 Fail
You may retake the examination…

Scaling and Scoring
Raw score
- Advantage: Meaning clearly understood
- Disadvantage: Can’t make comparisons; specific for each test administration

Scaling and Scoring
Scaled score: Based on test mean, standard deviation and raw score
- Advantage: Make meaningful comparisons
- Disadvantage: Interpretation not clear cut

Scaling
When scaling procedures are performed it is often important to provide an explanation that such procedures will be performed and what these scores will mean to the candidate.

Scaling
“The Purpose of Scaling” (Candidate Information Bulletin)
Scaling allows scores to be reported on a common scale. Instead of having to remember that a 35 on the examination that you took is equivalent to a 40 on the examination that your friend took, we can use a common scale and report your score as a scaled score of 75. Since we know that your friend’s score of 40 is equal to your score of 35, your friend’s score would also be reported as a scaled score of 75.

Scaling and Scoring
- Starts at the beginning of the test development process
- Report as outcome, relation to criterion, relation to others
- Delayed or immediate
- Raw or scaled scores
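One simple way to build a scaled score from the test mean, standard deviation and raw score is a linear (z-score) conversion, sketched below. It is only one of a number of possible methods, and the slides do not say which conversion was used for the plumbing examination. The form means and standard deviations and the reporting scale (mean 70, SD 5) are hypothetical values chosen so that the Candidate Information Bulletin example works out: a raw 35 on one form and a raw 40 on a harder-to-compare form both report as 75.

```python
from dataclasses import dataclass


@dataclass
class FormStats:
    """Raw-score mean and standard deviation for one examination form (hypothetical)."""
    mean: float
    sd: float


def scaled_score(raw, form, scale_mean=70.0, scale_sd=5.0):
    """Linear scaling: express the raw score as a z-score on its own form,
    then place that z-score on the common reporting scale."""
    z = (raw - form.mean) / form.sd
    return scale_mean + scale_sd * z


# Hypothetical statistics: your form is harder (lower mean) than your friend's form.
your_form = FormStats(mean=30.0, sd=5.0)
friends_form = FormStats(mean=35.0, sd=5.0)

print(scaled_score(35, your_form))     # your raw 35
print(scaled_score(40, friends_form))  # your friend's raw 40
```

Both calls produce the same scaled score, which is the point of reporting on a common scale: candidates who performed equally well relative to their own form receive the same reported score.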