Designing and Evaluating Assessment for Introductory Statistics

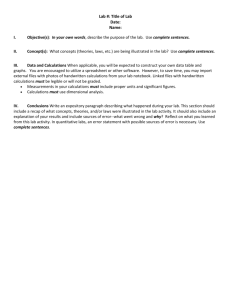

Minicourse #1
Beth Chance (bchance@calpoly.edu), Bob delMas, Allan Rossman
NSF grant PI: Joan Garfield

Outline
Today: overview of assessment; introductions; assessment goals in introductory statistics; principles of effective assessment; challenges and possibilities in statistics; overview of the ARTIST database.
Friday: putting an assessment plan together; alternative assessment methods; nitty-gritty details; individual plans.

Overview
Assessment: an ongoing process of collecting and analyzing information relative to some objective or goal. It is reflective, diagnostic, flexible, and informal.
Evaluation: interpretation of evidence, judgment, comparison between intended and actual outcomes, and use of the information to make improvements.

Dimensions of Assessment
Evaluation of program: evaluate curricula, allocate resources.
Monitoring instructional decisions: judge teaching effectiveness.
Evaluating students: give grades, monitor progress.
Promoting student progress: diagnose student needs.

Types of Assessment
Formative assessment: in-process monitoring of ongoing efforts in an attempt to make rapid adjustments. Example: teaching.
Summative assessment: records impact and overall achievement, compares outcomes to goals, and decides next steps. Example: learning.

Bloom's Taxonomy
Knowledge, Comprehension, Application, Analysis, Synthesis, Evaluation, and the interrelationships among these levels.

An Assessment Cycle
1. Set goals
2. Select methods
3. Gather evidence
4. Draw inference
5. Take action
6. Re-examine goals and methods
Examples: an introductory course; a lesson on sampling distributions.

Reflect on Goals
What do you value, from both the instructor's and the student's point of view? Consider content, abilities, and values. At what point in the course should students develop the knowledge and skills? Translate these into learning outcomes/objectives: what should students know and be able to do by the end of the course? Outcomes must be measurable!
Some of My Course Goals
Understand basic terms (literacy).
Understand the statistical process.
Be able to reason and think statistically. This means not just the individual pieces but being able to apply them, including the role of context, the effect of sample size, caution when using procedures, belief in randomness, and association vs. causation.
Communication and collaboration skills.
Computer literacy.
Interest level in statistics.

Possible Future Goals
Process (not just product) of collaboration. Learn how to learn. Appreciate learning for its own sake. Develop the skills needed to understand both what they have learned and what they do not understand.

Assess what you value
Students value what they are assessed on.

Example
Given the numbers 5, 9, 11, 14, 17, 29:
(a) Find the mean.
(b) Find the median.
(c) Find the mode.
(d) Calculate a 95% confidence interval for μ.

"Traditional" Assessment
Good for assessing: isolated computational skills, (short-term) memory retrieval, and use and tracking of common misconceptions. How many right answers?
Provides us with: consistent and timely scoring, and a predictor of future performance.

"Traditional" Assessment
Less effective at assessing:
Can they explain their knowledge?
Can they apply their knowledge?
What are the limitations in their knowledge?
Can they make good decisions?
Can they evaluate?
Can they deal with messy data?
What is the role of prior knowledge?

Focus on what and how students learn and on what students can now do, not on what faculty teach.

Nine Principles (AAHE)
Start with educational values. Multidimensional, integrated, over time. Clearly stated purposes. Pay attention to outcomes and process. Ongoing. Student representation. Important questions. Support change. Accountability.

Select Methods
Need multiple, complementary methods.
Need to extend students with less predictable, less discrete tasks.
Need to provide indicators for change.
Need a prompt, informative feedback loop, observable behavior, and adequate time.
An ongoing, linked series of activities over time.
Continuous improvement, self-assessment.
Students must believe in its value.

Focus on the most prevalent student misconceptions.

Repeat the Cycle
Focus on the process of learning. Collaborate. External evaluation. Continual refinement. Feedback to both instructors and students. Discuss results with students and motivate them to take responsibility for their own learning. Consider other factors. Consider unexpected outcomes. Don't try to do it all at once!

Use the results of the assessment to improve student learning.

Challenges in Statistics Education
Doing statistics versus being an informed consumer of statistics.
Statistics vs. mathematics: the role of context, messiness of solutions, computers handling the details of calculations, the need to defend an argument, and evaluation based on the quality of reasoning, methods, and evidence used.
We have become pretty comfortable with the lecture/reproduction format.
Traditional assessment feels more objective.

Challenges in Statistics Education
Reduce the focus on calculation.
Reveal intuition and statistical reasoning.
Require a meaningful context: purpose, statistical interest, a meaningful reason to calculate.
Careful, detailed examination of data.
Use of statistical language.
Meaningful tasks, similar to what students will be asked to do "in real life".

Some Techniques
Multiple choice.
"What if" questions, working backwards, "construct a situation that…".
Objective-format questions with identification of the false response, with explanation or reasoning choices, or with judgment and critique (when is this appropriate?):
e.g., comparative judgment of the strength of a relationship;
e.g., matching boxplots with normal probability plots.
Missing pieces of output or background.

Some Techniques
Combine with alternative assessment methods:
e.g., projects, which show the entire process and the messiness of real data collection and analysis;
e.g., case studies, which focus on real data and real questions, with students doing and communicating about statistics.
Self-assessment, peer evaluation.

What can we learn?
Sampling distribution questions: Which graph best represents a distribution of sample means for 500 samples of size 4? (Graph choices A, B, C, D, E.)

What can we learn?
Asking students to write about their understanding of sampling distributions. I now place more emphasis in my teaching on labeling the horizontal and vertical axes, considering the observational unit, distinguishing between "symmetric" and "even", and spending much more time on the concept of variability. I also know better questions to ask to assess their understanding of the process.

ARTIST Database
First…

HW Assignment
Assessment framework:
WHAT: concepts, applications, skills, attitudes, beliefs
PURPOSE: why, how used
WHO: student, peers, teacher
METHOD
ACTION/FEEDBACK: and so?

HW Assignment
Suggest a learning goal, a method, and an action. Be ready to discuss it with peers, then with the class, on Friday.
Sample Final Exam (p. 17): What skills/knowledge are being assessed? Conceptual/interpretative vs. mechanical/computational.

Day 2

Overview
Quick leftovers on the ARTIST database? Critiquing the sample final exam. Implementation issues (exam nitty-gritty). Additional assessment methods. Holistic scoring/developing rubrics. Your goal/method/action. Developing an assessment plan. Wrap-up/evaluations.

Sample Final Exam
In-class component (135 minutes). What skills/knowledge are being assessed? Conceptual/interpretative (C/I) vs. computational/mechanical (C/M)?

Sample Exam Question 1
Stemplot; shape of distribution; appropriateness of numerical summaries. C/I: 5, C/M: 3.

Sample Exam Question 2
Bias; precision; sample size. C/I: 8, C/M: 0. No calculations; no recitation of definitions.

Sample Exam Question 3
Normal curve; normal calculations. C/I: 4, C/M: 3.

Sample Exam Question 4
Sampling distribution, CLT; sample size; empirical rule. C/I: 4, C/M: 0. Students would have had practice. Explanation is more important than selection.

Sample Exam Question 5
Confidence interval; significance test, p-value; practical vs. statistical significance. C/I: 7, C/M: 2. No calculations needed, but students need to understand the interval vs. the test.

Sample Exam Question 6
Experimentation; randomization; random number table. C/I: 4, C/M: 4. Tests a data collection issue without requiring data collection.

Sample Exam Question 7
Experimental design; variables; confounding. C/I: 13, C/M: 0. Another question on data collection issues.

Sample Exam Question 8
Two-way table; conditional proportions; chi-square statistic, test; causation. C/I: 5, C/M: 9. Does not require calculations to conduct the test.

Sample Exam Question 9
Boxplots; ANOVA table; technical assumptions. C/I: 7, C/M: 3. Even the calculations require understanding the relationships in the table.

Sample Exam Question 10
Scatterplot, association; regression, slope, inference; residual, influence; prediction, extrapolation. C/I: 15, C/M: 0. Remarkable for a regression question!

Sample Exam Question 11
Confidence interval, significance test; duality. C/I: 9, C/M: 2. Again, no calculations required.

Sample Exam
C/I: 79, C/M: 28 (74% conceptual).
Coverage: experimental design, randomization; bias, precision, confounding; stemplots, boxplots, scatterplots, association; normal curve, sampling distributions; confidence intervals, significance tests; chi-square, ANOVA, regression.

Nitty Gritty
External aids… Process of constructing the exam… Timing issues… Student preparation/debriefing…

Beyond Exams
Combine with additional assessment methods:
e.g., projects, which show the entire process and the messiness of real data collection and analysis;
e.g., case studies, which focus on real data and real questions, with students doing and communicating about statistics;
generation instead of only validation…

Beyond Exams (p. 8)…
Written homework assignments/lab assignments; minute papers; expository writings; portfolios/journals; student projects; paired quizzes/group exams; concept maps.

Student Projects
The best way to demonstrate the practice of statistics to students: experience the fine points of research; experience the "messiness" of data; the statistician's role as a team member; from beginning to end; formulation and explanation; constant reference.

Student Projects
Choice of topic; choice of group; ownership; in-class activities first; periodic progress reports; peer review; guidance/interference early in the process; presentation (to me, an alum, a fellow student); full lab reports.
statweb.calpoly.edu/chance/stat217/projects.html

Project Issues
Assigning grades, individual accountability. Insignificant/negative results: reward the effort. Iterative: long vs. short projects; encourage/expect revision. Coverage of statistical tools. Workload.

Holistic Scoring
Not analytic (each part = X points). The problem is graded as a whole. Calculations are one of many parts. Strengths in one section can balance weaknesses in another.

Holistic Scoring
Did the student demonstrate knowledge of the statistical concept involved?
Did the student communicate a clear explanation of what was done in the analysis and why?
Did the student express a clear statement of the conclusions drawn?
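The Sample Exam slides classify each question's items as conceptual/interpretative (C/I) or computational/mechanical (C/M) and then report a summary share. The short Python sketch below tallies those counts as a check on the arithmetic. It is illustrative only. The dictionary transcribes the per-question counts from the slides, and the names `QUESTION_COUNTS` and `conceptual_share` are hypothetical.

Summed as transcribed, the question counts give 81 C/I and 26 C/M, which is about 76% conceptual. The summary slide instead reports 79 and 28, about 74%. Both pairs total 107, and the source does not say which figures are authoritative.

```python
# Hypothetical tally of the Sample Exam classification, transcribed from the question slides.
# Each entry maps question number -> (C/I count, C/M count), where
# C/I = conceptual/interpretative and C/M = computational/mechanical.
QUESTION_COUNTS = {
    1: (5, 3),
    2: (8, 0),
    3: (4, 3),
    4: (4, 0),
    5: (7, 2),
    6: (4, 4),
    7: (13, 0),
    8: (5, 9),
    9: (7, 3),
    10: (15, 0),
    11: (9, 2),
}


def conceptual_share(ci: int, cm: int) -> float:
    """Percentage of the classified items that are conceptual/interpretative."""
    return 100 * ci / (ci + cm)


ci_total = sum(ci for ci, _ in QUESTION_COUNTS.values())
cm_total = sum(cm for _, cm in QUESTION_COUNTS.values())

# Questions where computational/mechanical items outnumber conceptual ones.
mostly_computational = [q for q, (ci, cm) in QUESTION_COUNTS.items() if cm > ci]

print(f"Question sums: C/I {ci_total}, C/M {cm_total}, "
      f"{conceptual_share(ci_total, cm_total):.0f}% conceptual")
print(f"Summary slide: C/I 79, C/M 28, {conceptual_share(79, 28):.0f}% conceptual")
print("Mostly computational:", mostly_computational)
```

Either way, about three quarters of the exam is conceptual. Only Question 8 (two-way table and chi-square) has more C/M than C/I items.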
Holistic Scoring
Students may lose points if they don't clearly explain why the method was chosen, the assumptions of the method, the line of reasoning, or the final conclusion in context.

Developing Rubrics
A scoring guide/plan. Focus on the goal/purpose of the question. Consistency, inter-rater reliability. What information do you hope to learn from the student's performance? Identify valuable student behaviors/correct characteristics. List those characteristics in observable terms (Best/Good/Fair/Poor).

Developing Rubrics
Multiple procedures, variety of techniques. Can you see the students' thought processes and their knowledge of assumptions?

Developing Assessment Plan
Match the (most important) instructional goals. Multiple and varied indicators: interrelated, complementary. Well defined and well integrated throughout the course. Start with learning outcomes and your own questions. Detailed expectations, part of the learning process. Goals understood by students. Promote self-reflection, responsibility, trust.

Developing Assessment Plan
Timely, consistent feedback: indicators for change, feedback loop, reinforcement. Individual and group accountability. Openness to other (justified) interpretations; reward thoughtfulness and creativity. Not all at once, not too much. Collaborate. Continual reflection and refinement. Assess what you value.

Cautions!
Consider the time requirements for students and instructor! It is easier to solve than to explain. With experience, you become more efficient. Provide sufficient guidance. Give students familiarity with, and a clear understanding of, your expectations. Students may not be used to being required to think! They are less comfortable writing in complete sentences.

Wrap-Up
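The Developing Rubrics slides list consistency and inter-rater reliability as goals for a Best/Good/Fair/Poor rubric. One common way to quantify agreement between two graders is Cohen's kappa, κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed proportion of papers given the same level by both graders. p_e is the agreement expected by chance, computed from each grader's level frequencies.

The Python sketch below is not from the minicourse. The function name `cohens_kappa` and the ten ratings are hypothetical, made up only to show the calculation.

```python
from collections import Counter
from typing import Sequence

# Rubric levels from the Developing Rubrics slide.
RUBRIC_LEVELS = ("Best", "Good", "Fair", "Poor")


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Unweighted Cohen's kappa for two graders scoring the same papers (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    unknown = (set(rater_a) | set(rater_b)) - set(RUBRIC_LEVELS)
    if unknown:
        raise ValueError(f"ratings outside the rubric: {sorted(unknown)}")

    n = len(rater_a)
    # Observed agreement: share of papers given the same level by both graders.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: products of the two graders' marginal proportions, summed over levels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[level] * counts_b[level] for level in RUBRIC_LEVELS) / n**2
    if p_expected == 1:
        # Both graders gave every paper the same single level, so kappa is 0/0;
        # this sketch simply reports perfect agreement.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings of ten student papers by two graders.
grader_1 = ["Best", "Good", "Good", "Fair", "Poor", "Good", "Best", "Fair", "Good", "Poor"]
grader_2 = ["Best", "Good", "Fair", "Fair", "Poor", "Good", "Good", "Fair", "Good", "Fair"]
print(f"kappa = {cohens_kappa(grader_1, grader_2):.2f}")
```

In this made-up example the graders agree on 7 of 10 papers (p_o = 0.70), chance agreement is 0.28, and kappa is about 0.58. Because Best/Good/Fair/Poor is an ordered scale, a weighted kappa, which gives partial credit for near-misses such as Good vs. Fair, is often preferred. This unweighted version treats every disagreement the same.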