ppt

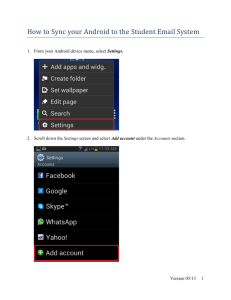

advertisement

QuickSync: Improving Synchronization Efficiency for Mobile Cloud Storage Services
Yong Cui, Zeqi Lai, Xin Wang*, Ningwei Dai, Congcong Miao
Tsinghua University; *Stony Brook University

Outline
– Background
– Measurement & Analysis
– Design & Implementation
– Evaluation
– Conclusion

The way we store data…

Mobile Cloud Storage Services (MCSS)
Data entrance of the Internet
– Major players: Dropbox, Google Drive, OneDrive, Box, …
– Large user base: Dropbox has more than 300 million users
– More and more mobile devices and data: MCSS helps manage data across your multiple devices
Basic function of MCSS
– Storing, sharing, and synchronizing data from anywhere, on any device, at any time via ubiquitous cellular/Wi-Fi networks

Architecture & Capabilities of MCSS
Architecture
– Control server: metadata management; storage server: file contents
– Sync process across your multiple clients
Sync efficiency is one of the most important properties of an MCSS.
Key capabilities
– Chunking: splitting a large file into multiple data units of a certain size
– Bundling: packing multiple small chunks into a single chunk
– Deduplication: avoiding retransmission of content already available in the cloud
– Delta encoding: transmitting only the modified portion of a file

Can data be synchronized efficiently?
Ideal
– These capabilities MAY synchronize our data efficiently in mobile/wireless environments (high delay and intermittent connections)
Reality
– Sync time is much longer than expected under various network conditions!
Challenges of improving sync efficiency
– Are these capabilities useful, or sufficient? How do they relate to each other?
– A novel angle of view: storage techniques combined with network techniques
– Closed-source clients and encrypted traffic make the root cause hard to identify

Aim of our work
We identify, analyze, and address the sync inefficiency problem of modern mobile cloud storage services.
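The chunking and deduplication capabilities listed above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Dropbox, Seafile, or QuickSync implement them: it swaps in a gear-style rolling hash for content-defined chunking and SHA-256 fingerprints for deduplication, and MIN_SIZE, MAX_SIZE, MASK, cdc_chunks, and dedup_upload are hypothetical names and values chosen only for this example.

```python
import hashlib

# Hypothetical parameters chosen for illustration; they are not any service's settings.
MIN_SIZE = 1024              # never cut a chunk shorter than this
MAX_SIZE = 16 * 1024         # force a cut so no chunk exceeds this
MASK = (1 << 12) - 1         # cut when the low 12 hash bits are all zero
MASK64 = (1 << 64) - 1       # keep the rolling hash within 64 bits

# Deterministic 64-bit "gear" value for each possible byte value.
GEAR = [int.from_bytes(hashlib.sha256(bytes([b])).digest()[:8], "big") for b in range(256)]


def cdc_chunks(data: bytes) -> list[bytes]:
    """Content-defined chunking with a gear rolling hash.

    Each step shifts the hash left by one bit, so after 64 bytes older
    input has fallen out and a cut point depends only on recent content.
    """
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & MASK64
        size = i - start + 1
        if (size >= MIN_SIZE and (h & MASK) == 0) or size >= MAX_SIZE:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])  # trailing chunk may be shorter than MIN_SIZE
    return chunks


def dedup_upload(chunks: list[bytes], server_index: set[str]) -> list[bytes]:
    """Return only the chunks whose SHA-256 fingerprint the server lacks,
    recording them in server_index as if they had been uploaded."""
    to_send = []
    for chunk in chunks:
        fp = hashlib.sha256(chunk).hexdigest()
        if fp not in server_index:
            server_index.add(fp)
            to_send.append(chunk)
    return to_send
```

With fixed-size chunking, inserting bytes at the head of a file shifts every later chunk boundary, so every chunk fingerprint changes. With content-defined boundaries, the cut points after the insertion re-align with the original ones, so uploading an edited copy through dedup_upload sends only the few chunks near the edit.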
Measurement & Analysis

Measurement Methodology
Understand the sync protocol
– Methodology: trace the app's encrypted traffic
– Decryption for in-depth analysis: hijack the SSL socket of Dropbox
– Three sync/upload stages: sync preparation, data sync, sync finish

Arrangement of our measurement
Exp. | Capability     | Dropbox | Google Drive | OneDrive | Seafile
-    | Chunking       | 4 MB    | 8 MB         | Var.     | Var.
1    | Dedup.         | ✔       | ✗            | ✗        | ✔
2    | Delta encoding | ✔       | ✗            | ✗        | ✗
3    | Bundling       | ✔       | ✗            | ✗        | ✗

Identifying the sync inefficiency problem: redundancy deduplication
– Seafile eliminates more redundancy than Dropbox on the same data set
– Seafile uses a smaller content-defined chunk size than Dropbox
– But Seafile may need more sync time even with reduced traffic!
– Finding more redundancy costs more CPU time
DER: deduplicated file size / original file size

Root cause analysis: why does less data cost more time?
– Chunking is closely related to deduplication
– Fixed-size chunking is efficient in good network conditions
– Aggressive chunking helps in high-delay environments
– There is a trade-off between traffic amount and CPU time under different network conditions
– Network-based chunking may be important

Identifying the sync inefficiency problem: delta encoding
– Dropbox fails at incremental sync with delta encoding
– Three operations (flip bits, insert, delete) over continuous bytes of a synced test file
– Inserting 2 MB at the head of a 40 MB file causes 20 MB to be transmitted
TUO: generated traffic size / expected traffic size

Root cause analysis: why incremental sync fails
– A large file is split into multiple chunks
– Delta encoding is performed only between mapped chunks
– Chunking is therefore the basis for delta encoding

Identifying the sync inefficiency problem: continuous modification
– Files are modified continuously while they are still being transmitted to the cloud (e.g., temporary files of MS Word or VMware)
– Incremental sync fails on long-delay networks
Root cause analysis
– The metadata is updated only after chunks are successfully uploaded
– A chunk still in the sync process cannot be used for delta encoding
TUO: generated traffic size / size of the revised file

Identifying the sync inefficiency problem: bandwidth inefficiency (small files)
– Synchronize files of different sizes
– Sync is inefficient for a large number of small files under high RTT
BUE: measured throughput / theoretical TCP bandwidth
Root cause analysis
– The client waits for an ack from the server before transmitting the next chunk
– Each small chunk is acknowledged sequentially, and too many new connections each go through TCP slow start, which is especially costly at high RTT
– Bundling is quite important

Identifying the sync inefficiency problem: bandwidth inefficiency (large files)
– Bundling small chunks is important (only Dropbox implements it)
– Bandwidth utilization decreases with large files
Root cause analysis
– Dropbox uses up to 4 concurrent TCP connections
– The client waits for all 4 connections to finish before the next iteration
– Several connections may sit idle between two iterations

Design & Implementation

QuickSync: improving sync efficiency
System overview with 3 techniques
– A network-aware content-defined chunker to identify redundant data
– An improved incremental sync approach that correctly performs delta encoding between similar chunks to reduce sync traffic
– Delay-batched acks to improve bandwidth utilization

Design detail: Network-aware Chunker
– Intuition: prefer larger chunks when bandwidth is sufficient, and chunk aggressively in slow networks
– QuickSync predefines several chunking strategies
– The client selects a chunking strategy for each file to minimize sync time
Sync time model, with S the data size, t_byte the per-byte computation time, and S' the size of the data left to transmit after deduplication (the denominator approximates TCP throughput):
$$T_{\mathrm{total}} = T_{\mathrm{comp}} + T_{\mathrm{comm}} = S \cdot t_{\mathrm{byte}} + \frac{S'}{\mathrm{Segment\_size} \cdot \mathrm{cwnd} / \mathrm{RTT}}$$

Design detail: Network-aware Chunker (virtual chunks in the server)
– A file needs to sync with multiple clients in various networks
– Avoid storing multiple copies of a file under different chunking methods
– A virtual chunk stores only an offset and a length, which are used to generate pointers to the content
– Supports multi-granularity deduplication without additional copies

Design detail: Redundancy Eliminator
Two-step sketch-based mapping
– Hash match: two chunks are identical
– Sketch match: two chunks are similar
– Delta encoding is performed between the two most similar chunks
Buffering incompletely synced chunks on the client
– Chunks waiting to be uploaded or delta-encoded are temporarily buffered on the local device
– Incremental sync therefore works in the middle of a sync process (for the MS Word or VMware temporary-file use cases)

Design detail: Batched Syncer
Batched transmission
– Defer the application-layer ack to the end of the sync process, and actively check un-acked chunks when the connection is interrupted
– The check is triggered when the client captures a network exception, or on timeout
Reuse existing network connections
– Reuse the storage connection to transfer multiple chunks, avoiding the overhead of repeated TCP/SSL handshakes

QuickSync implementation
Implementation over Dropbox
– Cannot be implemented directly on closed-source Dropbox
– Proxy-based architecture instead
– Our functionality runs between a client on a Galaxy Nexus and a proxy in EC2
– SampleByte is used for chunking and librsync for delta encoding
Implementation over Seafile
– The proxy architecture adds overhead
– Full implementation with Seafile, an open-source system, on both client and server

Evaluation

Impact of the Network-aware Chunker
Setup
– Data set: 200 GB backup
– RTT setting: 30–600 ms
Performance
– Up to 31% sync speed improvement
CPU overhead
– Client: <12.3%
– Server: <0.5% (per user)
Results show
– The network-aware chunker works well

Impact of the Redundancy Eliminator
Setup & results
– Repeat the same set of experiments (where the measured TUO was 5–10)
– Traffic utilization overhead (TUO) decreases to 1 (insert operation)
– Sketch-based mapping works well
– QuickSync synchronizes only new content under an arbitrary number of modifications: local buffering works well

Impact of the Batched Syncer
Setup
– Uploading files of different sizes
Performance
– Bandwidth utilization improves by up to 61% (more so with high RTT)
– Delayed ack works well
Exception recovery
– Delayed ack MAY lead to high overhead on exception recovery
– With active check, the overhead is lower than that of Seafile and Dropbox

Performance of the Integrated System
Setup
– Practical sync workloads on Windows / Android
– Source code backup, MS PowerPoint editing, data backup, photo sharing, …
Performance
– Traffic size reduction: up to 80.3% / 63.4% (Windows / Android)
– Sync time reduction: up to 51.8% / 52.9% (Windows / Android)

Conclusion
Measurement study & sync inefficiency analysis
– The basic capabilities are quite important for sync efficiency
– They are still far from performing well
– Network parameters are also important
QuickSync: design, implementation, evaluation
– Three key techniques
– Implementation over Dropbox & Seafile
– Practical sync workloads show its efficiency in both traffic size reduction and sync time reduction

Welcome to IETF
Standard sync protocol
– Third-party apps become easy to develop
– APIs become unnecessary, or at least simplified
– Sync and file sharing across different services become possible
– Internet storage services become easy to improve
Much interest from the IETF
– We introduced this idea at IETF 93 in July 2015
– Feedback from IETF participants: a good topic for a new working group
Our mailing list: storagesync@ietf.org
Wiki: https://github.com/iss-ietf/iss/wiki/Internet-Storage-Sync
Thank you! Questions?

Server-side storage overhead
Server-side overhead: measurement & analysis
– Using smaller chunks increases the metadata overhead (# of chunks)
– The additional overhead of QuickSync is only a very small fraction of the overall storage cost

Related work: measurement studies
– Measurement & performance comparison of enterprise cloud storage services [IMC'10]
– Measurement & benchmarking of personal cloud storage [IMC'12] [IMC'13]
– Sync traffic waste [IMC'14]
Our measurement identifies and reveals the root cause of sync inefficiency in wireless networks.

Related work: storage systems
– Enterprise data backup system [FAST'14]
– Data consistency in sync services [FAST'14]
– Reducing sync overhead [Middleware'13]
QuickSync focuses on performance in wireless networks and introduces network-related considerations.

Related work: CDC and delta encoding
– Content-defined chunking [FAST'08] [FAST'12] [INFOCOM'14] [SIGCOMM'00]
– Delta encoding: rsync, https://rsync.samba.org/
QuickSync uses CDC for a distinct purpose: adaptively selecting the optimal chunk size to achieve sync efficiency.
We also improve delta encoding and address its limitation in MCSS.
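The adaptive choice of chunk size can be illustrated by scoring each predefined chunking strategy with a simple sync time model, total time ≈ data size × per-byte computation time + deduplicated data size / (Segment_size × cwnd / RTT), and picking the minimum. This is an assumption-laden sketch, not QuickSync's code: the ChunkingStrategy type, the strategy names, the per-byte costs, and the DER values are all hypothetical, and a real client would have to estimate cwnd and RTT from the live connection.

```python
from dataclasses import dataclass


@dataclass
class ChunkingStrategy:
    """One predefined chunking strategy (all numbers used below are hypothetical)."""
    name: str
    t_byte: float  # per-byte computation time, seconds per byte
    der: float     # DER: deduplicated size / original size, 0 < der <= 1


def estimated_sync_time(s: ChunkingStrategy, size: int,
                        segment_size: int, cwnd: int, rtt: float) -> float:
    """T = S * t_byte + S' / (segment_size * cwnd / rtt), with S' = S * DER."""
    throughput = segment_size * cwnd / rtt  # approximate TCP throughput, bytes/s
    comp = size * s.t_byte                  # time spent chunking and hashing
    comm = size * s.der / throughput        # time to send the deduplicated data
    return comp + comm


def select_strategy(strategies: list[ChunkingStrategy], size: int,
                    segment_size: int, cwnd: int, rtt: float) -> ChunkingStrategy:
    """Pick the strategy with the smallest estimated sync time for this file."""
    return min(strategies,
               key=lambda s: estimated_sync_time(s, size, segment_size, cwnd, rtt))


# Hypothetical strategies: cheap-but-coarse vs. costly-but-fine chunking.
STRATEGIES = [
    ChunkingStrategy("coarse", t_byte=1e-8, der=0.9),
    ChunkingStrategy("fine", t_byte=1e-7, der=0.6),
]
```

For a 40 MB file with a 1460-byte segment size, a fast link (cwnd = 200, RTT = 30 ms) gives about 4.1 s for "coarse" versus about 6.5 s for "fine", while a slow link (cwnd = 20, RTT = 600 ms) gives about 740 s versus about 497 s. This mirrors the measured trade-off between CPU time and traffic amount.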