CS471-8/14 - George Mason University Department of Computer


CS 471 - Lecture 7

Distributed Coordination

George Mason University

Fall 2009

Distributed Coordination

Time in Distributed Systems

Logical Time/Clock

Distributed Mutual Exclusion

Distributed Election

Distributed Agreement

GMU – CS 571


Time in Distributed Systems

Distributed systems have no global clock.

Algorithms for clock synchronization are useful for

• concurrency control based on timestamp ordering

• distributed transactions

Physical clocks: clock synchronization algorithms have inherent limitations.

Logical time is an alternative

• It gives the ordering of events.

Time in Distributed Systems

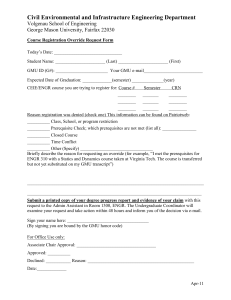

Updating a replicated database and leaving it in an inconsistent state. We need a way to ensure that the two updates are performed in the same order at each database.

Clock Synchronization Algorithms

Even clocks on different computers that start out synchronized will typically drift apart over time, because they tick at slightly different rates. (Figure: the relation between clock time and UTC (Universal Time, Coordinated) when clocks tick at different rates.)

Is it possible to synchronize all clocks in a distributed system?

Physical Clock Synchronization

Cristian’s algorithm and NTP (Network Time Protocol): periodically get information from a time server (assumed to be accurate).

Berkeley algorithm: an active time server uses polling to compute the average time.

Note that the goal is to have correct ‘relative’ time.
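A minimal sketch of the two approaches. `cristian_estimate` assumes, as Cristian's algorithm does, that the reply spent half of the measured round trip in transit; `berkeley_adjustments` is a simplified Berkeley step that averages every clock reading (the master's included) without discarding outliers or compensating for message delay. Both function names are hypothetical.

```python
def cristian_estimate(t_sent: float, server_time: float, t_received: float) -> float:
    """Cristian's algorithm: the client asks at local time t_sent, the server
    answers with its clock value, and the reply arrives at local time t_received.
    Assume the reply took half of the round trip, so add RTT/2."""
    rtt = t_received - t_sent
    return server_time + rtt / 2


def berkeley_adjustments(master_time: float, slave_times: list[float]) -> list[float]:
    """Simplified Berkeley step: the master polls every machine, averages all
    readings (its own at index 0) and returns the correction each machine
    should apply. A real deployment would also ignore outliers."""
    clocks = [master_time, *slave_times]
    average = sum(clocks) / len(clocks)
    return [average - c for c in clocks]


# Request sent at 10.0 s, server reports 100.0 s, reply received at 10.4 s.
print(cristian_estimate(10.0, 100.0, 10.4))     # about 100.2
# Clocks read 3:00, 2:50 and 3:25 (in minutes); everyone moves to 3:05.
print(berkeley_adjustments(180, [170, 205]))    # [5.0, 15.0, -20.0]
```

The Berkeley master sends each machine a correction rather than an absolute time, in line with the goal of agreeing on relative time.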

Logical Clocks

Observation: It may be sufficient that every node agrees on a current time; that time need not be ‘real’ time.

Taking this one step further, in many cases it is adequate that two systems simply agree on the order in which system events occurred.

Ordering events

In a distributed environment, processes can communicate only by messages.

Event: the occurrence of a single action that a process carries out as it executes, e.g. send, receive, or an update of internal variables/state.

For e-commerce applications, the events may be ‘client dispatched order message’ or ‘merchant server recorded transaction to log’.

Events at a single process pi can be placed in a total ordering by recording their occurrence time.

Different clock drift rates may cause problems in global event ordering.

Logical Time

The order of two events occurring at two different computers cannot be determined based on their “local” time.

Lamport proposed using logical time and logical clocks to infer the order of events (causal ordering) under certain conditions (1978).

The notion of logical time/clock is fairly general and constitutes the basis of many distributed algorithms.

“Happened Before” relation

Lamport defined a “happened before” relation (→) to capture the causal dependencies between events.

A → B, if A and B are events in the same process and A occurred before B.

A → B, if A is the event of sending a message m in a process and B is the event of the receipt of the same message m by another process.

If A → B and B → C, then A → C (the happened-before relation is transitive).

“Happened Before” relation

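(Figure: events a and b at p1, c and d at p2, e and f at p3, plotted against physical time; message m1 goes from b to c and message m2 from d to f.)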

a → b (at p1), c → d (at p2); b → c; also d → f.

Not all events are related by the “→” relation (it is a partial order). Consider a and e: they occur at different processes and there is no chain of messages to relate them.

If events are not related by “→”, they are said to be concurrent (written as a || e).

Logical Clocks

In order to implement the “happened-before” relation, we introduce a system of logical clocks.

A logical clock is a monotonically increasing software counter. It need not relate to a physical clock.

Each process pi has a logical clock Li, which can be used to apply logical timestamps to events.

Logical Clocks (Update Rules)

LC1: Li is incremented by 1 before each internal event at process pi.

LC2: (Applies to send and receive)

• When process pi sends message m, it increments Li by 1 and the message is assigned a timestamp t = Li.

• When pj receives (m, t), it first sets Lj to max(Lj, t) and then increments Lj by 1 before timestamping the event receive(m).
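Rules LC1 and LC2 translate directly into a small counter class. The sketch below (the class name `LamportClock` and its method names are assumptions, not from the slides) replays the p1/p2/p3 example that follows and reproduces its timestamps.

```python
class LamportClock:
    """Logical clock L_i of one process, following rules LC1 and LC2."""

    def __init__(self) -> None:
        self.time = 0  # every clock starts at zero

    def internal_event(self) -> int:
        # LC1: increment before each internal event
        self.time += 1
        return self.time

    def send(self) -> int:
        # LC2 (send): increment, then piggyback t = L_i on the message
        self.time += 1
        return self.time

    def receive(self, t: int) -> int:
        # LC2 (receive): L_j = max(L_j, t), then increment before timestamping
        self.time = max(self.time, t) + 1
        return self.time


p1, p2, p3 = LamportClock(), LamportClock(), LamportClock()
a = p1.internal_event()   # 1
b = p1.send()             # 2, carried by m1
c = p2.receive(b)         # max(0, 2) + 1 = 3
d = p2.send()             # 4, carried by m2
e = p3.internal_event()   # 1
f = p3.receive(d)         # max(1, 4) + 1 = 5
print(a, b, c, d, e, f)   # 1 2 3 4 1 5
```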

Logical Clocks (Cont.)

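(Figure: the events of the happened-before example with their Lamport timestamps: a = 1 and b = 2 at p1, c = 3 and d = 4 at p2, e = 1 and f = 5 at p3; messages m1 and m2 as before, against physical time.)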

Each of p1, p2, p3 has its logical clock initialized to zero.

The indicated clock values are those immediately after the events.

For m1, 2 is piggybacked and c gets max(0, 2) + 1 = 3.

Logical Clocks

(a) Three processes, each with its own clock. The clocks run at different rates.

(b) As messages are exchanged, the logical clocks are corrected.


Logical Clocks (Cont.)

e → e’ implies L(e) < L(e’).

The converse is not true: L(e) < L(e’) does not imply e → e’ (e.g. L(b) > L(e), but b || e).

In other words, in Lamport’s system we can guarantee that if L(e) < L(e’), then e’ did not happen before e. But we cannot tell, just by looking at the timestamps, whether e “happened-before” e’ or they are concurrent.

Logical Clocks (Cont.)

Lamport’s “happened before” relation defines an irreflexive partial order among the events in the distributed system.

Some applications require that a total order be imposed on all the events.

We can obtain a total order by using the clock values at different processes as the criterion for the ordering and breaking ties by considering process indices when necessary.
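A minimal sketch of the tie-breaking rule, assuming each event is stamped with a (clock value, process index) pair; the names `Stamp` and `precedes` are illustrative. Comparing the pairs lexicographically gives a total order that is consistent with happened-before, although the relative order it assigns to concurrent events is arbitrary.

```python
from typing import NamedTuple


class Stamp(NamedTuple):
    clock: int  # Lamport clock value L(e)
    pid: int    # index of the process at which e occurred


def precedes(x: Stamp, y: Stamp) -> bool:
    """Total order: compare clock values first, break ties by process index."""
    return (x.clock, x.pid) < (y.clock, y.pid)


events = [Stamp(3, 2), Stamp(1, 3), Stamp(3, 1), Stamp(2, 1)]
print(sorted(events, key=lambda s: (s.clock, s.pid)))
# [Stamp(clock=1, pid=3), Stamp(clock=2, pid=1), Stamp(clock=3, pid=1), Stamp(clock=3, pid=2)]
```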

Logical Clocks

The positioning of Lamport’s logical clocks in distributed systems.


An Application:

Totally-Ordered Multicast

Consider a group of n distributed processes.

At any time, m ≤ n processes may be multicasting “update” messages to each other.

• The parameter m is not known to individual nodes.

How can we devise a solution to guarantee that all the updates are performed in the same order by all the processes, despite variable network latency?

Assumptions

• No messages are lost (reliable delivery).

• Messages from the same sender are received in the order they were sent (FIFO).

• A copy of each message is also sent to the sender.

An Application of

Totally-Ordered Multicast

Updating a replicated database and leaving it in an inconsistent state.

Totally-Ordered Multicast (Cont.)

Each multicast message is always time-stamped with the current (logical) time of its sender.

When a (kernel) process receives a multicast update request:

• It first puts the message into a local queue instead of directly delivering it to the application (i.e. instead of blindly updating the local database). The local queue is ordered according to the timestamps of update-request messages.

• It also multicasts an acknowledgement to all other processes (naturally, with a timestamp of higher value).

An Application:

Totally-Ordered Multicast (Cont.)

Local Database Update Rule

A process can deliver a queued message to the application it is running (i.e. the local database can be updated) only when that message is at the head of the local queue and has been acknowledged by all other processes.

An Application:

Totally-Ordered Multicast (Cont.)

For example: process 1 and process 2 each want to perform an update.

1. Process 1 sends update requests with timestamp tx to itself and process 2.

2. Process 2 sends update requests with timestamp ty to itself and process 1.

3. When each process receives the requests, it puts them on its queue in timestamp order. If tx = ty, then process 1’s request is first. NOTE: the queues will be identical.

4. Each sends out acks to the other (with larger timestamps).

5. The same request will be at the front of each queue. Once all the acks for the request at the front of the queue are received, its update is processed and removed.
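The queue-and-acknowledge scheme can be simulated for the two-process example. This is a sketch under the slides' assumptions (reliable FIFO delivery, each request also delivered to its sender); the `Replica` class and its methods are hypothetical, every member including the receiver itself acknowledges each request, and the timestamps carried by acknowledgements are not modelled.

```python
import heapq


class Replica:
    """One member of a totally-ordered multicast group (sketch)."""

    def __init__(self, pid: int, group_size: int) -> None:
        self.pid = pid
        self.group_size = group_size
        self.clock = 0                               # Lamport clock
        self.queue: list[tuple[int, int]] = []       # heap of (timestamp, sender)
        self.payload: dict[tuple[int, int], str] = {}
        self.acks: dict[tuple[int, int], set[int]] = {}
        self.applied: list[str] = []                 # updates applied to the local DB

    def stamp_new_request(self) -> int:
        self.clock += 1                              # sending is an event
        return self.clock

    def on_request(self, ts: int, sender: int, update: str) -> None:
        self.clock = max(self.clock, ts) + 1
        key = (ts, sender)                           # ties broken by sender index
        heapq.heappush(self.queue, key)
        self.payload[key] = update
        self.acks.setdefault(key, set())
        self._deliver_ready()

    def on_ack(self, key: tuple[int, int], from_pid: int) -> None:
        self.acks.setdefault(key, set()).add(from_pid)
        self._deliver_ready()

    def _deliver_ready(self) -> None:
        # Update rule: apply the head of the queue only once everyone has acked it.
        while self.queue and len(self.acks[self.queue[0]]) == self.group_size:
            key = heapq.heappop(self.queue)
            self.applied.append(self.payload.pop(key))


r1, r2 = Replica(1, 2), Replica(2, 2)
tx = r1.stamp_new_request()
ty = r2.stamp_new_request()
r1.on_request(tx, 1, "deposit $100")      # each replica sees its own request first
r2.on_request(ty, 2, "add 1% interest")
r1.on_request(ty, 2, "add 1% interest")
r2.on_request(tx, 1, "deposit $100")
for key in [(tx, 1), (ty, 2)]:
    for acker in (1, 2):
        r1.on_ack(key, acker)
        r2.on_ack(key, acker)
print(r1.applied == r2.applied, r1.applied)
```

Even though the two replicas enqueue the requests in different orders, both apply them in timestamp order; with tx = ty = 1 the tie goes to process 1.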

Why can’t this scenario happen?

At DB 1: received request1 and request2 with timestamps 4 and 5, as well as acks from ALL processes for request1. DB1 performs request1.

At DB 2: received request2 with timestamp 5 and acks from ALL processes for request2, but not request1 with timestamp 4. DB2 performs request2.

This violates the FIFO assumption of totally-ordered multicast: DB1 sent request1 before its ack for request2, so request1 must already have been received by DB2 for DB2 to have received that ack.

(Figure: DB1 multicasts 4: request1 and DB2 multicasts 5: request2; DB1 then sends 6: ack, which DB2 receives with a timestamp > 6, while request1 is shown as not having reached DB2 (??).)

Distributed Mutual Exclusion (DME)

Assumptions

• The system consists of n processes; each process Pi resides at a different processor.

• For the sake of simplicity, we assume that there is only one critical section that requires mutual exclusion.

• Message delivery is reliable.

• Processes do not fail (we will later discuss the implications of relaxing this).

The application-level protocol for executing a critical section proceeds as follows:

• Enter(): enter the critical section (CS); block if necessary.

• ResourceAccess(): access shared resources in the CS.

• Exit(): leave the CS; other processes may now enter.

DME Requirements

Safety (Mutual Exclusion): At most one process may execute in the critical section at a time.

Bounded-waiting: Requests to enter and exit the critical section eventually succeed.

Evaluating DME Algorithms

Performance criteria

The bandwidth consumed, which is proportional to the number of messages sent in each entry and exit operation.

The synchronization delay between one process exiting the CS and the next process entering it.

• When evaluating the synchronization delay, we should think of the scenario in which a process Pa is in the CS, and Pb, which is waiting, is the next process to enter.

• The maximum delay between Pa’s exit and Pb’s entry is called the “synchronization delay”.

DME: The Centralized Server Algorithm

One of the processes in the system is chosen to coordinate entry to the critical section.

A process that wants to enter its critical section sends a request message to the coordinator.

The coordinator decides which process can enter the critical section next, and it sends that process a reply message.

When the process receives a reply message from the coordinator, it enters its critical section.

After exiting its critical section, the process sends a release message to the coordinator and proceeds with its execution.
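A sketch of the coordinator's side of the protocol, assuming it queues deferred requests in FIFO order (the slides do not fix a queueing policy; FIFO is one choice that gives bounded waiting). The `Coordinator` class is hypothetical, and message passing is reduced to method calls.

```python
from collections import deque
from typing import Optional


class Coordinator:
    """Central-server mutual exclusion: grants the CS to one process at a time
    and queues the other requests in FIFO order."""

    def __init__(self) -> None:
        self.holder: Optional[int] = None   # process currently in the CS
        self.waiting: deque[int] = deque()  # requests that got no reply yet

    def request(self, pid: int) -> bool:
        """Handle a request message; True means a reply (grant) is sent now."""
        if self.holder is None:
            self.holder = pid
            return True
        self.waiting.append(pid)            # no reply: the requester blocks
        return False

    def release(self, pid: int) -> Optional[int]:
        """Handle a release message; return the process that now gets the reply."""
        if pid != self.holder:
            raise ValueError(f"process {pid} does not hold the critical section")
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder


coord = Coordinator()
print(coord.request(1))   # True: process 1 enters
print(coord.request(2))   # False: the coordinator does not reply to process 2
print(coord.release(1))   # 2: the coordinator now replies to process 2
```

Every entry costs a request and a reply and every exit a release, which is where the three messages counted for this scheme come from.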

Mutual Exclusion:

A Centralized Algorithm

(a) Process 1 asks the coordinator for permission to enter a critical region. Permission is granted.

(b) Process 2 then asks permission to enter the same critical region. The coordinator does not reply.

(c) When process 1 exits the critical region, it tells the coordinator, which then replies to 2.

DME: The Central Server Algorithm

(Cont.)

Safety?

Bounded waiting?

This scheme requires three messages per critical-section entry and exit:

• Entering the critical section, even when no process is currently in the CS, takes two messages (request and reply).

• Exiting the critical section takes one release message.

The synchronization delay for this algorithm is the time taken for a round-trip message (release to the coordinator, then reply to the next process).

Problems?

DME: Token-Passing Algorithms

A number of DME algorithms are based on token-passing.

A single token is passed among the nodes.

A node that wants to enter the critical section needs to possess the token.

Algorithms in this class differ in terms of the logical topology they assume, run-time message complexity and delay.

DME – Ring-Based Algorithm

Idea: Arrange the processes in a logical ring.

The ring topology may be unrelated to the physical interconnections between the underlying computers.

Each process pi has a communication channel to the next process in the ring, p(i+1) mod N.

A single token is passed from process to process over the ring in a single direction.

Only the process that has the token can enter the critical section.

DME – Ring-Based Algorithm (Cont.)

(Figure: processes p1, p2, p3, p4, …, pn arranged in a logical ring, with the token circulating among them.)

DME – Ring-Based Algorithm (Cont.)

If a process does not want to enter the CS when it receives the token, it immediately forwards the token to its neighbor.

A process that requests the token waits until it receives it, then retains it and enters the CS.

To exit the CS, the process sends the token to its neighbor.

Requirements:

• Safety?

• Bounded-waiting?
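The ring algorithm can be simulated to count token hops. In this sketch (the function `ring_mutex` is hypothetical) positions are numbered 0 to n-1, each requester enters the CS once, and the simulation stops once all requests are served, whereas a real ring keeps circulating the token.

```python
def ring_mutex(n: int, holder: int, requesters: set[int]) -> list[tuple[int, int]]:
    """Simulate token passing on a unidirectional ring p0 -> p1 -> ... -> p(n-1) -> p0.

    `holder` starts with the token; every process in `requesters` wants the CS once.
    Returns (pid, hops) for each CS entry in order, where hops is the number of
    token messages sent since the previous exit (or since the start).
    """
    if not all(0 <= p < n for p in requesters):
        raise ValueError("requesters must be ring positions 0..n-1")
    pending = set(requesters)
    entries = []
    pos, hops = holder, 0
    while pending:
        if pos in pending:            # wants the CS: keep the token and enter
            entries.append((pos, hops))
            pending.discard(pos)
            hops = 0                  # exiting the CS = forwarding the token
        pos = (pos + 1) % n           # forward the token to the neighbour
        hops += 1
    return entries


print(ring_mutex(5, holder=0, requesters={3, 1}))   # [(1, 1), (3, 2)]
print(ring_mutex(5, holder=1, requesters={0}))      # [(0, 4)]
```

The second call shows the worst case: the requester sits just behind the token holder, so the token needs N-1 hops to reach it.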

DME – Ring-Based Algorithm (Cont.)

Performance evaluation

• Entering a critical section may require between 0 and N messages.

• Exiting a critical section requires only one message.

• The synchronization delay between one process’ exit from the CS and the next process’ entry is anywhere from 1 to N-1 message transmissions.

Another token-passing algorithm

(Figure: processes p1 to p6 arranged in a line; the token travels in Token Direction 1 and then back in Token Direction 2.)

Nodes are arranged as a logical linear tree.

The token is passed from one end to the other through multiple hops.

When the token reaches one end of the tree, its direction is reversed.

A node that wants to enter the CS waits for the token; when it receives the token, it holds it and enters the CS.

DME - Ricart-Agrawala Algorithm [1981]

A Distributed Mutual Exclusion (DME) algorithm based on logical clocks.

Processes that want to enter a critical section multicast a request message, and can enter only when all other processes have replied to this message.

The algorithm requires that each message include its logical timestamp. To obtain a total ordering of logical timestamps, ties are broken in favor of processes with smaller indices.

DME – Ricart-Agrawala Algorithm (Cont.)

Requesting the Critical Section

• When a process Pi wants to enter the CS, it sends a timestamped REQUEST message to all processes.

• When a process Pj receives a REQUEST message from process Pi, it sends a REPLY message to process Pi if

Process Pj is neither requesting nor using the CS, or

Process Pj is requesting and Pi’s request’s timestamp is smaller than process Pj’s own request’s timestamp.

(If neither of these two conditions holds, the request is deferred.)

DME – Ricart-Agrawala Algorithm (Cont.)

Executing the Critical Section:

Process Pi enters the CS after it has received REPLY messages from all other processes.

Releasing the Critical Section:

When process Pi exits the CS, it sends REPLY messages to all deferred requests.

Observe: A process’ REPLY message is blocked only by processes that are requesting the CS with higher priority (smaller timestamp). When a process sends out REPLY messages to all the deferred requests, the process with the next highest priority request receives the last needed REPLY message and enters the CS.
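The REPLY/defer rule can be captured in a small state machine. This sketch uses hypothetical names (`RAProcess`, `State`), represents request timestamps as (Lamport clock, pid) pairs so that ties favour smaller indices, and leaves message transport to the caller. The example replays the scenario that follows, in which processes 0 and 2 request at the same moment.

```python
from enum import Enum
from typing import Optional


class State(Enum):
    RELEASED = "released"   # neither requesting nor using the CS
    WANTED = "wanted"       # requesting the CS
    HELD = "held"           # inside the CS


class RAProcess:
    """Ricart-Agrawala bookkeeping for one of n processes (sketch)."""

    def __init__(self, pid: int, n: int) -> None:
        self.pid, self.n = pid, n
        self.clock = 0
        self.state = State.RELEASED
        self.my_request: Optional[tuple[int, int]] = None
        self.replies: set[int] = set()
        self.deferred: list[int] = []

    def request_cs(self) -> tuple[int, int]:
        """Start requesting; the returned stamp goes out in a REQUEST to all others."""
        self.clock += 1
        self.state = State.WANTED
        self.my_request = (self.clock, self.pid)
        self.replies = set()
        return self.my_request

    def on_request(self, stamp: tuple[int, int]) -> bool:
        """Handle a REQUEST from process stamp[1]; True means REPLY immediately."""
        self.clock = max(self.clock, stamp[0]) + 1
        must_defer = self.state is State.HELD or (
            self.state is State.WANTED and self.my_request < stamp)
        if must_defer:
            self.deferred.append(stamp[1])   # answered later, in release_cs()
            return False
        return True

    def on_reply(self, sender: int) -> None:
        self.replies.add(sender)
        if self.state is State.WANTED and len(self.replies) == self.n - 1:
            self.state = State.HELD          # every other process has replied

    def release_cs(self) -> list[int]:
        """Leave the CS; return the deferred processes that now get a REPLY."""
        self.state = State.RELEASED
        self.my_request = None
        to_reply, self.deferred = self.deferred, []
        return to_reply


procs = [RAProcess(i, 3) for i in range(3)]
s0, s2 = procs[0].request_cs(), procs[2].request_cs()   # (1, 0) and (1, 2)
for sender, stamp in ((0, s0), (2, s2)):
    for p in procs:
        if p.pid != sender and p.on_request(stamp):
            procs[sender].on_reply(p.pid)
print(procs[0].state.name, procs[2].state.name)         # HELD WANTED
for pid in procs[0].release_cs():                       # process 0 sends its deferred OK
    procs[pid].on_reply(0)
print(procs[2].state.name)                              # HELD
```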

Distributed Mutual Exclusion: Ricart/Agrawala

(a) Processes 0 and 2 want to enter the same critical region at the same moment.

(b) Process 0 has the lowest timestamp, so it wins.

(c) When process 0 is done, it sends an OK also, so 2 can now enter the critical region.

DME – Ricart-Agrawala Algorithm (Cont.)

The algorithm satisfies the Safety requirement.

• Suppose two processes Pi and Pj enter the CS at the same time. Then both of these processes must have replied to each other while both were requesting. But since all the timestamps are totally ordered, one of the two requests has the smaller timestamp, and its owner would have deferred the other request; so this is impossible.

Bounded-waiting?

DME – Ricart-Agrawala Algorithm (Cont.)

Gaining CS entry takes 2(N – 1) messages in this algorithm: N – 1 REQUEST messages and N – 1 REPLY messages.

The synchronization delay is only one message transmission time.

The performance of the algorithm can be further improved.

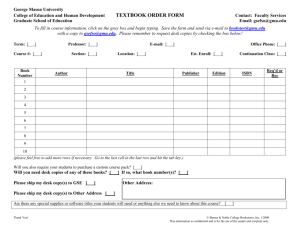

Comparison

| Algorithm | Messages per entry/exit | Delay before entry (in message times) | Problems |
|---|---|---|---|
| Centralized | 3 | 2 | Coordinator crash |
| Distributed (Ricart/Agrawala) | 2(n – 1) | 2(n – 1) | Crash of any process |
| Token ring | 1 to ∞ | 0 to n – 1 | Lost token, process crash |

A comparison of three mutual exclusion algorithms.

Election Algorithms

Many distributed algorithms employ a coordinator process that performs functions needed by the other processes in the system, such as:

• enforcing mutual exclusion

• maintaining a global wait-for graph for deadlock detection

• replacing a lost token

• controlling an input/output device in the system

If the coordinator process fails due to the failure of the site at which it resides, a new coordinator must be selected through an election algorithm.

Election Algorithms (Cont.)

We say that a process calls the election if it takes an action that initiates a particular run of the election algorithm.

• An individual process does not call more than one election at a time.

• N processes could call N concurrent elections.

At any point in time, a process pi is either a participant or a non-participant in some run of the election algorithm.

The identity of the newly elected coordinator must be unique, even if multiple processes call the election concurrently.

Election Algorithms (Cont.)

Election algorithms assume that a unique priority number Pri(i) is associated with each active process pi in the system. Larger numbers indicate higher priorities.

Without loss of generality, we require that the elected process be the one with the largest identifier.

How do we determine identifiers?

Ring-Based Election Algorithm

Chang and Roberts (1979)

During the execution of the algorithm, the processes exchange messages on a unidirectional logical ring (assume clockwise communication).

Assumptions:

• No failures occur during the execution of the algorithm.

• Message delivery is reliable.

Ring-Based Election Algorithm (Cont.)

Initially, every process is marked as a non-participant in an election.

Any process can begin an election (if, for example, it discovers that the current coordinator has failed).

It proceeds by marking itself as a participant, placing its identifier in an election message and sending it to its clockwise neighbor.

Ring-Based Election Algorithm (Cont.)

When a process receives an election message, it compares the identifier in the message with its own.

• If the arrived identifier is greater, then it forwards the message to its neighbor. It also marks itself as a participant.

• If the arrived identifier is smaller and the receiver is not a participant, then it substitutes its own identifier in the election message and forwards it. It also marks itself as a participant.

• If the arrived identifier is smaller and the receiver is a participant, it does not forward the message.

• If the arrived identifier is that of the receiver itself, then this process’ identifier must be the largest, and it becomes the coordinator.

Ring-Based Election Algorithm (Cont.)

The coordinator marks itself as a non-participant once more and sends an elected message to its neighbor, announcing its election and enclosing its identity.

When a process pi receives an elected message, it marks itself as a non-participant, sets its variable coordinator-id to the identifier in the message and, unless it is the new coordinator, forwards the message to its neighbor.

Ring-Based Election Algorithm (Example)

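(Figure: a unidirectional ring of processes with identifiers 3, 17, 4, 24, 9, 1, 15 and 28; the election message in transit carries identifier 24.)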

Note: The election was started by process 17. The highest process identifier encountered so far is 24. Participant processes are shown darkened.

Ring-Based Election Algorithm (Cont.)

Observe:

• Only one coordinator is selected and all the processes agree on its identity.

• The non-participant and participant states are used so that messages arising when another process starts another election at the same time are extinguished as soon as possible.

If only a single process starts an election, the worst case is when its anti-clockwise neighbor has the highest identifier. It then requires 3N – 1 messages: N – 1 election messages to reach that neighbor, N more for its identifier to travel once around the ring, and N elected messages.
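A message-level simulation of the Chang and Roberts rules, assuming ring positions are numbered 0 to n-1 with position (i + 1) mod n as the clockwise neighbour; the function name `ring_election` is hypothetical, and the identifiers in the first usage line are taken from the example slide in an assumed ring order. It also counts messages, which makes the 3N – 1 worst case easy to check.

```python
from collections import deque


def ring_election(ids: list[int], initiators: list[int]) -> tuple[list, int]:
    """Chang and Roberts election on a unidirectional (clockwise) ring.

    ids[i] is the identifier at ring position i. All initiators start at the
    same time. Returns (coordinator-id recorded by each process, messages sent).
    """
    n = len(ids)
    participant = [False] * n
    coordinator: list = [None] * n
    in_flight = deque()                      # (destination, kind, identifier)
    messages = 0

    def send(src: int, kind: str, ident: int) -> None:
        nonlocal messages
        messages += 1
        in_flight.append(((src + 1) % n, kind, ident))

    for i in initiators:
        participant[i] = True
        send(i, "election", ids[i])

    while in_flight:
        pos, kind, ident = in_flight.popleft()
        if kind == "election":
            if ident > ids[pos]:             # larger id: pass it on
                participant[pos] = True
                send(pos, "election", ident)
            elif ident < ids[pos]:
                if not participant[pos]:     # substitute own id and pass it on
                    participant[pos] = True
                    send(pos, "election", ids[pos])
                # a participant swallows the smaller id
            else:                            # own id came back: it is the largest
                participant[pos] = False
                coordinator[pos] = ident
                send(pos, "elected", ident)
        else:                                # "elected" message
            participant[pos] = False
            coordinator[pos] = ident
            if ident != ids[pos]:            # stop once it returns to the winner
                send(pos, "elected", ident)
    return coordinator, messages


ids = [3, 17, 4, 24, 9, 1, 15, 28]
print(ring_election(ids, initiators=[1]))    # every process records 28
print(ring_election([10, 20, 30, 40], [0]))  # ([40, 40, 40, 40], 11)
```

In the second call the initiator's anti-clockwise neighbour holds 40, so the count is 11 = 3 · 4 – 1.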

The Bully Algorithm

Garcia-Molina (1982).

Unlike the ring-based algorithm:

• It allows crashes during the algorithm execution.

• It assumes that each process knows which processes have higher identifiers, and that it can communicate with all such processes directly.

• It assumes that the system is synchronous (it uses timeouts to detect process failures).

It also assumes reliable message delivery.

The Bully Algorithm (Cont.)

A process begins an election when it notices, through timeouts, that the coordinator has failed.

Three types of messages

• An election message is sent to announce an election.

• An answer message is sent in response to an election message.

• A coordinator message is sent to announce the identity of the elected process (the “new coordinator”).

The Bully Algorithm (Cont.)

How can we construct a reliable failure detector?

Assume there is a maximum message transmission delay $T_{trans}$ and a maximum message processing delay $T_{process}$.

If a process does not receive a reply within $T = 2T_{trans} + T_{process}$ (one transmission each way plus the processing at the recipient), then it can infer that the intended recipient has failed, and an election will be needed.

The Bully Algorithm (Cont.)

The process that knows it has the highest identifier can elect itself as the coordinator simply by sending a coordinator message to all processes with lower identifiers.

A process with a lower identifier begins an election by sending an election message to those processes that have a higher identifier and awaits an answer message in response.

• If none arrives within time T, the process considers itself the coordinator and sends a coordinator message to all processes with lower identifiers.

• If a reply arrives, the process waits a further period T’ for a coordinator message to arrive from the new coordinator. If none arrives, it begins another election.

The Bully Algorithm (Cont.)

If a process receives an election message, it sends back an answer message and begins another election (unless it has begun one already).

If a process receives a coordinator message, it sets its variable coordinator-id to the identifier of the coordinator contained within it.
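A synchronous sketch of the bully algorithm: crashed processes are simply those absent from `alive`, so a missing answer stands for an expired timeout T. Crashes during the election (such as p3 failing in the example that follows) and the second timeout T’ are not modelled, and the function name `bully_election` is hypothetical.

```python
def bully_election(initiator: int, ids: list[int], alive: set[int]):
    """Synchronous sketch of the bully algorithm.

    Returns (coordinator, trace), where trace lists the
    (kind, sender, receiver) messages in the order they are sent.
    """
    if initiator not in alive:
        raise ValueError("a crashed process cannot start an election")
    trace: list[tuple[str, int, int]] = []
    started: set[int] = set()
    pending = [initiator]
    coordinator = None
    while pending:
        p = pending.pop(0)
        if p in started:                 # it has begun an election already
            continue
        started.add(p)
        got_answer = False
        for q in sorted(i for i in ids if i > p):
            trace.append(("election", p, q))
            if q in alive:
                trace.append(("answer", q, p))
                got_answer = True
                pending.append(q)        # q answers and starts its own election
        if not got_answer:               # timeout T expired: p has the highest live id
            coordinator = p
    for q in sorted(i for i in ids if i < coordinator):
        trace.append(("coordinator", coordinator, q))
    return coordinator, trace


# p4 has crashed and p1 notices first.
winner, trace = bully_election(1, [1, 2, 3, 4], alive={1, 2, 3})
print(winner)                                           # 3
print(sum(kind == "election" for kind, _, _ in trace))  # 6
```

Marking p3 as crashed as well (alive = {1, 2}) reproduces the final outcome of the example, with p2 as coordinator.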

The Bully Algorithm: Example

(Figure, stage 1: p1 sends election messages to the processes with higher identifiers, and p2 and p3 answer; C marks the coordinator, p4.)

• P1 starts the election once it notices that P4 has failed.

• Both P2 and P3 answer.

The election of coordinator p2, after the failure of p4 and then p3

The Bully Algorithm: Example

(Figure, stage 2: p2 and p3 send election messages to the processes with higher identifiers; p3 answers p2.)

• P1 waits, since it knows it cannot be the coordinator.

• Both P2 and P3 start elections.

• P3 immediately answers P2 but needs to wait on P4.

• Once P4 times out, P3 knows it can be the coordinator, but…


The Bully Algorithm (Example)

(Figure, stage 3: processes p1 to p4, showing a timeout.)

• If P3 fails before sending its coordinator message, P1 will eventually start a new election, since it has not heard about a new coordinator.

The Bully Algorithm (Example)

Eventually…

(Figure: p2 sends coordinator messages and becomes the coordinator C.)

The Bully Algorithm (Cont.)

What happens if a crashed process recovers and immediately initiates an election?

If it has the highest process identifier (for example P4 in the previous slide), then it will decide that it is the coordinator and may choose to announce this to other processes.

• It will become the coordinator, even though the current coordinator is functioning (hence the name “bully”).

• This may take place concurrently with the sending of a coordinator message by another process which has previously detected the crash.

• Since there are no guarantees on message delivery order, the recipients of these messages may reach different conclusions regarding the id of the coordinator process.

The Bully Algorithm (Cont.)

Similarly, if the timeout values are inaccurate (that is, if the failure detector is unreliable), then a process with a large identifier but slow response may cause problems.

Algorithm’s performance:

• Best case: the process with the second-largest identifier notices the coordinator’s failure: N – 2 messages.

• Worst case: the process with the smallest identifier notices the failure: $O(N^2)$ messages.

Election in Wireless environments (1)

Traditional election algorithms assume that communication is reliable and that the topology does not change.

This is typically not the case in many wireless environments.

Vasudevan [2004]: elect the ‘best’ node in ad hoc networks.

Election in Wireless environments (1)

1. To elect a leader, any node in the network can start an election by sending an ELECTION message to all nodes in its range.

2. When a node receives an ELECTION for the first time, it chooses the sender as its parent and sends the message to all nodes in its range except the parent.

3. When a node later receives additional ELECTION messages from a non-parent, it merely acknowledges the message.

4. Once a node has received acknowledgements from all neighbors except its parent, it sends an acknowledgement to the parent. This acknowledgement will contain information about the best leader candidate based on resource information of neighbors.

5. Eventually the node that started the election will get all this information and use it to decide on the best leader; this information can be passed back to all nodes.
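The build-tree and reporting phases can be sketched as a flood followed by a bottom-up aggregation. This sketch assumes a static topology, processes the flood in breadth-first order (so each node's parent is the first neighbour whose ELECTION it sees under that order), and ranks candidates by a single capacity number; the function name `wireless_election` and the five-node network are made up for illustration.

```python
from collections import deque


def wireless_election(neighbours: dict[str, list[str]],
                      capacity: dict[str, int], source: str):
    """Tree-based leader election (sketch). Returns (leader, parent map)."""
    # Build-tree phase: ELECTION floods outward from the source.
    parent = {source: None}
    order = [source]                      # nodes in the order they joined the tree
    frontier = deque([source])
    while frontier:
        u = frontier.popleft()
        for v in neighbours[u]:
            if v not in parent:           # first ELECTION seen by v: u is its parent
                parent[v] = u
                order.append(v)
                frontier.append(v)
            # otherwise v merely acknowledges u's ELECTION
    # Reporting phase: each node tells its parent the best candidate in its subtree.
    best = {u: u for u in order}
    for u in reversed(order):             # children report before their parents
        p = parent[u]
        if p is not None and capacity[best[u]] > capacity[best[p]]:
            best[p] = best[u]
    return best[source], parent


links = {"a": ["b", "c"], "b": ["a", "d"], "c": ["a", "d"],
         "d": ["b", "c", "e"], "e": ["d"]}
cap = {"a": 4, "b": 2, "c": 7, "d": 3, "e": 9}
leader, tree = wireless_election(links, cap, source="a")
print(leader, tree)   # e {'a': None, 'b': 'a', 'c': 'a', 'd': 'b', 'e': 'd'}
```

Passing the chosen leader back down the tree (the last part of step 5) is left out of the sketch.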

Elections in Wireless Environments (2)

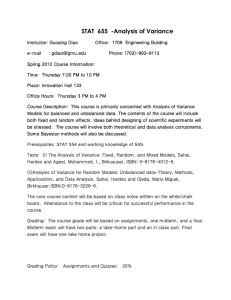

Election algorithm in a wireless network, with node a as the source. (a) Initial network. (b) The build-tree phase.

Elections in Wireless Environments (3)

The build-tree phase


Elections in Wireless Environments (4)

The build-tree phase and reporting of the best node to the source.

Reaching Agreement

There are applications where a set of processes wish to agree on a common “value”.

Such agreement may not take place due to:

• An unreliable communication medium

• Faulty processes: processes may send garbled or incorrect messages to other processes, and a subset of the processes may collaborate with each other in an attempt to defeat the scheme.

Agreement with Unreliable Communications

Process Pi at site A sends a message to process Pj at site B.

To proceed, Pi needs to know if Pj has received the message.

Pi can detect transmission failures using a timeout scheme.

Agreement with Unreliable Communications

Suppose that Pj also needs to know that Pi has received its acknowledgment message, in order to decide how to proceed.

Example: Two-army problem

Two armies need to coordinate their action (“attack” or “do not attack”) against the enemy.

• If only one of them attacks, defeat is certain.

• If both of them attack simultaneously, they will win.

• The communication medium is unreliable, so they use acknowledgements.

• One army sends an “attack” message to the other. Can it initiate the attack?

Agreement with Unreliable Communication

(Figure: Blue Army #1 and Blue Army #2 communicate by messenger; the Red Army is the enemy.)

The two-army problem

Agreement with Unreliable Communications

In the presence of an unreliable communication medium, two parties can never reach an agreement, no matter how many acknowledgements they send.

• Assume that there is some agreement protocol that terminates in a finite number of steps. Remove any extra steps at the end to obtain the minimum-length protocol that works.

• Some message is now the last one, and it is essential to the agreement. However, the sender of this last message does not know whether the other party received it or not.

It is not possible in a distributed environment for processes Pi and Pj to agree completely on their respective states with 100% certainty in the face of unreliable communication, even with non-faulty processes.

Reaching Agreement with Faulty Processes

Many things can go wrong…

Communication

• Message transmission can be unreliable.

• The time taken to deliver a message is unbounded.

• An adversary can intercept messages.

Processes

• They can fail or team up to produce wrong results.

Agreement is very hard, sometimes impossible, to achieve!

Agreement in Faulty Systems - 5

A system of N processes, where each process i will provide a value vi to each other process. Some number of these processes may be incorrect (or malicious).

Goal: each process learns the true values sent by each of the correct processes.

The Byzantine agreement problem for three nonfaulty and one faulty process.

Byzantine Agreement Problem

Three or more generals are to agree to attack or retreat. Each of them issues a vote. Every general decides to attack or retreat based on the votes.

But one or more generals may be “faulty”: they may supply wrong information to different peers at different times.

Devise an algorithm to make sure that:

• The “correct” generals agree on the same decision at the end (attack or retreat).

Lamport, Shostak, Pease. The Byzantine Generals Problem. ACM TOPLAS 4(3), July 1982, 382-401.

Impossibility Results

(Figure: two three-general scenarios in which General 1 sends attack or retreat to Generals 2 and 3, who relay the orders they received to each other.)

No solution for three processes can handle a single traitor.

In a system with m faulty processes, agreement can be achieved only if at least 2m + 1 processes (more than two-thirds of the total) are functioning correctly.

Byzantine General Problem: Oral Messages Algorithm

(Figure: P1 sends its value, 1, to P2, P3 and P4.)

Phase 1: Each process sends its value (troop strength) to the other processes. Correct processes send the same (correct) value to all. Faulty processes may send different values to each, if desired (or no message).

Assumptions: (1) Every message that is sent is delivered correctly; (2) the receiver of a message knows who sent it; (3) the absence of a message can be detected.

Byzantine General Problem

Phase 1: Generals announce their troop strengths to each other

(Figure: P2 sends its value, 2, to P1, P3 and P4.)

Byzantine General Problem

Phase 1: Generals announce their troop strengths to each other

(Figure: P4 sends its value, 4, to P1, P2 and P3.)

Byzantine General Problem

Phase 2: Each process uses the messages to create a vector of responses; there must be a default value for missing messages.

Each general constructs a vector with all troop strengths.

(Figure: the vectors of the correct generals: P1 has (1, 2, x, 4), P2 has (1, 2, y, 4) and P4 has (1, 2, z, 4), where x, y and z are the different values sent by the faulty P3.)

Byzantine General Problem

Phase 3: Each process sends its vector to all other processes.

Phase 4: Each process uses the information received from every other process to do its computation.

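(Figure: the vectors received in phase 3. P1 receives (1, 2, y, 4) from P2, (a, b, c, d) from P3 and (1, 2, z, 4) from P4; P2 receives (1, 2, x, 4) from P1, (e, f, g, h) from P3 and (1, 2, z, 4) from P4; P4 receives (1, 2, x, 4) from P1, (1, 2, y, 4) from P2 and (h, i, j, k) from P3. Taking the majority of each element, every correct general obtains (1, 2, ?, 4).)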

Byzantine General Problem

A correct algorithm can be devised only if

n3m+1

At least m+1 rounds of message exchanges are

needed (Fischer, 1982).

Note: This result only guarantees that each process receives the true values sent by correct processes; it does not identify which processes are correct!
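The four phases of the oral-messages exchange can be sketched as a small Python simulation of the one-traitor example with four generals. This is an illustration under assumptions, not the lecture's own code: the names `majority` and `oral_messages`, the made-up values a traitor sends, and the use of "?" for a position with no majority are all choices made here. A strict majority over the three received vectors is what lets the loyal generals recover 1, 2 and 4 despite the traitor.

```python
from collections import Counter

UNKNOWN = "?"  # marks a position where no value has a strict majority


def majority(values):
    """Return the value held by a strict majority of `values`, else UNKNOWN."""
    value, freq = Counter(values).most_common(1)[0]
    return value if freq > len(values) // 2 else UNKNOWN


def oral_messages(strength, traitors):
    """Simulate the four phases; return each loyal general's result vector.

    strength: dict general -> troop strength (a traitor never sends it honestly).
    traitors: set of faulty generals. As a hypothetical faulty behaviour, a traitor
    sends a different made-up value to every receiver in phases 1 and 3.
    """
    generals = sorted(strength)
    loyal = [g for g in generals if g not in traitors]

    # Phases 1 and 2: each loyal general builds a vector of the strengths it was told.
    vector = {
        g: [f"p1:{s}->{g}" if s in traitors else strength[s] for s in generals]
        for g in loyal
    }

    # Phase 3: every general sends its vector to all the others; traitors make one up.
    received = {
        g: [
            [f"p3:{s}->{g}[{t}]" for t in generals] if s in traitors else vector[s]
            for s in generals
            if s != g
        ]
        for g in loyal
    }

    # Phase 4: for each position, take the majority over the vectors received.
    return {
        g: tuple(majority([vec[k] for vec in received[g]]) for k in range(len(generals)))
        for g in loyal
    }


print(oral_messages({1: 1, 2: 2, 3: 3, 4: 4}, traitors={3}))
```

Every loyal general should end up with (1, 2, "?", 4): they agree on the loyal generals' values, yet nothing in the result says that P3 is the traitor.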


Byzantine Agreement Algorithm (signed messages)

– Adds two assumptions:

(1) A loyal general’s signature cannot be forged, and any alteration of the contents of a signed message can be detected.

(2) Anyone can verify the authenticity of a general’s signature.

– Algorithm SM(m):

1. The general signs and sends his value to every lieutenant.

2. For each i:

   1. If lieutenant i receives a message of the form v:0 from the general and has not yet received any order, he sets V_i = {v} and sends v:0:i to every other lieutenant.

   2. If lieutenant i receives a message of the form v:0:j1:…:jk and v is not in the set V_i, he adds v to V_i and, if k < m, sends the message v:0:j1:…:jk:i to every other lieutenant except j1, …, jk.

3. For each i: when lieutenant i will receive no more messages, he obeys the order choice(V_i).

– Algorithm SM(m) solves the Byzantine Generals Problem if there are at most m traitors.
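A minimal Python sketch of SM(m) follows. It makes several assumptions the slide does not: the general is numbered 0 and the lieutenants 1 to n, a signature chain is modelled simply as the tuple of signer IDs (real signatures would be cryptographic), a traitorous lieutenant just drops messages because it cannot forge or alter signed orders, and the hypothetical `choice` returns the single order when V_i has exactly one element and "retreat" otherwise.

```python
from collections import deque


def choice(values, default="retreat"):
    """One simple choice(V): the order itself if V = {v}, otherwise a fixed default."""
    return next(iter(values)) if len(values) == 1 else default


def sm(m, lieutenants, commander_orders, traitors=frozenset()):
    """Simulate SM(m) and return the order obeyed by each loyal lieutenant.

    commander_orders: lieutenant -> order the (possibly traitorous) general sends.
    traitors: faulty lieutenants; as a hypothetical behaviour they drop every
    message, since unforgeable signatures leave them unable to alter orders.
    A pending message is (receiver, v, chain) with chain = (0, j1, ..., jk).
    """
    V = {i: set() for i in lieutenants}
    # Step 1: the general signs and sends his value to every lieutenant.
    pending = deque((i, commander_orders[i], (0,)) for i in lieutenants)
    while pending:
        i, v, chain = pending.popleft()
        if i in traitors or v in V[i]:
            continue
        # Step 2: record a new order and, if k < m, countersign and relay it.
        V[i].add(v)
        k = len(chain) - 1  # number of lieutenants who have signed so far
        if k < m:
            for j in lieutenants:
                if j != i and j not in chain:
                    pending.append((j, v, chain + (i,)))
    # Step 3: no more messages will arrive, so each loyal lieutenant obeys choice(V_i).
    return {i: choice(V[i]) for i in lieutenants if i not in traitors}


print(sm(1, [1, 2], {1: "attack", 2: "retreat"}))
```

With a traitorous general that sends attack to Lieutenant 1 and retreat to Lieutenant 2, both lieutenants relay what they received and end up with V_i = {attack, retreat}, so both obey the same default order, retreat.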


Signed messages

[Figure: SM(1) with one traitor. The general and Lieutenants 1 and 2 exchange signed messages such as attack:0, retreat:0, attack:0:1 and retreat:0:2.]

Global State (1)

The ability to extract and reason about the global state of a distributed

application has several important applications:

• distributed garbage collection
• distributed deadlock detection
• distributed termination detection
• distributed debugging

While it is possible to examine the state of an individual process,

getting a global state is problematic.

Q: Is it possible to assemble a global state from local states in the

absence of a global clock?


Global State (2)

Consider a system S of N processes $p_i$ ($i = 1, 2, \dots, N$). The history of a process $p_i$ is the sequence of events it executes:

• $\text{history}(p_i) = h_i = \langle e_i^0, e_i^1, e_i^2, \dots \rangle$

We can also talk about any finite prefix of the history:

• $h_i^k = \langle e_i^0, e_i^1, \dots, e_i^k \rangle$

An event is either an internal action of the process or the sending or receiving of a message over a communication channel (which can be recorded as part of the state). Each event influences the process’s state; starting from the initial state $s_i^0$, we denote the state after the kth action as $s_i^k$.

The idea is to see if there is some way to form a global history

• $H = h_1 \cup h_2 \cup \dots \cup h_N$

This is difficult because we cannot choose just any prefixes to form this history.


Global State (3)

A cut of the system’s execution is a subset of its global history that is a union of prefixes of process histories:

$C = h_1^{c_1} \cup h_2^{c_2} \cup \dots \cup h_N^{c_N}$

The state $s_i$ in the global state S corresponding to the cut C is that of $p_i$ immediately after the last event processed by $p_i$ in the cut. The set of events $\{e_i^{c_i} : i = 1, 2, \dots, N\}$ is the frontier of the cut.

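[Figure: timelines of $p_1$, $p_2$ and $p_3$ with events $e_1^0, e_1^1, e_1^2$, $e_2^0, e_2^1$ and $e_3^0, e_3^1, e_3^2$; a cut crosses the three timelines and its frontier is marked.]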

Global State (4)

A cut is inconsistent if it contains the receipt of a message whose sending is not in the cut.

A cut is consistent if, for each event it contains, it also contains all the events that happened-before (→) that event:

$\forall e \in C,\ f \rightarrow e \Rightarrow f \in C$

A consistent global state is one that corresponds to a consistent cut.
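The consistency condition can be checked mechanically. In the sketch below (an illustration; the function names and the single message are hypothetical), a cut is given by the index $c_i$ of its last event on each process, and messages are listed as (send, receive) pairs of events $e_i^k$ written as (i, k). Because a cut is a union of prefixes, it already contains every earlier event on the same process, so closure under happened-before reduces to one check: every receive in the cut must have its send in the cut.

```python
from typing import Dict, List, Set, Tuple

Event = Tuple[int, int]  # (i, k) stands for e_i^k, the k-th event of process p_i
Cut = Dict[int, int]     # i -> c_i, so the cut is the union of the prefixes h_i^{c_i}


def in_cut(cut: Cut, event: Event) -> bool:
    i, k = event
    return k <= cut[i]


def is_consistent(cut: Cut, messages: List[Tuple[Event, Event]]) -> bool:
    """A receive event in the cut must have its matching send event in the cut."""
    return all(in_cut(cut, send) for send, recv in messages if in_cut(cut, recv))


def frontier(cut: Cut) -> Set[Event]:
    """The frontier {e_i^{c_i}}: the last event of each process in the cut."""
    return {(i, c) for i, c in cut.items()}


# Hypothetical run: the send e_1^1 is received as e_2^1.
messages = [((1, 1), (2, 1))]
print(is_consistent({1: 1, 2: 1}, messages))  # True: send and receive both in the cut
print(is_consistent({1: 0, 2: 1}, messages))  # False: receive in the cut, send outside
print(is_consistent({1: 1, 2: 0}, messages))  # True: the message is still in transit
```

The third cut is consistent even though the message has been sent but not received: a message in transit is allowed, a message received but never sent is not.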


Global State (5)

The goal of Chandy & Lamport’s ‘snapshot’ algorithm is to record the state of each of a set of processes in such a way that, even though the combination of recorded states may never have actually occurred, the recorded global state is consistent.

The algorithm assumes that:

• Neither channels nor processes fail, and all messages are delivered exactly once
• Channels are unidirectional and provide FIFO-ordered message delivery
• There is a distinct channel between any two processes that communicate
• Any process may initiate a global snapshot at any time
• Processes may continue to execute and send and receive normal messages while the snapshot is taking place


Global State (6)

a) Organization of a process and channels for a distributed snapshot. A special marker message is used to signal the need for a snapshot.


Global State (7)

Marker receiving rule for process p_i

On p_i’s receipt of a marker message over channel c:
    if (p_i has not yet recorded its state) it
        records its process state;
        records the state of channel c as the empty set;
        turns on recording of messages arriving over all other incoming channels
    else
        p_i records the state of c as the set of messages it has received over c since it saved its state
    end if

Marker sending rule for process p_i

After p_i has recorded its state, for each outgoing channel c:
    p_i sends one marker message over c (before it sends any other message over c)
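The two rules can be exercised with a small simulation. This is a sketch under assumptions of its own: a fully connected set of processes, application messages that move integer "tokens" between processes (so a consistent snapshot must account for every token), an initiator that records its state as if it had received a marker on a channel that does not exist, and class and method names (`Network`, `transfer`, `initiate`, `deliver`, `drain`) invented for illustration.

```python
from collections import deque

MARKER = "MARKER"  # hypothetical marker; application messages are integer token amounts


class Process:
    def __init__(self, pid, tokens, peers):
        self.pid = pid
        self.tokens = tokens          # live application state: tokens currently held
        self.peers = peers            # every other process (one channel each way)
        self.recorded_state = None    # None until this process records its state
        self.channel_state = {}       # sender -> messages recorded on that incoming channel
        self.recording = set()        # incoming channels still being recorded


class Network:
    def __init__(self, tokens):
        ids = sorted(tokens)
        # One unidirectional FIFO channel per ordered pair of processes.
        self.channels = {(a, b): deque() for a in ids for b in ids if a != b}
        self.procs = {p: Process(p, tokens[p], [q for q in ids if q != p]) for p in ids}

    def transfer(self, src, dst, amount):
        """Ordinary application message: src hands `amount` tokens to dst."""
        self.procs[src].tokens -= amount
        self.channels[(src, dst)].append(amount)

    def _record(self, p, empty_channel=None):
        # Record the process state; `empty_channel` (if any) is recorded as empty,
        # and every other incoming channel is recorded from now on.
        p.recorded_state = p.tokens
        p.channel_state = {q: [] for q in p.peers}
        p.recording = {q for q in p.peers if q != empty_channel}
        # Marker sending rule: one marker on each outgoing channel before anything else.
        for q in p.peers:
            self.channels[(p.pid, q)].append(MARKER)

    def initiate(self, pid):
        self._record(self.procs[pid])

    def deliver(self, src, dst):
        """Deliver the oldest message on channel src -> dst (FIFO)."""
        msg = self.channels[(src, dst)].popleft()
        p = self.procs[dst]
        if msg == MARKER:
            if p.recorded_state is None:
                self._record(p, empty_channel=src)  # first marker received
            else:
                p.recording.discard(src)            # channel src's state is now complete
        else:
            p.tokens += msg
            if src in p.recording:
                p.channel_state[src].append(msg)

    def drain(self):
        """Deliver one message per non-empty channel per pass until all are empty."""
        while any(self.channels.values()):
            for (src, dst), channel in self.channels.items():
                if channel:
                    self.deliver(src, dst)

    def snapshot_total(self):
        procs = self.procs.values()
        in_transit = sum(sum(msgs) for p in procs for msgs in p.channel_state.values())
        return sum(p.recorded_state for p in procs) + in_transit


net = Network({"A": 10, "B": 10, "C": 10})
net.transfer("A", "B", 3)
net.transfer("B", "C", 4)
net.initiate("A")
net.transfer("C", "A", 2)  # sent while the snapshot is in progress
net.drain()
print({p.pid: p.recorded_state for p in net.procs.values()}, net.snapshot_total())
```

Because tokens are only moved, never created, the recorded process states plus the recorded channel contents must add up to the initial 30. With the delivery order used by `drain`, the run records A = 7, B = 9 and C = 8, plus 2 tokens on channel C→A and 4 on channel B→C.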


Global State (8)

b) Process Q receives a marker for the first time and records its local state. It then sends a marker on all of its outgoing channels.

c) Q records all incoming messages.

d) Once Q has received a marker on each of its incoming channels, it has finished recording the state of every incoming channel.
