weird_259_2007

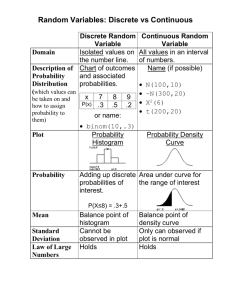

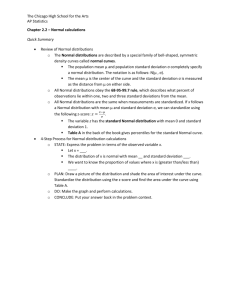

Weird and Normal

Homework
• Two people asked about the “correct” bin size or number of bins for a histogram. There are many algorithms, but in general aim for 7 ± 2 bins. This is not a rule, just my general suggestion.

Weird
• If you measure the height of a randomly selected woman at Stanford, you could get a woman who is 5’11”. It would be a bit weird but not too odd. If you were to take a sample of 10 random women and get an average of 5’11”, it would be very weird.
• You want a method to quantify how unusual that is.

Quantifying Weird
• To quantify that oddity you need to know one thing: what is the pattern of the heights in the population?
• You could describe that with an empirical distribution (look where the data falls in a histogram of real data) or with a theoretical distribution (look where the data falls in a mathematical density). In either case you just want a way to describe what a histogram of the data would look like if you could see the whole population.

Density
• There are a few commonly used density functions to describe the patterns in the data. Density functions you will care about are the Poisson (for describing count data) and the exponential (for describing survival), but arguably the most important is the Gaussian, aka Normal, density.
• The easiest way to look at a probability density (continuous data) or mass function (discrete data) is to download R and the Rcmdr package.

R stuff
• The closest place to get R is here: cran.cnr.berkeley.edu/bin/windows/base/release.htm
• Once you get R, install the Rcmdr package (you don’t need Rcmdr.HH) from USA (CA1) using the Packages menu.
• Then type library(Rcmdr) and push Enter.

Exploring Distributions
• The tools you will want to use to explore distributions are clearly labeled:

[Figure: Normal Distribution: μ = 0, σ = 1; density against x from -3 to 3]

The normal is a two parameter distribution. The mean describes the location and the standard deviation describes the shape.

How does a normal curve work?
• You describe a normal curve using only two bits of information: the mean and the standard deviation.
– Location = the mean, aka μ
– Shape = the standard deviation, aka σ (the variance is σ²)

[Figure: three normal curves: μ = 0, σ = 1; μ = 0, σ = 2; μ = 4, σ = 1]

[Figure: normal density against x from -3 to 3] One SD either side of the mean is where the curve switches from convex to concave and back.

Distributions
• Lots of distributions are based on the formula $y = \exp(-|x|^m)$.
• $y = \exp(-x^2)$ (the m = 2 case), rescaled so the integral (area under the curve) is one, is a normal distribution.

[Figure: four panels of y against x from -3 to 3, for m = 1, m = 2, m = 3, and m = 4]

Moments
• The normal distribution is described by those two parameters, but in real life you may want to describe data with several statistics.

$$\frac{\sum y}{n} \qquad \frac{\sum (y - \bar{y})^2}{n} \qquad \frac{\sum (y - \bar{y})^3}{n}\ \text{(Skew)} \qquad \frac{\sum (y - \bar{y})^4}{n}\ \text{(Kurtosis)}$$

[Figure: four panels (y, y2, y3, y4 against x from -3 to 3) with the labels Leptokurtosis and Platykurtosis and the printed values -0.792628724459993, -0.139899436960595, -1.10186607399571, -0.992091841121729]

This is really a probability mass function because the values are counts, therefore it is discrete. The Poisson is a one parameter distribution. The mean describes the pattern of the counts.

[Figure: Exponential Distribution: rate = 1; density against x from 0 to 6] The Exponential is a one parameter distribution. The rate describes the patterns.

Looking at Your Data
• The bell shaped curve can be scaled so that the area underneath it takes any value, but it is typically scaled so that its area is 1.

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$$

There are only two interesting parameters here: μ and σ. The other weird letters are just constants: π = 3.14159 and e = 2.71828.

Why bother with the math?
• You want a way to describe the likelihoods (probability) of every possible value that could be drawn from a population.
A mathematically convenient way to do that is to say the total probability (which by definition is 1) is synonymous with the total area. If area is equivalent to probability, then a small area corresponds to a small probability.
• If the area is a score between 0 and 1, then you can quickly set rules on what is weird. On a scale from 0 to 1, for me, something that occurs .01 of the time is pretty odd.

In Hieroglyphics

$$\int_{-\infty}^{\infty} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} dx = 1$$

What is weird?
• No matter what μ and σ are, the area between μ-σ and μ+σ is about 68%, the area between μ-2σ and μ+2σ is about 95%, and the area between μ-3σ and μ+3σ is about 99.7%. Almost all values fall within 3 standard deviations.
• If an observed value is greater than the mean plus two SDs, that is weird. The same is true if it is less than the mean minus two SDs. If it is more extreme than the mean +/- 3 SD, it is VERY weird.

[Figure: normal curve marking 68% of the data, 95% of the data, and 99.7% of the data]

If you speak math:

$$\int_{\mu-\sigma}^{\mu+\sigma} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} dx = .68$$

$$\int_{\mu-2\sigma}^{\mu+2\sigma} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} dx = .95$$

$$\int_{\mu-3\sigma}^{\mu+3\sigma} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} dx = .997$$

How good is that rule for real data?
In theory, lots of distributions can be well approximated by a normal distribution. If something is caused by many independent factors, look for it to be approximately normally distributed.

Check some example data:
The mean of the weight of the women = 127.8
The standard deviation (SD) = 15.5

68% of 120 = .68 x 120 = ~82 runners
In fact, 79 runners fall within 1 SD (15.5 lbs) of the mean.
[Figure: histogram of POUNDS (80 to 160) against percent, marked at 112.3, 127.8, and 143.3]

95% of 120 = .95 x 120 = ~114 runners
In fact, 115 runners fall within 2 SDs of the mean.
[Figure: histogram of POUNDS (80 to 160) against percent, marked at 96.8, 127.8, and 158.8]

99.7% of 120 = .997 x 120 = 119.6 runners
In fact, all 120 runners fall within 3 SDs of the mean.
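The 68 / 95 / 99.7 areas and the expected runner counts above can be checked numerically. This is a minimal sketch in Python's standard library rather than the R Commander menus the slides use; the helper name `area_within` is made up for illustration, and the mean, SD, and count are the ones quoted for the runners.

```python
from statistics import NormalDist


def area_within(k, mu=0.0, sigma=1.0):
    """Area under a normal curve between mu - k*sigma and mu + k*sigma."""
    dist = NormalDist(mu, sigma)
    return dist.cdf(mu + k * sigma) - dist.cdf(mu - k * sigma)


# Numbers from the runners example: mean 127.8 lb, SD 15.5 lb, 120 runners.
MEAN_LB, SD_LB, N_RUNNERS = 127.8, 15.5, 120

for k in (1, 2, 3):
    area = area_within(k, MEAN_LB, SD_LB)
    low, high = MEAN_LB - k * SD_LB, MEAN_LB + k * SD_LB
    print(f"within {k} SD ({low:.1f} to {high:.1f} lb): "
          f"area = {area:.4f}, expected runners = {area * N_RUNNERS:.1f}")
```

The areas come out the same whatever mean and SD you plug in, which is the point of the rule; 68%, 95%, and 99.7% are rounded versions of those areas.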
[Figure: histogram of POUNDS (80 to 160) against percent, marked at 81.3, 127.8, and 174.3]

Working with a Normal
• You are still faced with the question of how to determine whether a value you observe is weird (unexpected) given the hypothetical normal population distribution. To do that, you just need to map the observed value onto the area under the curve. If it is out in a range with a very small area, it would occur rarely.

Example
• Suppose SAT scores roughly follow a normal distribution in the U.S. population of college-bound students (with range restricted to 200-800), and the average math SAT is 500 with a standard deviation of 50. Then:
– 68% of students will have scores between 450 and 550
– 95% will be between 400 and 600
– 99.7% will be between 350 and 650

[Figure: normal density for the SAT scores, x from 320 to 640]

Example
• BUT… What if you wanted to know the math SAT score corresponding to the 90th percentile (= 90% of students are lower)?

$$P(X \le Q) = .90 \quad\Rightarrow\quad \int_{200}^{Q} \frac{1}{(50)\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-500}{50}\right)^2} dx = .90$$

<math phobic students please don’t implode here>

Common Densities
• Wouldn’t it be nice if somebody had already worked out what is weird (say, above the 90th percentile) in a distribution where the mean is 500 and the SD is 50?
• There are a couple of distributions where people have actually worked out those values and some weird people keep the values in their heads.
– The common IQ tests have a mean of 100 and a SD of 15. Ask a developmental psychologist what score marks the top 1% and they are likely to know.

A fine trick!
• Instead of relying on your ability to find some savant who knows the percentiles describing a density with a mean of 500 and SD of 50, you can convert the values to a different scale where you know the percentiles.
• People typically convert the data so that it is measured in terms of standard deviations from the mean.

Z (aka zee-score or zed-score)
• The typical conversion is to rescale your data so the mean is 0 and a standard deviation is 1 unit.
So for the SAT example, a score of 500 is 0 on the Z scale. A 550 is scored as 1 and a 400 as -2 on the Z scale.
• You then become a master of the Z scale and learn things like: -2 to 2 on the scale covers about 95% of the distribution. (The exact cutoff is about +/- 1.96.)

The Standard Normal (Z):
• The formula for the standardized normal probability density function is less horrible and you can easily get the probabilities out of a statistical package:

$$p(Z) = \frac{1}{(1)\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{Z-0}{1}\right)^2} = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}Z^2}$$

The Standard Normal Distribution (Z)
All normal distributions can be converted into the standard normal curve by subtracting the mean and dividing by the standard deviation:

$$Z = \frac{X - \mu}{\sigma}$$

Somebody calculated all the integrals for the standard normal and put them in a table! So we never have to integrate!

Comparing X and Z Units
[Figure: X axis (μ = 500, σ = 50) aligned with Z axis (μ = 0, σ = 1); X = 500 lines up with Z = 0 and X = 600 with Z = 2.0]

Example
• For example, what’s the probability of getting a math SAT score of 575 or less, with μ = 500 and σ = 50?

$$Z = \frac{575 - 500}{50} = 1.5$$

i.e., a score of 575 is 1.5 standard deviations above the mean

$$P(X \le 575) = \int_{200}^{575} \frac{1}{(50)\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-500}{50}\right)^2} dx \approx \int_{-\infty}^{1.5} \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}Z^2} dZ$$

<math phobic students, please refrain from exploding here>

Where is that in the density?
• If you have R, it is trivially easy to look it up:

[Figure: standard normal density against Z score from -3 to 3, labeled “Tails?”] About 93% of the area is below the 1.5 Z mark.

Z scores are so last century…
[Figure: normal density against scores from 350 to 650 (x-axis labeled “Z score” in the original)]

QQ plots
• We have been talking about comparing a data point against a hypothetical normal distribution, but real data is never exactly normally distributed. How do you tell if a sample is close to normally distributed? First and foremost, you plot your values against a hypothetical distribution.

Checking for Normality
You are good unless the p-values here get small. These tests are too sensitive for many uses (because of the CLT).

[Figure: QQ plot; y-axis theScores$rnorm.1...100..mean...500..sd...50., 350 to 600]
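The two SAT lookups worked through with Z scores (the chance of scoring 575 or less, and the score at the 90th percentile) can be reproduced without a table. A minimal sketch using Python's standard library; the names `z_score`, `p_below_575`, and `score_90` are invented for illustration. In R the equivalent lookups are `pnorm` and `qnorm`.

```python
from statistics import NormalDist

SAT_MEAN, SAT_SD = 500, 50  # values from the SAT example


def z_score(x, mean, sd):
    """Rescale x so the mean is 0 and one SD is 1 unit."""
    return (x - mean) / sd


standard_normal = NormalDist(0, 1)

# P(X <= 575): convert to Z, then look up the area to the left of Z.
z_575 = z_score(575, SAT_MEAN, SAT_SD)
p_below_575 = standard_normal.cdf(z_575)

# 90th percentile: find the Z with 90% of the area below it, then convert
# back to the SAT scale with X = mean + Z * SD.
z_90 = standard_normal.inv_cdf(0.90)
score_90 = SAT_MEAN + z_90 * SAT_SD

print(f"Z for 575 = {z_575:.2f}, area below = {p_below_575:.4f}")
print(f"Z at 90th percentile = {z_90:.3f}, SAT score = {score_90:.1f}")
```

The first lookup gives about 0.933, matching the "about 93%" on the slide. Working directly on the original scale with `NormalDist(500, 50).cdf(575)` returns the same area, which is why the Z conversion is a convenience and not a necessity once software is available.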
[Figure: QQ with Rcmdr; x-axis: norm quantiles, -2 to 2]

CDF, PDF, Density
• There are four common ways of looking at a distribution.
• If you start on the left side of a bell shaped curve, you can draw how likely a value is to be at or below that point (the CDF), or you can measure how likely a value is at that location (sort of; this is the PDF).

[Figure: four panels: Probability against z; Quantile (Z) against p; Probability density against z; frequency histogram]

Size Matters
• When you take a sample of size 1 from a normally distributed population, you are unlikely to get a sample mean which is far from the population mean. If you have a sample size of 5, you are less likely to get a sample mean far from the population mean. If you have a sample size of 30, you are very unlikely to get a sample mean far from the population mean.
• How unlikely is that?

Sampling Distribution
• You need to know what the distribution of the samples looks like. Intuitively, you know that you are not likely to get very many samples of, say, 30 people where the sample mean (e.g., an observed mean score of 600) is far from the population mean (500).
• As it happens, the means from the samples will cluster around the population mean.

[Figure: histograms “Actual scores” (scores, 300 to 700) and “Bunch of Means” (bunchOfMeans, 300 to 700)]

[Figure: histograms “Actual Scores”, “Bunch of Means”, and “Bunch of Means sample N = 20”]
The distribution of the means from sample size of 5 is narrower than the original values (and bell shaped).
The distribution of the means from sample size of 20 is narrower still (and bell-shaped).
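The narrowing of the "bunch of means" can be simulated directly. The sketch below is an assumption-laden stand-in for whatever R code produced the slide histograms: it draws scores from a normal population with the SAT example's mean of 500 and SD of 50, and the function name `bunch_of_means`, the seed, and the 5000 repetitions are illustrative choices. It compares the observed spread of the means with σ/√N, the standard result for the SD of a sample mean.

```python
import math
import random
import statistics

POP_MEAN, POP_SD = 500, 50  # population values from the SAT example


def bunch_of_means(sample_size, n_samples=5000, seed=259):
    """Draw n_samples samples of the given size and return their means."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.gauss(POP_MEAN, POP_SD) for _ in range(sample_size))
        for _ in range(n_samples)
    ]


for n in (1, 5, 20):
    means = bunch_of_means(n)
    observed_sd = statistics.stdev(means)
    expected_sd = POP_SD / math.sqrt(n)  # standard error of the mean
    print(f"N = {n:2d}: SD of the means = {observed_sd:5.1f} "
          f"(sigma / sqrt(N) = {expected_sd:5.1f})")
```

With N = 1 the "means" are just the raw scores, so their spread is close to 50; the spread shrinks as N grows, which is what the histograms show.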
[Figure: histograms of bunchOfMeans (“Bunch of Means sample N = 5”) and bunchOfMeans20, 300 to 700]

Sampling Distributions
• You can therefore describe the sampling distribution as a bell shaped curve and, furthermore, use your knowledge of the normal distribution to decide what counts as a weird sample. You just use the same logic as you used with a single value.

Not Normal
• What happens when you calculate means from a population distribution that does not look normal?

Doors (hurt)
• This is a distribution describing the count of hard things I walk into in a typical day. On average I walk into about .7 doorframes a day.

[Figure: Histogram of counts; counts 0 to 6 against Frequency]

Sampling from a Poisson Distribution
• If you take a sample count of the number of things I walk into in a day, you are not likely to get a lot of days with 4 doors in 1 day. If you sample 5 random days and calculate the mean, you could get an average of me walking into 4 things a day, but it would be darn weird…
• Look at the means taken from 5000 samples of 5 days picked out at random.

Histogram of Means
[Figure: Histogram of bunchOfMeans; bunchOfMeans 0 to 6 against Frequency] Note this is drawn on the original scale. As expected, you don’t see many (if any) samples where I walked into an average of 4 things across 5 randomly selected days.

Relax the Scale
[Figure: Histogram of bunchOfMeans; bunchOfMeans 0.0 to 2.0 against Frequency] Look at the pattern in the means! It looks sort of kind of like a normal distribution!

[Figure: Histogram of the means N = 5 (bunchOfMeans), Histogram of the means N = 15 (bunchOfMeans15), and Histogram of the means N = 30] That looks like a normal distribution.
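The doorframe experiment can be rerun as a simulation. This is a sketch under stated assumptions, not the code behind the slides: Python's standard library has no Poisson sampler, so `poisson_draw` uses Knuth's multiplication method, and `skewness` (a hypothetical helper) is used as a rough numeric stand-in for "looks like a normal distribution", since a symmetric bell has skewness near 0. The rate of 0.7 and the 5000 samples come from the slides.

```python
import math
import random
import statistics

RATE = 0.7  # average doorframes per day, from the slide


def poisson_draw(rate, rng):
    """One Poisson count via Knuth's multiplication method."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def skewness(values):
    """Third central moment over SD cubed (near 0 for a symmetric shape)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean((v - mean) ** 3 for v in values) / sd ** 3


def bunch_of_means(days_per_sample, rng, n_samples=5000):
    """Means of n_samples random samples of days_per_sample daily counts."""
    return [
        statistics.fmean(poisson_draw(RATE, rng) for _ in range(days_per_sample))
        for _ in range(n_samples)
    ]


rng = random.Random(259)
results = {n: bunch_of_means(n, rng) for n in (5, 15, 30)}
for n, means in results.items():
    print(f"N = {n:2d}: mean of means = {statistics.fmean(means):.3f}, "
          f"largest mean = {max(means):.2f}, skewness = {skewness(means):.2f}")
```

The means stay centred near 0.7, no 5-day sample gets anywhere near an average of 4, and the skewness drops toward 0 as the sample size grows from 5 to 30.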
[Figure: x-axis bunchOfMeans30, 0.0 to 1.5]

Weird and Normal
• The weird fact that the distribution of sample means is shaped like a normal curve irrespective of the original distribution (if your sample size is big enough) is called the Central Limit Theorem (CLT).
• If you can describe sample means using a bell shaped curve, you can describe what is a weird sample! You’re just using the same simple logic as when you compare one person vs. a population distribution.