Principles of Appetitive Conditioning

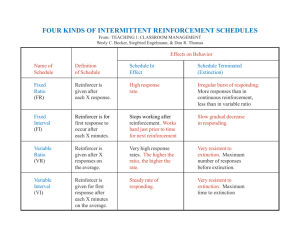

Chapter 6

Early Contributors
Thorndike’s contribution
- Emphasized laws of behavior
- Demonstrated trial-by-trial learning
- S-R learning
Skinner’s contribution
- Emphasized contingency: a specified relationship between behavior and reinforcement in a given situation
- The environment “sets” the contingencies: S(R->O)

A “Faux” Distinction
- Instrumental conditioning: a conditioning procedure in which the environment constrains the opportunity for reward (discrete trials).
- Operant conditioning: a specific response produces reinforcement, and the frequency of the response determines the amount of reinforcement obtained (continuous responding, schedules of reinforcement).

Thorndike’s Law of Effect
S-R associations are “stamped in” by reward (satisfiers).
[Figure: Stimulus -> Response]

Thorndike: “What is learned?”
Habit learning: reinforcement “stamps in” the S-R connection.
Is that it?

Instrumental Association
[Figure: S, R, and O, with the links to O marked “?”: the instrumental association (R-O) and the Pavlovian association (S-O)]
“O” matters.

The Importance of Past Experience
- Depression (negative contrast): a shift from high to low reward magnitude produces a lower level of responding than if the reward magnitude had always been low.
- Elation (positive contrast): a shift from low to high reward magnitude produces a higher level of responding than if the reward magnitude had always been high.
[Figure: Negative and positive contrast]

Logic of Devaluation Experiment
[Figure: responding, from min to max, in the normal and devalued conditions]
- R-O or goal-directed responding: controlled by the current value of the reinforcer, so it should be reduced to zero after devaluation.
- S-R or habit responding: not controlled by the current value of the reward, so it is insensitive to reinforcer devaluation.

R-O Association (aka the instrumental association)
[Figure: Phase 1: push left -> pellet; push right -> sucrose. Devaluation: pellet + LiCl or sucrose + LiCl. Test: push right or left? Y axis: number of pushes.]
[Figure: test results, number of right and left pushes for the devalued-pellet and devalued-sucrose conditions]

Summary of Devaluation
- Neutered male rats reduce, but do not eliminate, responding previously associated with access to a “ripe” female rat.
- Rats satiated on reward 1 reduce their responding for reward 1 more than their responding for reward 2.
- Devaluation effects tend to shrink with continued training, as goal-directed responding is replaced by habit learning.

S-O Association (aka Pavlovian Association)
[Figure: Stage 1: right -> pellet; left -> sucrose. Stage 2: tone -> pellet; light -> sucrose. Test: during the tone or the light, press left or right? Y axis: number of presses on the left and right levers during the tone and the light.]

Skinner’s Contributions
- Automatic, easy measurements that can be compared across species

Three Terms Define the Contingency
Three-term contingency:
- Discriminative stimulus (S+ or S-)
- Operant (R)
- Consequence (O)

Operant Strengthened
[Figure: Skinner box. S+ (light on) -> R (push lever, among other behaviors: bite, groom, lick, rear) -> O (reinforcer)]

Techniques and Concepts
- Shaping: successive approximations; require closer and closer approximations to the target behavior.
- Secondary reinforcers: stimuli accompanying reinforcer delivery.
- Marking: feedback that a response has occurred.

Shaping
Shaping (or the successive approximation procedure): select a frequently occurring operant behavior, then slowly change the contingency until the desired behavior is learned.

Training a Rat to Bar Press
Step 1: reinforce eating out of the food dispenser.
Step 2: reinforce moving away from the food dispenser.
Step 3: reinforce moving in the direction of the bar.
Step 4: reinforce pressing the bar.

Appetitive Reinforcers
- Primary reinforcer: an activity whose reinforcing properties are innate.
- Secondary reinforcer: an event that has developed its reinforcing properties through its association with primary reinforcers.

Primary Reward Magnitude
The acquisition of an instrumental or operant response:
- The greater the magnitude of the reward, the faster the task is learned.
- The differences in performance may reflect motivational differences.
[Figure: Magnitude]

Primary Reward and Degraded Contingency
[Figure: timelines of bar presses and food deliveries. Perfect contingency: strong responding. Degraded contingency: weak responding.]

Strength of Secondary Reinforcers
Several variables affect the strength of secondary reinforcers:
- The magnitude of the primary reinforcer.
- The number of primary-secondary pairings: the greater the number of pairings, the stronger the reinforcing power of the secondary reinforcer.
- The time elapsing between the presentation of the secondary reinforcer and the primary reinforcer.
[Figure: Primary-secondary pairings]

Schedules of Reinforcement
A schedule of reinforcement is a contingency that specifies how often or when we must act to receive reinforcement.
- Fixed ratio (FR): reinforcement is given after a fixed number of responses; responding shows short pauses.
- Variable ratio (VR): reinforcement is given after a varying number of responses.
- Fixed interval (FI): the first response after a given interval is reinforced; produces the FI scallop.
- Variable interval (VI): like FI, but the interval varies around a given average; the scallop disappears.

Fixed Interval Schedule
- Reinforcement is available only after a specified period of time, and the first response emitted after the interval has elapsed is reinforced.
- Scallop effect: the ability to withhold the response until close to the end of the interval increases with experience.
- The pause is longer with longer FI schedules.

Variable Interval Schedules
- Reinforcers become available after an average interval of time, but the interval varies from one reinforcement to the next.
- Characterized by steady rates of responding.
- The longer the interval, the lower the response rate.
- The scallop effect does not occur on VI schedules.
- Encourages S-R habit learning.

Some Other Schedules
- DRL: differential reinforcement of low rates of responding.
- DRH: differential reinforcement of high rates of responding.
- DRO: differential reinforcement of anything but the target behavior.

Compound Schedules
A compound schedule is a complex contingency in which two or more schedules of reinforcement are combined.

Schedule This…
Concurrent schedules permit the subject to alternate between different schedules, or to repeatedly choose between working on different schedules.
[Figure: option A: $5 on VI-30, today; option B: $50 on VI-60, wait]

Matching Law
B1/(B1+B2) = R1/(R1+R2)
where B is the number of responses of a given behavior and R is the number of reinforcers earned.

Sniffy the Rat
Schedule (“1” vs “2”)   Behavior B1/(B1+B2)   Reinforcers R1/(R1+R2)
VI-30 vs VI-10          25%                   25%
VI-10 vs VI-30          75%                   75%
VI-10 vs VI-50          83.3%                 83.3%
VI-50 vs VI-10          16.7%                 16.7%
VI-30 vs VI-30          50%                   50%
VI-10 vs VI-10          50%                   50%
[Figure: Typical result]

Deviations From Matching
Bias: a preference for one response over the other that has nothing to do with the programmed schedules. Examples:
- one pigeon key requires more force to close its contact than the other, so the pigeon has to peck harder;
- one food hopper delivers food more quickly than the other.

Sensitivity
- Overmatching: the relative rate of responding is more extreme than predicted by matching. The subject appears to be “too sensitive” to the schedule differences.
- Undermatching: the relative rate of responding on a key is less extreme than predicted by matching. The subject appears to be “insensitive” to the schedule differences.
[Figure: Overmatching]

Poor Self-Control
[Figure: direct choice (concurrent schedule) between A, a small reward, and B, a LARGE reward]

Self-Control and Overmatching
Concurrent choice: humans and nonhumans often choose a small immediate reward over a larger delayed reward (delayed rewards are “discounted”).

Another Example of Impulsivity
- “Free” reinforcers are given every 20 s (at 20 s, 40 s, and 60 s).
- A lever press advances delivery of the first pellet and deletes the second pellet.
So, if you press at 2 seconds, you get a pellet immediately, but you get no other pellets until the 60-second pellet is available.
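
Returning to the matching law: the Sniffy percentages can be reproduced from the schedule values alone. The Python sketch below is a minimal illustration under two assumptions that are not on the slides: a VI-t schedule is treated as delivering reinforcers at an average rate of 1/t per second (so R1/(R1+R2) follows the programmed rates), and bias and sensitivity are modeled with the generalized matching law, B1/B2 = b(R1/R2)^a, a standard extension of strict matching. The function names are hypothetical.

```python
"""Sketch: strict and generalized matching on concurrent VI schedules.

Assumptions (illustrative, not from the slides):
- a VI-t schedule delivers reinforcers at an average rate of 1/t per second;
- generalized matching: B1/B2 = b * (R1/R2) ** a, where a is sensitivity
  and b is bias.
"""


def reinforcement_share(vi1: float, vi2: float) -> float:
    """Predicted R1/(R1+R2) for concurrent VI-vi1 vs VI-vi2 (in seconds)."""
    r1, r2 = 1.0 / vi1, 1.0 / vi2  # average reinforcers per second on each key
    return r1 / (r1 + r2)


def behavior_share(r_share: float, a: float = 1.0, b: float = 1.0) -> float:
    """Predicted B1/(B1+B2) under generalized matching (expects 0 < r_share < 1).

    a = 1, b = 1: strict matching, B1/(B1+B2) = R1/(R1+R2)
    a > 1: overmatching (more extreme than matching)
    a < 1: undermatching (less extreme than matching)
    b != 1: bias toward one key regardless of the schedules
    """
    r_ratio = r_share / (1.0 - r_share)  # R1/R2
    b_ratio = b * r_ratio ** a  # B1/B2
    return b_ratio / (1.0 + b_ratio)


if __name__ == "__main__":
    pairs = [(30, 10), (10, 30), (10, 50), (50, 10), (30, 30), (10, 10)]
    for vi1, vi2 in pairs:
        share = reinforcement_share(vi1, vi2)
        print(f"VI-{vi1} vs VI-{vi2}: matching predicts {behavior_share(share):.1%}")
```

With a = 1 and b = 1 the loop prints 25.0%, 75.0%, 83.3%, 16.7%, 50.0%, and 50.0%, the same values as the Sniffy table. Raising a above 1 pushes the predicted share further from 50% (overmatching), lowering it below 1 pulls the share toward 50% (undermatching), and any b other than 1 shifts responding toward one key regardless of the schedules (bias).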

Delay of Reinforcement
- Delayed reinforcers are steeply discounted.
- Loss of self-control and impulsivity.
[Figure: reinforcer potency (0 to 100) plotted against delay (-9 to 0) for a small immediate and a large delayed reinforcer, with choice points A and B]

[Figure: concurrent chain (pre-commitment) choice between A, a small reward, and B, a LARGE reward]

Behavioral Methods for Self-Control
- Pre-commitment
- Self-exclusion
- Contracts
- Distraction
- Modeling
- Shaping waiting: reduce the delay for the small reward; increase the delay for the large reward

The Discontinuance of Reinforcement
- Extinction: the elimination or suppression of a response caused by the discontinuation of reinforcement or the removal of the unconditioned stimulus.
- When reinforcement is first discontinued, the rate of responding remains high; under some conditions, it even increases.

Extinction Paradox
Stronger learning ≠ slower extinction: the partial reinforcement extinction effect (PREE).

Importance of Consistency of Reward
- Extinction is slower following partial rather than continuous reinforcement.
- Partial reinforcement extinction effect (PREE): the greater resistance to extinction of an instrumental or operant response following intermittent rather than continuous reinforcement during acquisition.
- One of the most reliable phenomena in psychology.

Acquisition with Differing Percentages
[Figure: speed across days for the 100% and 80/50/30% reinforcement groups]

Extinction with Differing Percentages
[Figure: speed across days of extinction for the 100%, 80%, 50%, and 30% groups]

Explanations
- Mowrer-Bitterman discrimination hypothesis
- Amsel’s frustration theory (emotional)
- Capaldi’s sequential theory (cognitive)

Theios Experiment (not just discrimination)
Group   Phase 1   Phase 2   Extinction
G1      100%      -         0%
G2      100%      100%      0%
G3      50%       100%      0%
G4      50%       -         0%
[Figure: speed in Phase 1 (G1, G2 at 100%; G3, G4 at 50%), Phase 2, and across extinction trials]

Amsel’s Frustration Theory
[Figures: 100% reinforcement group; 50% reinforcement group]

Amsel (Percentage Reinforcement)
[Figure: speed across extinction trials for the 100% and 50% groups]

Amsel’s Frustration Theory
[Figure: between-subject design (Group 1: T F, 100%, then T- in extinction; Group 2: N F, 50%, then N-) produces the PREE; within-subject design (T F on trials 1, 3, 6…, 100%; N F on trials 2, 4, 5…, 50%; then T- and N-) produces a reversed PREE]

Influence of Reward Magnitude
- The influence of reward magnitude on resistance to extinction depends on the amount of acquisition training.
- With extended acquisition, a small consistent reward may produce more resistance to extinction than a large reward (the absence of the large reward is more frustrating).
[Figure: Reward magnitude and percentage]

Sequential Theory
- If reward follows nonreward, the animal will associate the memory of the nonrewarded experience with the operant or instrumental response.
- During extinction, the only memory present after the first nonrewarded experience is that of the nonrewarded experience.
- Animals receiving continuous reward do not experience nonrewarded responses, so they do not associate nonrewarded responses with later reward.
- Thus, the memory of receiving a reward after persisting in the face of nonreward becomes a cue for continued responding.
Factors: the number of N-R transitions, N-length, and the variability of N-length.

What Is the Significance of the PRE?
- It encourages organisms to persist even though not every behavior is reinforced.
- In the natural environment, not every attempt to attain a desired goal is successful.
- The PRE is adaptive because it motivates animals not to give up too easily.
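
The three sequential-theory factors (N-R transitions, N-length, and variability of N-length) can be counted directly from a trial sequence. The Python sketch below is illustrative only and rests on stated assumptions: a schedule is written as a string of 'R' (rewarded) and 'N' (nonrewarded) trials, N-length is taken as the number of consecutive nonrewarded trials immediately before a reward, N-length is summarized by its maximum, and variability is operationalized as the number of distinct N-lengths. The function names are hypothetical.

```python
"""Sketch of Capaldi-style sequence measures for a reward schedule.

Assumptions (illustrative, not from the slides):
- a schedule is a string of 'R' (rewarded) and 'N' (nonrewarded) trials;
- N-length = number of consecutive N trials immediately before an R trial;
- variability of N-length = number of distinct N-lengths.
"""
from itertools import groupby


def n_lengths(sequence: str) -> list[int]:
    """Return the length of every run of N trials that ends in a reward."""
    # Collapse the sequence into runs, e.g. "RNNR" -> [("R", 1), ("N", 2), ("R", 1)].
    runs = [(trial, len(list(group))) for trial, group in groupby(sequence)]
    lengths = []
    # Pair each run with the run that follows it; an N run followed by an
    # R run marks one N-R transition. A trailing N run is never rewarded.
    for (trial, size), (next_trial, _) in zip(runs, runs[1:]):
        if trial == "N" and next_trial == "R":
            lengths.append(size)
    return lengths


def sequential_measures(sequence: str) -> dict[str, int]:
    """Summarize a schedule with the three sequential-theory factors."""
    lengths = n_lengths(sequence)
    return {
        "nr_transitions": len(lengths),  # one per N run that ends in R
        "max_n_length": max(lengths, default=0),  # longest N run before a reward
        "distinct_n_lengths": len(set(lengths)),  # crude variability measure
    }


if __name__ == "__main__":
    for schedule in ["RRRRRRRR", "RNRNNRNRR"]:
        print(schedule, sequential_measures(schedule))
```

For example, sequential_measures("RNRNNRNRR") returns {'nr_transitions': 3, 'max_n_length': 2, 'distinct_n_lengths': 2}, while a continuous-reward sequence such as "RRRRRRRR" returns zero for all three measures. On the theory's account, the continuously rewarded animal has never had the memory of nonreward followed by reward, so nothing in extinction cues continued responding.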