advertisement

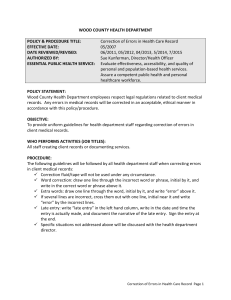

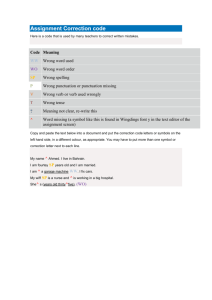

Automatic Spelling Correction

Probability Models and Algorithms

• Motivation and Formulation

• Demonstration of a Prototype Program

• The Underlying Probability Models

• Algorithms for Automatic Correction

• Conclusion

Motivation and Formulation

• A set of words: the vocabulary

• Single-word correction: Given any character string S that may or may not belong to the vocabulary, match S with the most likely word W in the vocabulary.

• Example: vocabulary = {is, are, am}

iis → is

ae → are

an → am

Motivation and Formulation

• Multiple-word correction: Given a series of character strings S1 S2 … Sm, each of which may or may not belong to the vocabulary, match them with the most likely word series W1 W2 … Wm formed by words from the vocabulary.

• Example: vocabulary = {I, is, are, am}

ii bn → I am

Motivation and Formulation

• Given a typed word w, what do we mean by the most likely word for w in the vocabulary?

We need some probability models.

• How do we find the most likely word for w?

We need to develop algorithms.

Probability Models: Typical Typos

• Errors in the transition of mental states

– Repeating characters: iis → is

– Skipping characters: ae → are

• Mentally right, but the finger wrongly lands on a nearby key

– an → am

Probability Models

• The Word Model: for each word w, how do we probabilistically transition from one mental state of trying to type some character in the word to another?

e.g. for the word "are":

Ideally: a → r → e

but things like a → a → r → e (repeating) or a → e (skipping) could happen.
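The word model can be pictured as a small table of transition probabilities between "trying to type character i" states. The following is only a sketch: the function name `word_model_transitions` and the values `p_repeat` and `p_skip` are assumptions made for illustration, not figures from the slides.

```python
def word_model_transitions(word, p_repeat=0.1, p_skip=0.1):
    """Return {state i: {next state j: probability}} for typing `word`.

    State i means "trying to type word[i]"; state len(word) is the end state.
    p_repeat and p_skip are illustrative values, not measured ones.
    """
    n = len(word)
    trans = {}
    for i in range(n):
        moves = {
            i: p_repeat,                      # repeat: stay on the same character
            i + 1: 1.0 - p_repeat - p_skip,   # ideal: advance to the next character
        }
        if i + 2 <= n:
            moves[i + 2] = p_skip             # skip: jump over the next character
        else:
            moves[i + 1] += p_skip            # no character left to skip
        trans[i] = moves
    return trans


transitions = word_model_transitions("are")
for state, moves in transitions.items():
    print(state, moves)
```

For "are", state 0 (typing "a") can stay at 0 (giving "a a r e"), advance to 1 (the ideal path), or jump to 2 (giving "a e").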

Probability Models

• The Keyboard Model (analogous to the acoustic model in speech recognition): for a mental state of trying to type a character c in a word, what is the probability distribution over the actual keys touched?

e.g.

Ideally: you want to type a → you touch a

but you might touch b, q, z, s, w, x, …
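One simple way to write down a keyboard model is a distribution that puts most of the mass on the intended key and shares the rest among nearby keys. In this sketch the neighbor map `NEIGHBORS` is a hypothetical, partial QWERTY layout and `p_correct` is an assumed value; neither comes from the slides.

```python
# Hypothetical, partial QWERTY neighbor map; a real model would cover every key.
NEIGHBORS = {
    "a": "qwsz",
    "s": "awedxz",
    "m": "njk",
    "n": "bhjm",
}


def keyboard_model(intended, p_correct=0.9):
    """Return {key touched: probability} when trying to type `intended`.

    Assumption: the intended key is hit with probability p_correct and the
    remaining mass is shared equally among its listed neighbors.
    """
    nearby = NEIGHBORS.get(intended, "")
    if not nearby:
        return {intended: 1.0}  # no neighbor data: assume a perfect keystroke
    share = (1.0 - p_correct) / len(nearby)
    dist = {key: share for key in nearby}
    dist[intended] = p_correct
    return dist


print(keyboard_model("m"))
```

Under these assumptions, trying to type "m" occasionally produces "n", which is the kind of error behind an → am.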

Probability Models

• The Language Model (i.e. the sentence model): How do we put words together to form sentences?

• The language model is not absolutely necessary for single-word correction, but it can further improve accuracy, and it supports multiple-word correction by considering the context.

Probability Models

• The Language Model (i.e. the sentence model): For example, a bigram language model shows how likely each individual word is to appear in a sentence and how likely one word is to follow another word. Such knowledge can help:

e.g. when you see the two words "I an", they are much more likely to have been generated from "I am" than from "I a".
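A bigram model of this kind can be sketched as two lookup tables, one for individual words and one for word pairs. All probabilities below are made up for illustration, and the names `UNIGRAM`, `BIGRAM`, and `series_probability` are hypothetical.

```python
# Made-up probabilities for illustration only.
UNIGRAM = {"I": 0.4, "am": 0.3, "a": 0.3}
BIGRAM = {
    ("I", "am"): 0.7,   # P(next word is "am" | previous word is "I")
    ("I", "a"): 0.05,
}


def series_probability(words, unigram, bigram, floor=1e-6):
    """Probability of a word series under a bigram model.

    Unseen words or pairs get a small `floor` probability (an assumption;
    real systems use proper smoothing).
    """
    prob = unigram.get(words[0], floor)
    for prev, cur in zip(words, words[1:]):
        prob *= bigram.get((prev, cur), floor)
    return prob


print(series_probability(["I", "am"], UNIGRAM, BIGRAM))
print(series_probability(["I", "a"], UNIGRAM, BIGRAM))
```

With these numbers "I am" scores higher than "I a", so the context favors reading the typed "an" as "am".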

Algorithms

Calculate the probability of generating a character string S of s characters when trying to type a word W of w characters.

• O(sw^2) using dynamic programming

• O(w^s) using a naïve approach
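The dynamic-programming computation can be sketched as a forward pass over the word's states: for each typed character, sum over all previous states instead of enumerating every state sequence. The specific word model in `transition` (repeat, advance, skip) and the keyboard model in `emit` (uniform error over 25 other letters) are assumptions chosen to keep the example short, as are all parameter values.

```python
def emit(intended, typed, p_correct=0.9):
    """Assumed keyboard model: the intended key is hit with p_correct;
    the remaining mass is spread evenly over the 25 other letters."""
    return p_correct if typed == intended else (1.0 - p_correct) / 25


def transition(i, j, n, p_repeat=0.1, p_skip=0.1):
    """Assumed word model: P(next state j | current state i).
    States 0..n-1 mean "trying to type word[i]"; -1 is start, n is end."""
    if j == i and i >= 0:
        return p_repeat                            # repeat the same character
    if j == i + 2 and j <= n:
        return p_skip                              # skip one character
    if j == i + 1:
        p = 1.0 - p_skip - (p_repeat if i >= 0 else 0.0)
        return p + (p_skip if i + 2 > n else 0.0)  # no room left to skip
    return 0.0


def generation_probability(typed, word):
    """P(typed | word) by the forward algorithm: s steps, each w x w work."""
    n = len(word)
    if not typed:
        return transition(-1, n, n)
    # alpha[j]: probability of the characters typed so far, ending in state j
    alpha = [transition(-1, j, n) * emit(word[j], typed[0]) for j in range(n)]
    for ch in typed[1:]:
        alpha = [
            sum(alpha[i] * transition(i, j, n) for i in range(n)) * emit(word[j], ch)
            for j in range(n)
        ]
    # finish by moving from the last emitting state to the end state
    return sum(alpha[i] * transition(i, n, n) for i in range(n))


print(generation_probability("are", "are"))
print(generation_probability("ae", "are"))
```

The inner sum over i for every j is where the w^2 factor comes from; a naïve approach would instead enumerate every sequence of s states.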

Algorithms

Single-word correction:

Determine the most likely word from a vocabulary of v words (with at most w characters per word) for a string S of s characters.

• O(vsw^2) using dynamic programming

• For each word W in the vocabulary, calculate the probability of generating S from W, weighted by the individual word frequency, and find the most likely one.
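The single-word procedure can be sketched end to end on the example vocabulary {is, are, am}. This is a minimal sketch, not the prototype's code: the word frequencies in `WORD_FREQ` are made up, and `likelihood` is a compact forward computation under assumed repeat, skip, and keystroke-error probabilities, included so the example stands alone.

```python
def likelihood(typed, word, p_repeat=0.1, p_skip=0.1, p_correct=0.9):
    """P(typed | word) under assumed word and keyboard models."""
    n = len(word)

    def move(i, j):
        # States 0..n-1 type word[i]; -1 is the start state, n the end state.
        if j == i and i >= 0:
            return p_repeat
        if j == i + 2 and j <= n:
            return p_skip
        if j == i + 1:
            p = 1.0 - p_skip - (p_repeat if i >= 0 else 0.0)
            return p + (p_skip if i + 2 > n else 0.0)
        return 0.0

    def hit(j, ch):
        # Uniform error over the 25 other letters (a simplifying assumption).
        return p_correct if word[j] == ch else (1.0 - p_correct) / 25

    alpha = {-1: 1.0}  # probability mass per state, starting in the start state
    for ch in typed:
        alpha = {
            j: sum(a * move(i, j) for i, a in alpha.items()) * hit(j, ch)
            for j in range(n)
        }
    return sum(a * move(i, n) for i, a in alpha.items())


# Made-up word frequencies for the example vocabulary.
WORD_FREQ = {"is": 0.5, "are": 0.3, "am": 0.2}


def correct_word(typed, word_freq):
    """Pick the word maximizing P(typed | word) * frequency(word)."""
    return max(word_freq, key=lambda w: likelihood(typed, w) * word_freq[w])


for typed in ["iis", "ae", "an"]:
    print(typed, "->", correct_word(typed, WORD_FREQ))
```

The loop over the vocabulary contributes the factor v, and each likelihood call contributes the sw^2 dynamic-programming cost.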

Algorithms

Multiple-word correction:

Determine the most likely word series W1 W2 … Wm of m words from a vocabulary of v words (with at most w characters in each word) for m strings S1 S2 … Sm (with at most s characters in each string).
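One possible way to search for the best word series is a Viterbi-style pass that combines per-string likelihoods with a bigram language model. The slides do not say which search the prototype uses, so this is a swapped-in illustration. The function name `correct_series` is hypothetical, and every number in `LIKELIHOODS`, `UNIGRAM`, and `BIGRAM` is made up; in practice the likelihood table would come from the word and keyboard models.

```python
def correct_series(likelihoods, unigram, bigram, floor=1e-9):
    """Most likely word series given likelihoods[k][word] = P(S_k | word).

    Viterbi-style search: for each position keep, per word, the best-scoring
    series ending in that word. Unseen bigrams get a small assumed `floor`.
    """
    words = list(unigram)
    best = {w: (unigram[w] * likelihoods[0].get(w, 0.0), [w]) for w in words}
    for table in likelihoods[1:]:
        new_best = {}
        for w in words:
            # best predecessor for w under the bigram model
            prev = max(words, key=lambda p: best[p][0] * bigram.get((p, w), floor))
            score = best[prev][0] * bigram.get((prev, w), floor) * table.get(w, 0.0)
            new_best[w] = (score, best[prev][1] + [w])
        best = new_best
    return max(best.values(), key=lambda entry: entry[0])[1]


# Made-up numbers for the typed strings "ii" and "bn".
LIKELIHOODS = [
    {"I": 0.05, "is": 0.04, "are": 1e-5, "am": 1e-5},   # P("ii" | word)
    {"I": 1e-4, "is": 1e-5, "are": 1e-5, "am": 2e-4},   # P("bn" | word)
]
UNIGRAM = {"I": 0.4, "is": 0.3, "are": 0.15, "am": 0.15}
BIGRAM = {("I", "am"): 0.6, ("I", "is"): 0.05, ("is", "am"): 0.01}

print(correct_series(LIKELIHOODS, UNIGRAM, BIGRAM))
```

With these inputs the search returns the series I am for the typed strings ii bn. This sketch does on the order of m times v^2 combination steps once the likelihood table is available.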

Conclusion

• Similar modeling and analysis are applicable to speech recognition

• Mathematical structures provide powerful tools for modeling and analysis

• Design and analysis of algorithms are important to real-world problem solving

• Mathematical structures and algorithms: two key components of modern AI research